Megatron-LM

Continuing research into training Transformer models at scale

Product Details

Megatron-LM is a framework for training large Transformer models, developed by NVIDIA's Applied Deep Learning Research team. It is used in ongoing research into training Transformer language models at scale, and it supports efficient model parallelism (tensor and pipeline) and data parallelism for mixed-precision, multi-node pre-training of Transformer models such as GPT, BERT, and T5.
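Model parallelism splits the computation of a single layer across GPUs. The sketch below shows one form of it, tensor (intra-layer) parallelism for a linear layer. It assumes the weight matrix is split column-wise across simulated "devices", which are just plain Python lists here. The helper names are hypothetical, and this is not Megatron-LM's API. The real framework shards actual tensors across GPUs and exchanges results with collective communication.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split a weight matrix (in_features x out_features) into `parts` column shards."""
    cols = len(w[0])
    assert cols % parts == 0, "out_features must divide evenly across devices"
    size = cols // parts
    return [[row[p * size:(p + 1) * size] for row in w] for p in range(parts)]

def column_parallel_linear(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Each simulated device multiplies the full input by its own column shard.
    Concatenating the partial outputs (an all-gather in a real system) recovers x @ w."""
    shards = split_columns(w, parts)
    partial_outputs = [matmul(x, shard) for shard in shards]  # one result per "device"
    return [sum((out[i] for out in partial_outputs), []) for i in range(len(x))]

x = [[1.0, 2.0], [3.0, 4.0]]   # batch of 2 inputs with 2 features each
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0]]     # 2 x 4 weight matrix
print(column_parallel_linear(x, w, parts=2) == matmul(x, w))  # True
```

Each shard needs only its own slice of the weights, so a layer too large for one GPU can be spread across several. In the Megatron-LM paper, a column-split first MLP layer is paired with a row-split second layer. With that pairing, the forward pass of the MLP block needs only a single all-reduce.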

Target Users

Researchers and practitioners who train large-scale language models, in both research and production settings.

Related Recommendations

Discover more AI tools of similar quality.

Self-Rewarding Language Models

This product is a self-rewarding language model. It acts as its own judge (LLM-as-a-Judge) and is trained on the reward signal it provides for itself. Through iterative DPO (Direct Preference Optimization) training, the model improves its ability to follow instructions and also its ability to give itself high-quality rewards. After three iterations of fine-tuning, the model outperformed many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613. Although preliminary, this work opens the door to models that can keep improving along both axes: instruction following and reward modeling.
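One iteration of the training loop described above can be sketched as follows. The sketch assumes the model has already generated several candidate responses per prompt and scored them itself. The paper uses an additive 5-point LLM-as-a-Judge prompt for this step. The highest- and lowest-scored responses become a preference pair, and the standard DPO loss is computed from the log-probabilities of the policy and of a frozen reference model. The helper names and numbers are hypothetical.

```python
import math
from typing import List, Optional, Tuple

def build_preference_pair(scored: List[Tuple[str, float]]) -> Optional[Tuple[str, str]]:
    """Pick (chosen, rejected) from self-scored candidates: highest vs. lowest score.
    Returns None when all scores tie, since such a pair carries no preference signal."""
    best = max(scored, key=lambda item: item[1])
    worst = min(scored, key=lambda item: item[1])
    if best[1] == worst[1]:
        return None
    return best[0], worst[0]

def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one pair, given the summed log-probabilities of each response
    under the policy being trained and under the frozen reference model."""
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))

candidates = [("response A", 4.0), ("response B", 2.0), ("response C", 5.0)]
print(build_preference_pair(candidates))  # ('response C', 'response B')
print(round(dpo_loss(-10.0, -12.0, -11.0, -11.0), 4))  # 0.5981
```

The loss falls below log 2 once the policy prefers the chosen response more strongly than the reference model does. The next iteration then starts from the updated model.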

Beagle14-7B

Beagle14-7B is a language model created by merging multiple pre-trained models, and it can be used for a variety of natural language processing tasks. It draws on the language knowledge and expressive capabilities of its source models, offering efficient text generation and accurate semantic understanding. This makes it suitable for chatbots, text generation, summarization, and similar tasks. For pricing, see the official website.
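Merging pre-trained models combines their weights directly instead of training a new model. The exact recipe used for Beagle14-7B is not described here. The sketch below shows the simplest variant, a weighted linear average of parameters that share names and shapes. Plain Python lists stand in for real tensors, and the `linear_merge` helper is hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values

def linear_merge(models: List[Weights], coeffs: List[float]) -> Weights:
    """Return sum_i coeffs[i] * models[i], parameter by parameter.
    All models must share the same architecture (same names and shapes)."""
    if len(models) != len(coeffs):
        raise ValueError("need one coefficient per model")
    if abs(sum(coeffs) - 1.0) > 1e-9:
        raise ValueError("coefficients should sum to 1")
    merged: Weights = {}
    for name in models[0]:
        if len({len(m[name]) for m in models}) != 1:
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            sum(c * m[name][i] for c, m in zip(coeffs, models))
            for i in range(len(models[0][name]))
        ]
    return merged

model_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
model_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(linear_merge([model_a, model_b], [0.5, 0.5]))
# {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

More elaborate schemes such as SLERP or TIES combine the weights differently. Like this sketch, they still assume the source models have compatible architectures.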

ahxt/LiteLlama-460M-1T

LiteLlama-460M-1T is an open-source language model with 460M parameters, trained on 1T tokens. It is a scaled-down version of Meta AI's Llama 2 that offers a much smaller model size.

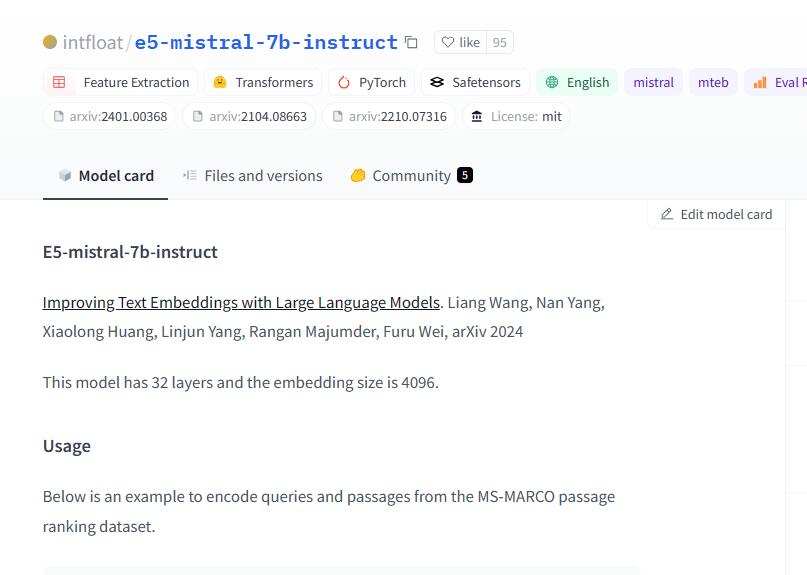

intfloat/e5-mistral-7b-instruct

E5-mistral-7b-instruct is a text embedding model with 32 layers and an embedding size of 4096. It encodes queries and documents into semantic vector representations. Natural-language task descriptions guide the embedding process, so the model can be customized for different tasks. The model was trained on the MS-MARCO passage ranking dataset and can be used for natural language processing tasks such as information retrieval and question answering.
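Task descriptions are applied by prefixing each query with a one-line instruction, while documents are encoded without one. The embedding is then taken from the hidden state of the final token. The sketch below assumes the `Instruct: {task}\nQuery: {query}` template and last-token pooling from the model card's usage example. Tiny hand-written vectors stand in for real 4096-dimensional hidden states. The `format_query`, `last_token_pool`, and `cosine` helpers are illustrative and not part of any library.

```python
import math
from typing import List

def format_query(task_description: str, query: str) -> str:
    """Prefix a query with its task instruction; documents are left unprefixed."""
    return f"Instruct: {task_description}\nQuery: {query}"

def last_token_pool(hidden_states: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Return the hidden state of the last non-padding token (assumes right padding)."""
    last_index = sum(attention_mask) - 1
    return hidden_states[last_index]

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

prompt = format_query("Given a web search query, retrieve relevant passages that answer the query",
                      "how much protein should a female eat")
print(prompt)

# Toy hidden states for 4 positions; the last position is padding.
query_states = [[0.1, 0.0], [0.3, 0.2], [0.6, 0.8], [0.0, 0.0]]
query_vec = last_token_pool(query_states, [1, 1, 1, 0])  # [0.6, 0.8]
doc_vec = [0.8, 0.6]  # a document embedding would be produced without an instruction
print(round(cosine(query_vec, doc_vec), 2))  # 0.96
```

With left-padded batches the last real token is always at the final position, so the pooling index would be computed differently.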

Google T5

Google T5 (Text-to-Text Transfer Transformer) is a unified text-to-text Transformer that achieved state-of-the-art results on many NLP tasks by pre-training on a large text corpus. Its repository provides code for loading, preprocessing, mixing, and evaluating datasets, and it can be used to fine-tune the released pre-trained models.
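"Text-to-text" means every task, including classification and regression, is cast as mapping an input string to an output string. The sketch below illustrates that framing. It assumes task prefixes in the style of the T5 paper, such as `translate English to German:` and `summarize:`. The `to_text_to_text` helper, its task keys, and the example records are hypothetical.

```python
from typing import Any, Dict, Tuple

def to_text_to_text(task: str, example: Dict[str, Any]) -> Tuple[str, str]:
    """Cast a task-specific example into an (input_text, target_text) pair."""
    if task == "translate_en_de":
        return f"translate English to German: {example['en']}", str(example["de"])
    if task == "summarize":
        return f"summarize: {example['article']}", str(example["summary"])
    if task == "cola":
        # Classification: the label becomes a word the model must generate.
        label = "acceptable" if example["label"] == 1 else "unacceptable"
        return f"cola sentence: {example['sentence']}", label
    if task == "stsb":
        # Regression: round the score to the nearest 0.2 and emit it as text.
        score = round(float(example["score"]) * 5) / 5
        return (f"stsb sentence1: {example['sentence1']} sentence2: {example['sentence2']}",
                f"{score:.1f}")
    raise ValueError(f"unknown task: {task}")

print(to_text_to_text("translate_en_de", {"en": "That is good.", "de": "Das ist gut."}))
# ('translate English to German: That is good.', 'Das ist gut.')
print(to_text_to_text("stsb", {"sentence1": "The rhino grazed on the grass.",
                               "sentence2": "A rhino is grazing in a field.",
                               "score": 3.77}))
```

Because inputs and targets are always strings, one model, one loss, and one decoding procedure can serve every task. The prefix alone tells the model which task to perform.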

Jina Embeddings V2 Base

Jina Embeddings V2 Base is an English text embedding model that supports sequence lengths of up to 8192 tokens. It is based on a BERT architecture (JinaBERT) that uses a symmetric bidirectional variant of ALiBi to support longer sequences. The model was pre-trained on the C4 dataset and further trained on Jina AI's collection of more than 400 million sentence pairs and negative samples. It suits many use cases that involve long documents, including long-document retrieval, semantic textual similarity, text reranking, recommendation, and RAG and LLM-based generative search. The model has 137 million parameters and is recommended for inference on a single GPU.
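ALiBi replaces position embeddings with a fixed penalty on attention scores. The penalty grows with the distance between tokens, which lets a model handle sequences longer than those seen in training. In a bidirectional encoder, the symmetric variant penalizes distance to the left and to the right equally. The sketch below assumes the geometric per-head slopes from the original ALiBi paper (for 8 heads: 1/2, 1/4, ..., 1/256) and a bias of -m·|i - j|. JinaBERT's exact implementation may differ.

```python
from typing import List

def alibi_slopes(num_heads: int) -> List[float]:
    """Geometric slopes 2^(-8/n), 2^(-16/n), ... (assumes num_heads is a power of 2)."""
    start = 2.0 ** (-8.0 / num_heads)
    return [start ** (h + 1) for h in range(num_heads)]

def symmetric_alibi_bias(seq_len: int, slope: float) -> List[List[float]]:
    """Bias added to one head's attention logits: -slope * |i - j|.
    Unlike causal ALiBi, keys on both sides of the query are penalized by distance."""
    return [[-abs(i - j) * slope for j in range(seq_len)] for i in range(seq_len)]

slopes = alibi_slopes(8)
print(slopes[:3])  # [0.5, 0.25, 0.125]
for row in symmetric_alibi_bias(4, slopes[0]):
    print(row)
# [0.0, -0.5, -1.0, -1.5]
# [-0.5, 0.0, -0.5, -1.0]
# [-1.0, -0.5, 0.0, -0.5]
# [-1.5, -1.0, -0.5, 0.0]
```

The bias matrix depends only on token distance and never has to be learned. It can therefore be built for any sequence length at inference time.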

Lemur

Lemur is an open language model designed to serve language agents, with both natural language and coding capabilities optimized. Balancing the two skill sets lets agents follow instructions, reason about tasks, and take practical actions. Through two-stage training, Lemur achieves state-of-the-art performance on a range of language and coding benchmarks. It surpasses other available open-source models and narrows the gap in agent capabilities between open-source and commercial models.

Kosmos-2

Kosmos-2 is a multimodal large language model that can associate natural language with visual input such as images. It can be used for tasks such as phrase grounding, referring expression comprehension, referring expression generation, image captioning, and visual question answering. Kosmos-2 uses the GRIT dataset, a large collection of grounded image-text pairs that can be used for model training and evaluation. Its main advantage is that it links text to specific regions of an image, which improves performance on these vision-language tasks.
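Kosmos-2 links phrases to image regions by writing bounding boxes as discrete location tokens inside the text. The sketch below assumes the scheme described in the Kosmos-2 paper. The image is divided into a 32 × 32 grid. A box is encoded by the grid cells containing its top-left and bottom-right corners, and the phrase and box are wrapped in markup tokens. The token spellings and helper names here are illustrative, not the model's exact vocabulary.

```python
from typing import Tuple

GRID = 32  # bins per image side, giving GRID * GRID location tokens

def patch_index(x: float, y: float, grid: int = GRID) -> int:
    """Map a normalized point (x, y) in [0, 1] to a row-major grid-cell index."""
    col = min(int(x * grid), grid - 1)  # clamp so x == 1.0 stays in the last column
    row = min(int(y * grid), grid - 1)
    return row * grid + col

def grounded_phrase(phrase: str, box: Tuple[float, float, float, float]) -> str:
    """Encode a phrase plus its normalized box (x0, y0, x1, y1) as text with location tokens."""
    x0, y0, x1, y1 = box
    top_left = patch_index(x0, y0)
    bottom_right = patch_index(x1, y1)
    return f"<p>{phrase}</p><box><loc_{top_left}><loc_{bottom_right}></box>"

print(grounded_phrase("a snowman", (0.40, 0.05, 0.70, 0.90)))
# <p>a snowman</p><box><loc_44><loc_918></box>
```

Because boxes become ordinary tokens, the same language-modeling objective covers both generating grounded text and reading boxes given as input.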

StreamingLLM

StreamingLLM is an efficient framework that lets language models handle inputs of effectively infinite length without sacrificing efficiency or performance. It keeps the key-value states of the first few tokens (attention sinks) together with a window of recent tokens and discards the tokens in between. The model can therefore keep generating coherent text from recent tokens without resetting its cache. Its responses draw on the recent conversation rather than the full history, and the cache never needs to be flushed.
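The cache policy described above can be sketched in a few lines. The sketch assumes 4 attention-sink tokens, which the paper reports as sufficient, plus a fixed window of recent tokens. Token ids stand in for cached key/value pairs, and the `SinkCache` class is hypothetical.

```python
from collections import deque
from typing import Deque, List

class SinkCache:
    """Keep the first `num_sinks` tokens permanently plus a rolling window of
    recent tokens; everything in between is evicted."""

    def __init__(self, num_sinks: int = 4, window: int = 8) -> None:
        self.num_sinks = num_sinks
        self.sinks: List[int] = []
        self.recent: Deque[int] = deque(maxlen=window)  # oldest entry drops automatically

    def append(self, token_id: int) -> None:
        if len(self.sinks) < self.num_sinks:
            self.sinks.append(token_id)
        else:
            self.recent.append(token_id)

    def contents(self) -> List[int]:
        return self.sinks + list(self.recent)

    def positions(self) -> List[int]:
        # Positions are indices within the cache, not within the original stream.
        return list(range(len(self.contents())))

cache = SinkCache(num_sinks=4, window=3)
for t in range(10):  # stream tokens 0..9
    cache.append(t)
print(cache.contents())   # [0, 1, 2, 3, 7, 8, 9]
print(cache.positions())  # [0, 1, 2, 3, 4, 5, 6]
```

Memory stays bounded at `num_sinks + window` entries however long the stream runs. Tokens 4 to 6 are gone, so the model can no longer attend to them.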

RoleLLM

RoleLLM is a framework for building, evaluating, and enhancing the role-playing abilities of large language models. It consists of four stages: (1) role profile construction; (2) context-based instruction generation (Context-Instruct); (3) role prompting with GPT (RoleGPT); and (4) role-conditioned instruction tuning (RoCIT). Through Context-Instruct and RoleGPT, we create RoleBench, a systematic, fine-grained character-level benchmark dataset with 168,093 samples. Applying RoCIT on RoleBench produces RoleLLaMA (English) and RoleGLM (Chinese). Both significantly improve role-playing abilities and even achieve results comparable to RoleGPT, which uses GPT-4.

LongLLaMA

LongLLaMA is a large language model capable of processing long texts. It is built on OpenLLaMA, fine-tuned with the Focused Transformer (FoT) method, and can handle contexts of 256k tokens and beyond. We provide a smaller 3B base model (without instruction tuning) and inference code supporting longer contexts on Hugging Face. Our model weights can serve as a drop-in replacement for LLaMA in existing implementations (for short contexts of up to 2048 tokens). We also provide evaluation results and comparisons with the original OpenLLaMA model.
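The Focused Transformer paper describes memory attention layers that can also attend to an external memory of (key, value) pairs from earlier context, retrieving the most relevant keys by k-nearest-neighbor search. The sketch below shows that idea for a single query. It assumes scaled dot-product scoring, exact top-k retrieval in place of an approximate index, and toy 2-dimensional vectors. `memory_attention` is a hypothetical helper, not LongLLaMA's code.

```python
import math
from typing import List, Tuple

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def memory_attention(query: Vector,
                     local: List[Tuple[Vector, Vector]],
                     memory: List[Tuple[Vector, Vector]],
                     k: int = 2) -> Vector:
    """Attend over the local (key, value) pairs plus the k memory pairs whose keys
    score highest against the query (exact top-k standing in for kNN search)."""
    retrieved = sorted(memory, key=lambda kv: dot(query, kv[0]), reverse=True)[:k]
    pairs = local + retrieved
    weights = softmax([dot(query, key) / math.sqrt(len(query)) for key, _ in pairs])
    dim = len(pairs[0][1])
    return [sum(w * value[d] for w, (_, value) in zip(weights, pairs)) for d in range(dim)]

query = [1.0, 0.0]
local = [([0.0, 1.0], [0.0, 1.0])]
memory = [([1.0, 0.0], [1.0, 0.0]),    # relevant to the query
          ([-1.0, 0.0], [5.0, 5.0]),   # irrelevant, never retrieved with k=2
          ([0.9, 0.1], [2.0, 0.0])]    # relevant to the query
print(memory_attention(query, local, memory, k=2))
```

Only the k retrieved entries enter the softmax, so the cost of each memory lookup does not grow with the full length of the stored context once an approximate nearest-neighbor index replaces the exact sort.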