Clone-Voice

A voice cloning tool with a web interface

Product Details

Clone-Voice is a voice cloning tool with a web interface. Given a sample of any human voice, it can synthesize a piece of text into speech in that voice, or convert an existing recording into that voice. It supports 16 languages, including Chinese, English, Japanese, Korean, French, German, and Italian, and can record audio from the microphone directly in the browser. Its two main features are text-to-speech and voice-to-voice conversion. It is easy to use, does not require an NVIDIA GPU, and is currently free to use.

Main Features

Target Users

Clone-Voice is suitable for users who need to synthesize speech or convert audio into a different timbre, in scenarios such as speech synthesis, audio production, and personalized voices.

Examples

Synthesize a piece of text into a celebrity's voice

Convert a sound into the sound of an animal

Synthesize sounds in multiple languages using different timbres

Related Recommendations

Discover more similar quality AI tools

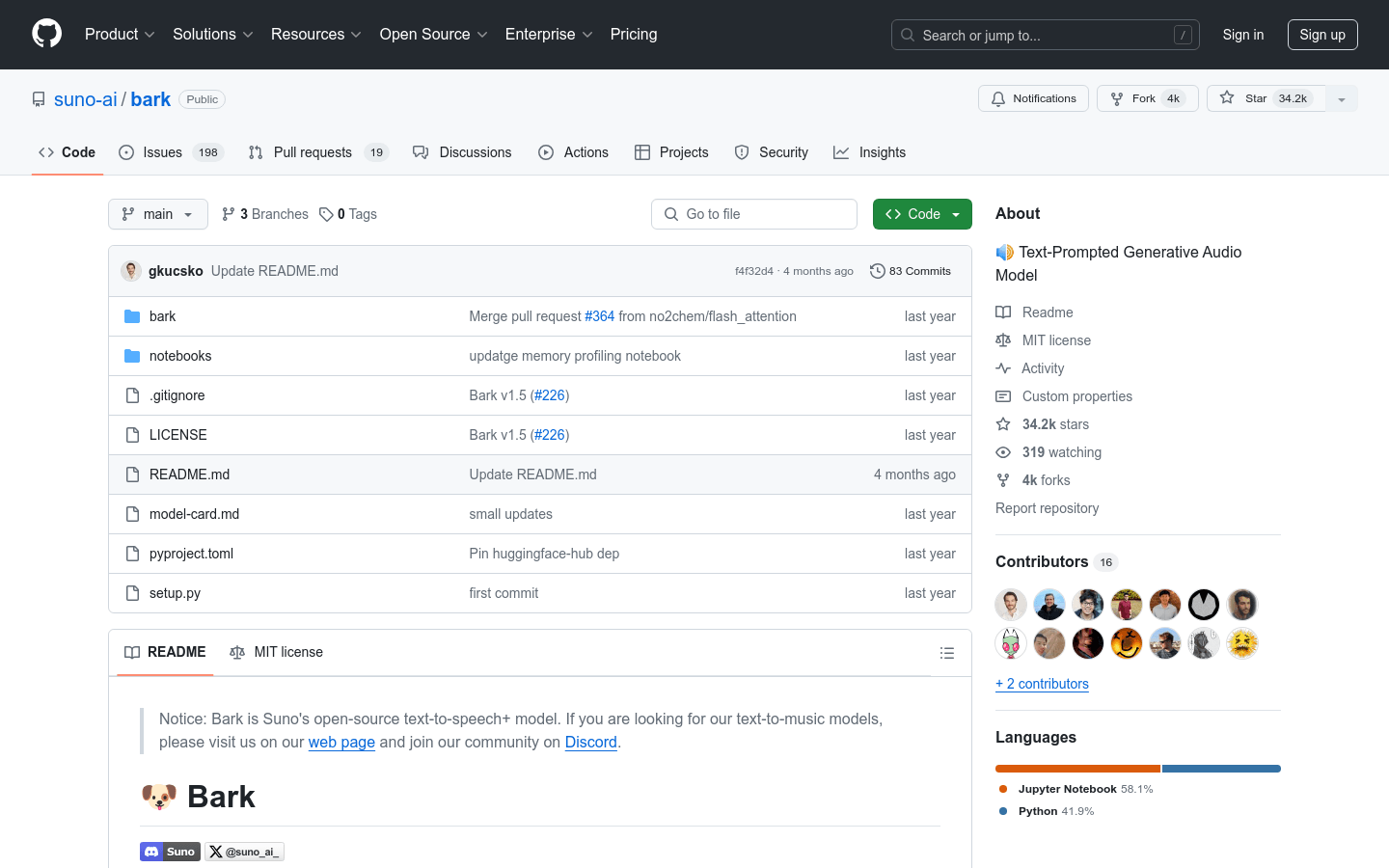

Bark

Bark is a Transformer-based text-to-audio model developed by Suno that is capable of generating realistic multilingual speech as well as other types of audio such as music, background noise, and simple sound effects. It also supports the generation of non-verbal communication such as laughter, sighs and cries. Bark supports the research community, providing pre-trained model checkpoints suitable for inference and available for commercial use.

Bi-Cut Studio

Bi-Cut Studio is a digital avatar tool that supports image-driven avatars and timbre customization. Users can create their own digital avatars for dubbing, spoken narration, and similar scenarios. The product addresses the need for personalization in audio production and is positioned as a convenient tool for creating digital avatars.

StreamVoice

StreamVoice is a language model-based zero-shot voice conversion model that converts speech in real time without needing the complete source utterance. It employs a fully causal, context-aware language model combined with a temporal-independent acoustic predictor that processes semantic and acoustic features alternately at each time step, which removes the dependence on complete source speech. To mitigate the performance degradation that incomplete context can cause in streaming processing, StreamVoice strengthens the context awareness of the language model through two strategies: 1) teacher-guided context foresight, in which a teacher model summarizes the current and future semantic context during training and guides the model to predict the missing context; 2) a semantic masking strategy, which encourages acoustic prediction from preceding corrupted semantic and acoustic input to improve context learning. Notably, StreamVoice is described as the first language model-based streaming zero-shot voice conversion model that works without any future look-ahead. Experimental results show that StreamVoice can convert speech in a streaming fashion while maintaining zero-shot performance comparable to non-streaming voice conversion systems.

WhisperSpeech

WhisperSpeech is a fully open source text-to-speech model trained by Collabora and LAION on the JUWELS supercomputer. It supports multiple languages and can be accessed in several ways, including Node.js, Python, Elixir, HTTP, Cog, and Docker. Its advantages are efficient speech synthesis and flexible deployment. In terms of pricing, WhisperSpeech is completely free. It is positioned to provide developers and researchers with a powerful, customizable text-to-speech solution.

GPT-SoVITS

GPT-SoVITS-WebUI is a zero-shot voice conversion and text-to-speech WebUI. Its features include zero-shot TTS, few-shot TTS, cross-language support, and WebUI tools. The product supports English, Japanese, and Chinese, and provides integrated tools, including vocal and accompaniment separation, automatic training set segmentation, Chinese ASR, and text annotation, to help beginners create training datasets and GPT/SoVITS models. Users can try instant text-to-speech conversion by supplying a 5-second voice sample, and can fine-tune the model with as little as 1 minute of training data to improve voice similarity and fidelity. The project documentation covers environment preparation, Python and PyTorch versions, quick installation, manual installation, pre-trained models, dataset format, to-do items, and acknowledgments.

Sound Reproduction

Sound Reproduction is an efficient, lightweight voice customization solution. By making a recording of only a few seconds in an open environment, users can quickly obtain their own AI-customized voice. The core product advantages are described as very low cost, very fast reproduction, high fidelity to the original voice, and leading technology. Applicable scenarios include video dubbing, voice assistants, in-car assistants, online education, and audiobook reading.

HitPaw Voice Changer

HitPaw Voice Changer is an AI-assisted tool that helps you change your voice into another voice in a wide range of scenarios. It is positioned as a voice changer for real-time use.

Blerp Sound Memes. AI TTS Voices Emotes GIFS

Blerp is a product for AI TTS voices, sound memes, emotes, GIFs, and sound alerts. It provides AI TTS alerts, emotes, and sound packs for chat and live streaming communities. Viewers can play sounds and AI TTS voices on any supported streaming platform and attach emotes and GIFs to them. Viewers can also collect channel points from their favorite streamers and play their own WalkOn Sounds. Streamers can set their own sounds and use WalkOn Subscriber sounds on any supported extension platform.

RealtimeTTS

RealtimeTTS is an easy-to-use, low-latency text-to-speech library for real-time applications. It can convert text streams into immediate audio output. Key features include real-time streaming synthesis and playback, advanced sentence boundary detection, modular engine design, and more. The library supports multiple text-to-speech engines and is suitable for voice assistants and applications requiring instant audio feedback. Please refer to the official website for detailed pricing and positioning information.
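
To illustrate why sentence boundary detection matters when converting a text stream into audio, here is a minimal Python sketch. It is not RealtimeTTS's actual implementation or API: the function name `stream_sentences` and the simple rule "a terminator followed by whitespace ends a sentence" are assumptions made for illustration. The idea is that text arrives in arbitrary chunks, and a synthesizer should receive each sentence as soon as it is complete rather than waiting for the whole text.

```python
from typing import Iterable, Iterator

# Hypothetical, simplified rule: these characters end a sentence
# when they are followed by whitespace.
SENTENCE_END = ".!?"


def stream_sentences(chunks: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences from an incoming stream of text chunks."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        while True:
            # Look for the earliest terminator that is followed by whitespace.
            # The last character is skipped because we cannot yet know
            # what follows it (e.g. "3." may continue as "3.14").
            cut = next(
                (
                    i
                    for i, ch in enumerate(buffer[:-1])
                    if ch in SENTENCE_END and buffer[i + 1].isspace()
                ),
                None,
            )
            if cut is None:
                break
            sentence = buffer[: cut + 1].strip()
            buffer = buffer[cut + 1 :].lstrip()
            if sentence:
                yield sentence
    # Flush whatever remains once the stream ends.
    tail = buffer.strip()
    if tail:
        yield tail


if __name__ == "__main__":
    incoming = ["Hello wor", "ld. How are", " you? Fine"]
    for sentence in stream_sentences(incoming):
        # A real pipeline would hand each sentence to a TTS engine here.
        print(sentence)
```

In a real-time application, each yielded sentence would be passed to a speech engine immediately, so playback of the first sentence can begin while later text is still arriving.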

StyleTTS 2

StyleTTS 2 is a text-to-speech (TTS) model that uses style diffusion and adversarial training with large speech language models (SLMs) to achieve human-level TTS synthesis. It models style as a latent random variable through a diffusion model, generating the style best suited to the text without requiring reference speech. In addition, it uses large pre-trained SLMs (such as WavLM) as discriminators and combines them with a novel differentiable duration modeling approach for end-to-end training, which improves the naturalness of the speech. StyleTTS 2 surpassed human recordings on the single-speaker LJSpeech dataset and matched them on the multi-speaker VCTK dataset, as judged by native English speakers. Furthermore, when trained on the LibriTTS dataset, the model outperforms previous publicly available models for zero-shot speaker adaptation. By demonstrating the potential of style diffusion and adversarial training with large SLMs, this work achieves human-level TTS synthesis on both single-speaker and multi-speaker datasets.

SALMONN

SALMONN is a large language model (LLM) developed by the Department of Electronic Engineering of Tsinghua University and ByteDance that supports speech, audio event, and music input. Unlike models that only support speech or audio event input, SALMONN can perceive and understand a variety of audio inputs, enabling emergent capabilities such as multilingual speech recognition and translation, and audio-speech co-reasoning. This can be seen as giving the LLM "ears" and cognitive hearing abilities, making SALMONN a step towards hearing-enabled artificial general intelligence.

Voices AI

Voices AI is a voice conversion app for iOS that generates voices, clones custom voices, and improves voice quality with AI audio enhancement. It offers an extensive library of voices, from well-known political figures to Hollywood celebrities, to bring your text to life. For content creators, it can produce voiceovers for videos, TV clips, commercials, and more. It can also be used to create birthday wishes for friends, or simply to hear famous voices speak your words. It features high-quality audio, an intuitive interface, and privacy protection.

Spotify Voice Translation

Spotify recently launched a voice translation feature that can translate podcast content into other languages while retaining the original host's voice style. The tool, developed by Spotify, builds on OpenAI's latest speech generation technology to match the intonation and tone of the original host, providing a more realistic and natural translation experience. As a result, podcasts that were originally available only in English can be offered to listeners worldwide in other languages, such as Spanish, French, and German.

voice-swap.ai

Voice-Swap is an audio conversion tool that uses artificial intelligence to transform your voice into the style of top singers, suitable for making demos or finding the right voice for your tracks. It offers a free trial and subscription plans, and supports remote collaboration and demo production.

Altered Studio

Altered Studio is a tool that transforms your voice into one of a set of carefully curated AI voices to create compelling, professional voice-driven performances. It provides professional sound editing tools and flexibly customizable AI voices, suitable for media projects such as voice acting, film and television production, and advertising. With Altered Studio, you can transform your voice into a different style, gender, age, or language.

FineShare FineVoice

FineShare FineVoice is an AI digital voice solution that features a powerful and easy-to-use real-time voice changer, high-quality voice recorder, fast and accurate automatic transcription, and a lifelike AI voice generator. It's based on AI voice processing algorithms that can easily optimize and customize your voice.