Meshy-2

A huge leap forward from Text to 3D, optimized web application, welcome to share and learn.

Product Details

Meshy-2 is the latest addition to our 3D generative AI product family, coming three months after the release of Meshy-1. This version is a huge leap forward in the field of Text to 3D, providing better structured meshes and rich geometric details for 3D objects. In Meshy-2, Text to 3D offers four style options: Realistic, Cartoon, Low Polygon and Voxel to satisfy a variety of artistic preferences and inspire new creative directions. We've increased the speed of generation without compromising quality, with preview time around 25 seconds and fine results within 5 minutes. Additionally, Meshy-2 introduces a user-friendly mesh editor with polygon count control and a quad mesh conversion system to provide more control and flexibility in 3D projects. The Text to Texture feature has been optimized to render textures more clearly and twice as fast. Enhanced features of Image to 3D produce higher quality results in 2 minutes. We are shifting our focus from Discord to web applications, encouraging users to share AI-generated 3D art in the web application community.

Main Features

Target Users

Emerging 3D artists, designers, and developers can try Meshy-2 for free, and use promo code MESHY2GO to get a 20% discount on Pro or Max versions. Users are welcome to join the web application community to share and explore AI-generated 3D art.

Examples

The newly opened 3D project uses Meshy-2 to quickly generate better-structured meshes and rich geometric details.

Designers use Meshy-2’s four Text to 3D style options to create realistic, cartoon, low-poly and Voxel 3D models.

Developers upload images and improve the resulting 3D objects through Meshy-2's Image to 3D feature.

Quick Access

Visit Website →Categories

Related Recommendations

Discover more similar quality AI tools

AI Hug Video

AI Hug Video Generator is an online platform that uses advanced machine learning technology to transform static photos into dynamic, lifelike hug videos. Users can create personalized, emotion-filled videos based on their precious photos. The technology creates photorealistic digital hugs by analyzing real human interactions, including subtle gestures and emotions. The platform provides a user-friendly interface, making it easy for both technology enthusiasts and video production novices to create AI hug videos. Additionally, the resulting video is high-definition and suitable for sharing on any platform, ensuring great results on every screen.

MIMO

MIMO is a universal video synthesis model capable of simulating anyone interacting with objects in complex movements. It is capable of synthesizing character videos with controllable attributes (such as characters, actions, and scenes) based on simple user-provided inputs (such as reference images, pose sequences, scene videos, or images). MIMO achieves this by encoding 2D video into a compact spatial code and decomposing it into three spatial components (main character, underlying scene, and floating occlusion). This approach allows flexible user control, spatial motion expression, and 3D perception synthesis, suitable for interactive real-world scenarios.

LVCD

LVCD is a reference-based line drawing video coloring technology that uses a large-scale pre-trained video diffusion model to generate colorized animated videos. This technology uses Sketch-guided ControlNet and Reference Attention to achieve color processing of animation videos with fast and large movements while ensuring temporal coherence. The main advantages of LVCD include temporal coherence in generating colorized animated videos, the ability to handle large motions, and high-quality output results.

ComfyUI-LumaAI-API

ComfyUI-LumaAI-API is a plug-in designed for ComfyUI, which allows users to use the Luma AI API directly in ComfyUI. The Luma AI API is based on the Dream Machine video generation model, developed by Luma. This plug-in greatly enriches the possibilities of video generation by providing a variety of nodes, such as text to video, image to video, video preview, etc., and provides convenient tools for video creators and developers.

Tongyi Wanxiang AI video generation

Tongyi Wanxiang AI Creative Painting is a product that uses artificial intelligence technology to convert users' text descriptions or images into video content. Through advanced AI algorithms, it can understand the user's creative intentions and automatically generate artistic videos. This product can not only improve the efficiency of content creation, but also stimulate users' creativity, and is suitable for many fields such as advertising, education, and entertainment.

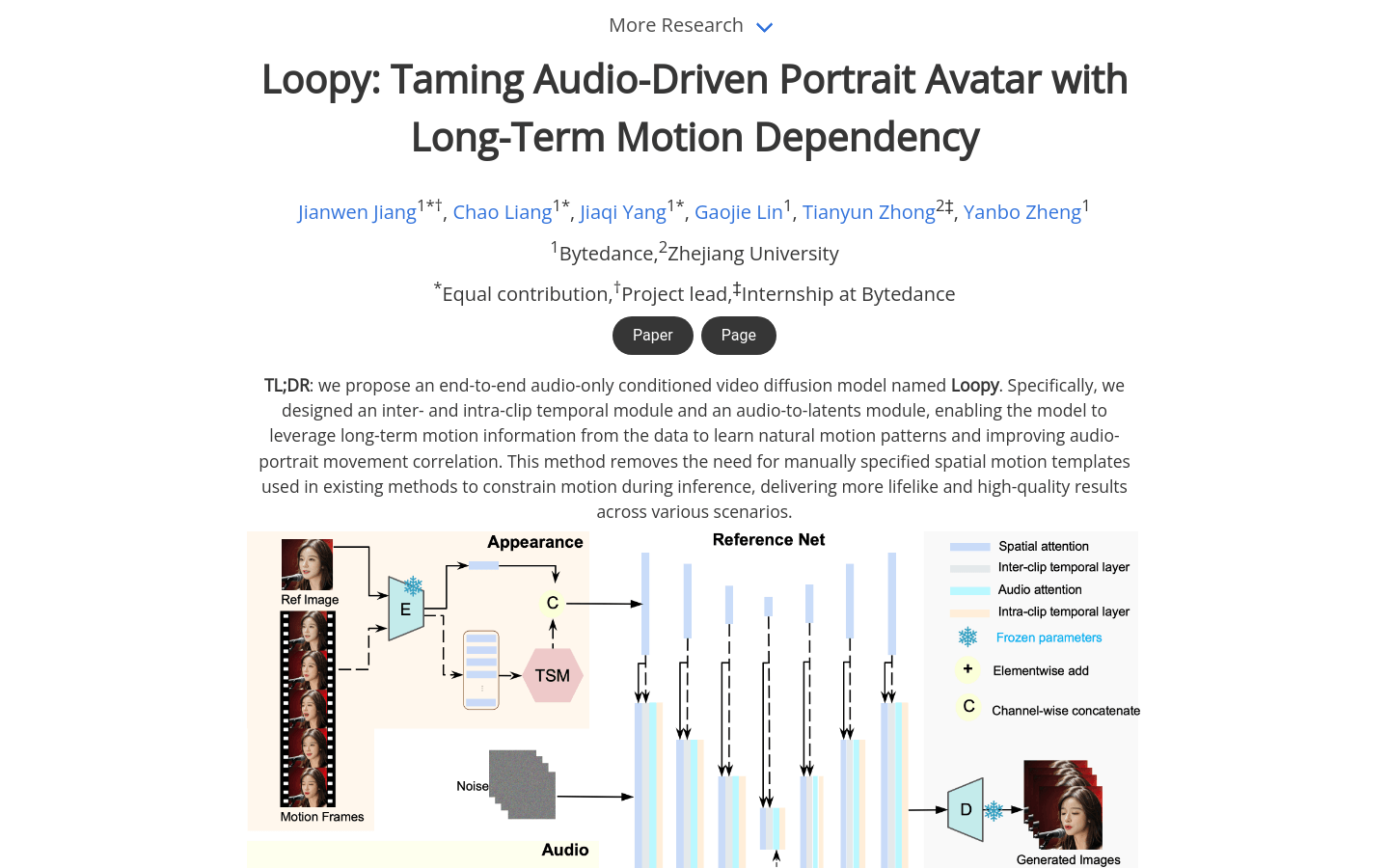

Loopy model

Loopy is an end-to-end audio-driven video diffusion model specifically designed with a temporal module across clips and within clips and an audio-to-latent representation module, enabling the model to leverage long-term motion information in the data to learn natural motion patterns and improve the correlation of audio with portrait motion. This approach eliminates the need for manually specified spatial motion templates in existing methods, enabling more realistic, high-quality results in a variety of scenarios.

CyberHost

CyberHost is an end-to-end audio-driven human animation framework that achieves hand integrity, identity consistency, and natural motion generation through a regional codebook attention mechanism. This model utilizes the dual U-Net architecture as the basic structure and uses a motion frame strategy for temporal continuation to establish a baseline for audio-driven human animation. CyberHost improves the quality of synthesis results through a series of human-led training strategies, including body motion maps, hand articulation scores, pose-aligned reference features, and local augmentation supervision. CyberHost is the first audio-driven human body diffusion model capable of zero-shot video generation at the human body scale.

EmoTalk3D

EmoTalk3D is a research project focused on 3D virtual head synthesis. It solves the problems of perspective consistency and insufficient emotional expression in traditional 3D head synthesis by collecting multi-view videos, emotional annotations and 3D geometric data per frame. This project proposes a novel approach to achieve emotion-controlled 3D human head synthesis with enhanced lip synchronization and rendering quality by training on the EmoTalk3D dataset. The EmoTalk 3D model is capable of generating 3D animations with a wide viewing angle and high rendering quality, while capturing dynamic facial details such as wrinkles and subtle expressions.

Clapper.app

Clapper.app is an open source AI story visualization tool that can interpret and render scripts into storyboards, videos, sounds and music. Currently, the tool is still in the early stages of development and is not suitable for ordinary users as some features are not yet complete and there are no tutorials.

SV4D

Stable Video 4D (SV4D) is a generative model based on Stable Video Diffusion (SVD) and Stable Video 3D (SV3D) that accepts a single view video and generates multiple new view videos (4D image matrices) of the object. The model is trained to generate 40 frames (5 video frames x 8 camera views) at 576x576 resolution, given 5 reference frames of the same size. Generate an orbital video by running SV3D, then use the orbital video as a reference view for SV4D and the input video as a reference frame for 4D sampling. The model also generates longer new perspective videos by using the first generated frame as an anchor and then densely sampling (interpolating) the remaining frames.

FasterLivePortrait

FasterLivePortrait is a real-time portrait animation project based on deep learning. It achieves real-time running speeds of 30+ FPS on the RTX 3090 GPU by using TensorRT, including pre- and post-processing, not just model inference speed. The project also implemented the conversion of LivePortrait model to Onnx model, and used onnxruntime-gpu on RTX 3090 to achieve an inference speed of about 70ms/frame, supporting cross-platform deployment. In addition, the project also supports the native Gradio app, which increases the speed several times and supports simultaneous inference of multiple faces. The code structure has been restructured and no longer relies on PyTorch. All models use onnx or tensorrt for inference.

RunwayML App

RunwayML is a leading next-generation creative suite that provides a rich set of tools that enable users to turn any idea into reality. The app, through its unique text-to-video generation technology, allows users to generate videos on their phone using only text descriptions. Its main advantages include: 1. Text-to-video generation: Users only need to enter a text description to generate a video. 2. Real-time updates: New features and updates are launched regularly to ensure that users can always use the latest AI video and picture tools. 3. Seamless asset transfer: Users can seamlessly transfer assets between mobile phones and computers. 4. Multiple subscription options: Standard, Professional and monthly 1000 credits generated subscription options are available.

TCAN

TCAN is a novel portrait animation framework based on the diffusion model that maintains temporal consistency and generalizes well to unseen domains. The framework uses unique modules such as appearance-pose adaptation layer (APPA layer), temporal control network and attitude-driven temperature map to ensure that the generated video maintains the appearance of the source image and follows the pose of the driving video, while maintaining background consistency.

LivePortrait

LivePortrait is a generative portrait animation model based on an implicit keypoint framework that synthesizes photorealistic videos by using a single source image as a reference for appearance and deriving actions (such as facial expressions and head poses) from driving video, audio, text, or generation. The model not only achieves an effective balance between computational efficiency and controllability, but also significantly improves the generation quality and generalization ability by expanding the training data, adopting a hybrid image-video training strategy, upgrading the network architecture, and designing better motion conversion and optimization goals.

MimicMotion

MimicMotion is a high-quality human action video generation model jointly developed by Tencent and Shanghai Jiao Tong University. This model achieves controllability of the video generation process through confidence-aware posture guidance, improves the temporal smoothness of the video, and reduces image distortion. It adopts an advanced image-to-video diffusion model and combines spatiotemporal U-Net and PoseNet to generate high-quality videos of arbitrary length based on pose sequence conditions. MimicMotion significantly outperforms previous methods in several aspects, including hand generation quality, accurate adherence to reference poses, etc.

Gen-3 Alpha

Gen-3 Alpha is the first in a series of models trained by Runway on new infrastructure built for multi-modal training at scale. It offers significant improvements over Gen-2 in fidelity, consistency, and motion, and is a step toward building a universal world model. The model's ability to generate expressive characters with rich movements, gestures and emotions offers new opportunities for storytelling.