Product Details

HueMankey is a user avatar API for developers. It assigns a unique avatar to each user, supports batch requests, and stores the avatars directly on the platform. It serves lightweight image data, scales dynamically with the size of the user base, and offers flexible subscription plans.
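The idea of giving every user a stable avatar, and of handling many users in one batch call, can be illustrated with a minimal sketch. This is not HueMankey's actual API, which the description above does not document. The function names `avatar_for` and `avatars_for_batch`, the hue-plus-grid descriptor, and the hashing scheme are all assumptions made for illustration.

```python
import hashlib


def avatar_for(user_id: str) -> dict:
    """Derive a stable avatar descriptor from a user ID.

    Hypothetical scheme (an assumption, not HueMankey's method):
    a SHA-256 digest of the ID picks a hue and a mirrored 5x5 grid.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()

    # First two bytes choose a hue in degrees, 0..359.
    hue = int.from_bytes(digest[:2], "big") % 360

    # Next two bytes supply the bits for the left three columns;
    # each row is mirrored so the pattern is horizontally symmetric.
    bits = int.from_bytes(digest[2:4], "big")
    grid = []
    for row in range(5):
        left = [(bits >> (row * 3 + col)) & 1 for col in range(3)]
        grid.append(left + left[1::-1])

    return {"user_id": user_id, "hue": hue, "grid": grid}


def avatars_for_batch(user_ids):
    """Batch variant: one descriptor per user ID, keyed by ID."""
    return {uid: avatar_for(uid) for uid in user_ids}


if __name__ == "__main__":
    for uid, avatar in avatars_for_batch(["alice", "bob"]).items():
        print(uid, avatar["hue"])
        for row in avatar["grid"]:
            print("".join("#" if cell else "." for cell in row))
```

Because the descriptor depends only on the user ID, the same user always gets the same avatar without any stored state. A hash of this size does not guarantee uniqueness across a large user base, so a production service would need a larger pattern space or a registry of assigned avatars.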

Main Features

Target Users

HueMankey suits applications such as social media, online games, and e-commerce platforms that want to give each user a personalized avatar.

Examples

Social media platforms assign a unique avatar to each user

Each player in an online game has their own unique avatar

E-commerce platforms provide each user with a personalized avatar

Related Recommendations

Discover more high-quality AI tools like this one

FastVLM

FastVLM is an efficient visual encoding model designed specifically for visual language models. It uses the FastViTHD hybrid visual encoder to reduce both the encoding time of high-resolution images and the number of output tokens, which improves speed while maintaining accuracy. FastVLM aims to give developers strong visual language processing capabilities for a range of application scenarios, especially mobile devices that require fast response.

InternVL3

InternVL3 is a multimodal large language model (MLLM) open-sourced by OpenGVLab, with strong multimodal perception and reasoning capabilities. The model series comes in 7 sizes, from 1B to 78B parameters, and can process text, images, video and other inputs together. InternVL3 performs well in fields such as industrial image analysis and 3D visual perception, and its overall text performance is reported to exceed that of the Qwen2.5 series. The open-source release supports multimodal application development and helps bring multimodal technology to more fields.

EasyControl

EasyControl is a framework that provides efficient and flexible control for Diffusion Transformers (DiT). It aims to solve problems in the current DiT ecosystem such as efficiency bottlenecks and insufficient model adaptability. Its main advantages are support for combining multiple conditions, greater generation flexibility, and improved inference efficiency. It builds on recent research results and is suited to areas such as image generation and style transfer.

GaussianCity

GaussianCity is a framework for efficiently generating unbounded 3D cities, based on 3D Gaussian splatting. It addresses the memory and compute bottlenecks that traditional methods face when generating large-scale urban scenes by using a compact 3D scene representation and a spatially aware Gaussian attribute decoder. Its main advantage is the ability to generate large-scale 3D cities quickly in a single forward pass, significantly outperforming existing methods. It was developed by the S-Lab team at Nanyang Technological University, and the related paper was published at CVPR 2025. The code and model have been open-sourced and are suited to researchers and developers who need to generate 3D urban environments efficiently.

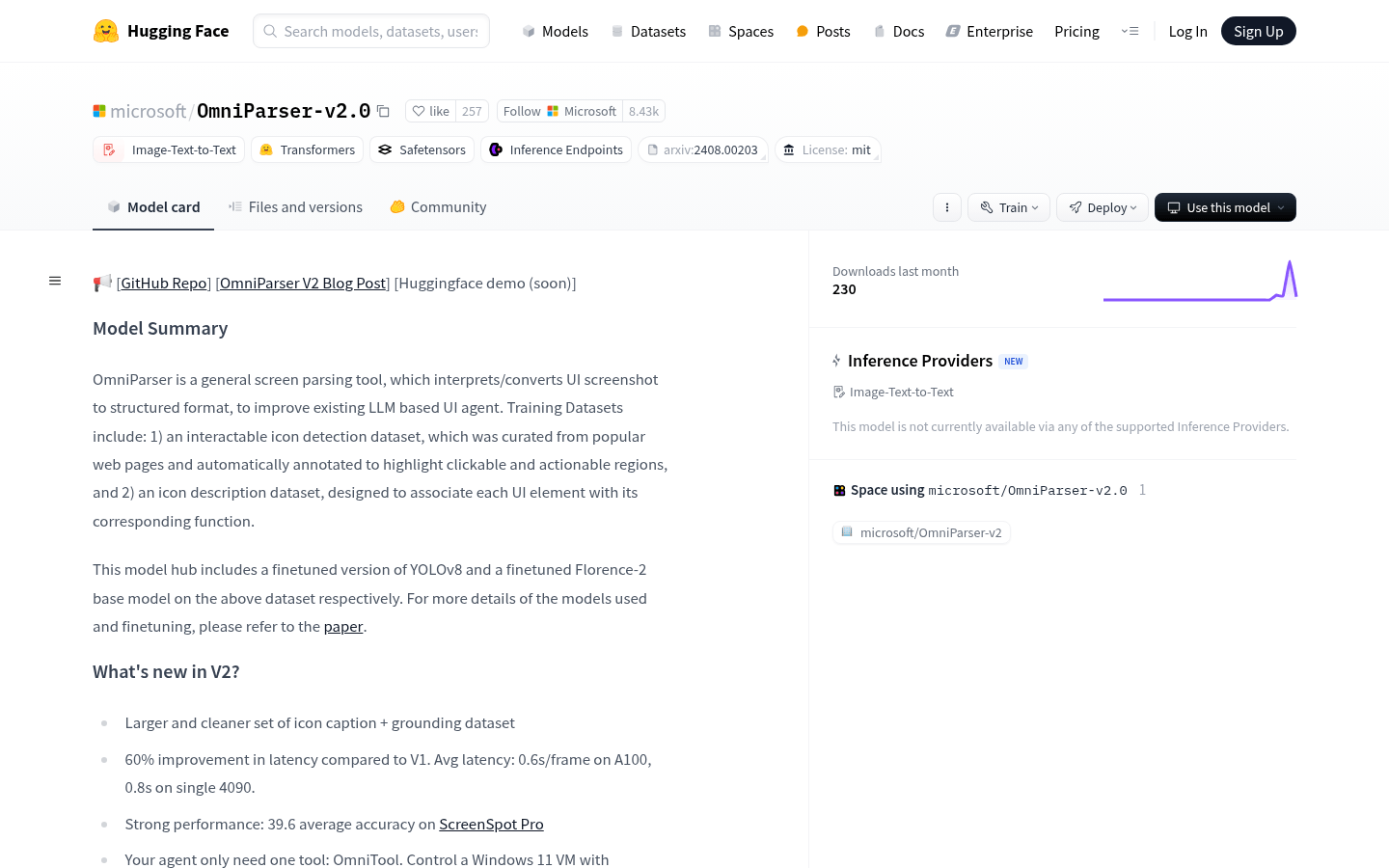

OmniParser-v2.0

OmniParser is an image parsing technology developed by Microsoft that converts irregular screenshots into a structured list of elements, including the locations of interactable areas and functional descriptions of icons. It parses user interfaces using deep learning models such as YOLOv8 and Florence-2. Its main advantages are efficiency, accuracy and wide applicability. OmniParser can significantly improve the performance of UI agents based on large language models (LLMs), enabling them to better understand and operate a variety of user interfaces. It performs well in application scenarios such as automated testing and intelligent assistant development. Its open source nature and flexible license make it a useful tool for developers and researchers.

Ollama OCR for web

ollama-ocr is an Ollama-based optical character recognition (OCR) tool that extracts text from images. It uses visual language models such as LLaVA, Llama 3.2 Vision and MiniCPM-V 2.6 to provide high-precision text recognition. It is useful wherever text needs to be obtained from images, such as document scanning and image content analysis. It is open source, free and easy to integrate into other projects.

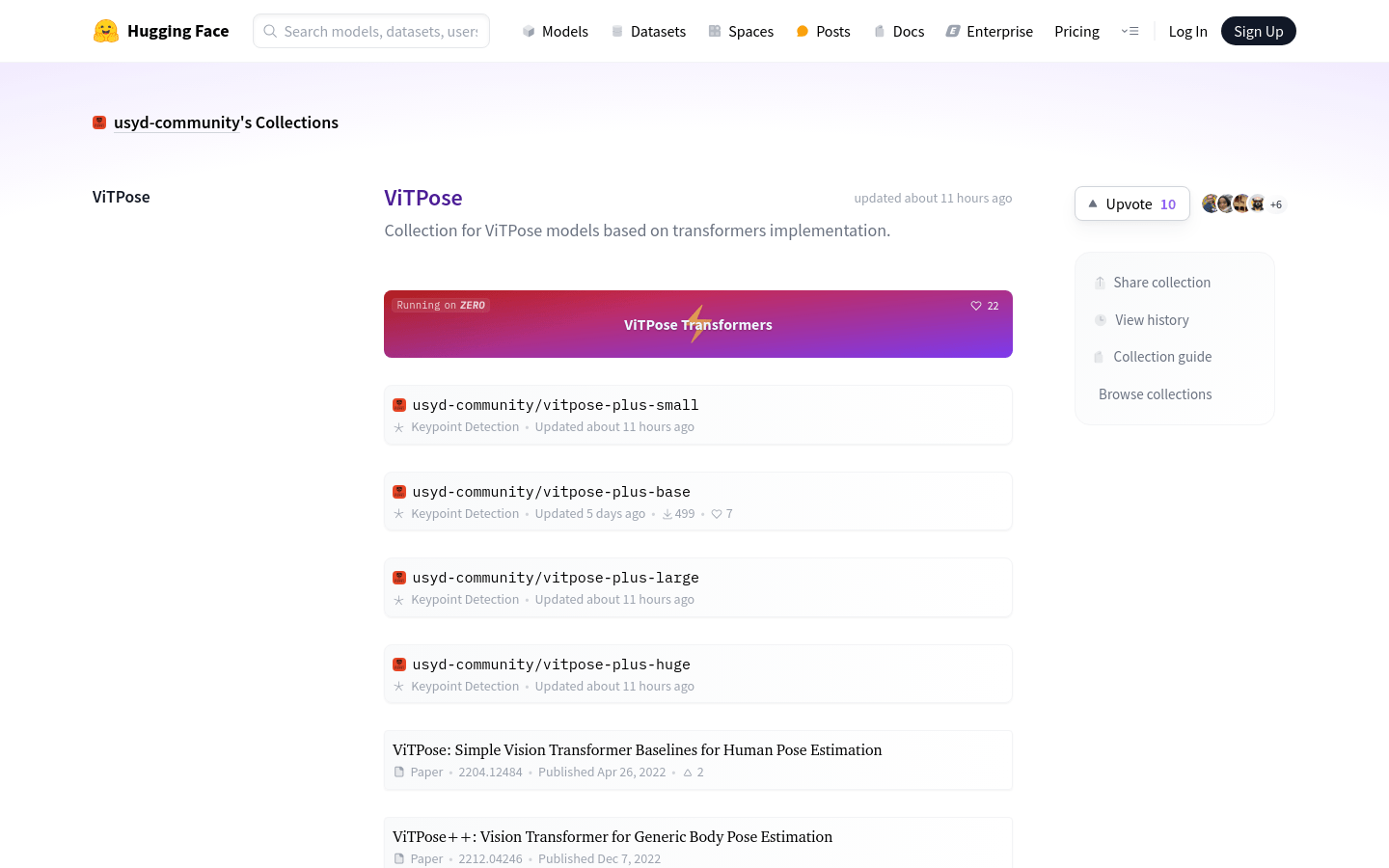

ViTPose

ViTPose is a series of human pose estimation models based on the Transformer architecture. It uses the feature extraction capabilities of the Transformer to provide a simple and effective baseline for human pose estimation. ViTPose models achieve high accuracy and efficiency on multiple datasets. The models are maintained and updated by the University of Sydney community and are available at several scales to suit different application scenarios. They are published in open source form on the Hugging Face platform, where users can download and deploy them for research and application development related to human pose estimation.

SmolVLM

SmolVLM is a small but capable visual language model (VLM) with 2B parameters that leads similar models in memory footprint and efficiency. SmolVLM is fully open source: all model checkpoints, VLM datasets, training recipes and tools are released under the Apache 2.0 license. The model is suited to local deployment in browsers or on edge devices, which reduces inference costs and allows user customization.

Watermark Anything

Watermark Anything is an image watermarking technology developed by Facebook Research that embeds one or more localized watermarks in an image. It enables copyright protection and tracking of image content while preserving image quality. The technique builds on research in deep learning and image processing, and its main advantages are robustness, imperceptibility and flexibility. It is positioned for research and development and is currently provided free of charge to academics and developers.

Ultralight-Digital-Human

Ultralight-Digital-Human is an ultra-lightweight digital human model that can run in real time on mobile devices. The model is open source and, to the best of the developer's knowledge, is the first open source digital human model this lightweight. Its main advantages are its lightweight design, suitability for mobile deployment, and real-time performance. It relies on deep learning, particularly for face synthesis and voice simulation, to achieve high-quality results with low resource consumption. It is currently free and is aimed mainly at technology enthusiasts and developers.

DocLayout-YOLO

DocLayout-YOLO is a deep learning model for document layout analysis that improves accuracy and processing speed through diverse synthetic data and global-to-local adaptive perception. It uses the Mesh-candidate BestFit algorithm to generate DocSynth-300K, a large-scale and diverse dataset that significantly improves fine-tuning performance across document types. It also proposes a global-to-local controllable receptive field module to better handle multi-scale variation in document elements. DocLayout-YOLO performs well on downstream datasets covering a variety of document types, with significant advantages in both speed and accuracy.

LibreFLUX

LibreFLUX is an Apache 2.0 licensed version of FLUX.1-schnell that provides the full T5 context length, uses attention masking, restores classifier-free guidance, and removes most of the FLUX aesthetic fine-tuning/DPO. As a result it is less aesthetically pleasing than base FLUX, but has the potential to be fine-tuned more easily to any new distribution. In its authors' tongue-in-cheek words, LibreFLUX was developed with the core principles of open source software in mind: it is difficult to use, slower and clunkier than proprietary solutions, and has an aesthetic stuck in the early 2000s.

Exifaa

Exifaa is an online image metadata editor that lets users view, edit and delete the EXIF information of images. EXIF information includes the camera model, shooting time, GPS location and similar details, and managing it matters to photography enthusiasts and professional photographers alike. Exifaa offers a simple interface for doing so quickly.
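To make "deleting EXIF information" concrete, here is a minimal sketch of how EXIF data can be stripped from a JPEG file using only the Python standard library. It does not reflect how Exifaa itself works, which the description does not say. The function name `strip_exif` is hypothetical, and the parser is deliberately simplified: it assumes a well-formed file with no fill bytes between segments. In a JPEG, EXIF data lives in APP1 segments (marker `0xFFE1`) whose payload begins with `Exif\0\0`, so removing those segments removes the metadata.

```python
import struct


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string without its EXIF APP1 segments.

    Simplified sketch: walks the header segments up to the start-of-scan
    marker and copies everything except APP1 segments tagged "Exif".
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")

    out = bytearray(b"\xff\xd8")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("corrupt JPEG: expected a marker")
        marker = jpeg[pos + 1]

        # Start of scan: the rest is compressed image data, copy it verbatim.
        if marker == 0xDA:
            out += jpeg[pos:]
            return bytes(out)

        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        segment = jpeg[pos:pos + 2 + length]

        is_exif = marker == 0xE1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment
        pos += 2 + length

    # Copy any trailing bytes (for example a bare end-of-image marker).
    out += jpeg[pos:]
    return bytes(out)
```

A typical use would be `clean = strip_exif(open("photo.jpg", "rb").read())`, where the file name is a placeholder. Viewing or editing individual EXIF fields requires parsing the TIFF structure inside the APP1 payload, which is beyond this sketch.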

Face Recognition, Liveness Detection, ID Document Recognition SDK

MiniAiLive is a provider of contactless biometric authentication solutions. It offers security solutions built on facial recognition, liveness detection and ID document recognition, and is designed to integrate seamlessly with customers' existing systems.

RMBG

AI-Powered Background Removal is an online AI tool that quickly removes the background from user-uploaded images. Its main advantages are privacy protection and local execution: image processing is completed on the user's device without uploading anything to the Internet, which keeps data secure and processing fast. It is also open source and completely free, so users can experiment without worrying about cost.

Glaze

Glaze is a system designed to protect human artists from AI style imitation. It uses machine learning algorithms to make small changes to an artwork so that it looks unchanged to the human eye but presents a completely different artistic style to an AI model. When someone then tries to imitate a specific artist's style, the results generated by the AI differ sharply from what was expected. Glaze is not a permanent solution, but it is a necessary first step in giving artists tools to resist AI imitation.