OpenVoice V2

OpenVoice V2 is a multilingual speech synthesis model that offers high-quality voice cloning and style control.

Product Details

OpenVoice V2 is a text-to-speech (TTS) model released in April 2024. It includes all the features of V1 with improvements: it adopts a different training strategy that delivers better audio quality, and it supports multiple languages, including English, Spanish, French, Chinese, Japanese and Korean. It is also free for commercial use. OpenVoice V2 can accurately clone the tone color of a reference voice and generate speech in a variety of languages and accents. It also supports zero-shot cross-lingual voice cloning: neither the language of the generated speech nor the language of the reference speech needs to be present in the large-scale multilingual training dataset.

Target Users

Researchers and developers: Linux installation guides are provided to support in-depth research and development.

Commercial users: because it is free for commercial use, it suits businesses that need to integrate high-quality speech synthesis into their products.

Users with multilingual needs: its multi-language support suits international users who need cross-lingual speech synthesis.

Examples

Provide realistic voices for video game characters.

Generate instructional content for learners of different languages in educational software.

Create multilingual narration for commercials.

Related Recommendations

Discover more AI tools of similar quality

F5-TTS

F5-TTS is a text-to-speech (TTS) model developed by the SWivid team. It uses deep learning to convert text into natural, fluent speech that stays faithful to the input text. Beyond naturalness, the model also emphasizes the clarity and accuracy of the generated speech. It suits applications that require high-quality speech synthesis, such as voice assistants, audiobook production and automated news broadcasts. The model is published on Hugging Face, where users can easily download and deploy it. It supports multiple languages and voice types and is flexible and extensible.

Praises

Praises is a text-to-speech (TTS) tool that makes information easier to access by converting text into spoken audio. It supports multiple speech APIs, including the Azure API and the Edge API, and multiple languages, so it can serve users around the world. Its main advantages are support for several speech synthesis back ends, ease of integration and use, and an open-source codebase that developers can freely modify and optimize. Praises was developed by the individual developer ElmTran and is released under the MIT license, which means users can use and modify the software free of charge.

FineVoice

FineVoice is a multifunctional AI dubbing platform that uses artificial intelligence to provide realistic, personalized voice services. Besides converting text into natural, lifelike speech, it can perform speech-to-text, voice changing and other operations, broadening the possibilities for content creation. Its main advantages are efficiency, low cost, multi-language support and ease of use. It is especially suitable for individuals and businesses that need to produce large amounts of dubbed content quickly.

Llama 3.2 3b Voice

Llama 3.2 3b Voice is a speech synthesis model hosted on Hugging Face that converts text into natural, fluent speech. It uses deep learning to imitate the intonation, rhythm and emotion of human speech, and suits scenarios such as voice assistants, audiobooks and automated broadcasts.

ebook2audiobookXTTS

ebook2audiobookXTTS is a tool that uses Calibre and Coqui TTS to convert e-books into audiobooks. It preserves chapters and metadata, optionally supports voice cloning with a custom voice model, and works in multiple languages. Its main advantage is turning text into high-quality audiobooks, which suits users who need to convert large amounts of text into audio, such as visually impaired readers, people who prefer listening to books, and foreign-language learners.

OptiSpeech

OptiSpeech is an efficient, lightweight and fast text-to-speech model designed to run on-device. It uses deep learning to convert text into natural-sounding speech, making it suitable for applications that need speech synthesis on mobile devices or embedded systems. Its development was supported by GPU resources from Pneuma Solutions, which significantly accelerated the work.

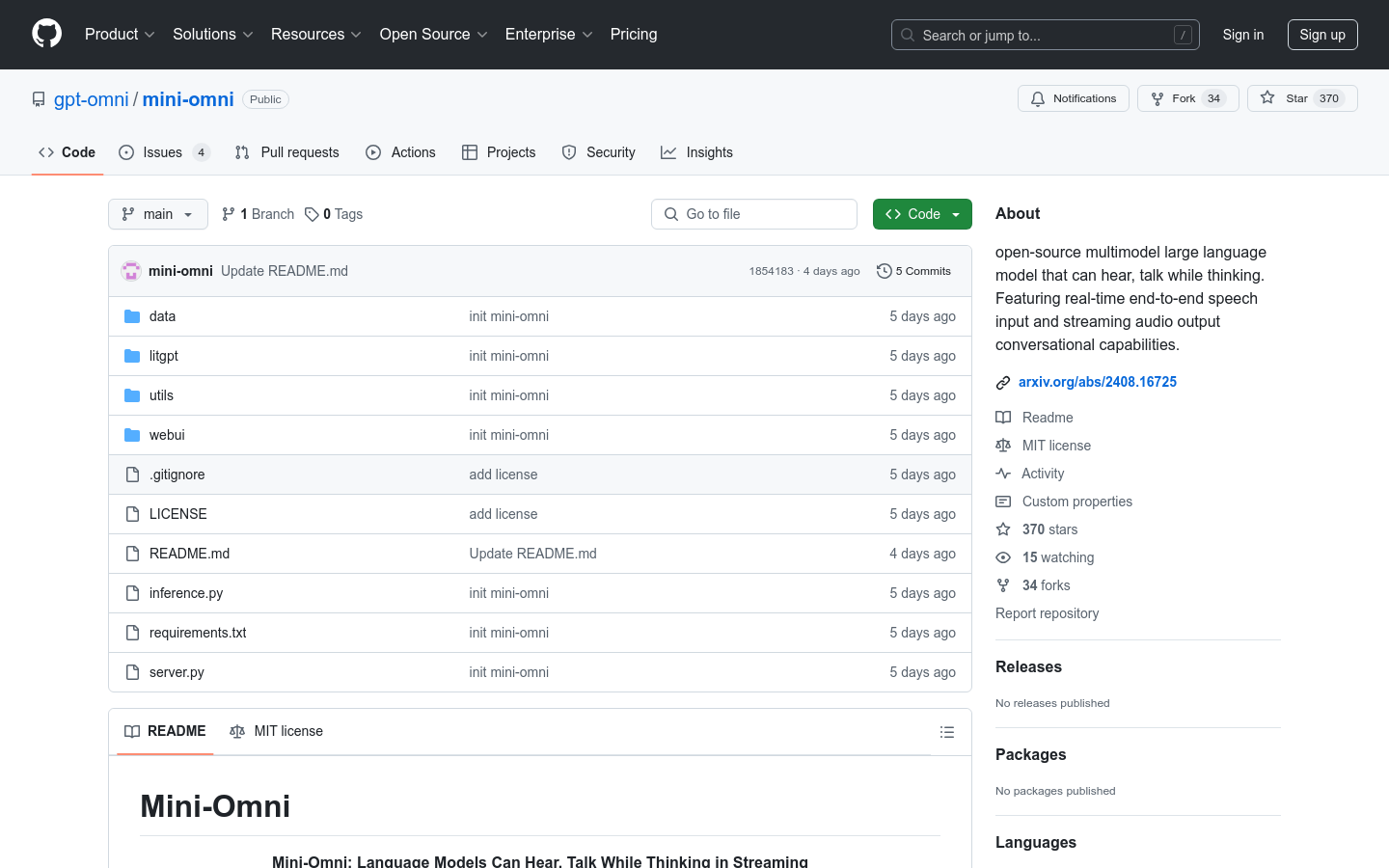

Mini-Omni

Mini-Omni is an open-source multimodal large language model that supports real-time spoken conversation with speech input and streaming audio output. It performs speech-to-speech dialogue end to end, without separate ASR or TTS models. It can also "talk while thinking", generating text and audio simultaneously. Batch inference for "Audio-to-Text" and "Audio-to-Audio" further improves its performance.

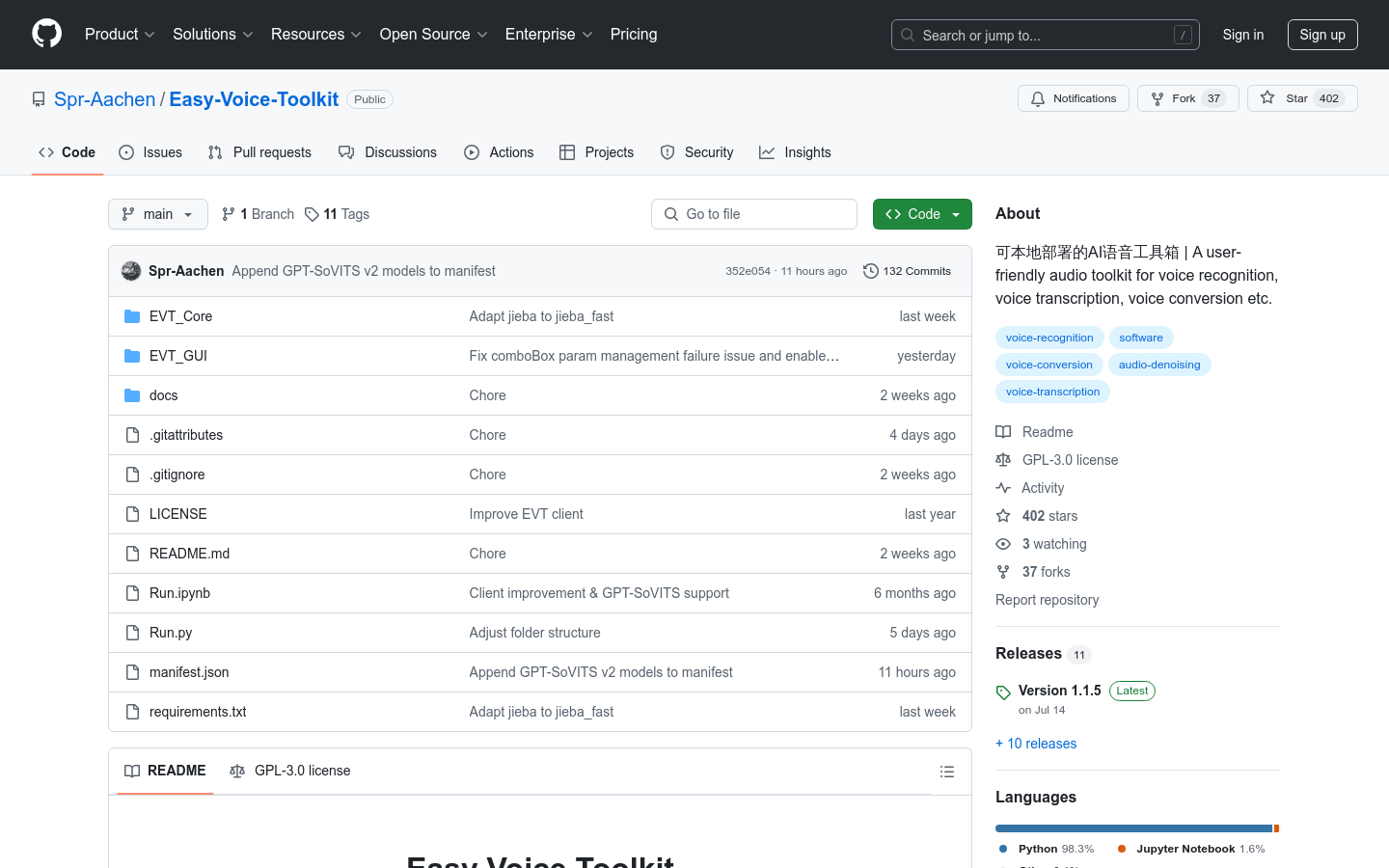

Easy Voice Toolkit

Easy Voice Toolkit is an AI voice toolbox built on open-source voice projects. It provides a range of automated audio tools, including voice model training. The tools are integrated into a complete workflow: users can run them individually as needed, or in sequence to turn raw audio files step by step into the voice model they want.

ElevenStudios

ElevenStudios provides fully managed video and podcast dubbing, combining AI with bilingual dubbing experts to translate content into multiple languages and reach global audiences. Its AI voice model makes the dubbed audio sound like the original speaker talking in the target language, while the translation stays faithful to the original meaning and resonates with foreign audiences.

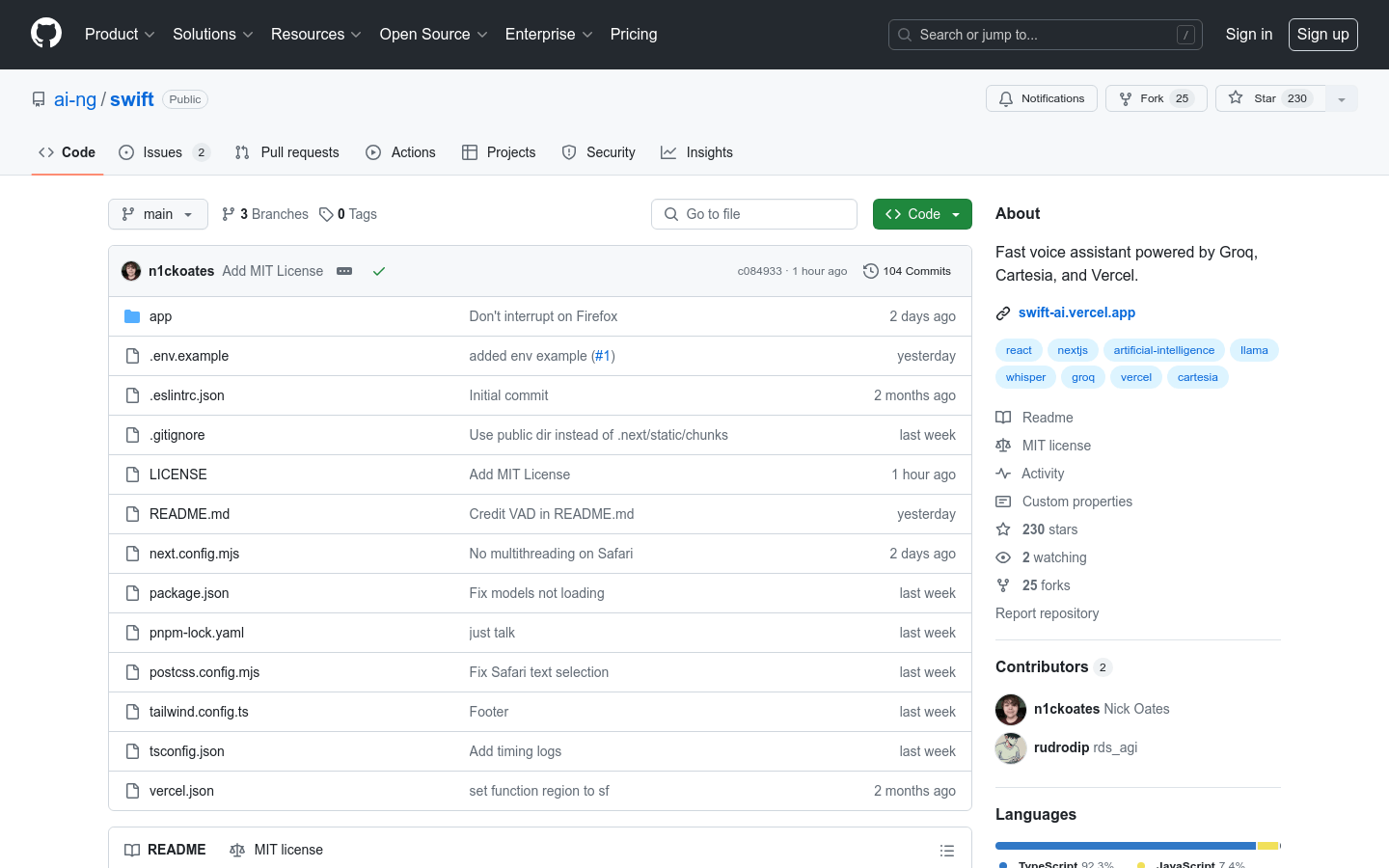

Swift

Swift is a fast AI voice assistant powered by Groq, Cartesia and Vercel. It uses Groq for fast inference with OpenAI Whisper and Meta Llama 3, and Cartesia's Sonic speech model for fast speech synthesis, streaming the audio to the front end in real time. Voice activity detection (VAD) detects when the user is speaking and runs callbacks on the captured speech clips. Swift is a Next.js project written in TypeScript and deployed on Vercel.

ChatTTS-Forge

ChatTTS-Forge is a project built around the ChatTTS text-to-speech model. It implements an API server and a Gradio-based WebUI. It provides comprehensive API services, supports generating long texts of more than 1,000 words while keeping the output consistent, and manages style through 32 built-in styles.

Seed-TTS

Seed-TTS is a family of large-scale autoregressive text-to-speech (TTS) models from ByteDance that can generate speech virtually indistinguishable from human speech. It excels at speech in-context learning, speaker similarity and naturalness, and fine-tuning can further improve its subjective ratings. Seed-TTS also offers fine control over speech attributes such as emotion and can generate highly expressive and diverse speech. The authors additionally propose a self-distillation method for speech factorization and a reinforcement learning method that improves robustness, speaker similarity and controllability. They also present Seed-TTS_DiT, a non-autoregressive (NAR) variant that uses a fully diffusion-based architecture and generates speech end to end without relying on pre-estimated phoneme durations.

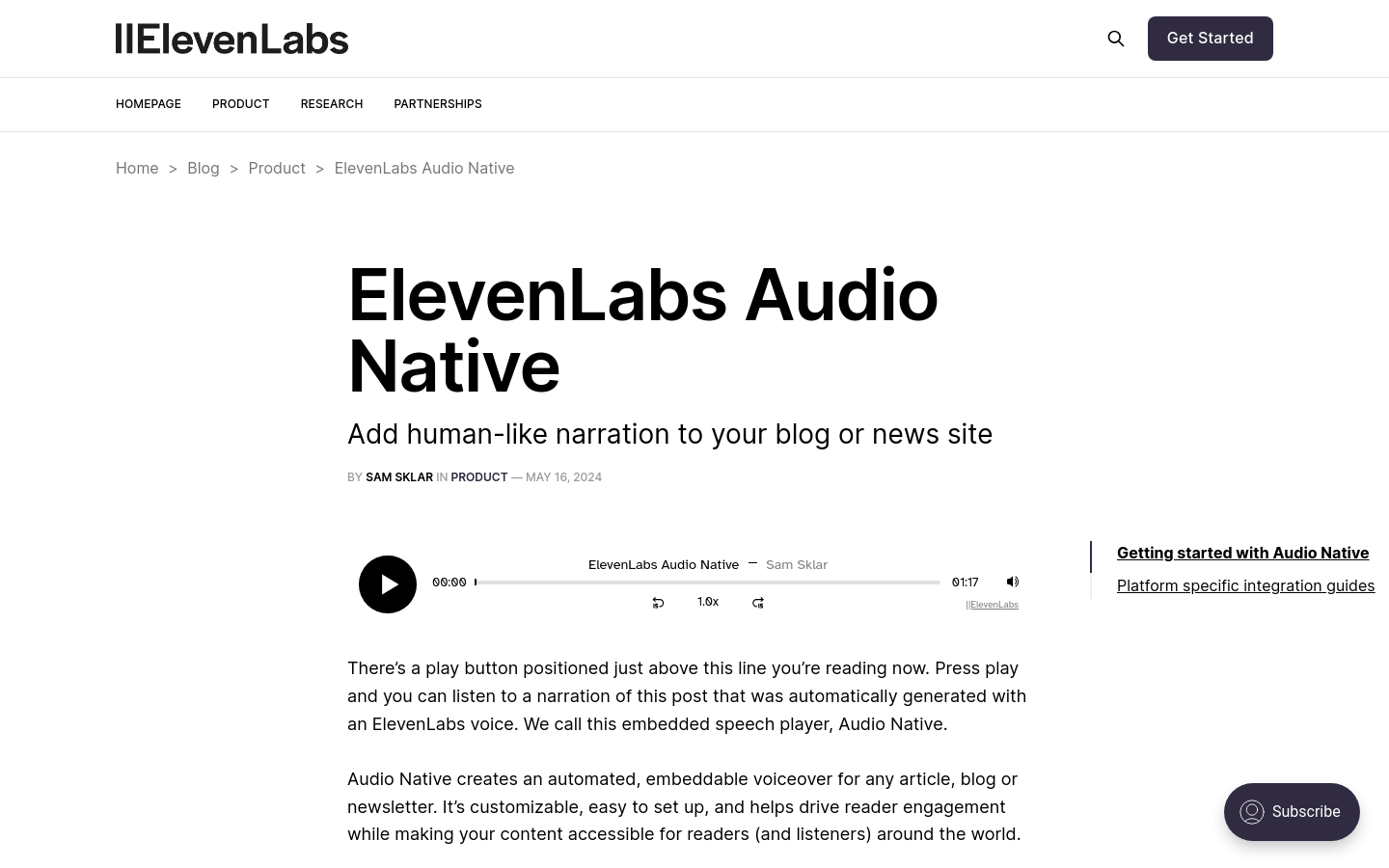

ElevenLabs Audio Native

ElevenLabs Audio Native is an embeddable audio player that automatically generates human-like narration for any article, blog post or newsletter. It is customizable and easy to set up, and it helps increase reader engagement while making content more accessible to readers and listeners around the world.

Parler-TTS

Parler-TTS is a lightweight text-to-speech (TTS) model developed by Hugging Face that generates high-quality, natural-sounding speech in a specified speaker style (gender, pitch, speaking style, etc.). It reproduces the work of Dan Lyth and Simon King, from Stability AI and the University of Edinburgh respectively. Unlike many other TTS models, Parler-TTS is fully open source: the datasets, preprocessing, training code and weights are all released. Features include high-quality, natural-sounding speech output, flexible use and deployment, and richly annotated speech datasets. Pricing: Free.

Azure AI Studio - Speech Service

Azure AI Studio is a set of artificial intelligence services from Microsoft Azure that includes speech services. These cover speech recognition, speech synthesis, speech translation and other capabilities, helping developers add speech features to their applications.

Voice Engine

Voice Engine is a speech synthesis model that can generate natural speech closely resembling the original speaker from a single 15-second audio sample. Early applications span education, entertainment, healthcare and other fields: it can provide reading assistance to non-readers, translate speech for video and podcast content, and give unique voices to non-verbal people. Its key advantages are that it needs only a short voice sample, produces high-quality speech and supports multiple languages. Voice Engine is currently in a small-scale preview, and OpenAI is discussing its potential applications and ethical challenges with people from many sectors.