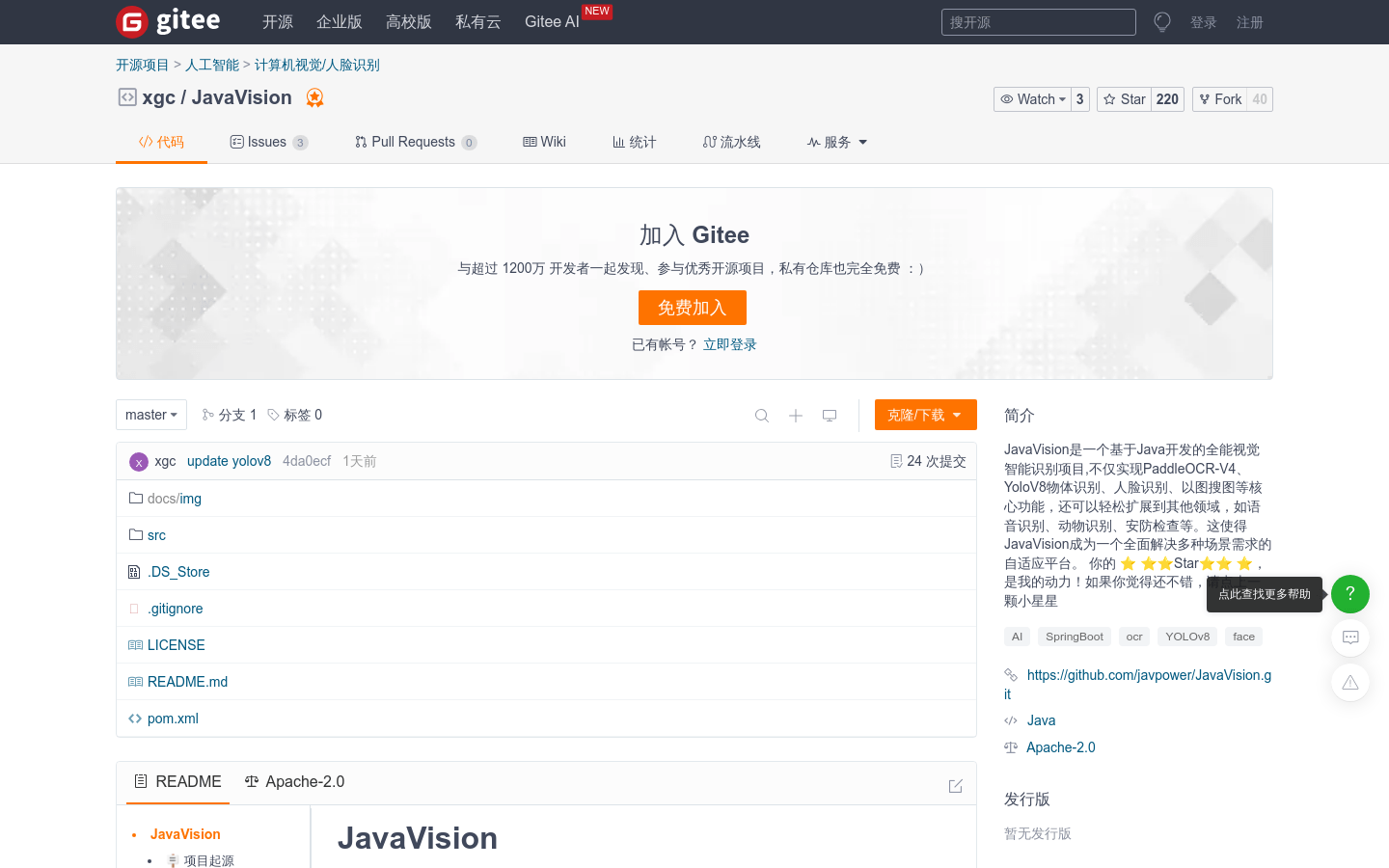

JavaVision

All-round visual intelligent recognition project based on Java

Product Details

JavaVision is an all-round visual recognition project built in Java. It implements core functions such as PaddleOCR-V4, YoloV8 object recognition, face recognition, and image search, and it can be extended to other fields such as speech recognition, animal recognition, and security inspection. The project is built on the SpringBoot framework and emphasizes versatility, high performance, reliability, easy integration, and flexible scalability. JavaVision aims to give Java developers a comprehensive visual recognition solution so they can build advanced, reliable, and easy-to-integrate AI applications in a language they already know.

Target Users

Java developers: get a visual recognition solution in a familiar programming language environment

Enterprise-level users: build stable and reliable enterprise-level AI applications

Educators: use it as a teaching tool to help students understand AI image recognition technology

Independent developers: quickly integrate and develop personalized visual recognition applications

Examples

Enterprises use JavaVision for security monitoring, detecting in real time whether workers are wearing helmets

Developers use JavaVision to develop personalized OCR applications to improve work efficiency

Educational institutions use JavaVision as a teaching aid to strengthen students' practical AI skills

Related Recommendations

Discover more similar quality AI tools

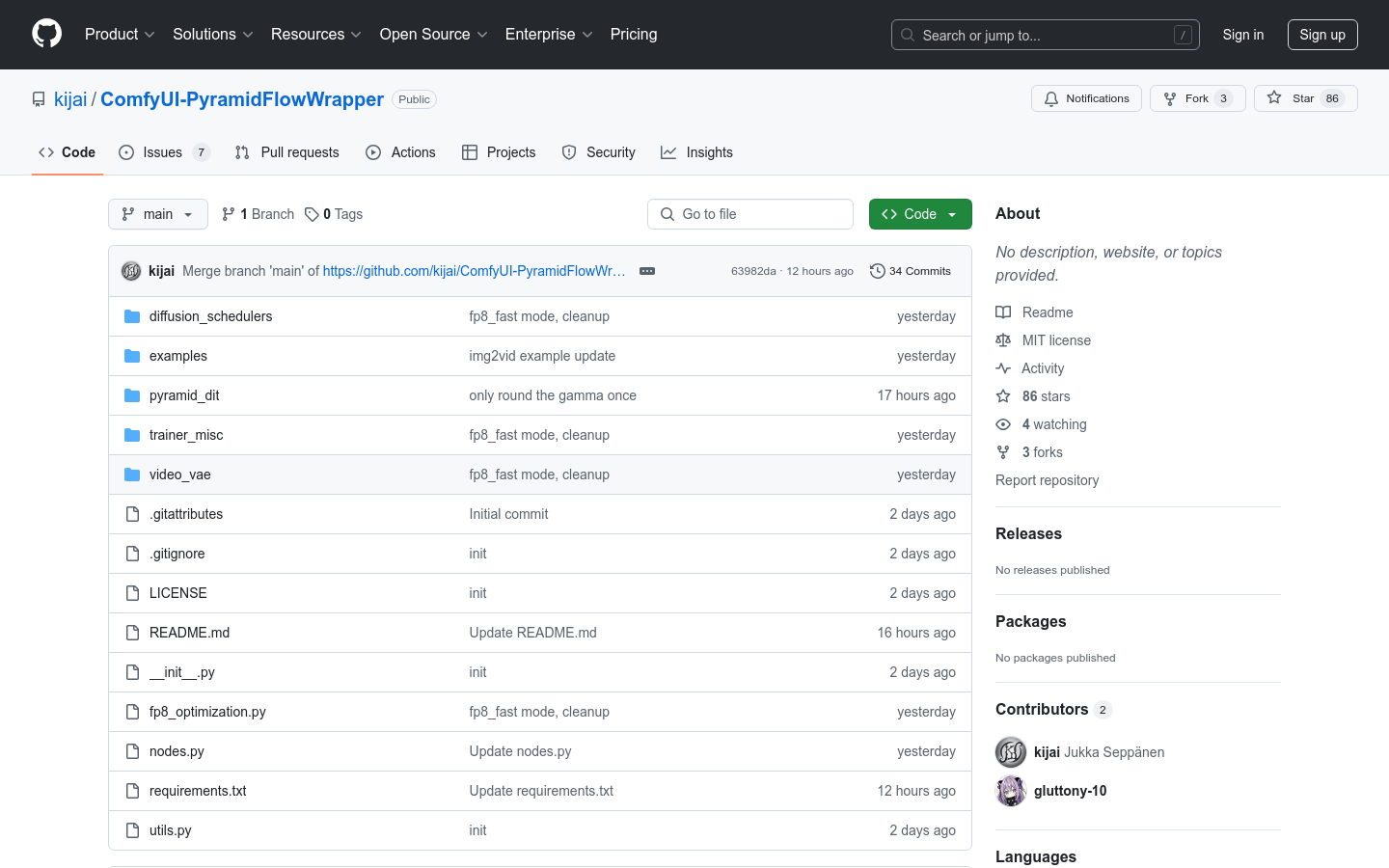

ComfyUI-PyramidFlowWrapper

ComfyUI-PyramidFlowWrapper is a set of wrapper nodes for the Pyramid-Flow model that makes the model usable through ComfyUI's interface and workflows. The model uses deep learning to generate and process visual content and can handle large amounts of data efficiently. It is an open source project started and maintained by developer kijai. Not all functions are implemented yet, but it is already usable. As an open source project it is free, and it mainly targets developers and technology enthusiasts.

ComfyUI LLM Party

ComfyUI LLM Party aims to provide a complete set of LLM workflow nodes built on the ComfyUI front end, so users can quickly build their own LLM workflows and integrate them into existing image workflows.

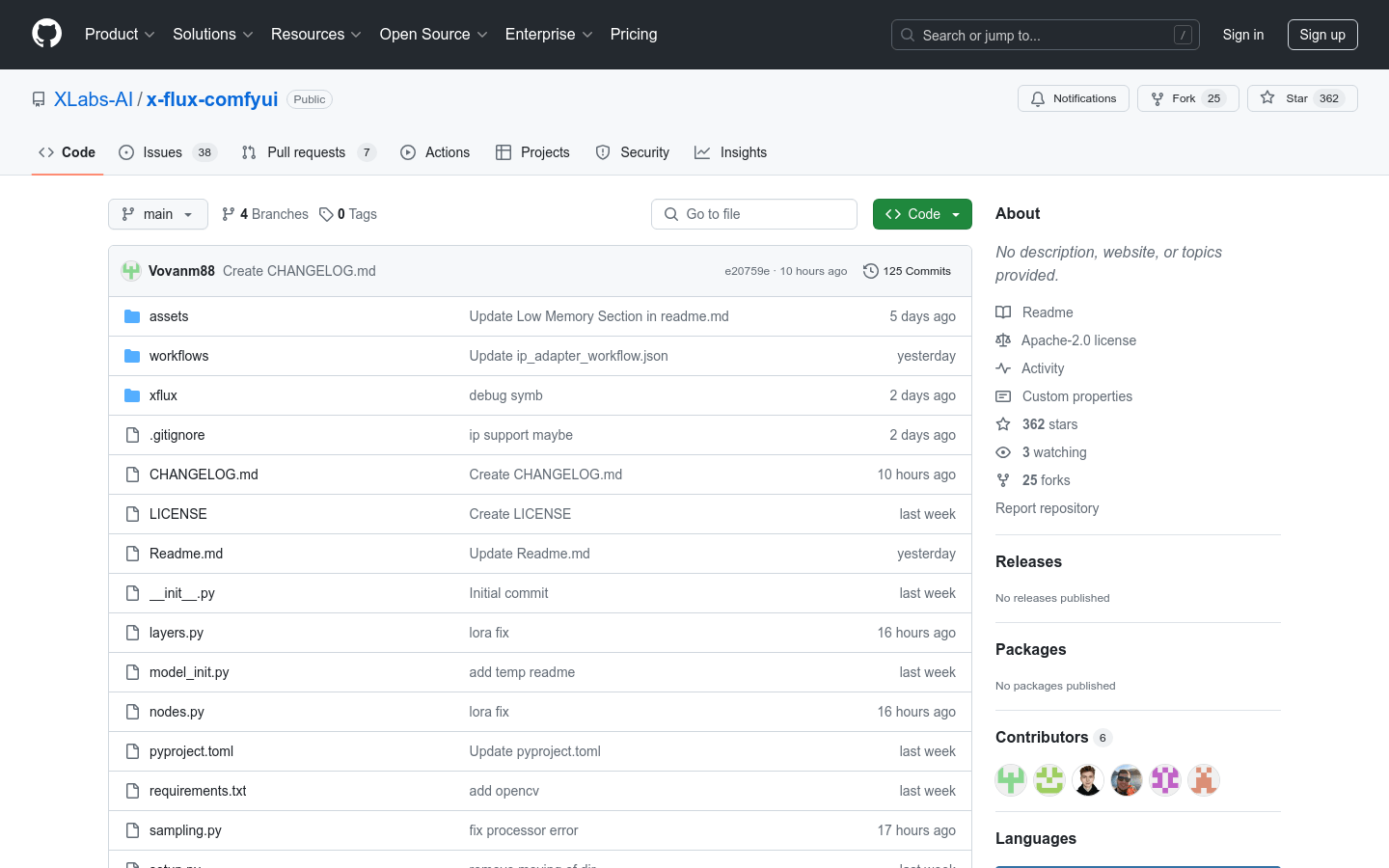

x-flux-comfyui

x-flux-comfyui is an AI model tool integrated into ComfyUI. It provides model training, model loading, and image processing functions. The tool supports a low memory mode that reduces VRAM usage, which suits users who need to run AI models in resource-constrained environments. It also provides an IP Adapter function that works with OpenAI's CLIP ViT model to improve the diversity and quality of generated images.

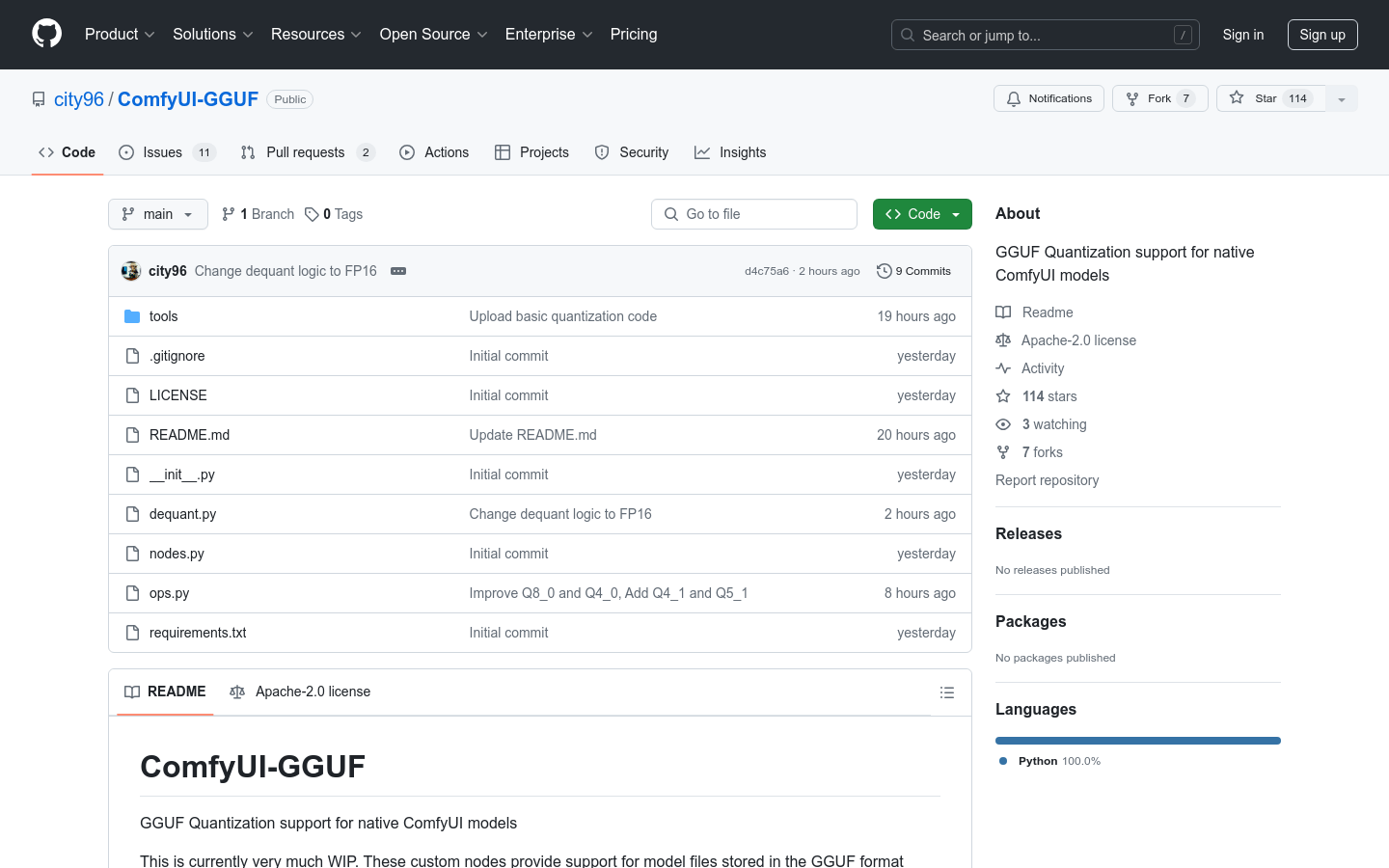

ComfyUI-GGUF

ComfyUI-GGUF is a project that provides GGUF quantization support for native ComfyUI models. It allows model files to be stored in GGUF, a format popularized by llama.cpp. Although quantization was not practical for regular UNET models (conv2d), transformer/DiT models such as flux seem to be less affected by it. This allows them to run on low-end GPUs using variable-bitrate quantizations with far fewer bits per weight.
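To make the "bits per weight" trade-off concrete, here is a minimal sketch of symmetric block quantization in plain Python. It is not the GGUF format or the ComfyUI-GGUF implementation; the function names (`quantize_block`, `dequantize_block`, `max_error`) and the sample weights are hypothetical and chosen only for illustration. The idea is that each block of weights is stored as small integers plus one shared scale, so fewer bits mean a smaller file but a larger rounding error.

```python
from typing import List, Tuple


def quantize_block(weights: List[float], bits: int) -> Tuple[float, List[int]]:
    """Quantize one block of weights to signed integers of the given bit width.

    Illustrative only: real GGUF quantization types use more elaborate layouts.
    """
    qmax = 2 ** (bits - 1) - 1              # 7 for 4-bit, 127 for 8-bit
    peak = max(abs(w) for w in weights)
    scale = peak / qmax if peak > 0 else 1.0
    # Round each weight to the nearest integer code and clamp to the valid range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    """Recover approximate weights from the shared scale and integer codes."""
    return [c * scale for c in codes]


def max_error(weights: List[float], bits: int) -> float:
    """Largest absolute difference between original and restored weights."""
    scale, codes = quantize_block(weights, bits)
    restored = dequantize_block(scale, codes)
    return max(abs(a - b) for a, b in zip(weights, restored))


# Hypothetical sample block of weights.
block = [0.12, -0.50, 0.33, 0.07, -0.21, 0.48, -0.02, 0.29]
err4 = max_error(block, 4)   # coarser: 4 bits per weight
err8 = max_error(block, 8)   # finer: 8 bits per weight
```

In this sketch the rounding error is bounded by half the block's scale, so the 8-bit version reconstructs the block more accurately than the 4-bit one at twice the storage cost per weight.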

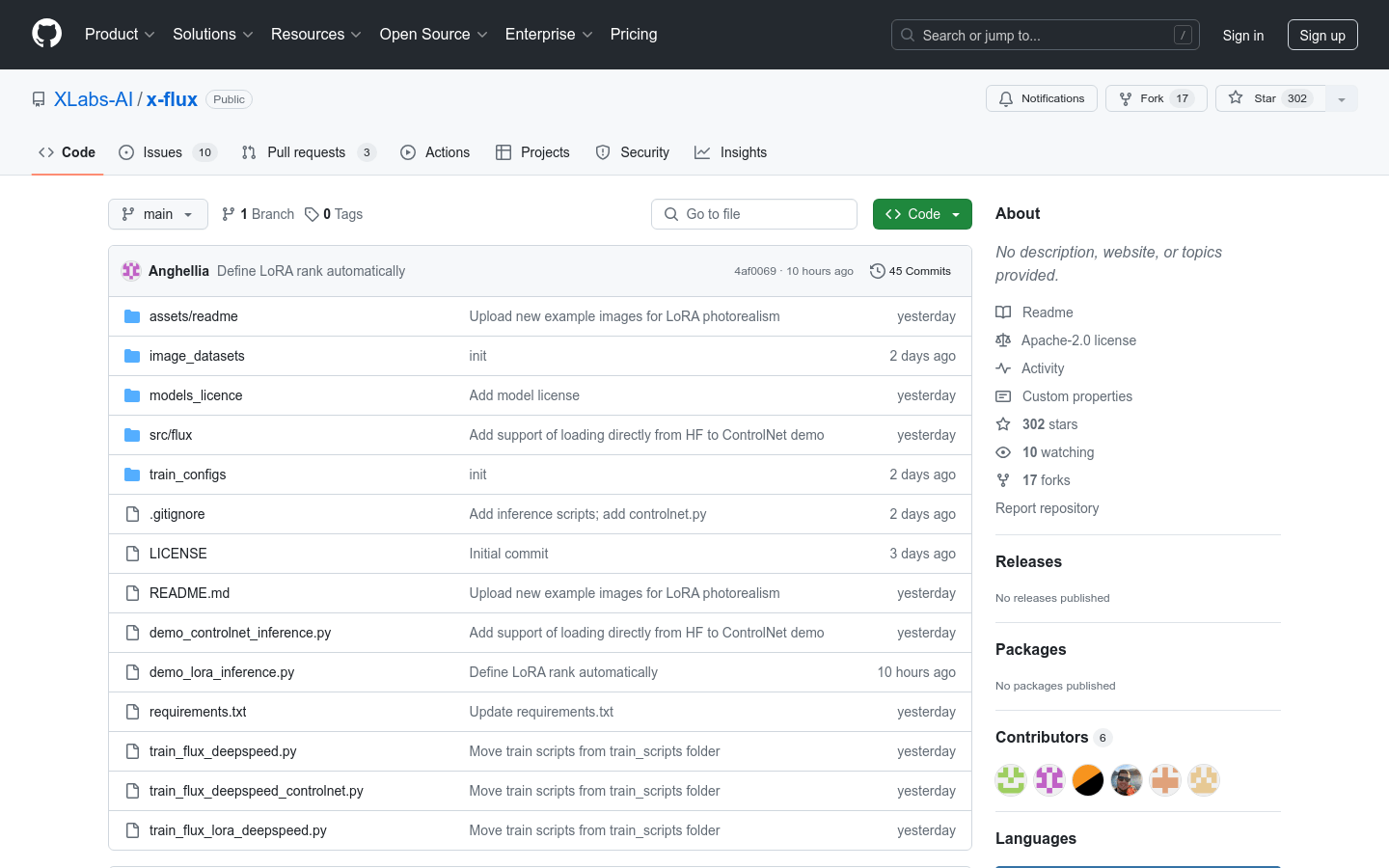

x-flux

x-flux is a set of deep learning model training scripts released by the XLabs AI team, covering LoRA and ControlNet models. Training uses DeepSpeed and supports 512x512 and 1024x1024 image sizes, and the corresponding training configuration files and examples are provided. The scripts aim to improve the quality and efficiency of AI image generation.

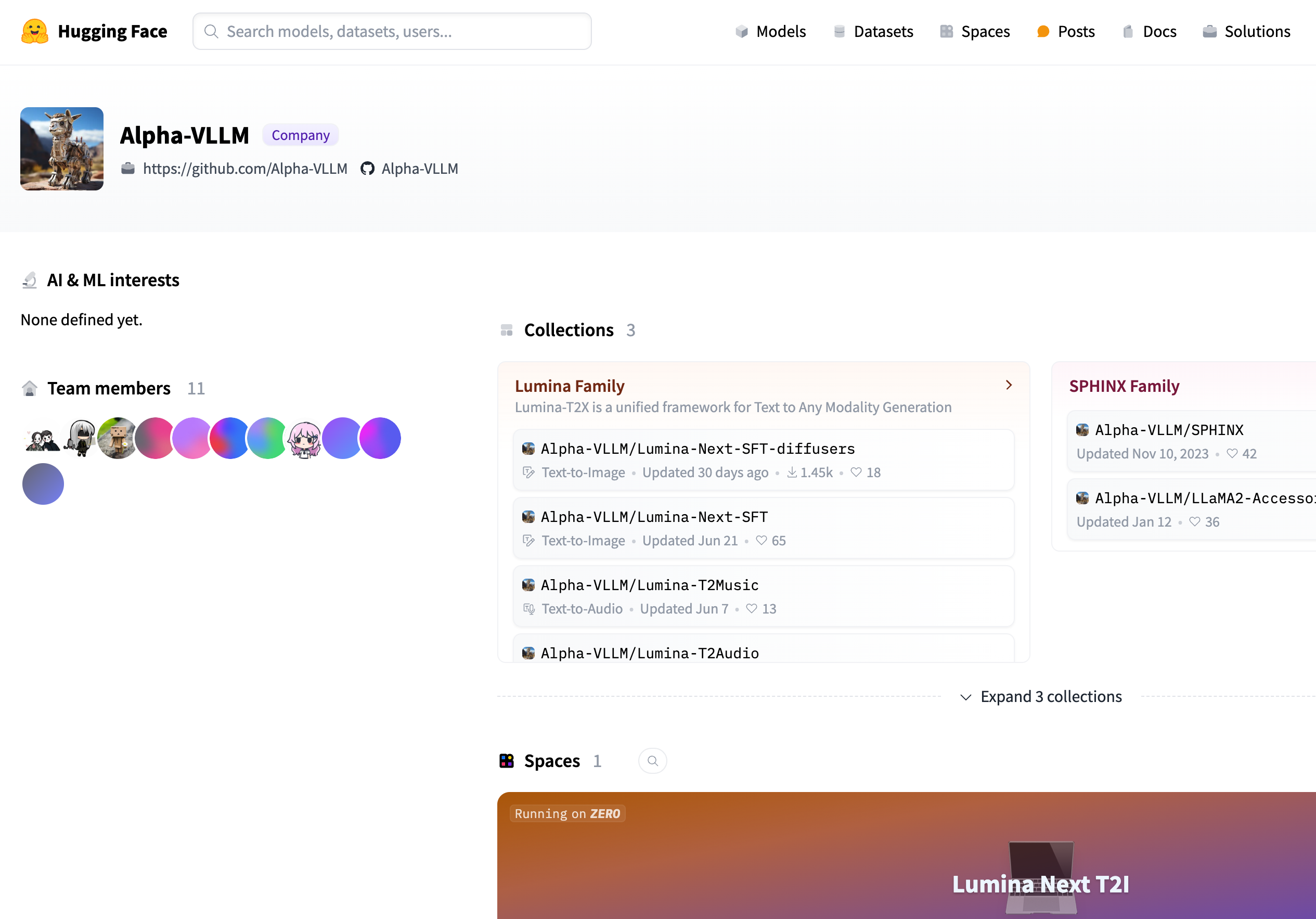

Alpha-VLLM

Alpha-VLLM provides a series of models that generate multi-modal content, such as images and audio, from text. These models are based on deep learning and can be applied in content creation, data augmentation, automated design, and other fields.

ComfyUI-Sub-Nodes

ComfyUI-Sub-Nodes is an open source project on GitHub that adds subgraph node functionality to ComfyUI. It allows users to create and use subgraphs in ComfyUI to improve workflow organization and reusability. This plug-in is especially suitable for developers who need to manage complex workflows in the UI.

MG-LLaVA

MG-LLaVA is a multimodal large language model (MLLM) that enhances visual processing by integrating a multi-granularity vision flow covering low-resolution, high-resolution, and object-centric features. It adds a high-resolution visual encoder to capture fine details, which are fused with the base visual features through a Conv-Gate fusion network. Object-level features are also integrated, using bounding boxes identified by offline detectors, to further refine the model's object recognition capabilities. MG-LLaVA is trained exclusively on publicly available multi-modal data through instruction tuning and demonstrates strong perception skills.
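The idea of gating detail features into base features can be illustrated with a generic gated fusion in plain Python. This is only a sketch of the general technique, not MG-LLaVA's Conv-Gate network: the function `gated_fusion`, its per-channel gate parameters, and the sample vectors are all assumptions made for illustration. A gate between 0 and 1 is computed from both inputs and decides how much of the high-resolution detail is added to the base feature.

```python
import math
from typing import List, Tuple


def sigmoid(x: float) -> float:
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(
    base: List[float],
    detail: List[float],
    gate_weights: List[Tuple[float, float]],
    gate_bias: List[float],
) -> List[float]:
    """Fuse two feature vectors channel by channel with a learned-style gate.

    Hypothetical sketch: for each channel, gate = sigmoid(wb*base + wd*detail + bias)
    and the output is base + gate * detail.
    """
    if not (len(base) == len(detail) == len(gate_weights) == len(gate_bias)):
        raise ValueError("all inputs must have the same number of channels")
    fused = []
    for b, d, (wb, wd), bias in zip(base, detail, gate_weights, gate_bias):
        gate = sigmoid(wb * b + wd * d + bias)
        fused.append(b + gate * d)
    return fused


# Hypothetical two-channel example with zero weights and bias,
# so every gate equals sigmoid(0) = 0.5.
base_features = [1.0, 2.0]
detail_features = [0.4, -0.2]
half_open = gated_fusion(base_features, detail_features, [(0.0, 0.0)] * 2, [0.0, 0.0])
```

With a strongly negative bias the gate closes and the output stays close to the base features, which is how a gate can suppress detail that is not useful.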

AsyncDiff

AsyncDiff is an asynchronous denoising acceleration scheme for parallelizing diffusion models. It enables parallel processing of the model by splitting the noise prediction model into multiple components and distributing them to different devices. This approach significantly reduces inference latency with minimal impact on generation quality. AsyncDiff supports multiple diffusion models, including Stable Diffusion 2.1, Stable Diffusion 1.5, Stable Diffusion x4 Upscaler, Stable Diffusion XL 1.0, ControlNet, Stable Video Diffusion, and AnimateDiff.

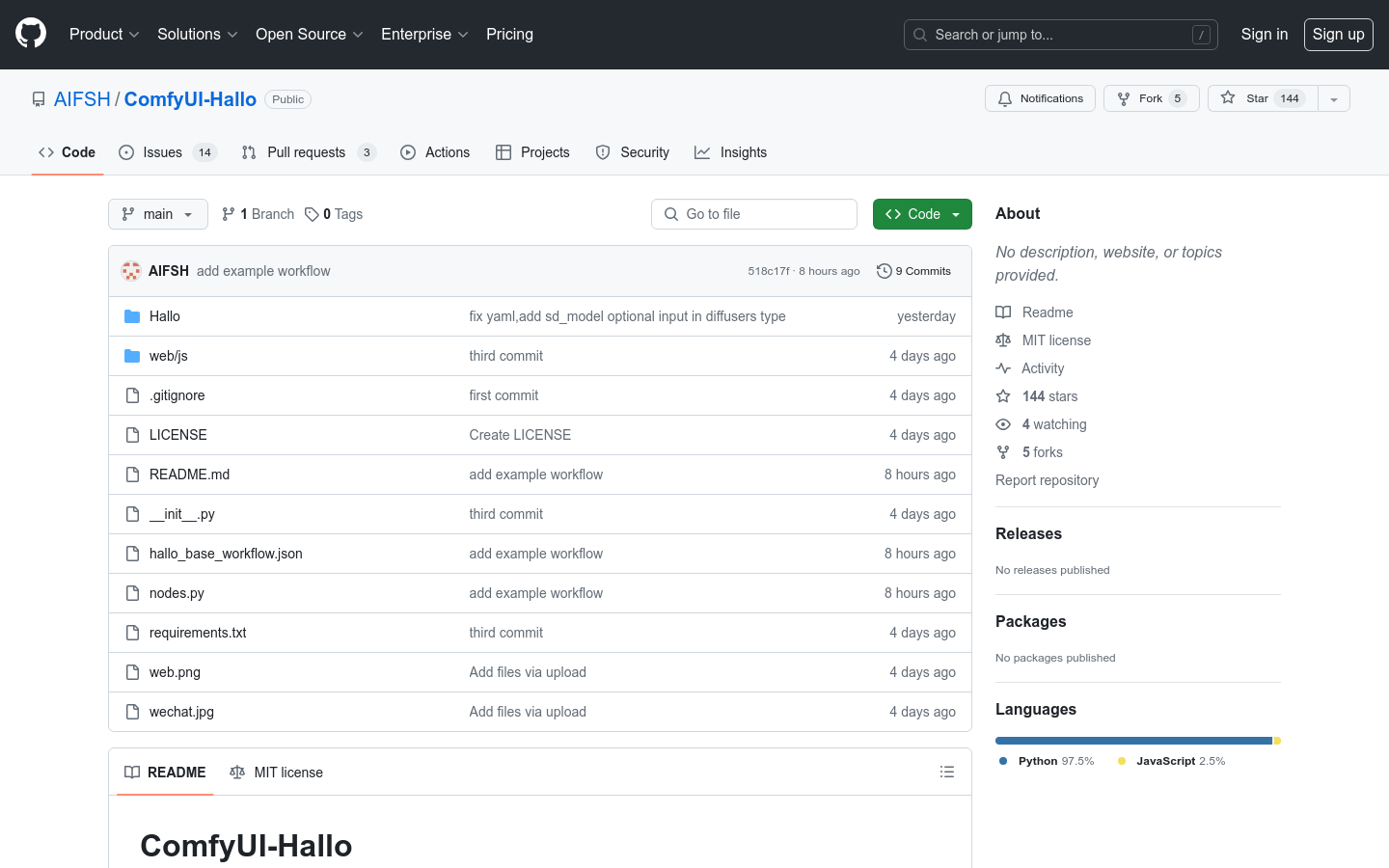

ComfyUI-Hallo

ComfyUI-Hallo is a ComfyUI plug-in customized for Hallo models. It relies on ffmpeg being available on the command line, and model weights can be downloaded from Hugging Face or downloaded manually and placed in a specified directory. It gives developers an easy-to-use interface for integrating Hallo models, improving development efficiency and user experience.

ComfyUI-LuminaWrapper

ComfyUI-LuminaWrapper is an open source Python wrapper for simplifying the loading and use of Lumina models. It supports custom nodes and workflows, making it easier for developers to integrate Lumina models into their projects. This plug-in is mainly aimed at developers who want to use Lumina models for deep learning or machine learning in a Python environment.

EVE

EVE is an encoder-free vision-language model jointly developed by researchers from Dalian University of Technology, the Beijing Academy of Artificial Intelligence, and Peking University. It handles images of different aspect ratios well, surpassing Fuyu-8B in performance and approaching modular encoder-based LVLMs. EVE is also efficient in both data and training: it uses 33M publicly available samples for pre-training, 665K LLaVA SFT samples to train the EVE-7B model, and an additional 1.2M SFT samples to train the EVE-7B (HD) model. Its development follows an efficient, transparent, and practical strategy, opening up a new approach to decoder-only cross-modal architectures.

ComfyUI Ollama

ComfyUI Ollama is a set of custom nodes for ComfyUI workflows built on the ollama Python client. It lets users integrate large language models (LLMs) into their workflows or simply experiment with GPT-style models. Its main advantage is that it can interact with an Ollama server, so users can run image queries, query an LLM with a given prompt, and run LLM queries with fine-tuned parameters while keeping the context of the generation chain.

llava-llama-3-8b-v1_1

llava-llama-3-8b-v1_1 is a LLaVA model fine-tuned with XTuner, based on meta-llama/Meta-Llama-3-8B-Instruct and CLIP-ViT-Large-patch14-336, using the ShareGPT4V-PT and InternVL-SFT datasets. The model is designed for joint processing of images and text, has strong multi-modal capabilities, and works with various downstream deployment and evaluation toolkits.

MiniGemini

Mini-Gemini is a multi-modal vision-language model that supports a series of dense and MoE large language models from 2B to 34B and offers image understanding, reasoning, and generation capabilities. It is built on LLaVA and uses dual visual encoders to provide low-resolution visual embeddings and high-resolution candidate regions. Patch information mining then performs patch-level mining between the high-resolution regions and the low-resolution visual queries, and text and images are fused for understanding and generation tasks. It supports multiple visual understanding benchmarks, including COCO, GQA, OCR-VQA, and VisualGenome.

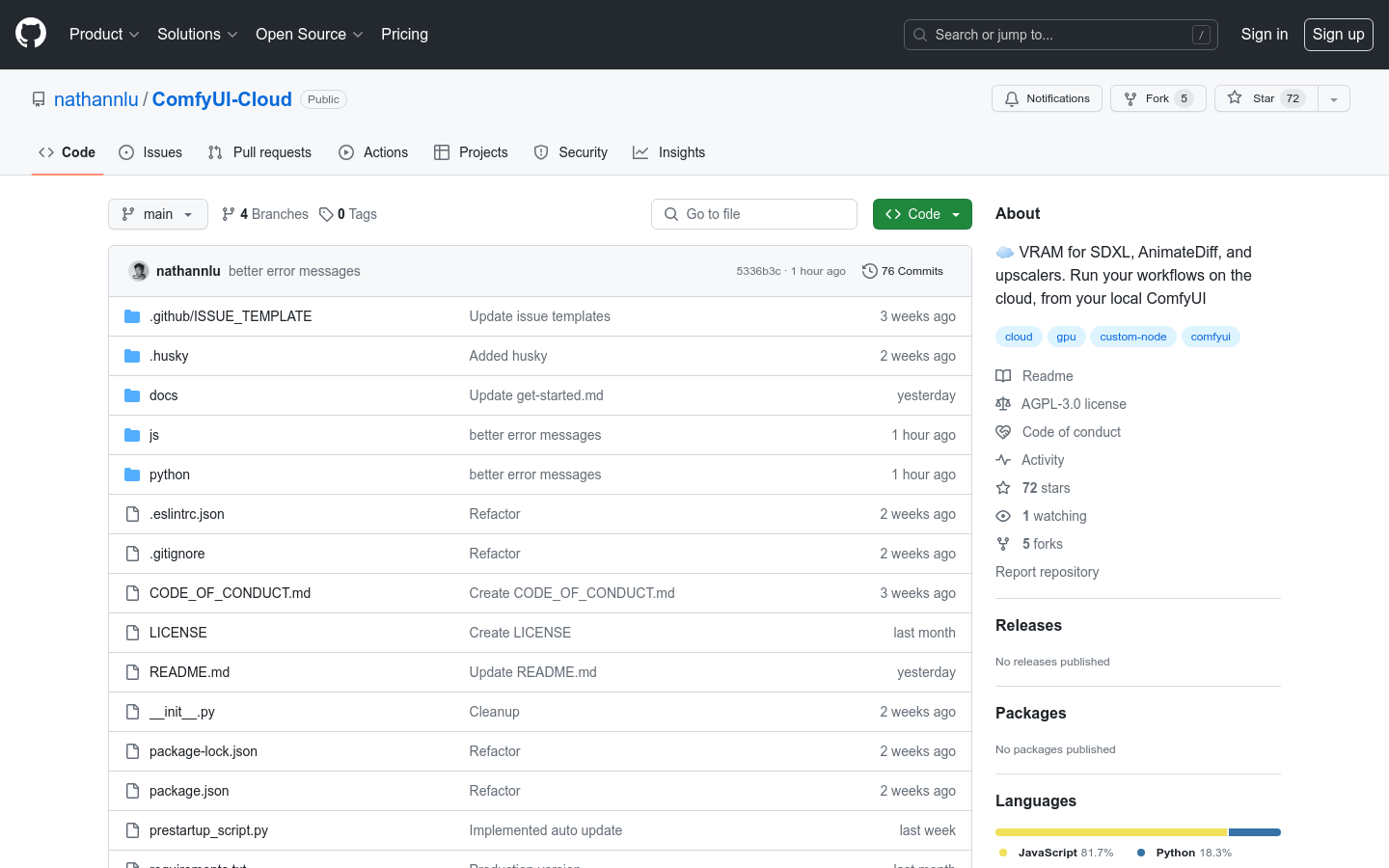

ComfyUI-Cloud

ComfyUI-Cloud is a custom node that allows users to take full control of ComfyUI locally while leveraging cloud GPU resources to run their workflows. It allows users to run workflows that require high VRAM without the need to import custom nodes/models to a cloud provider or spend money on new GPUs.