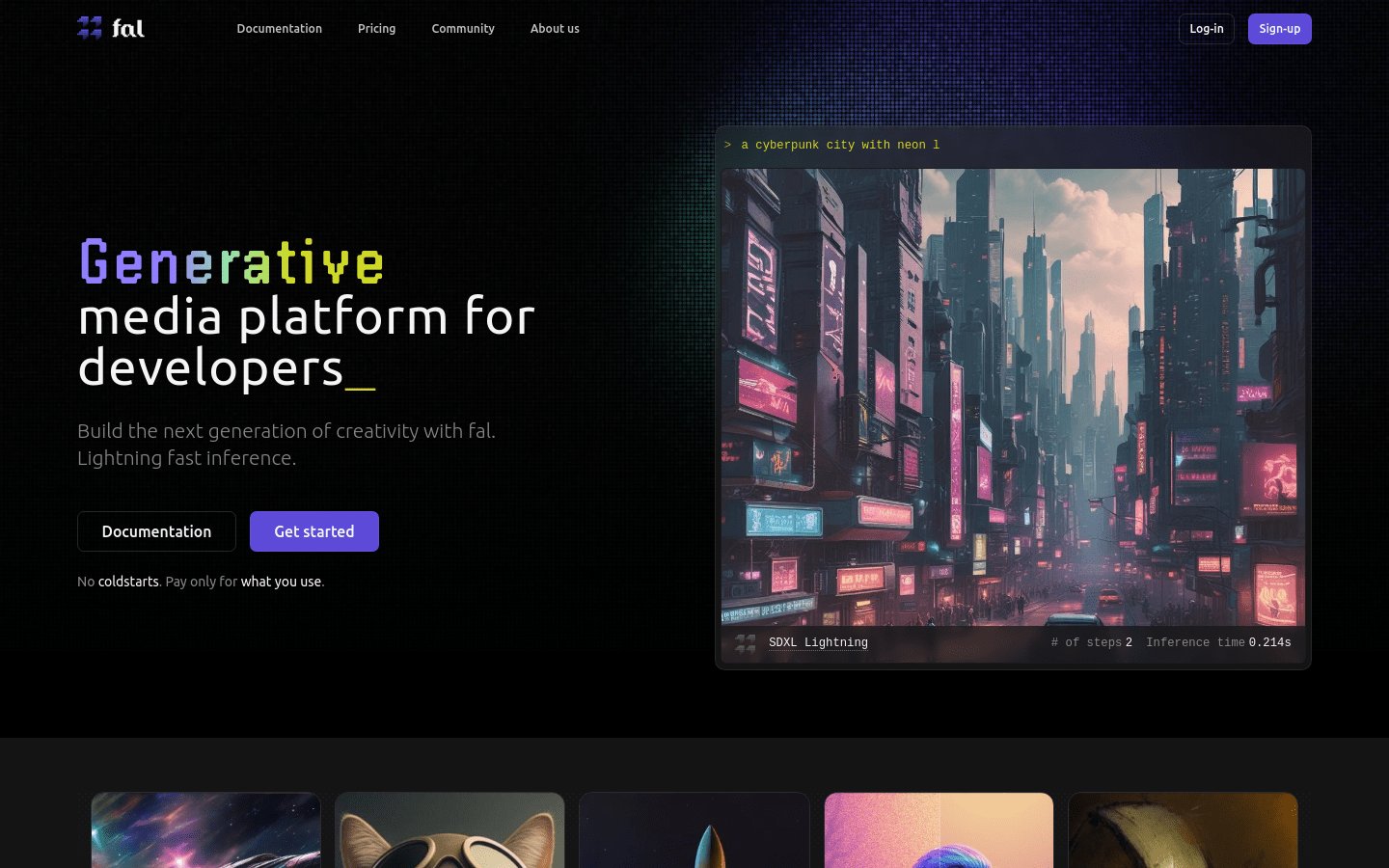

Fal AI

AI image generation developer platform

Product Details

fal.ai is a generative media platform for developers. It offers an inference engine that the company describes as the fastest in the industry, letting you run diffusion models at lower cost and build new user experiences. It also provides real-time inference over WebSockets. Pricing is usage-based: you pay only for the compute you consume, so costs scale with demand.
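As a rough illustration of usage-based billing, the sketch below sums the cost of individual inference calls. The record shape, the field names, and the per-second rate are all hypothetical and are not taken from fal.ai's actual price list.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class UsageRecord:
    """One billed inference call (hypothetical record shape)."""
    compute_seconds: float
    rate_per_second: float  # hypothetical price in USD per compute-second


def total_cost(records: Iterable[UsageRecord]) -> float:
    """Sum the cost of each call; time with no calls adds nothing."""
    return sum(r.compute_seconds * r.rate_per_second for r in records)


if __name__ == "__main__":
    calls = [
        UsageRecord(compute_seconds=2.5, rate_per_second=0.001),
        UsageRecord(compute_seconds=4.0, rate_per_second=0.001),
    ]
    print(f"Total: ${total_cost(calls):.4f}")
```

Under this model, a period with no calls costs nothing, which is the property the description above refers to.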

Main Features

How to Use

Target Users

fal.ai is aimed at developers building generative media applications.

It combines high-performance, low-cost inference with developer tools and support services, so teams can build applications quickly.

According to the listing, it covers image generation, speech recognition, and natural language processing workloads.

Its usage-based pricing and low-friction developer experience are the other main reasons developers choose it.

Examples

A startup used fal.ai's image generation model to build a social app that lets users quickly generate personalized images, and the app was well received.

A large enterprise used fal.ai's speech recognition model to build an intelligent customer service system, improving both the efficiency of its support team and customer satisfaction.

An education technology company used fal.ai's natural language processing model to build a writing assistant that helps students improve their writing, and it was well received by teachers and students.


Related Recommendations

Similar AI tools worth exploring:

Cognitora

Cognitora is a cloud platform designed for AI agents. Unlike traditional container platforms, it runs workloads in high-performance micro virtual machines such as Cloud Hypervisor and Firecracker, providing a secure, lightweight, and fast AI-native computing environment. It can execute AI-generated code, automate intelligent workloads at scale, and bridge the gap between AI inference and real-world execution. Key benefits include high performance, secure isolation, fast boot times, multi-language support, and SDKs and tooling. The platform targets AI developers and enterprises. New users who register receive 5,000 free points for testing.

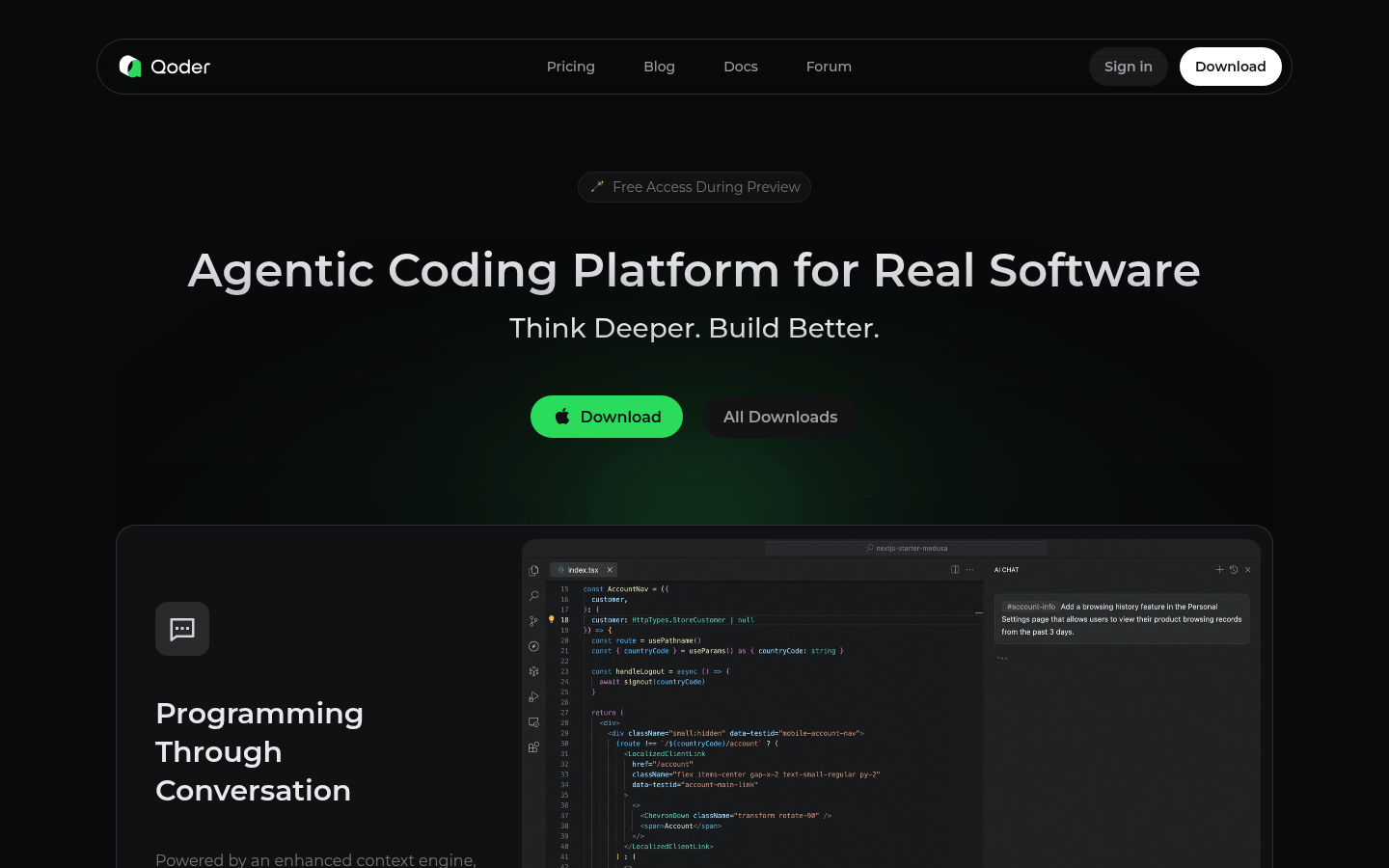

Qoder

Qoder is an agentic coding platform that combines an enhanced context engine with intelligent agents to build a comprehensive understanding of your codebase and handle software development tasks systematically. It supports leading AI models, including Claude, GPT, and Gemini, and is available for Windows and macOS.

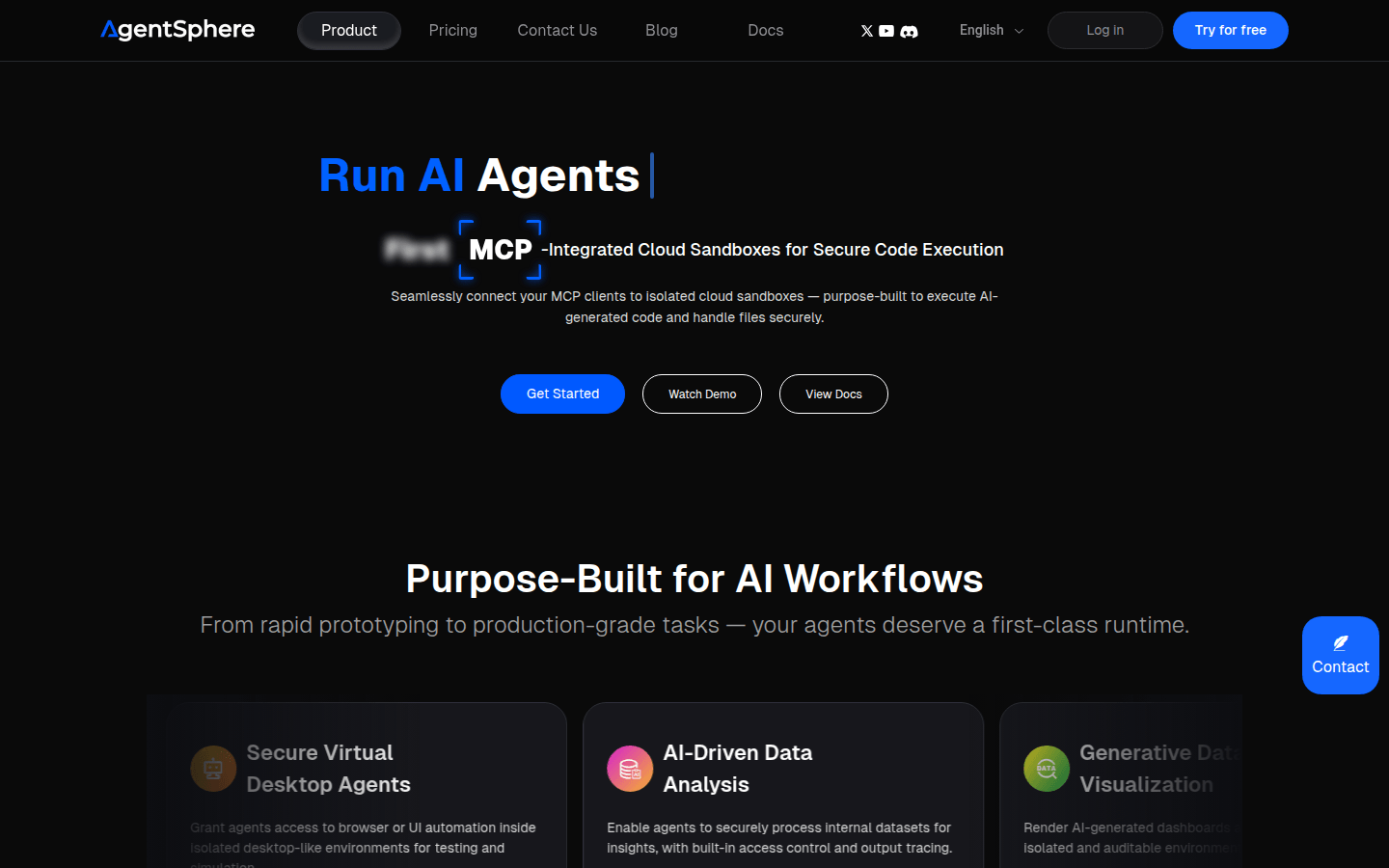

AgentSphere

AgentSphere is cloud infrastructure built specifically for AI agents, providing secure code execution and file processing for a range of AI workflows. Built-in capabilities include AI data analysis, generated data visualizations, and a secure virtual desktop for agents. It is designed to support complex workflows, DevOps integration, and LLM evaluation and fine-tuning.

DataLearner pre-training model platform

DataLearner is a resource platform for pre-trained AI models, cataloging a large number of models of different types, sizes, and application scenarios. It gives AI developers and researchers convenient access to models and lowers the barrier to model development. Its main strengths are detailed model classification, multi-dimensional filtering, detailed model information pages, and recommendations. The platform emerged in response to growing demand for pre-trained models. Some listed models are free for commercial use and others may require payment; pricing varies by model.

Pythagora

Pythagora is an all-in-one AI development platform that provides real debugging tools and production capabilities to help you ship working applications. Its main selling point is AI-assisted development throughout the build process.

Gemini 2.5

Gemini 2.5 is an AI model from Google, described at launch as its most advanced. It offers strong reasoning and coding performance, can handle complex problems, and performs well on multiple benchmarks. The model introduces new thinking capabilities, combining an enhanced base model with improved post-training to support more complex tasks for developers and enterprises. Gemini 2.5 Pro is available in Google AI Studio and the Gemini app for users who need advanced reasoning and coding capabilities.

Miaida·Generative application development platform

Miaida is Baidu's first no-code tool. It aims to let anyone turn an idea into an application by describing it in natural language, without writing code. The platform lowers the barrier to application development and improves efficiency through conversational development, multi-agent collaboration, and multi-tool invocation. Miaida is currently in a free trial phase, so individuals and enterprises can try its features at no cost.

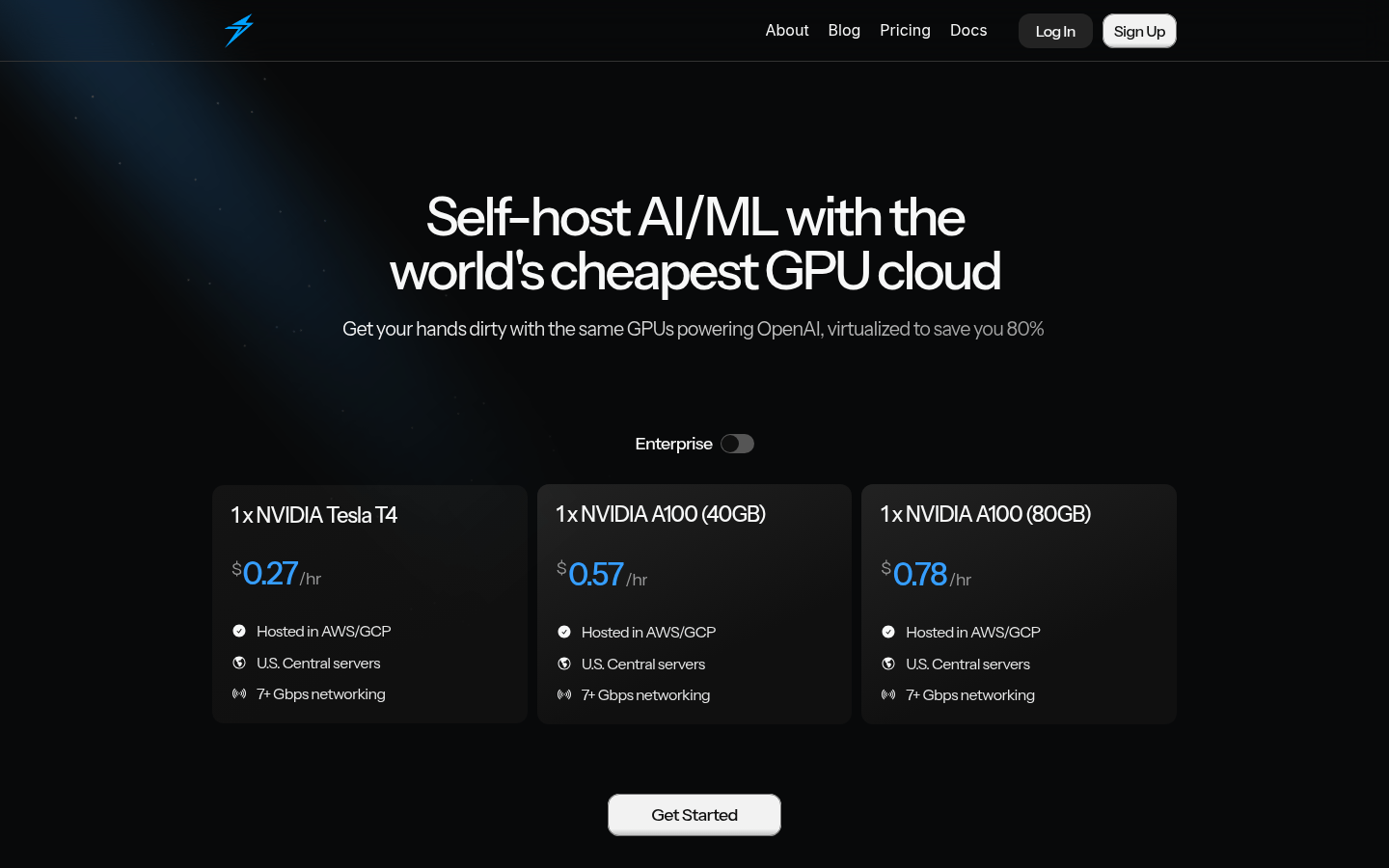

Thunder Compute

Thunder Compute is a GPU cloud platform focused on AI/ML development. It uses virtualization to give users access to high-performance GPUs at low cost, and it claims savings of up to 80% compared with traditional cloud providers. The platform supports a range of mainstream GPUs, such as the NVIDIA Tesla T4 and A100, and provides 7+ Gbps network connections for fast data transfer. Its goal is to reduce hardware costs for AI developers and enterprises and to speed up model training and deployment.

Movestax

Movestax is a cloud platform for developers that simplifies development and deployment through integrated services. It supports rapid deployment of front-end and back-end applications and provides serverless databases, automated workflows, and related features. Deployment is zero-configuration, and the platform supports a variety of mainstream frameworks and languages to help developers build, scale, and manage applications quickly. Movestax is suited to developers, startups, and SMEs that need fast development and deployment. Pricing is transparent and offered in local currency, which reduces cost uncertainty from exchange rate fluctuations.

Codev

Codev is an AI-driven development platform that turns natural language descriptions into functional, full-stack Next.js web applications. Its core advantage is shortening the time from idea to working product and lowering the barrier to entry, so that even non-developers can get started. Generated applications are built on the Next.js framework and a Supabase database, which supports good performance and scalability. Codev is aimed at developers and creatives who want to realize ideas quickly. It is currently free to use as the team works to attract users and grow its community.

Bakery

Bakery is an online platform for fine-tuning and monetizing open source AI models. It gives AI startups, machine learning engineers, and researchers a tool to fine-tune models and sell them on its marketplace. Users can create or upload datasets, adjust fine-tuning settings, and publish the resulting models through an easy-to-use interface. Bakery states that it aims to promote open source AI development and create more business opportunities for developers. Specific pricing is not clearly displayed on the page.

NVIDIA Project DIGITS

NVIDIA Project DIGITS is a desktop supercomputer powered by the NVIDIA GB10 Grace Blackwell Superchip and aimed at AI developers. It delivers one petaflop of AI performance in a compact, power-efficient form factor. The product comes with the NVIDIA AI software stack pre-installed and 128GB of memory, so developers can prototype, fine-tune, and run inference on large AI models of up to 200 billion parameters locally, then deploy them to the data center or cloud.
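To see how a 200-billion-parameter model could fit in 128GB, the following back-of-the-envelope sketch estimates weight storage at different precisions. It assumes memory is dominated by the weights and ignores activations, the KV cache, and runtime overhead; the function names are illustrative and the bit widths are assumptions, not figures from the listing.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits_in_memory(num_params: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_memory_gb(num_params, bits_per_param) <= memory_gb


if __name__ == "__main__":
    params = 200e9      # 200 billion parameters, as stated above
    memory_gb = 128.0   # memory size stated above
    for bits in (16, 8, 4):  # assumed precisions for comparison
        need = weight_memory_gb(params, bits)
        verdict = "fits" if fits_in_memory(params, bits, memory_gb) else "does not fit"
        print(f"{bits}-bit weights: {need:.0f} GB, {verdict} in {memory_gb:.0f} GB")
```

Under these assumptions only the 4-bit case (about 100 GB of weights) fits, which suggests the 200-billion-parameter figure applies to heavily quantized models.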

NVIDIA Cosmos

NVIDIA Cosmos is a world foundation model platform designed to accelerate the development of physical AI systems such as autonomous vehicles and robots. It provides a set of pre-trained generative models, advanced tokenizers, and accelerated data processing pipelines, making it easier for developers to build and optimize physical AI applications. Its open model license helps reduce development costs, and the platform is suitable for enterprises and research institutions of all sizes.

EXAONE-3.5-7.8B-Instruct-AWQ

EXAONE 3.5 is a series of instruction-tuned bilingual (English and Korean) generative models developed by LG AI Research, ranging in size from 2.4B to 32B parameters. The models support long-context processing of up to 32K tokens and demonstrate state-of-the-art performance on real-world use cases and long-context understanding, while remaining competitive in general domains with recently released models of similar size. The series includes: 1) a 2.4B model, optimized for deployment on small or resource-constrained devices; 2) a 7.8B model, matching the size of the previous generation but offering improved performance; 3) a 32B model, delivering the strongest performance.

Websparks

Websparks is an AI-driven software development platform that turns users' ideas into complete full-stack applications, including a responsive front end, a back end, and an optimized database. Users build, deploy, and scale applications through simple prompts, with real-time preview and one-click deployment. The platform aims to improve development efficiency and reduce costs for developers, designers, and anyone with a product idea.

OpenAI o1 API

OpenAI o1 is a high-performance AI model designed to handle complex multi-step tasks with high accuracy. It is the successor to o1-preview and has been used to build agentic applications that streamline customer support, optimize supply chain decisions, and forecast complex financial trends. The o1 model includes key production-ready features such as function calling, structured outputs, developer messages, and vision capabilities. The o1-2024-12-17 version achieved new top scores on multiple benchmarks while improving cost efficiency and performance.
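The sketch below shows how developer messages, function calling, and structured outputs might be combined in a single request body. It only builds and prints the JSON payload and makes no network call. The field layout follows the Chat Completions request format as commonly documented and should be checked against the current API reference; the `get_order_status` tool and the `support_reply` schema are hypothetical names invented for illustration.

```python
import json


def build_o1_request(question: str) -> dict:
    """Build a request body combining the three features (no network call)."""
    return {
        "model": "o1-2024-12-17",
        "messages": [
            # Developer message: instructions from the application developer.
            {"role": "developer", "content": "You are a concise support agent."},
            {"role": "user", "content": question},
        ],
        # Function calling: a hypothetical tool the model may ask to invoke.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_order_status",  # hypothetical tool name
                    "description": "Look up the status of an order.",
                    "parameters": {
                        "type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"],
                        "additionalProperties": False,
                    },
                },
            }
        ],
        # Structured outputs: constrain the final answer to a JSON schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "support_reply",  # hypothetical schema name
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "answer": {"type": "string"},
                        "needs_human": {"type": "boolean"},
                    },
                    "required": ["answer", "needs_human"],
                    "additionalProperties": False,
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_o1_request("Where is order 42?"), indent=2))
```

Keeping payload construction separate from the HTTP call makes the request easy to inspect and unit-test before any credentials are involved.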