AudioLCM

Efficient text-to-audio generative models with latent consistency.

Product Details

AudioLCM is a PyTorch-based text-to-audio generation model that uses a latent consistency model to generate high-quality audio efficiently. Developed by Huadai Liu and colleagues, it is released as an open source implementation with pre-trained models. It converts text descriptions into realistic audio and is particularly useful in fields such as speech synthesis and audio production.

Target Users

AudioLCM is aimed at audio engineers, speech synthesis researchers and developers, and scholars and enthusiasts interested in audio generation. It suits applications that automatically convert text descriptions into audio, such as virtual assistants, audiobook production, and language learning tools.

Examples

Use AudioLCM to generate audio readings of specific texts for use in audiobooks or podcasts.

Convert speeches from historical figures into lifelike voices for educational or exhibition use.

Generate customized voices for video game or animated characters to enhance their personality and expressiveness.

Related Recommendations

Discover similar high-quality AI tools.

EzAudio

EzAudio is an advanced text-to-audio (T2A) generation model capable of creating high-quality audio from text prompts. It sets a new standard for open source T2A models, providing fast, efficient and realistic sound effect generation.

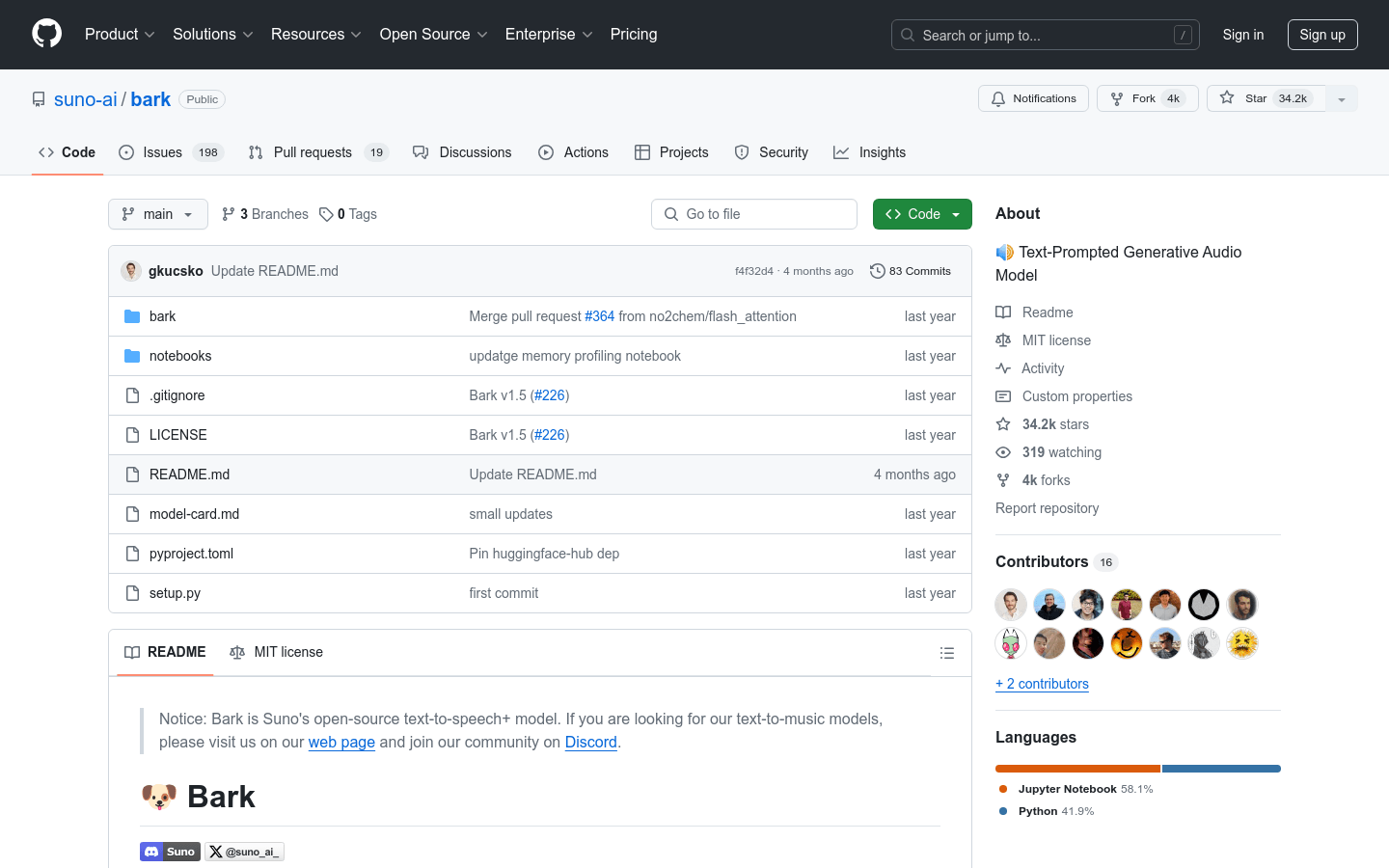

Bark

Bark is a Transformer-based text-to-audio model developed by Suno that is capable of generating realistic multilingual speech as well as other types of audio such as music, background noise, and simple sound effects. It also supports the generation of non-verbal communication such as laughter, sighs and cries. Bark supports the research community, providing pre-trained model checkpoints suitable for inference and available for commercial use.

Whisper Speech

Whisper Speech is a fully open source text-to-speech model trained by Collabora and LAION on the JUWELS supercomputer. It supports multiple languages and can be accessed in several ways, including Node.js, Python, Elixir, HTTP, Cog, and Docker. Its advantages are efficient speech synthesis and flexible deployment. Whisper Speech is completely free and is positioned as a powerful, customizable text-to-speech solution for developers and researchers.

GPT-SoVITS

GPT-SoVITS-WebUI is a powerful zero-shot voice conversion and text-to-speech WebUI. Its features include zero-shot TTS, few-shot TTS, cross-language support, and WebUI tools. It supports English, Japanese, and Chinese, and provides integrated tools, including vocal and accompaniment separation, automatic training set segmentation, Chinese ASR, and text annotation, to help beginners create training datasets and GPT/SoVITS models. Users can try instant text-to-speech conversion by supplying a 5-second voice sample, and can fine-tune the model with as little as 1 minute of training data to improve voice similarity and fidelity. The documentation covers environment preparation, supported Python and PyTorch versions, quick and manual installation, pre-trained models, dataset format, to-do items, and acknowledgments.

RealtimeTTS

RealtimeTTS is an easy-to-use, low-latency text-to-speech library for real-time applications. It can convert text streams into immediate audio output. Key features include real-time streaming synthesis and playback, advanced sentence boundary detection, modular engine design, and more. The library supports multiple text-to-speech engines and is suitable for voice assistants and applications requiring instant audio feedback. Please refer to the official website for detailed pricing and positioning information.

StyleTTS 2

StyleTTS 2 is a text-to-speech (TTS) model that uses style diffusion and adversarial training with large speech language models (SLMs) to achieve human-level TTS synthesis. It models style as a latent random variable through a diffusion model, generating the style best suited to the text without requiring reference speech. It also uses large pre-trained SLMs (such as WavLM) as discriminators, combined with a novel differentiable duration modeling approach for end-to-end training, which improves the naturalness of the speech. As judged by native English speakers, StyleTTS 2 surpasses human recordings on the single-speaker LJSpeech dataset and matches them on the multi-speaker VCTK dataset. When trained on the LibriTTS dataset, it also outperforms previous publicly available models for zero-shot speaker adaptation. The work demonstrates the potential of style diffusion and adversarial training with large SLMs, achieving human-level TTS synthesis on both single-speaker and multi-speaker datasets.

AutoMusic

AutoMusic is an AI song maker that quickly converts text or lyrics into original music. It lowers the barrier to music creation, allowing people without a musical background to compose songs easily. Its main advantages are fast creation and simple operation, and the generated music is described as completely free to use with no copyright issues. It was developed to meet the demand from music lovers and creators for convenient music creation tools. You can start using it for free, but credits may be required to generate songs. It is aimed at creators in many fields, from ordinary users creating for entertainment to professionals producing projects.

Suno V5

Suno V5 is described as a leading AI music generation platform. Its AI technology identifies music styles accurately and supports seamless style mixing and faithful style reproduction. The platform can create professional music up to 8 minutes long with studio-level sound quality, suitable for a variety of commercial uses. Basic functions are free, and a professional version at US$29 and a studio version at US$99 are also available. It is aimed at the music creation needs of content creators, enterprises, and professional media producers.

Suno V5 App

Suno V5 music generator is an independent music generator built on the capabilities of the Suno V5 model and is not an official product. It offers studio-level vocal generation, multi-instrument support, and local track editing. Its main advantages include very fast generation of high-quality finished tracks, linkage between style templates and lyrics, and controllable song structure. The product offers a free quota and pay-per-use pricing. New users receive free trial credits and can earn additional credits through daily check-ins and other methods. It is suitable for startups, creators, and music technology innovators.

AISong.org

AI Song is an online music creation platform that uses AI to quickly turn user ideas into professional music. It is suitable for creators, musicians, and content producers, who can create music without any prior music experience. A limited free tier is available alongside paid plans. It supports 30 music styles, produces professional studio-quality output, and grants full commercial rights.

AI Song Online

AI Song is an AI music generator designed to provide creators and artists with functions such as generating music, writing lyrics, and extending audio tracks. It's fast, convenient, and suitable for all kinds of creators. AI Song has the advantages of rapid generation, free storage, and multiple functional modes. It is a powerful music creation tool.

aimusicmaker

AI Music Maker is an AI music generator that can easily generate original songs from text or lyrics. It simplifies the entire creative process, requiring no complex setup or knowledge of music theory, just your imagination. This product provides high-quality music output and is suitable for a variety of creative projects and music creation needs.

Suno

Suno is an AI music generator that helps users create high-quality music in seconds without professional skills. It is free to use, with several paid plans also available. It is built on market-leading AI music generation technology and targets users who want to create music but lack professional skills.

BPM Finder

BPM Finder is a BPM analysis tool that accurately detects the tempo of any audio source using three analysis modes. It gives music creators and DJs professional BPM detection for accurate rhythm analysis.

Free AI Vocal Remover & Stem Splitter

Music and Voice Separation is an online service that uses advanced AI technology to separate vocals and accompaniment in music. Its main advantages are that it is fast, free and requires no login, helping users to easily separate different elements in their music.