AI PhotoCaption

An AI application that automatically generates captions for social media pictures.

Product Details

AI PhotoCaption—Text Generator is an application that uses advanced GPT-4 Vision technology to automatically generate attractive social media captions for pictures uploaded by users. It analyzes image content, provides multiple language options, and allows users to choose different tone styles to adapt to the characteristics of different social media platforms. The app is designed to save users time, increase post engagement, and showcase users’ creativity through unique AI-enhanced captions while enabling cross-cultural communication.
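
The flow described above (an uploaded image plus language and tone options, turned into a caption request) can be sketched roughly as follows. This is only an illustrative sketch, not the product's actual implementation, which is not documented here. The helper `build_caption_request`, the `TONES` set, the placeholder model name, and the prompt wording are all hypothetical. The message layout mirrors the chat-style image-input format used by the OpenAI Chat Completions API, and the code only builds the payload without making any network call.

```python
import base64

# Hypothetical tone options; the product page does not enumerate them.
TONES = {"playful", "professional", "inspirational"}

# Placeholder model name (assumption); substitute a real vision-capable model.
DEFAULT_MODEL = "your-vision-model"


def build_caption_request(image_bytes, language="English", tone="playful",
                          platform="Instagram", model=DEFAULT_MODEL):
    """Build a chat-style request payload asking for one social media caption."""
    if tone not in TONES:
        raise ValueError(f"unsupported tone: {tone!r}")

    # Embed the image inline as a base64 data URL (JPEG assumed here).
    encoded = base64.b64encode(image_bytes).decode("ascii")
    prompt = (
        f"Write one {tone} caption in {language} for this photo, "
        f"suitable for posting on {platform}."
    )
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": 120,
    }
```

A caller would pass the raw bytes of the uploaded photo, for example `build_caption_request(photo_bytes, language="Spanish", tone="professional", platform="LinkedIn")`, and send the resulting payload to whichever vision model backs the service.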

Main Features

How to Use

Target Users

This product is suitable for social media enthusiasts, influencers and marketers, as well as global citizens, helping them share photos in a more efficient and engaging way and enhance the interactivity of social media posts.

Examples

Social media users use AI PhotoCaption to quickly generate attractive picture captions and increase the likes and shares of posts.

Marketers use AI technology to generate creative text for product images to enhance advertising effects.

Global citizens use multi-language support to share life moments with friends in other countries.

Related Recommendations

Discover more high-quality AI tools similar to this one.

Shutubao

Shutubao is a batch generation tool designed to make image-and-text production more efficient. It quickly generates large numbers of images by combining personalized templates with copywriting data, and it is suitable for image-and-text content on platforms such as Xiaohongshu, Douyin, and video accounts. According to the product's background information, Shutubao can greatly improve production efficiency and reduce costs, which makes it especially suitable for companies or individuals that need large volumes of graphic content. It is offered in annual and permanent packages to meet the needs of different users.

MM1.5

MM1.5 is a family of multimodal large language models (MLLMs) designed to enhance text-rich image understanding, visual referring and grounding, and multi-image reasoning. It builds on the MM1 architecture and takes a data-centric approach to training, systematically exploring the impact of different data mixtures across the entire training life cycle. MM1.5 models range from 1B to 30B parameters and include both dense and mixture-of-experts (MoE) variants. Extensive empirical studies and ablations document the training process and design decisions, providing guidance for future MLLM research.

NVLM-D-72B

NVLM-D-72B is a multi-modal large language model released by NVIDIA. It focuses on vision-language tasks, and its text performance also improves through multi-modal training. The model achieves results comparable to industry-leading models on vision-language benchmarks.

Llama-3.2-11B-Vision

Llama-3.2-11B-Vision is a multi-modal large language model (LLM) released by Meta that combines image and text processing. It aims to improve performance on visual recognition, image reasoning, image description, and answering general questions about images. The model outperforms numerous open source and closed multi-modal models on common industry benchmarks.

Llama-3.2-90B-Vision

Llama-3.2-90B-Vision is a multi-modal large language model (LLM) released by Meta, focusing on visual recognition, image reasoning, image description, and answering general questions about images. The model outperforms many existing open source and closed multi-modal models on common industry benchmarks.

NVLM

NVLM 1.0 is a series of cutting-edge multi-modal large language models (LLMs) that achieve results on vision-language tasks comparable to leading proprietary and open-access models. Notably, after multi-modal training, NVLM 1.0's text performance even surpasses that of its LLM backbone. The model weights and code have been open-sourced for the community.

Pixtral-12B-2409

Pixtral-12B-2409 is a multi-modal model developed by the Mistral AI team, containing a 12B parameter multi-modal decoder and a 400M parameter visual encoder. The model performs well in multi-modal tasks, supports images of different sizes, and maintains state-of-the-art performance on text benchmarks. It is suitable for advanced applications that need to process image and text data, such as image description generation, visual question answering, etc.

Pixtral 12B

Pixtral 12B is a multi-modal AI model developed by the Mistral AI team that understands natural images and documents and has excellent multi-modal task processing capabilities while maintaining state-of-the-art performance on text benchmarks. The model supports multiple image sizes and aspect ratios and is capable of processing any number of images in long context windows. It is an upgraded version of Mistral Nemo 12B and is designed for multi-modal inference without sacrificing critical text processing capabilities.

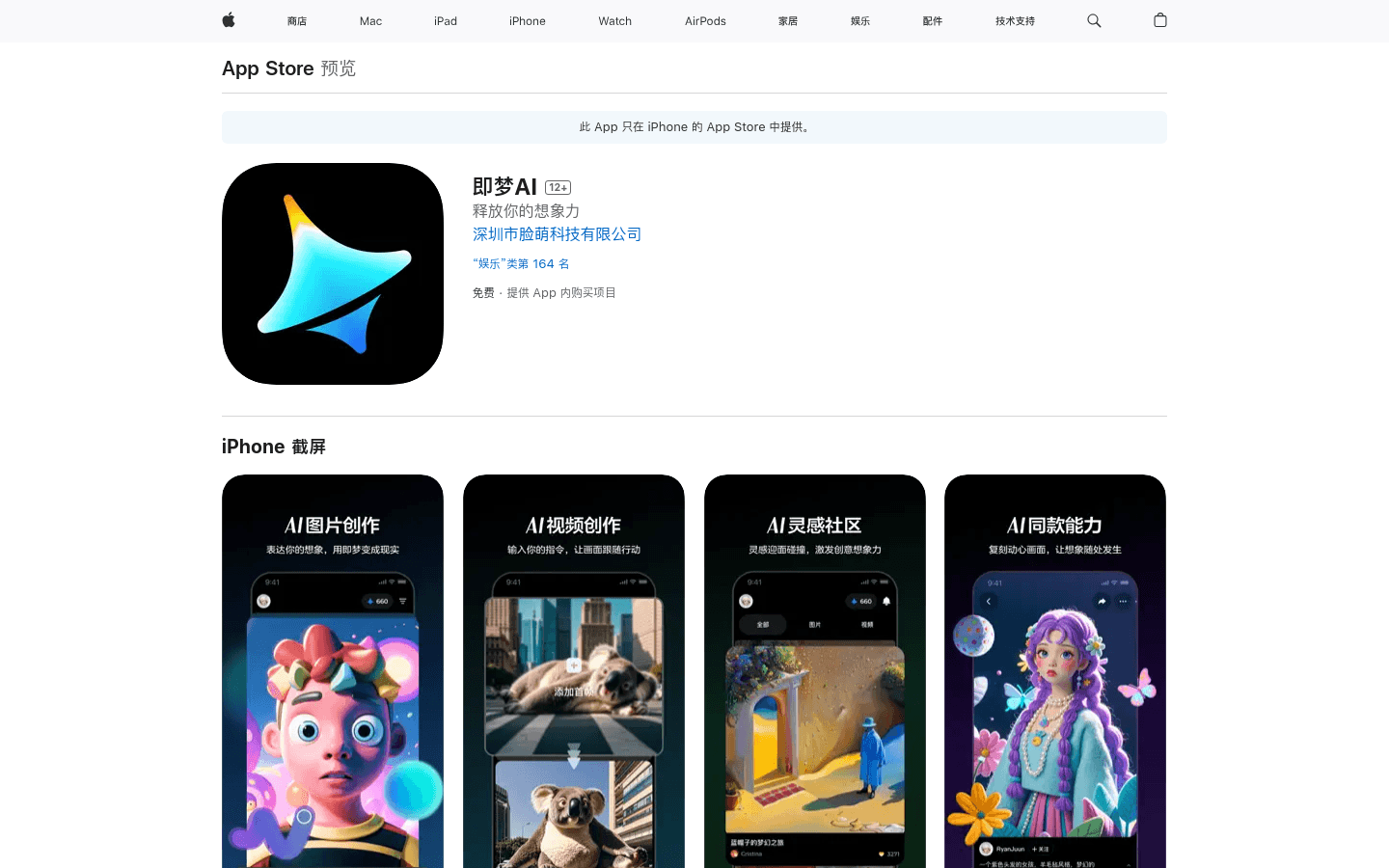

Jimeng AI (Dream AI)

Jimeng AI is an AI expression platform built for creative enthusiasts. It generates unique pictures and videos from natural language descriptions and supports editing and sharing, letting users fully display their imagination. Developed by Shenzhen Facemeng Technology Co., Ltd., it offers a Jimeng membership subscription that unlocks additional privileges.

SEED-Story

SEED-Story is a multi-modal long story generation model based on a multi-modal large language model (MLLM). From pictures and text provided by users, it generates rich, coherent narrative text together with pictures in a consistent style. It applies current AI techniques to creative writing and visual art, and its ability to generate high-quality multi-modal story content opens new possibilities for the creative industry.

BizyAir

BizyAir is a plug-in developed by siliconflow that helps users overcome environment and hardware limitations so they can use ComfyUI more easily to generate high-quality content. It is designed to run in any environment, regardless of the local hardware.

Glyph-ByT5-v2

Glyph-ByT5-v2 is a model from Microsoft Research Asia for accurate multilingual visual text rendering. It supports accurate visual text rendering in 10 different languages and also offers significant improvements in aesthetic quality. The work creates high-quality multilingual glyph-text and graphic design datasets, builds a multilingual visual paragraph benchmark, and leverages recent step-aware preference learning methods to improve visual aesthetic quality.

Phi-3-vision-128k-instruct

Phi-3 Vision is a lightweight, state-of-the-art open multi-modal model built on datasets that include synthetic data and filtered publicly available websites, with a focus on very high-quality, reasoning-dense data for both text and vision. The model belongs to the Phi-3 model family, and the multi-modal version supports a 128K context length (in tokens). It has undergone a rigorous enhancement process that combines supervised fine-tuning and direct preference optimization to ensure precise instruction following and robust safety measures.

Mini-Gemini

Mini-Gemini is a multi-modal model developed by the team of Jiaya Jia, a tenured professor at the Chinese University of Hong Kong. It offers accurate image understanding and is trained on high-quality data. The model combines image reasoning and image generation, and it is available in several sizes with performance reported as comparable to GPT-4 and DALL-E 3. Mini-Gemini uses a dual-branch visual information mining method together with SDXL: it encodes images with a convolutional network, uses an attention mechanism to mine information, and relies on an LLM to generate the text that links the two models.

EMAGE

EMAGE is a unified, holistic co-speech gesture generation model that produces natural gesture movements through expressive masked audio gesture modeling. It captures speech and prosodic information from audio input and generates corresponding sequences of body postures and gestures. EMAGE can generate highly dynamic and expressive gestures, enhancing the interactive experience of virtual characters.

AI Comic Factory

AI Comic Factory uses large language models and SDXL to automatically generate expressive, story-driven comic content. Users only need to provide simple text prompts, and AI Comic Factory generates comics that contain character dialogue and scene descriptions. It supports multiple configurations, user interaction, multi-language content creation, batch generation of comic variants, and other functions.