MotionClone

Training-free motion cloning for controllable video generation

Product Details

MotionClone is a training-free framework that clones motion from a reference video to control text-to-video generation. It represents the motion of the reference video with temporal attention obtained through video inversion, and it introduces primary temporal-attention guidance to reduce the influence of noisy or very subtle motion in the attention weights. In addition, MotionClone proposes a location-aware semantic guidance mechanism to help the generative model synthesize plausible spatial relationships and follow the prompt more closely. This mechanism combines the coarse location of the foreground in the reference video with the original classifier-free guidance features.
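The sketch below illustrates the guidance idea in pure Python. It is not MotionClone's code. All function names are hypothetical, and the attention maps are plain nested lists for a single spatial location. The top-k sparsification is an assumption about how the "primary" attention components are selected. The sketch computes a temporal attention map over frames and keeps only the largest entries in each row of the reference map. It then measures how far the generated video's map deviates from the reference on those entries.

```python
import math

def softmax(xs):
    # Numerically stable softmax over one row of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_attention(q, k):
    """Temporal self-attention map at ONE spatial location.

    q, k: per-frame query/key vectors, shape [F][d].
    Returns an F x F map; row i says how much frame i attends to each frame.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    return [softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]) for qi in q]

def primary_mask(attn_ref, k=1):
    """Keep only the k largest entries of each row of the reference map.

    Assumption: this top-k rule stands in for selecting the "primary"
    components; small, noisy weights are masked out.
    """
    mask = []
    for row in attn_ref:
        top = set(sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k])
        mask.append([1.0 if j in top else 0.0 for j in range(len(row))])
    return mask

def motion_guidance_loss(attn_ref, attn_gen, k=1):
    """Masked squared distance between reference and generated temporal attention."""
    mask = primary_mask(attn_ref, k)
    return sum(
        m * (r - g) ** 2
        for m_row, r_row, g_row in zip(mask, attn_ref, attn_gen)
        for m, r, g in zip(m_row, r_row, g_row)
    )

attn_ref = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]
attn_gen = [[0.5, 0.3, 0.2], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]
print(motion_guidance_loss(attn_ref, attn_gen, k=1))  # only row 0's top entry differs
```

In a guidance setup, the gradient of such a loss with respect to the noisy latent would nudge each denoising step toward the reference motion. That step needs an autodiff framework and is omitted here. Because small weights are masked out, noise in the reference attention contributes nothing to the loss.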

Target Users

MotionClone suits video makers, animators, and researchers because it generates video content quickly without any model training. For professionals who need videos driven by specific text prompts, it offers an efficient and flexible tool.

Examples

Animators use MotionClone to quickly generate animated video sketches based on scripts

Video producers use MotionClone to generate preliminary versions of video content based on scripts

Researchers use MotionClone for research and development of video generation technology

Related Recommendations

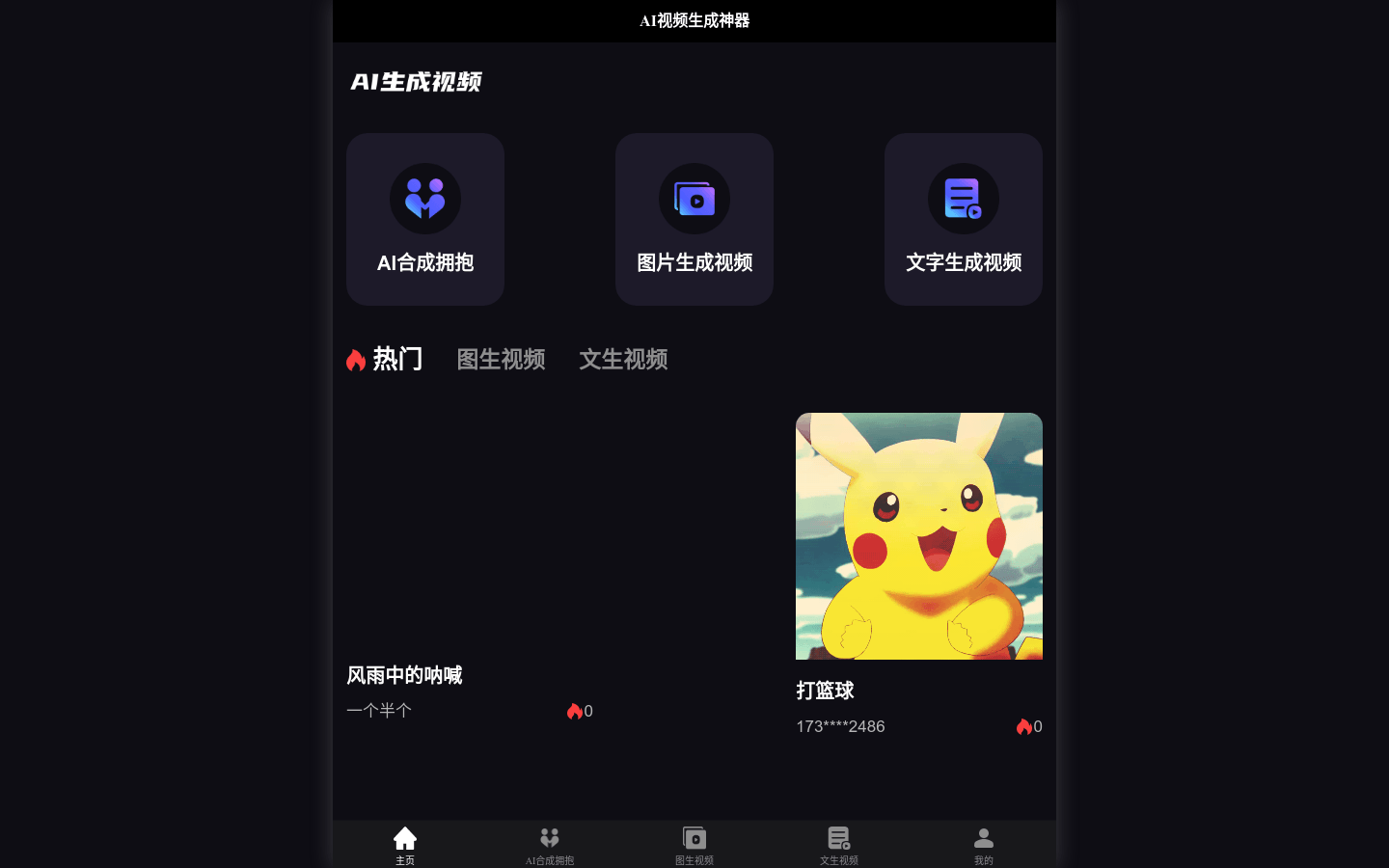

Jingyi Intelligent AI Video Generation

Jingyi Intelligent AI Video Generation Artifact is a product that uses artificial intelligence to turn static old photos into dynamic videos. It combines deep learning with image processing so that users can easily bring treasured old photos to life and create memorable video content. Its main advantages are ease of use, realistic results, and personalized customization. Individual users can use it to organize and reinvent their family photo collections, and business users gain a novel channel for marketing and publicity. A free trial is currently available, but specific pricing and positioning details are not yet available.

TANGO Model

TANGO is a co-speech gesture video reenactment technology based on hierarchical audio-motion embedding and diffusion interpolation. It uses AI algorithms to convert speech signals into matching gesture movements, so that the gestures of the person in the video are reproduced naturally. The technology has broad potential applications in video production, virtual reality, augmented reality, and other fields, where it can make video content more interactive and realistic. TANGO was jointly developed by the University of Tokyo and CyberAgent AI Lab and reflects current cutting-edge work in gesture recognition and motion generation.
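One way to picture matching speech to gestures through a shared audio-motion embedding is nearest-neighbour retrieval by cosine similarity. The sketch below is a hypothetical illustration, not TANGO's implementation. It assumes that audio segments and candidate motion clips have already been embedded into the same vector space. It also assumes that clips are indexed in their original temporal order. The sketch only flags boundaries where consecutive picks are not contiguous. According to the description above, those are the points where diffusion interpolation would have to generate transition frames.

```python
import math

def cosine(a, b):
    # Cosine similarity; returns 0.0 if either vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve_motion_clip(audio_emb, clip_embs):
    """Return (index, score) of the clip embedding most similar to the audio embedding."""
    best_i, best_s = -1, -float("inf")
    for i, emb in enumerate(clip_embs):
        s = cosine(audio_emb, emb)
        if s > best_s:
            best_i, best_s = i, s
    return best_i, best_s

def plan_clips(audio_segments, clip_embs):
    """Retrieve one clip per audio segment and flag boundaries needing transitions.

    Assumption: clips are indexed in temporal order, so any jump other than +1
    means the frames do not line up and transition frames would be needed.
    """
    picks = [retrieve_motion_clip(a, clip_embs)[0] for a in audio_segments]
    needs_transition = [picks[i + 1] != picks[i] + 1 for i in range(len(picks) - 1)]
    return picks, needs_transition

# Hypothetical 2-D embeddings for three motion clips.
clips = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(plan_clips([[0.0, 1.0], [1.0, 0.0]], clips))  # picks clip 1 then clip 0
```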

Coverr AI Workflows

Coverr AI Workflows is a platform focused on AI video generation. It provides a variety of AI tools and workflows that help users produce high-quality video content in a few simple steps. The platform draws on the expertise of AI video specialists, and through workflows shared by the community, users learn how to combine different AI tools to create videos. Coverr AI Workflows responds to the growing use of artificial intelligence in video production. Its workflows are easy to understand and follow, which lowers the technical barrier to video creation and lets non-professionals create professional-level video content. It currently offers free video and music resources and targets the video production needs of creative professionals and small businesses.

AI video generation artifact

AI video generation artifact is an online tool that uses artificial intelligence to convert images or text into video content. Its deep learning algorithms interpret the meaning of images and text and automatically generate engaging video. This approach greatly reduces the cost and skill required for video production, so ordinary users can easily produce professional-level videos. With the rise of social media and video platforms, demand for video content keeps growing. Traditional video production, however, is costly and time-consuming and struggles to keep pace with fast-changing market demand. Tools like this fill that gap by offering a fast, low-cost way to produce video. A free trial is currently available; check the website for specific pricing.

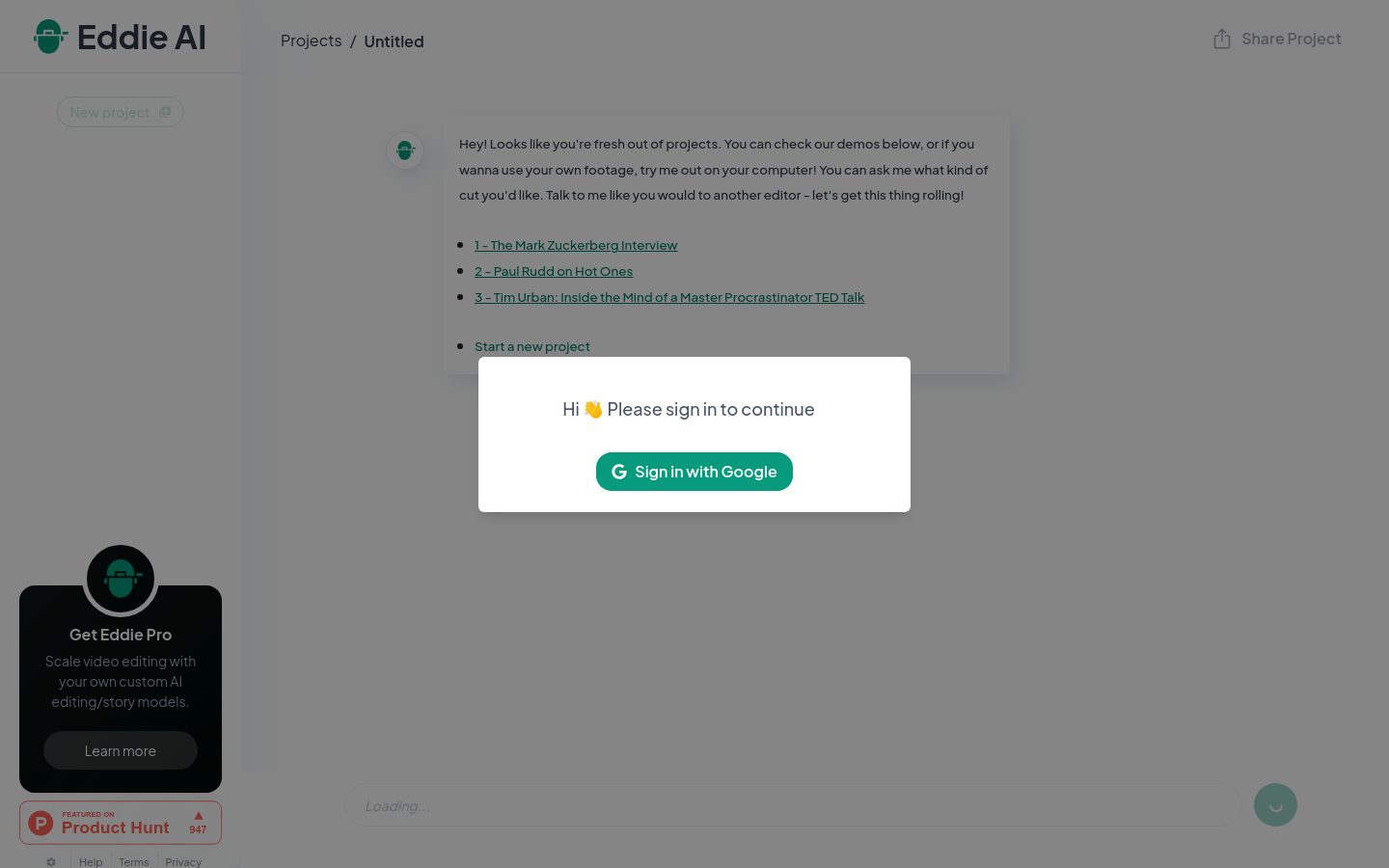

Eddie AI

Eddie AI is a video editing platform that uses artificial intelligence to help users edit videos quickly and easily. Its main advantages are usability and efficiency. Users talk to the AI as they would to another editor, describing the kind of clip they want. Eddie AI aims to scale video editing through custom AI editing and storytelling models, which suggests it could significantly change how video is produced.

Pyramid Flow

Pyramid Flow is an efficient video generative modeling technique based on flow matching and implemented as an autoregressive video generation model. Its main advantage is training efficiency: it can be trained on open-source datasets in relatively few GPU hours and still generate high-quality video. Pyramid Flow was jointly developed by Peking University, Kuaishou Technology, and Beijing University of Posts and Telecommunications. The associated paper, code, and models have been released on multiple platforms.
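The core of flow matching fits in a few lines. The sketch below uses the generic straight-path (rectified-flow style) formulation. It is not Pyramid Flow's pyramidal, autoregressive variant. The convention that t=0 is noise and t=1 is data is an assumption, and so are all the function names. A model would be trained to predict the target velocity at interpolated points. Sampling then integrates that velocity from noise to data.

```python
def interpolate(x0, x1, t):
    """Point at time t on the straight path from noise x0 (t=0) to data x1 (t=1)."""
    return [(1 - t) * a + t * b for a, b in zip(x0, x1)]

def target_velocity(x0, x1):
    """Velocity of that straight path; it is constant in t."""
    return [b - a for a, b in zip(x0, x1)]

def flow_matching_loss(pred_v, x0, x1):
    """Mean squared error between a predicted velocity and the target velocity."""
    v = target_velocity(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(pred_v, v)) / len(v)

def euler_sample(velocity_fn, x0, steps=10):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with fixed Euler steps."""
    x, dt = list(x0), 1.0 / steps
    for i in range(steps):
        v = velocity_fn(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

x0 = [0.0, 2.0]   # a "noise" sample
x1 = [1.0, -2.0]  # a "data" sample
print(euler_sample(lambda x, t: target_velocity(x0, x1), x0, steps=4))
```

Here the model is replaced by the exact velocity for a single noise/data pair, so Euler integration lands on the data sample. A trained network would only approximate this velocity field.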

AI Hug Video

AI Hug Video Generator is an online platform that uses machine learning to transform static photos into dynamic, lifelike hug videos. Users can create personalized, emotion-filled videos from their treasured photos. The technology creates photorealistic digital hugs by analyzing real human interactions, including subtle gestures and emotions. The platform's user-friendly interface makes it easy for both technology enthusiasts and video production novices to create AI hug videos. The resulting videos are high-definition and suitable for sharing on any platform.

LLaVA-Video

LLaVA-Video is a large multimodal model (LMM) focused on video instruction tuning. Curating large amounts of high-quality raw data from the web is difficult, so its authors created a high-quality synthetic dataset, LLaVA-Video-178K. The dataset covers tasks such as detailed video description, open-ended question answering, and multiple-choice question answering. It is designed to improve the understanding and reasoning abilities of video language models. The LLaVA-Video model performs well on multiple video benchmarks, demonstrating the effectiveness of the dataset.

JoggAI

JoggAI is a platform that uses artificial intelligence to help users quickly convert product links or visual assets into engaging video ads. It offers a rich set of templates, diverse AI avatars, and fast-response services to create engaging content that drives website traffic and sales. Its main features include rapid video creation, AI script writing, batch production, video clip understanding, and text-to-speech conversion. These features make JoggAI well suited to e-commerce sellers, marketers, sales teams, business owners, agencies, and freelancers who need to produce video content efficiently.

Hailuo AI

Hailuo AI Video Generator is a tool that uses artificial intelligence to automatically generate video content from text prompts. Its deep learning algorithms turn users' text descriptions into video, which greatly simplifies video production and speeds up creation. The product suits individuals and businesses that need to produce video content quickly, especially for advertising, social media content, and movie trailers.

Lighting AI

Guangying AI (Lighting AI) is a platform that uses artificial intelligence to help users quickly create popular videos. It simplifies video editing with AI, so users can produce high-quality video content without video editing skills. The platform is particularly suitable for individuals and businesses that need to produce video content quickly, such as social media operators and video bloggers.

Meta Movie Gen

Meta Movie Gen is a set of advanced media foundation models. With simple text input, users can generate customized video and sound, edit existing videos, or turn personal images into unique videos. The technology represents a recent advance in AI-assisted content creation and gives content creators greater creative freedom and efficiency.

JoyHallo

JoyHallo is a digital human model designed for Mandarin video generation. Its authors built the jdh-Hallo dataset by collecting 29 hours of Mandarin video from employees of JD Health International Co., Ltd. The dataset covers a range of ages and speaking styles, including everyday conversation and professional medical topics. JoyHallo uses a Chinese wav2vec2 model for audio feature embedding. It also proposes a semi-decoupled structure that captures the relationships among lip, expression, and pose features, which improves information utilization and speeds up inference by 14.3%. JoyHallo also performs well at generating English videos, demonstrating strong cross-language generation capabilities.

MIMO

MIMO is a generalizable video synthesis model that can depict anyone interacting with objects while performing complex motions. From simple user-provided inputs such as reference images, pose sequences, and scene videos or images, it synthesizes character videos with controllable attributes (character, motion, and scene). MIMO achieves this by encoding 2D video into compact spatial codes and decomposing it into three spatial components: the main character, the underlying scene, and floating occlusions. This design provides flexible user control, expressive motion, and 3D-aware synthesis, making the model suitable for interactive real-world scenarios.
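A depth-based labelling rule can illustrate the three-way split. This is a hypothetical sketch, not MIMO's pipeline. It assumes that a per-pixel depth map (smaller values closer to the camera) and a human segmentation mask are already available. It treats any non-human pixel closer than the character's median depth as a floating occlusion and assigns everything else to the scene.

```python
from statistics import median

def decompose_layers(depth, human_mask):
    """Label each pixel as 'human', 'occlusion', or 'scene'.

    depth: H x W nested list of floats (assumption: smaller = closer to camera).
    human_mask: H x W nested list of bools from a human segmenter.
    """
    human_depths = [
        d
        for d_row, m_row in zip(depth, human_mask)
        for d, m in zip(d_row, m_row)
        if m
    ]
    if not human_depths:
        # No character detected: every pixel belongs to the scene layer.
        return [["scene"] * len(row) for row in depth]
    char_depth = median(human_depths)
    labels = []
    for d_row, m_row in zip(depth, human_mask):
        labels.append([
            "human" if m else ("occlusion" if d < char_depth else "scene")
            for d, m in zip(d_row, m_row)
        ])
    return labels

def layer_mask(labels, name):
    """Boolean mask (nested list) selecting one layer."""
    return [[lab == name for lab in row] for row in labels]

depth = [[5.0, 1.0, 5.0], [5.0, 3.0, 5.0]]
mask = [[False, False, False], [False, True, False]]
print(decompose_layers(depth, mask))
```

The median keeps the character's reference depth robust to a few mislabelled mask pixels. A real system would still need to handle characters whose depth varies strongly across the body.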

LVCD

LVCD is a reference-based lineart video colorization technique that uses a large-scale pretrained video diffusion model to generate colorized animation videos. It uses a Sketch-guided ControlNet and Reference Attention to colorize animation containing fast, large motions while keeping the result temporally coherent. Its main advantages are temporal coherence, the ability to handle large motions, and high-quality output.
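Reference-based colorization works by letting each frame look at the colored reference. The minimal sketch below assumes that reference attention concatenates the reference frame's tokens to the keys and values of the frame being processed. It omits the learned query, key, and value projections and uses the tokens directly. The sketch is illustrative and is not LVCD's code.

```python
import math

def softmax(xs):
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]

def reference_attention(frame_tokens, ref_tokens):
    """Each token of the current frame attends over the frame's own tokens plus
    the reference frame's tokens (keys/values concatenated).

    Assumption: tokens are used directly as queries, keys, and values.
    """
    kv = frame_tokens + ref_tokens
    return [attend(q, kv, kv) for q in frame_tokens]

frame = [[5.0, 0.0]]  # hypothetical token: [line-art feature, color feature]
ref = [[5.0, 5.0]]    # matching reference token that carries color
print(reference_attention(frame, ref))
```

In this example the frame token has no value in its "color" channel, yet the output picks one up from the reference token. This attention pattern is what allows color to transfer from the reference to the current frame.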

ComfyUI-LumaAI-API

ComfyUI-LumaAI-API is a plug-in for ComfyUI that lets users call the Luma AI API directly from ComfyUI. The Luma AI API is built on Dream Machine, the video generation model developed by Luma. The plug-in adds a variety of nodes, such as text to video, image to video, and video preview. These nodes broaden the video generation options available in ComfyUI and give video creators and developers convenient tools.