Awesome-Cluade-Artifacts

Share interesting content generated by Anthropic’s AI assistant Claude

Product Details

Awesome-Cluade-Artifacts is a GitHub repository dedicated to collecting and showcasing interesting, substantive content generated in conversations with Anthropic's AI assistant Claude. This content can include code snippets, Markdown documents, HTML pages, SVG images, Mermaid charts, and React components. The project encourages community members to share Claude Artifacts they find interesting, useful, or creative, and provides detailed contribution guidelines.

Main Features

How to Use

Target Users

The target audience is mainly developers, designers, and creative workers who are interested in AI-generated content. They can find inspiration here, learn how to use AI assistants to generate various kinds of content, or share their own work to gain recognition and feedback from the community.

Examples

An AI-generated job search assistant/CRM system

A snake game played by an AI

A 3D game with physics effects

An unfinished Worms-like full-screen game

A word cloud generator that takes a URL as input

A double pendulum physics simulator with an adjustable initial angle for switching between regular and chaotic motion


Related Recommendations

Discover more high-quality AI tools like this one

Skywork-Reward-Gemma-2-27B

Skywork-Reward-Gemma-2-27B is an advanced reward model based on the Gemma-2-27B architecture, designed to handle preferences in complex scenarios. It was trained on 80K high-quality preference pairs drawn from multiple domains, including mathematics, programming, and safety. As of September 2024, it ranked first on the RewardBench leaderboard, demonstrating strong preference-modeling capabilities.
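RewardBench measures how often a reward model scores the preferred response of a pair above the rejected one. Reward models trained on pairwise preference data are commonly optimized with a Bradley-Terry style loss; the sketch below assumes that objective for illustration and is not Skywork's published training code. The rewards are plain floats standing in for the model's scalar outputs, and the function names are hypothetical.

```python
import math

# Hypothetical helper names; a sketch of a generic pairwise objective.

def bradley_terry_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)) for one preference pair."""
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)) equals -log(sigmoid(margin)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def mean_pairwise_loss(pairs) -> float:
    """Average loss over (reward_chosen, reward_rejected) pairs."""
    return sum(bradley_terry_loss(c, r) for c, r in pairs) / len(pairs)

def preference_accuracy(pairs) -> float:
    """Fraction of pairs where the chosen response gets the higher reward."""
    return sum(1 for c, r in pairs if c > r) / len(pairs)
```

Training pushes the loss down by widening the margin between chosen and rejected rewards, which is exactly what the accuracy metric rewards.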

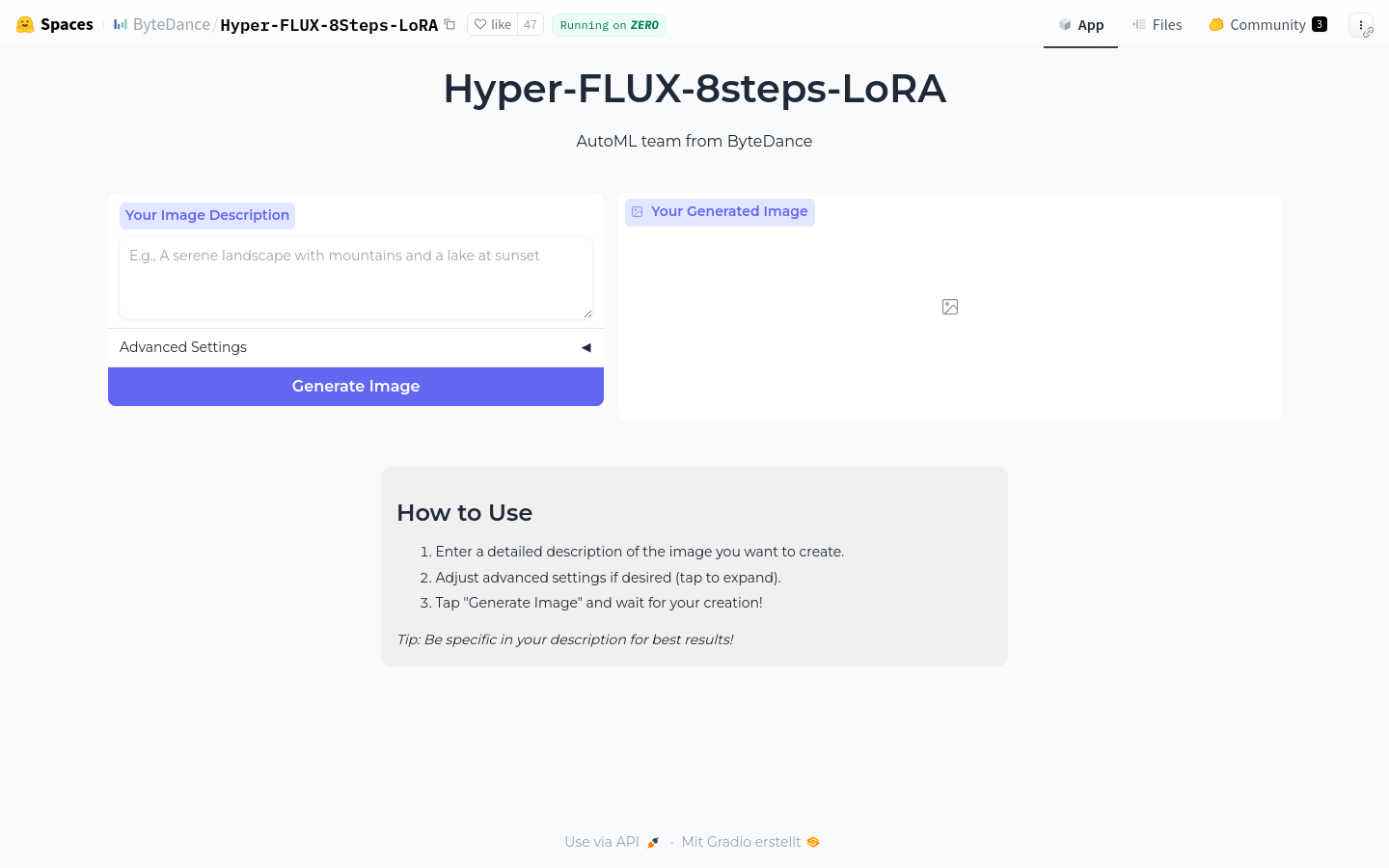

Hyper FLUX 8Steps LoRA

Hyper FLUX 8Steps LoRA is a LoRA adapter developed by ByteDance for the FLUX.1-dev image generation model, aimed at making generation more efficient. By reducing the number of inference steps to 8 while maintaining or improving output quality, it gives AI researchers and developers an efficient, easy-to-use solution.
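LoRA adapters like this one leave the base model's weights frozen and add a small trainable low-rank update on top. Below is a minimal pure-Python sketch of the standard LoRA forward pass, y = W x + (alpha / rank) B A x. The matrix sizes and values are illustrative assumptions. It shows the generic technique only, not the distillation procedure ByteDance used to produce the 8-step weights.

```python
# Hypothetical helper names; illustrative sizes only.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """Frozen weight plus scaled low-rank update: W x + (alpha / rank) * B (A x).

    W: d_out x d_in (frozen), A: rank x d_in, B: d_out x rank (trainable).
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # passes through the narrow rank-r bottleneck
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]
```

For example, with W as the 2x2 identity, A = [[1, 1]], B = [[0.5], [0.0]], x = [2, 3], and alpha = rank = 1, the output is [4.5, 3.0]. With B set to zeros, as LoRA commonly initializes it, the adapter starts out as a no-op and returns the base output [2, 3].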

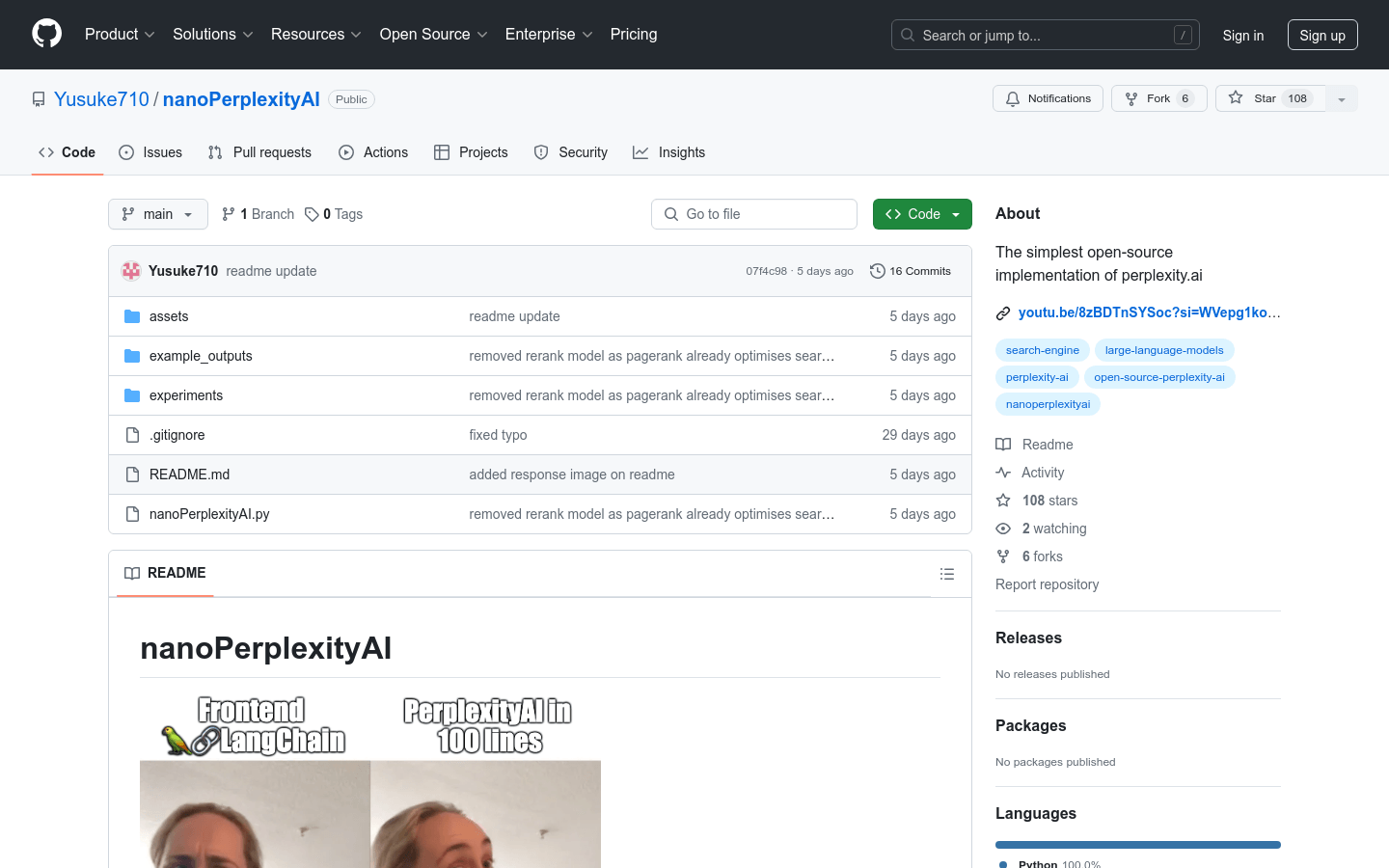

nanoPerplexityAI

nanoPerplexityAI is an open-source, minimal implementation of an LLM-powered answer service in the style of Perplexity, citing information retrieved from Google search. There is no complex GUI or LLM agent: just 100 lines of Python code.
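The core loop of such a tool works in three steps. It searches, places the results into the prompt as numbered sources, and asks the LLM to cite those sources. The sketch below covers only the prompt-building and citation-mapping steps. The search-result fields (`title`, `url`, `snippet`) and both function names are hypothetical, and the actual Google search and LLM calls are left out rather than guessed.

```python
import re

# Hypothetical result format: [{"title": ..., "url": ..., "snippet": ...}, ...]

def build_prompt(question, results):
    """Number each search result so the model can cite it as [1], [2], ..."""
    lines = ["Answer the question using only the sources below.",
             "Cite sources inline as [n].", ""]
    for i, r in enumerate(results, start=1):
        lines.append(f"[{i}] {r['title']} ({r['url']}): {r['snippet']}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)

def cited_urls(answer, results):
    """Map [n] markers in the answer back to source URLs, in order of first use."""
    seen = []
    for match in re.findall(r"\[(\d+)\]", answer):
        n = int(match)
        # Ignore citation numbers that do not correspond to a source.
        if 1 <= n <= len(results) and results[n - 1]["url"] not in seen:
            seen.append(results[n - 1]["url"])
    return seen
```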

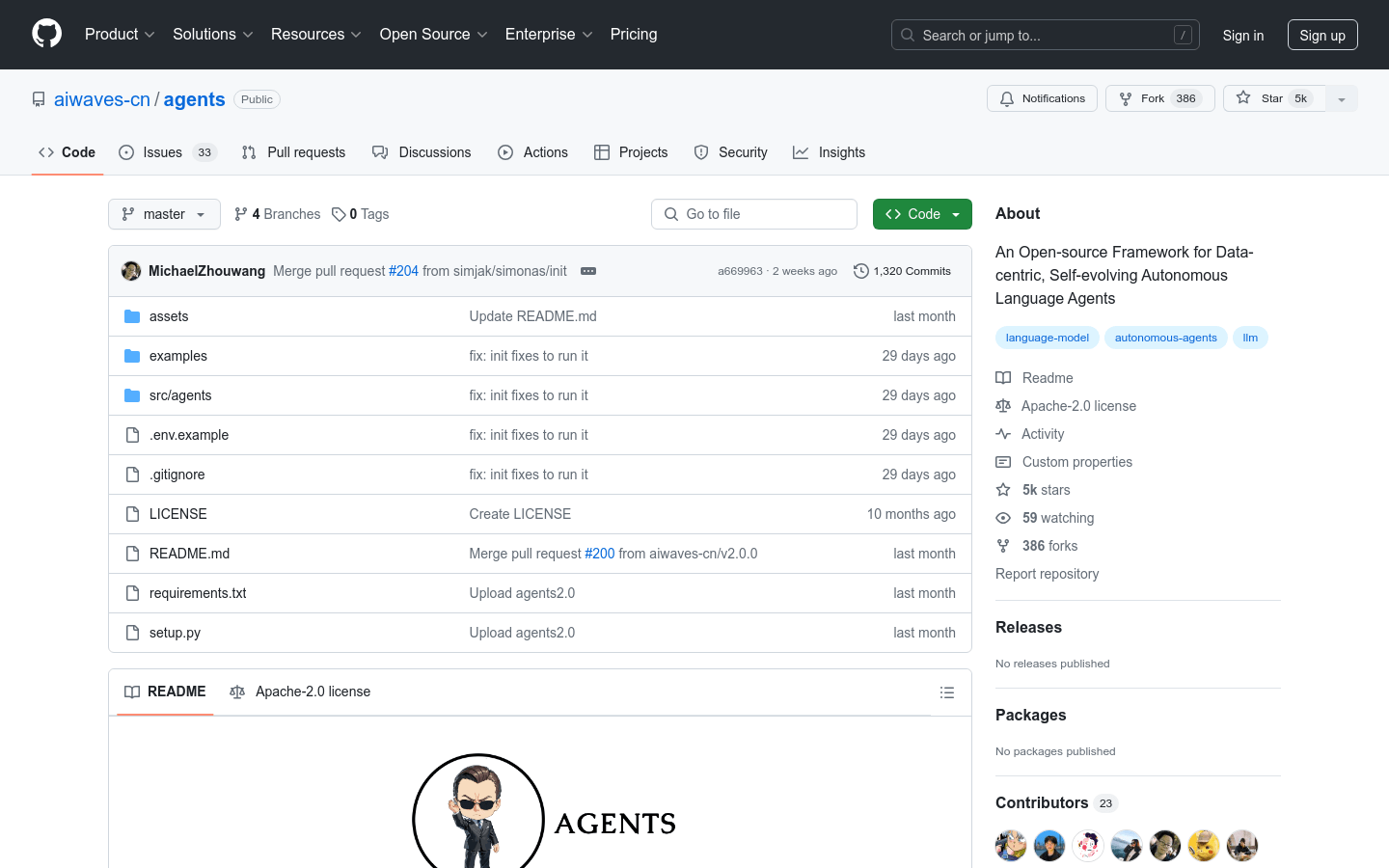

Agents 2.0

aiwaves-cn/agents is an open-source framework for data-driven, adaptive language agents. It provides a systematic framework for training language agents through symbolic learning, inspired by the connectionist learning procedures used to train neural networks. The framework implements language-based analogues of backpropagation and gradient-based weight updates, using language-based losses, gradients, and weights, and supports optimizing multi-agent systems.
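The analogy becomes concrete with a toy pipeline in which each node's prompt plays the role of a weight. The sketch below is hypothetical and is not the aiwaves-cn/agents API: `Node`, `forward`, `backward`, and `step` are illustrative names. The three LLM-backed operations (`run_llm`, `critique`, `rewrite`) are passed in as plain callables, so the control flow can run without a model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical toy names; NOT the aiwaves-cn/agents API.

@dataclass
class Node:
    name: str
    prompt: str  # the "weight" that symbolic learning updates

def forward(nodes: List[Node], task: str,
            run_llm: Callable[[str, str], str]) -> Tuple[str, list]:
    """Run each node in order, feeding its output to the next; record the trajectory."""
    trajectory, text = [], task
    for node in nodes:
        text = run_llm(node.prompt, text)
        trajectory.append((node, text))
    return text, trajectory

def backward(trajectory: list, loss: str,
             critique: Callable[[str, str, str], str]) -> Dict[str, str]:
    """Walk the pipeline in reverse, turning the language loss into per-node 'gradients'.

    Each node's feedback is conditioned on the feedback of the node after it,
    mirroring how backpropagation chains gradients from the output backward.
    """
    gradients, upstream = {}, loss
    for node, output in reversed(trajectory):
        upstream = critique(node.prompt, output, upstream)
        gradients[node.name] = upstream
    return gradients

def step(nodes: List[Node], gradients: Dict[str, str],
         rewrite: Callable[[str, str], str]) -> None:
    """'Optimizer' step: rewrite each node's prompt according to its textual gradient."""
    for node in nodes:
        node.prompt = rewrite(node.prompt, gradients[node.name])

if __name__ == "__main__":
    # String-formatting stubs stand in for LLM calls so the loop runs offline.
    pipeline = [Node("plan", "Outline the answer."), Node("write", "Write the answer.")]
    output, traj = forward(pipeline, "Q", lambda p, t: f"{t} | {p}")
    grads = backward(traj, "answer too long", lambda p, o, up: f"shorten '{p}' ({up})")
    step(pipeline, grads, lambda p, g: p + " Be concise.")
    print(output)
    print([n.prompt for n in pipeline])
```

In a real system, each stub would be an LLM call. The structure stays the same: a forward pass, a natural-language loss, feedback propagated backward through the pipeline, and a prompt update.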

DiT-MoE

DiT-MoE is a sparse mixture-of-experts (MoE) version of the diffusion transformer (DiT), implemented in PyTorch. It scales to 16 billion parameters while remaining competitive with dense networks, and it offers highly optimized inference. It represents cutting-edge work on deep learning over large-scale datasets and has significant research and application value.
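In a sparse MoE layer, a gating network scores every expert for each token, but only the top-k experts actually run. This is how the total parameter count can grow much faster than the per-token compute. Below is a generic top-k routing sketch for a single token, in pure Python with illustrative sizes. It is not DiT-MoE's code, and both the choice of k=2 and the renormalization of the selected gate weights are assumptions.

```python
import math

# Hypothetical helper names; a generic top-k MoE router, not DiT-MoE's implementation.

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Route one token through its top-k experts and mix their outputs.

    gate_weights: one weight vector per expert (num_experts x d).
    experts: callables mapping a length-d vector to a length-d vector.
    Returns the mixed output and the indices of the experts that ran.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # assumption: renormalize over selected experts
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)  # only k experts are evaluated: the "sparse" part
        weight = probs[i] / norm
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top
```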

MInference

MInference is an inference acceleration framework for long-context large language models (LLMs). It exploits the dynamic sparsity of attention in LLMs: by recognizing static sparse patterns in attention heads and approximating the sparse indices online, it significantly speeds up pre-filling. It achieves up to a 10x speedup when pre-filling a 1M-token context on a single A100 GPU while maintaining accuracy.
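One of the patterns described in the MInference paper, "vertical-slash", keeps a few key columns (for example, attention-sink tokens) and a few diagonals (fixed-offset, local attention). The columns and diagonals are chosen online from a cheap estimate that uses only the last few queries. Below is a simplified pure-Python sketch of that index selection. It assumes the estimated attention weights are already computed, ignores the GPU kernels that make the real method fast, and uses hypothetical function names rather than MInference's API.

```python
# Hypothetical helper names; a simplified sketch of vertical-slash index selection.

def vertical_slash_pattern(est, query_positions, num_vertical, num_slash):
    """Pick the key columns ("vertical") and diagonal offsets ("slash") with most attention mass.

    est[i][j]: estimated attention weight from the query at position
    query_positions[i] to key j (only j <= that position is read, i.e. causal).
    """
    vertical, slash = {}, {}
    for i, p in enumerate(query_positions):
        for j in range(p + 1):
            w = est[i][j]
            vertical[j] = vertical.get(j, 0.0) + w      # mass per key column
            slash[p - j] = slash.get(p - j, 0.0) + w    # mass per diagonal offset
    top_v = sorted(vertical, key=vertical.get, reverse=True)[:num_vertical]
    top_s = sorted(slash, key=slash.get, reverse=True)[:num_slash]
    return set(top_v), set(top_s)

def sparse_keys(query_pos, vertical_keys, slash_offsets):
    """Keys one query attends to: chosen columns plus chosen diagonals, kept causal."""
    keys = {j for j in vertical_keys if j <= query_pos}
    keys |= {query_pos - d for d in slash_offsets if query_pos - d >= 0}
    return sorted(keys)
```

Only the selected keys are then computed for each query, rather than the full quadratic attention matrix.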

Expert Specialized Fine-Tuning

Expert Specialized Fine-Tuning (ESFT) is an efficient, customized fine-tuning method for large language models (LLMs) with a mixture-of-experts (MoE) architecture. It optimizes model performance by tuning only the experts most relevant to the task and freezing the rest, which improves efficiency while reducing compute and storage usage.
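ESFT first scores how relevant each expert is to the target task, based on the router's behavior on task data. It then fine-tunes only the top-scoring experts. Below is a minimal per-layer selection sketch, assuming the relevance scores are already computed and normalized to sum to 1. The cumulative-threshold rule reflects our reading of the method, and the function names are hypothetical.

```python
# Hypothetical helper names; a sketch of per-layer expert selection.

def select_experts(relevance, threshold):
    """Smallest set of most-relevant experts whose scores sum to at least `threshold`.

    relevance: per-expert scores for one MoE layer (e.g. average gate value
    on task data), assumed normalized to sum to 1. Returns indices, best first.
    """
    order = sorted(range(len(relevance)), key=lambda i: relevance[i], reverse=True)
    chosen, total = [], 0.0
    for i in order:
        if total >= threshold:
            break
        chosen.append(i)
        total += relevance[i]
    return chosen

def trainable_mask(num_experts, chosen):
    """True for experts to fine-tune; every other expert stays frozen."""
    selected = set(chosen)
    return [i in selected for i in range(num_experts)]
```

A lower threshold trains fewer experts, which saves more compute and storage but leaves the model less room to adapt.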

Nemotron-4-340B-Reward

Nemotron-4-340B-Reward is a multi-dimensional reward model developed by NVIDIA for use in synthetic data generation pipelines, helping researchers and developers build their own large language models (LLMs). It consists of the Nemotron-4-340B-Base model plus a linear layer that converts the final token of a response into five scalar values, one for each HelpSteer2 attribute (helpfulness, correctness, coherence, complexity, and verbosity). It supports context lengths of up to 4,096 tokens and scores all five attributes for each assistant turn.
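A head like this is small enough to write out directly. The sketch below shows how a final linear layer turns the last token's hidden state into the five HelpSteer2 attribute scores. The hidden size and weights are made-up illustrative values, the function names are hypothetical, and the 340B base model that produces the hidden states is out of scope.

```python
# Hypothetical helper names; weights and hidden size are illustrative only.
HELPSTEER2_ATTRIBUTES = ["helpfulness", "correctness", "coherence", "complexity", "verbosity"]

def reward_head(final_hidden, weight, bias):
    """Linear layer: one score per attribute from the last token's hidden state.

    weight: 5 x hidden_size, bias: length 5 (learned in a real model).
    """
    scores = [sum(w * h for w, h in zip(row, final_hidden)) + b
              for row, b in zip(weight, bias)]
    return dict(zip(HELPSTEER2_ATTRIBUTES, scores))

def score_response(hidden_states, weight, bias):
    """Apply the head to the final token only, as the model description states."""
    return reward_head(hidden_states[-1], weight, bias)
```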

Skywork-MoE-Base

Skywork-MoE-Base is a high-performance mixture-of-experts (MoE) model with 146 billion parameters in total, 16 experts, and 22 billion activated parameters. It is initialized from the dense checkpoint of the Skywork-13B model and introduces two techniques: gating logit normalization, which enhances expert diversity, and adaptive auxiliary loss coefficients, which allow the auxiliary loss coefficient to be adjusted per layer. On various popular benchmarks, Skywork-MoE performs comparably to or better than models with more total or activated parameters.
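As we understand it from the Skywork-MoE report, gating logit normalization standardizes the router's logits before the softmax and then multiplies them by a scaling factor. This makes the sharpness of the expert distribution an explicit setting instead of a by-product of the raw logit scale. Below is a minimal sketch of that idea. The `scale` value and the small epsilon are illustrative assumptions, and the function names are hypothetical.

```python
import math
import statistics

# Hypothetical helper names; a sketch of standardize-then-scale gating.

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def normalized_gate(logits, scale):
    """Standardize gate logits (zero mean, unit std), rescale, then softmax.

    A larger `scale` gives a sharper routing distribution regardless of
    the magnitude of the raw logits. The epsilon guards against std == 0.
    """
    mean = statistics.fmean(logits)
    std = statistics.pstdev(logits)
    return softmax([scale * (z - mean) / (std + 1e-6) for z in logits])
```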

Skywork-MoE

Skywork-MoE is a high-performance mixture-of-experts (MoE) model with 146 billion parameters in total, 16 experts, and 22 billion activated parameters. It is initialized from the dense checkpoint of the Skywork-13B model and introduces two techniques: gating logit normalization to enhance expert diversity, and adaptive auxiliary loss coefficients that allow the auxiliary loss coefficient to be adjusted per layer. It performs comparably to or better than models with more total or activated parameters, such as Grok-1, DBRX, Mixtral 8x22B, and DeepSeek-V2.
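Adaptive auxiliary loss coefficients make the strength of the load-balancing penalty a per-layer quantity that changes during training. One plausible form is assumed here for illustration. It nudges each layer's coefficient toward a target proportional to that layer's token drop rate (a symptom of imbalanced routing), smoothed with a moving average. `xi` and `beta` are hypothetical hyperparameters, and this is not necessarily Skywork's exact update rule.

```python
# Hypothetical update rule and names; an illustrative assumption, not Skywork's code.

def update_aux_coefficients(coeffs, drop_rates, xi=0.01, beta=0.9):
    """Move each layer's auxiliary-loss coefficient toward xi * (its token drop rate).

    coeffs[l]: current load-balancing coefficient of layer l.
    drop_rates[l]: fraction of tokens layer l dropped at the last step.
    beta: moving-average factor; higher means slower adaptation.
    """
    return [beta * a + (1 - beta) * xi * d for a, d in zip(coeffs, drop_rates)]
```

Layers that drop many tokens receive a stronger balancing penalty. Well-balanced layers let their coefficient decay, so they are not over-regularized.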

Llama-3 70B Gradient 524K Adapter

Llama-3 70B Gradient 524K Adapter is an adapter for the Llama-3 70B model, developed by the Gradient AI team. It uses LoRA to extend the model's context length to 524K tokens, improving its performance on long text. Training relied on techniques including NTK-aware interpolation and the RingAttention library to train efficiently on high-performance computing clusters.
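NTK-aware interpolation extends RoPE's context by enlarging the rotary base instead of linearly compressing positions. The highest-frequency dimension is left unchanged, while the lowest frequency slows by the full scale factor. Below is a sketch of the commonly used formula, base' = base * s^(d/(d-2)). The 8K original context, head dimension of 128, and base of 500,000 reflect Llama-3 as we understand it. The exact RoPE base Gradient used is not stated here, so treat the numbers as illustrative.

```python
import math

# Hypothetical helper names; generic NTK-aware RoPE scaling.

def rope_inv_freqs(head_dim: int, base: float) -> list:
    """Rotary frequencies theta_i = base^(-2i/d) for i = 0 .. d/2 - 1."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def ntk_aware_base(base: float, scale: float, head_dim: int) -> float:
    """NTK-aware interpolation: enlarge the RoPE base instead of compressing positions."""
    return base * scale ** (head_dim / (head_dim - 2))

def longest_wavelength(freqs: list) -> float:
    """Positions needed for the slowest rotary dimension to complete one full turn."""
    return 2 * math.pi / freqs[-1]

# Illustrative numbers: 8K original context stretched to 524K (scale 64).
HEAD_DIM, BASE, SCALE = 128, 500_000.0, 524_288 / 8_192
old = rope_inv_freqs(HEAD_DIM, BASE)
new = rope_inv_freqs(HEAD_DIM, ntk_aware_base(BASE, SCALE, HEAD_DIM))
# new[0] equals old[0] (fastest dimension untouched); new[-1] equals old[-1] / SCALE.
```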

SliceGPT

SliceGPT is a new post-training sparsification scheme that replaces each weight matrix with a smaller (dense) matrix, reducing the embedding dimension of the network. Extensive experiments show that SliceGPT can remove up to 25% of the model parameters (including embeddings) of LLAMA2-70B, OPT 66B, and Phi-2 while maintaining 99%, 99%, and 90% of their zero-shot task performance, respectively. The sliced models run on fewer GPUs and run faster without any additional code optimization: on 24GB consumer GPUs, the total compute for LLAMA2-70B inference drops to 64% of that of the dense model, and on 40GB A100 GPUs it drops to 66%. The work offers a new insight, computational invariance in transformer networks, which is what makes SliceGPT possible, and the authors hope it will inspire new ways to reduce the memory and computational requirements of pretrained models.
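Computational invariance means that inserting an orthogonal matrix Q and its transpose between layers leaves the output unchanged, because Q Q^T = I. Once activations are rotated onto their principal directions, the trailing low-variance dimensions can be deleted. Below is a toy pure-Python sketch in which a hand-picked 2x2 rotation stands in for the PCA basis that SliceGPT computes from calibration data. It ignores the normalization-layer handling the real method requires, and the function names are hypothetical.

```python
import math

# Hypothetical helper names; a toy illustration of rotate-then-slice.

def matmul(A, B):
    """Plain-list matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def rotation(angle):
    """2x2 orthogonal matrix; a stand-in for the PCA basis SliceGPT would compute."""
    return [[math.cos(angle), -math.sin(angle)],
            [math.sin(angle), math.cos(angle)]]

def slice_layer(X, W, Q, keep):
    """Rotate activations by Q, fold Q^T into W, then keep only the first `keep` dims.

    With keep == len(Q), the result equals X @ W up to rounding (computational invariance).
    """
    XQ = matmul(X, Q)
    QtW = matmul(transpose(Q), W)
    X_small = [row[:keep] for row in XQ]  # narrower activations
    W_small = QtW[:keep]                  # smaller, still dense weight matrix
    return matmul(X_small, W_small)
```

With activations that lie close to the line y = x and Q = rotation(pi / 4), keeping one of the two dimensions changes each output by only about 0.05 in the test data, while keeping both reproduces X @ W exactly.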

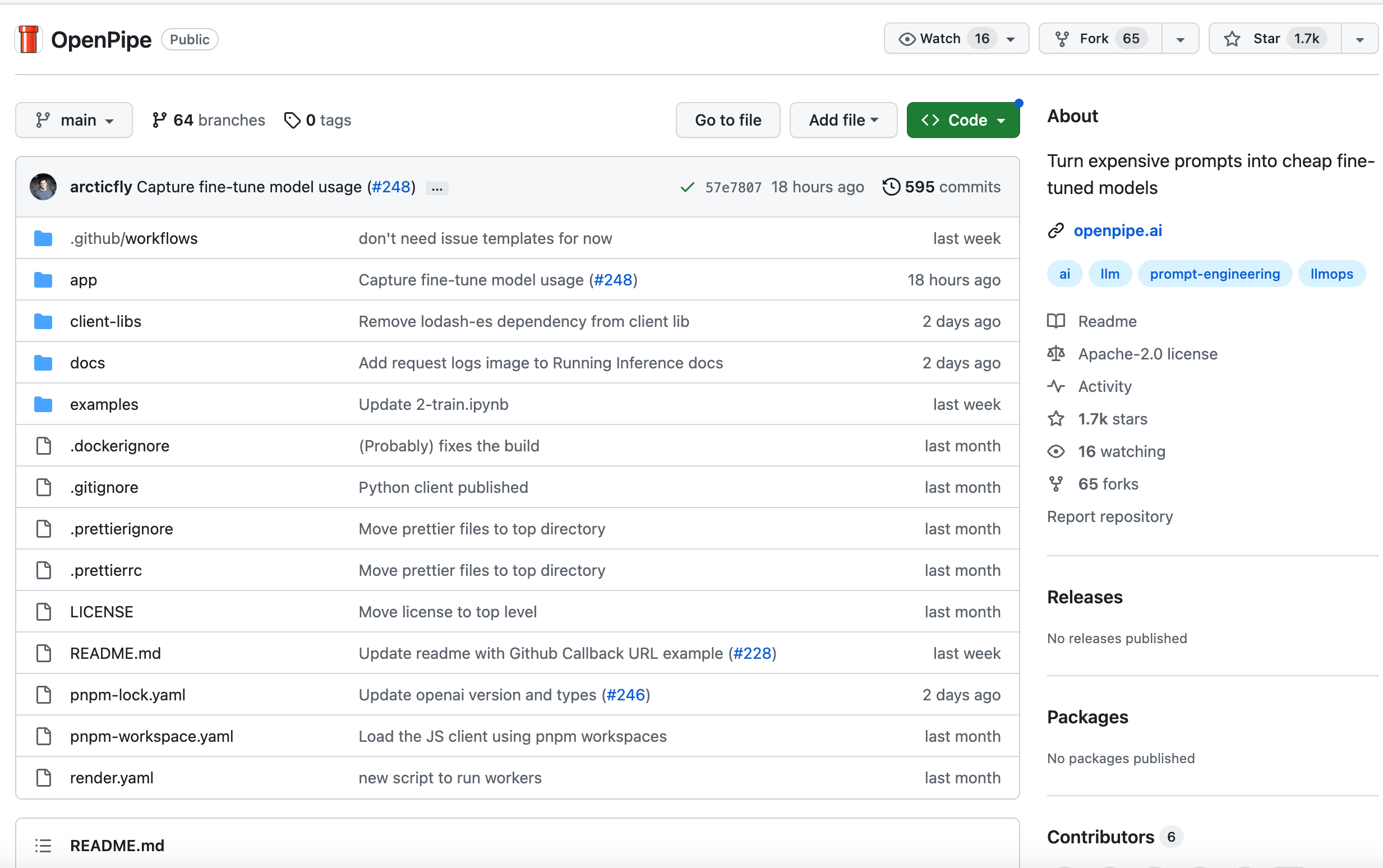

OpenPipe

OpenPipe/OpenPipe is a project that turns expensive prompts into cheap fine-tuned models. It combines pre-trained models with custom training data to create specialized models that generate text more efficiently. Its main advantage is that it delivers high-quality text generation while reducing costs. The project offers flexible pricing, with a variety of options to suit different user needs. Its main functions include training on data from expensive prompts, generating customized models, efficient text generation, and cost reduction.
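The underlying workflow is distillation. You log the prompts sent to a large, expensive model together with its responses, then use those pairs as training data for a smaller model. Below is a minimal sketch of the dataset-building step. The `prompt`/`completion` log fields and the function name are hypothetical, and the chat-style `messages` records follow a format common to several fine-tuning APIs rather than anything specific to OpenPipe.

```python
import json

# Hypothetical log format: [{"prompt": ..., "completion": ...}, ...]

def to_finetune_jsonl(logged_calls, system_prompt):
    """Turn logged (prompt, completion) pairs from an expensive model into chat-format JSONL."""
    lines = []
    for call in logged_calls:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": call["prompt"]},
            # The expensive model's answer becomes the target the small model learns.
            {"role": "assistant", "content": call["completion"]},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines)
```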