SAM-Graph

An innovative approach to 3D instance segmentation

Product Details

SAM-guided Graph Cut for 3D Instance Segmentation is a deep learning method that combines 3D geometry with multi-view image information for 3D instance segmentation. It applies 2D segmentation models to the 3D task through a 3D-to-2D query framework, formulates the segmentation as a graph cut problem over a superpoint graph, and trains a graph neural network to achieve robust performance across different types of scenes.
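To make the idea of partitioning a superpoint graph more concrete, here is a minimal sketch in Python. It is not the method's actual graph cut solver: it assumes each edge already carries a hypothetical affinity score (in the real method such scores would come from the trained graph neural network), cuts every edge below a threshold, and treats the remaining connected components as instances. The function name `segment_superpoints`, the threshold value, and the toy edge list are all illustrative assumptions.

```python
from collections import defaultdict


def segment_superpoints(num_nodes, edges, threshold=0.5):
    """Group superpoints into instances by cutting low-affinity edges.

    edges: iterable of (u, v, affinity) tuples; the affinity values here are
    hypothetical stand-ins for scores a trained network would predict.
    """
    parent = list(range(num_nodes))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for u, v, affinity in edges:
        # Keep (merge across) edges at or above the threshold; cut the rest.
        if affinity >= threshold:
            root_u, root_v = find(u), find(v)
            if root_u != root_v:
                parent[root_v] = root_u

    groups = defaultdict(list)
    for node in range(num_nodes):
        groups[find(node)].append(node)
    return sorted(groups.values())


# Toy graph: five superpoints in a chain with made-up affinities.
edges = [(0, 1, 0.9), (1, 2, 0.2), (2, 3, 0.8), (3, 4, 0.1)]
instances = segment_superpoints(5, edges)
print(instances)
```

In this toy chain, the two low-affinity edges are cut, so the five superpoints fall into three groups.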

Target Users

This technology suits fields that require 3D instance segmentation, such as autonomous driving, robot navigation, and augmented reality. It is especially useful for applications that must handle complex scenes but lack diverse 3D annotation data.

Examples

In autonomous driving, 3D instance segmentation of the surrounding environment is performed to identify and track vehicles and pedestrians.

In robot navigation, 3D instance segmentation is performed on the indoor environment to achieve accurate path planning.

In augmented reality, 3D instance segmentation is performed on real-world scenes to achieve natural integration of virtual objects and the real world.

Related Recommendations

Discover more similar quality AI tools

Image Describer

Image Describer is an AI tool that generates descriptions of uploaded images according to user needs. It interprets image content and produces detailed descriptions or explanations that help users understand what an image shows. Beyond general use, its text-to-speech function helps visually impaired people understand the content of pictures. Its main value lies in making image content more accessible and information easier to share.

Viewly

Viewly is an AI image recognition application that identifies the content of images, composes poems, and translates them into multiple languages. Its main advantages are high recognition accuracy, multi-language support, and creative AI poetry writing. The product is updated continuously with new features and is currently free to use.

PimEyes

PimEyes is a website that uses facial recognition technology to provide a reverse image search service. Users can upload a photo to find similar pictures or related personal information on the Internet. The service is useful for protecting privacy, locating missing persons, and verifying copyrights.

YOLO11

Ultralytics YOLO11 is a further development of previous YOLO series models, introducing new features and improvements to increase performance and flexibility. YOLO11 is designed to be fast, accurate, and easy to use, making it ideal for a wide range of object detection, tracking, instance segmentation, image classification, and pose estimation tasks.

Revisit Anything

Revisit Anything is a visual place recognition system that uses image segment retrieval to identify and match locations across different images. It combines SAM (Segment Anything Model) and DINO (a vision model trained by self-distillation with no labels) to improve the accuracy and efficiency of visual recognition. This technology is valuable in fields such as robot navigation and autonomous driving.

Joy Caption Alpha One

Joy Caption Alpha One is an AI-based image caption generator that converts image content into text descriptions. It uses deep learning to generate accurate and vivid descriptions by understanding the objects, scenes, and actions in images. This technology helps visually impaired people understand image content, enhances image search, and improves the accessibility of social media content.

Open Source Computer Vision Library

OpenCV is a cross-platform open source computer vision and machine learning software library. It provides a range of programming functions, including image processing, video analysis, feature detection, and machine learning. The library is widely used in academic research and commercial projects, and developers favor it for its functionality and flexibility.

GOT-OCR2.0

GOT-OCR2.0 is an open source OCR model that aims to promote optical character recognition technology towards OCR-2.0 through a unified end-to-end model. This model supports a variety of OCR tasks, including but not limited to ordinary text recognition, formatted text recognition, fine-grained OCR, multi-crop OCR and multi-page OCR. It is based on the latest deep learning technology and can handle complex text recognition scenarios with high accuracy and efficiency.

bonding_w_geimini

bonding_w_geimini is an image processing application developed based on the Streamlit framework. It allows users to upload pictures, perform object detection through the Gemini API, and draw the bounding box of the object directly on the picture. This application uses machine learning models to identify and locate objects in pictures, which is of great significance to fields such as image analysis, data annotation, and automated image processing.

clip-image-search

clip-image-search is an image search tool based on OpenAI's pre-trained CLIP model, capable of retrieving images through text or image queries. CLIP models are trained to map images and text into the same latent space, so the two can be compared with a similarity measure. The tool uses images from the Unsplash dataset and Amazon Elasticsearch Service for k-nearest neighbor search. It deploys the query service through AWS Lambda functions and API Gateway, and the front end is built with Streamlit.
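As a rough illustration of similarity search in a shared latent space, the sketch below ranks a few images against a query by cosine similarity. It is only a toy: the three-dimensional vectors are made-up stand-ins for real CLIP embeddings, the file names are hypothetical, and the brute-force loop replaces the k-nearest neighbor index that the tool delegates to Elasticsearch.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def knn_search(query, index, k=2):
    """Return the names of the k entries most similar to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Made-up embeddings; a real system would compute these with a CLIP encoder.
index = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # stand-in for an embedded text or image query
top = knn_search(query, index, k=2)
print(top)
```

Because text and images share one embedding space, the same `knn_search` call would serve both text queries and image queries; only the encoder that produces `query` would differ.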

Segment Anything 2 for Surgical Video Segmentation

Segment Anything 2 for Surgical Video Segmentation is a surgical video segmentation model based on Segment Anything Model 2. It automatically segments surgical videos to identify and locate surgical tools, improving the efficiency and accuracy of surgical video analysis. The model is suitable for surgical scenarios such as endoscopic surgery and cochlear implant surgery, and is both accurate and robust.

SA-V Dataset

SA-V Dataset is an open-world video dataset designed for training general object segmentation models, containing 51K diverse videos and 643K spatio-temporal segmentation masks (masklets). The dataset is intended for computer vision research and is released under the CC BY 4.0 license. Its videos cover varied places, objects, and scenes, with masks ranging from large objects such as buildings to details such as interior decorations.

Segment Anything Model 2

Segment Anything Model 2 (SAM 2) is a visual segmentation model released by FAIR, Meta's AI research division. It achieves real-time video processing through a simple transformer architecture with streaming memory. Its model-in-the-loop data engine, which improves through user interaction, was used to collect SA-V, the largest video segmentation dataset to date. SAM 2 is trained on this dataset and performs strongly across a wide range of tasks and vision domains.

SAM 2

Meta Segment Anything Model 2 (SAM 2) is a next-generation model developed by Meta for real-time, promptable object segmentation in videos and images. It achieves state-of-the-art performance and supports zero-shot generalization, i.e., no need for custom adaptation to apply to previously unseen visual content. The release of SAM 2 follows an open science approach, with the code and model weights shared under the Apache 2.0 license, and the SA-V dataset also shared under the CC BY 4.0 license.

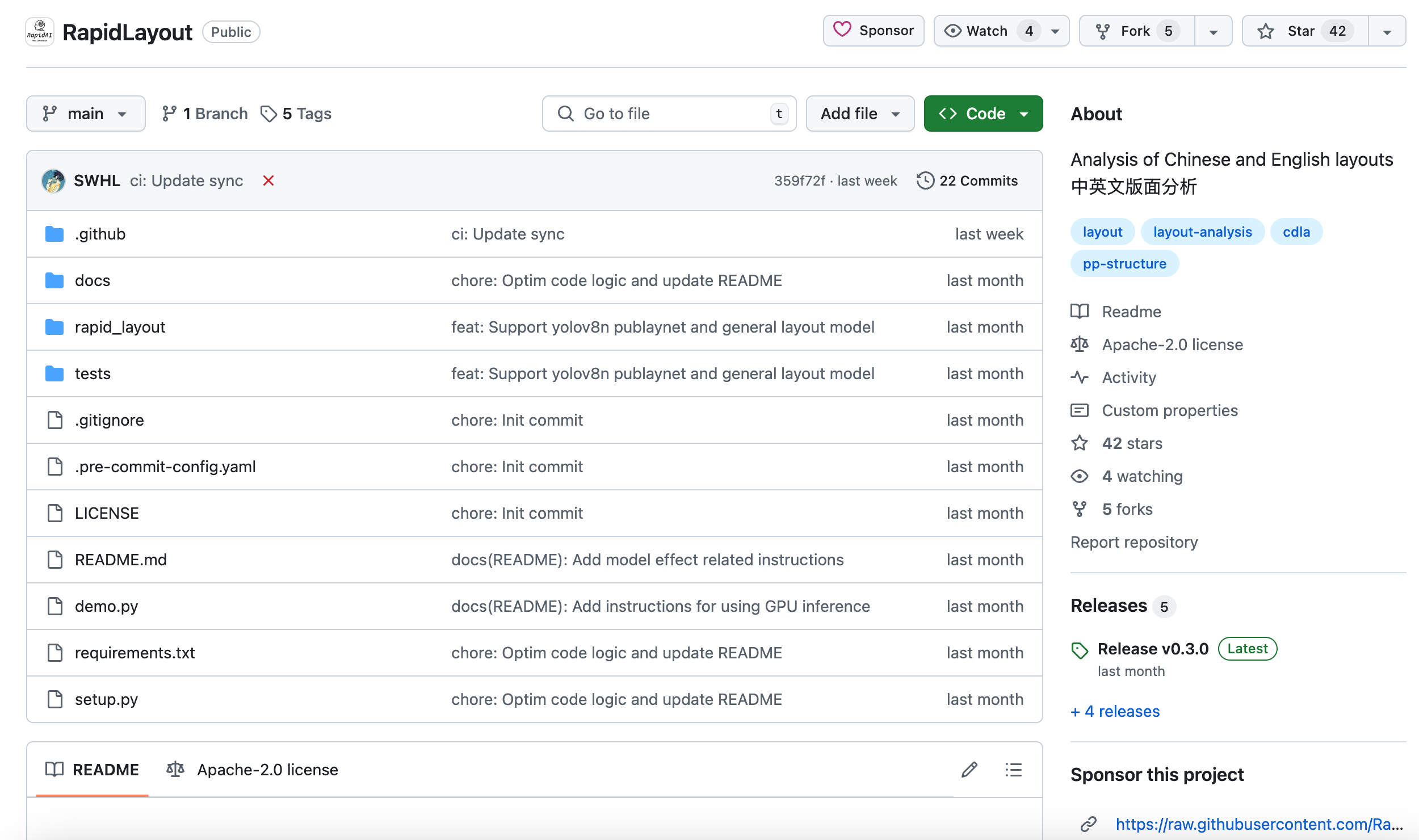

RapidLayout

RapidLayout is an open source tool for document image layout analysis. It analyzes the layout structure of document images and locates parts such as titles, paragraphs, tables, and pictures. It supports layout analysis across multiple languages, including Chinese and English, and across different business scenarios.

RoboflowSports

roboflow/sports is an open source computer vision toolset focusing on applications in the sports field. It utilizes advanced image processing technologies such as object detection, image segmentation, key point detection, etc. to solve challenges in sports analysis. This toolset was developed by Roboflow to promote the application of computer vision technology in the sports field and is continuously optimized through community contributions.