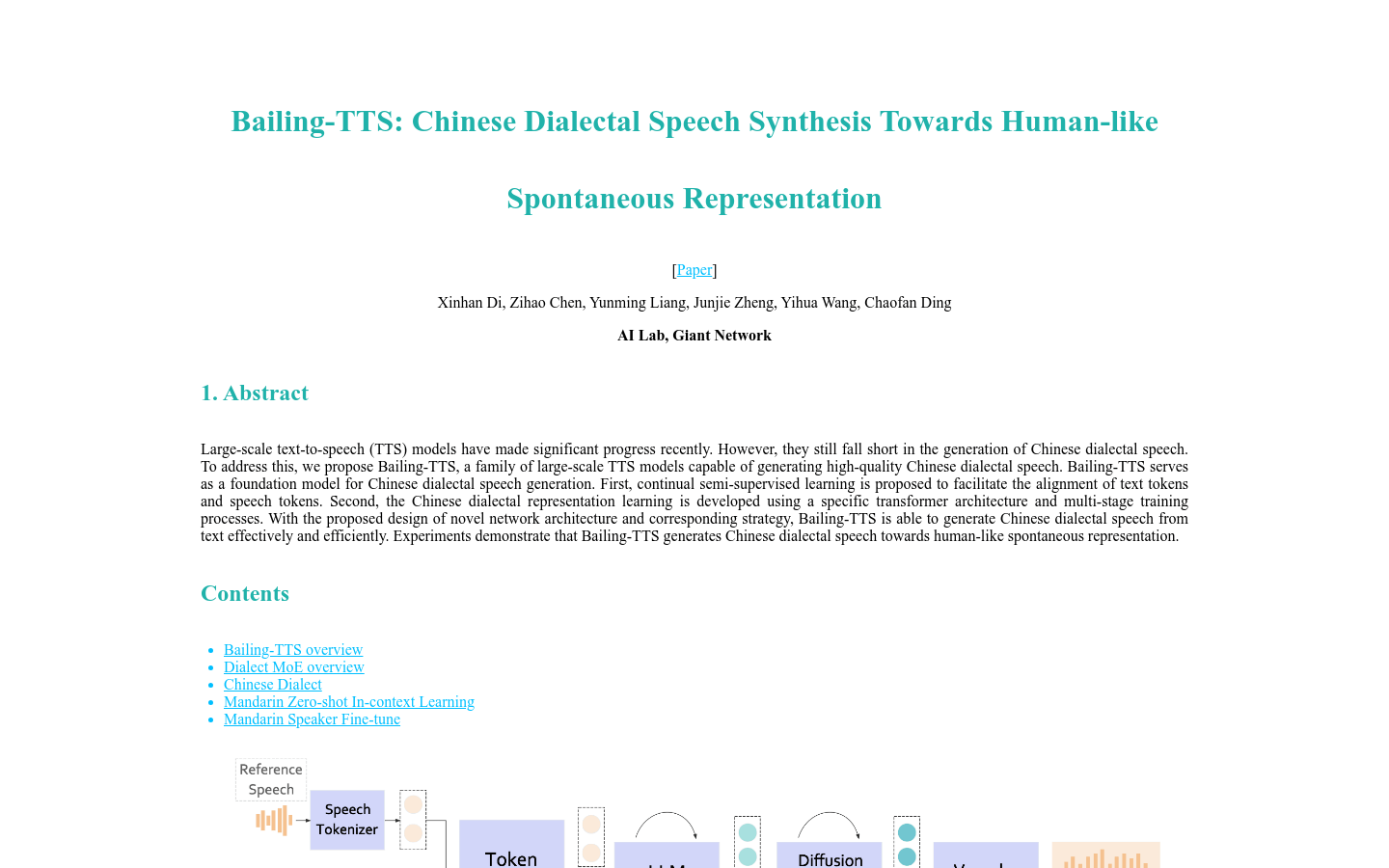

Bailing-TTS

Large-scale text-to-speech models that generate high-quality Chinese dialect speech.

Product Details

Bailing-TTS is a large-scale text-to-speech (TTS) model series developed by Giant Network’s AI Lab that focuses on generating high-quality Chinese dialect speech. The model uses continuous semi-supervised learning and a specific Transformer architecture to effectively align text and speech tokens through a multi-stage training process to achieve high-quality speech synthesis in Chinese dialects. Bailing-TTS has demonstrated speech synthesis effects close to natural human expressions in experiments, which is of great significance to the field of dialect speech synthesis.

Main Features

How to Use

Target Users

Bailing-TTS is mainly targeted at developers and enterprises that require high-quality Chinese dialect speech synthesis, such as speech synthesis application developers, smart assistants, educational software, etc. It is particularly suitable for scenarios that require a natural and authentic dialect experience in voice interaction to enhance user experience.

Examples

The intelligent assistant uses Bailing-TTS to generate voice feedback in Henan dialect, providing a more intimate interactive experience.

Educational software uses Bailing-TTS to provide speech synthesis of native language teaching content for students in dialect areas.

Speech synthesis application developers use Bailing-TTS to provide customized dialect voice services for users in different regions.

Quick Access

Visit Website →Categories

Related Recommendations

Discover more similar quality AI tools

Fish Audio

Fish Audio is a platform that provides text-to-speech conversion services. Using generative AI technology, users can convert text into natural and smooth speech. The platform supports voice cloning technology, allowing users to create and use personalized voices. It is suitable for a variety of scenarios such as entertainment, education and business, providing users with an innovative way of interaction.

Pandrator

Pandrator is an open source software-based tool capable of converting text, PDF, EPUB and SRT files into speech audio in multiple languages, including speech cloning, LLM-based text preprocessing and saving the generated subtitle audio directly to the video file, mixed with the video's original audio track. It is designed to be easy to use and install, with a one-click installer and graphical user interface.

StreamVC

StreamVC is a real-time low-latency speech conversion solution developed by Google that can match the timbre of the target speech while maintaining the content and rhythm of the source speech. The technology is particularly suitable for real-time communication scenarios such as phone calls and video conferencing, and can be used for use cases such as voice anonymization. StreamVC utilizes the architecture and training strategy of the SoundStream neural audio codec to achieve lightweight and high-quality speech synthesis. It also demonstrates the effectiveness of learning soft speech units and providing whitened fundamental frequency information to improve pitch stability without leaking source timbre information.

CosyVoice

CosyVoice is a large-scale multi-lingual speech generation model that not only supports speech generation in multiple languages, but also provides full-stack capabilities from inference to training to deployment. This model is important in the field of speech synthesis because it can generate natural and smooth speech that is close to real people and is suitable for multiple language environments. Background information on CosyVoice shows that it was developed by the FunAudioLLM team under the Apache-2.0 license.

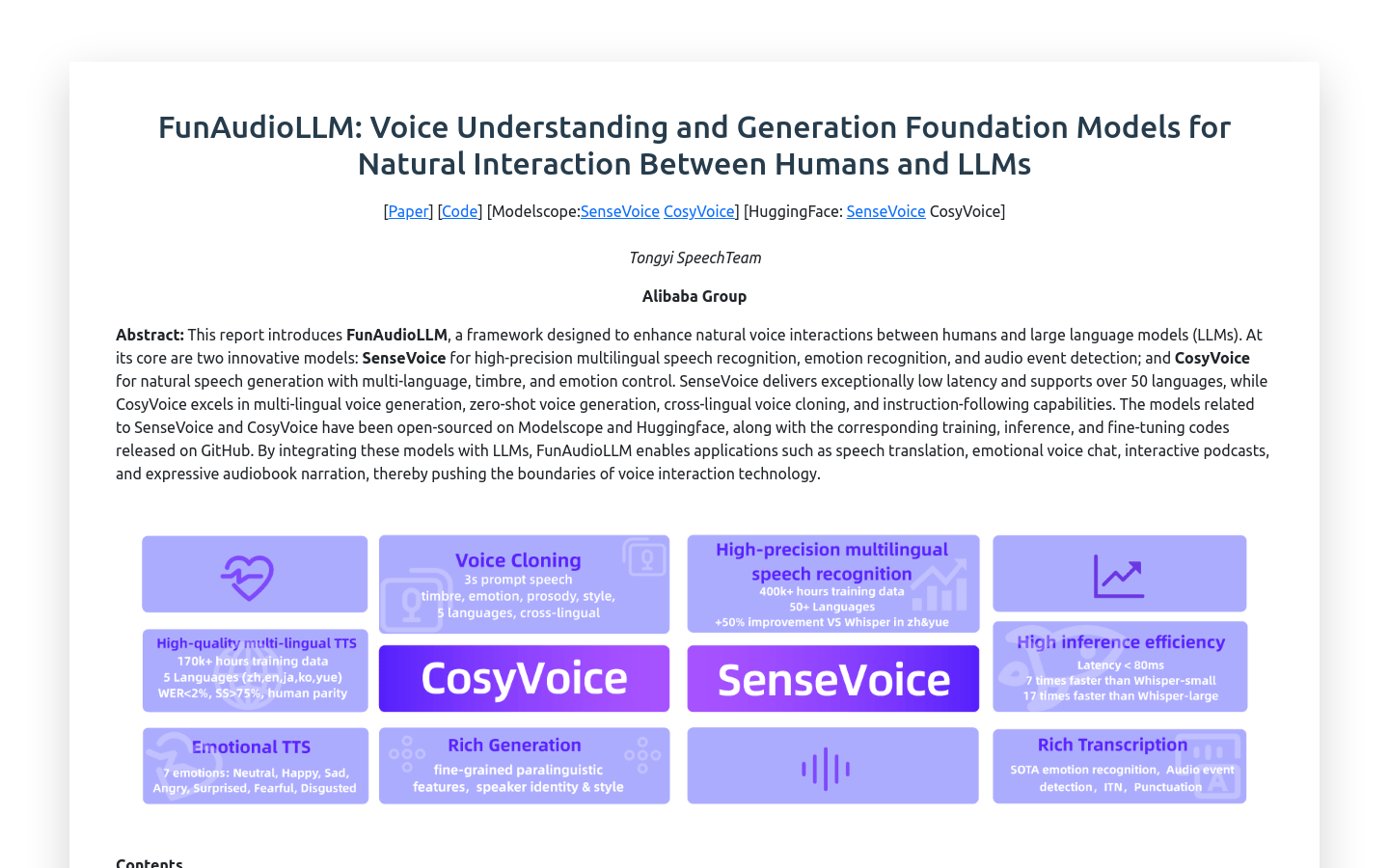

FunAudioLLM

FunAudioLLM is a framework designed to enhance natural speech interaction between humans and Large Language Models (LLMs). It contains two innovative models: SenseVoice is responsible for high-precision multilingual speech recognition, emotion recognition and audio event detection; CosyVoice is responsible for natural speech generation and supports multilingual, timbre and emotion control. SenseVoice supports more than 50 languages and has extremely low latency; CosyVoice is good at multilingual voice generation, zero-sample context generation, cross-language voice cloning and command following capabilities. The relevant models have been open sourced on Modelscope and Huggingface, and the corresponding training, inference and fine-tuning codes have been released on GitHub.

SenseVoice

SenseVoice is a basic speech model that includes multiple speech understanding capabilities such as automatic speech recognition (ASR), speech language recognition (LID), speech emotion recognition (SER), and audio event detection (AED). It focuses on high-precision multilingual speech recognition, speech emotion recognition and audio event detection, supports more than 50 languages, and its recognition performance exceeds the Whisper model. The model uses a non-autoregressive end-to-end framework with extremely low inference latency, making it ideal for real-time speech processing.

Fish Speech V1.2

Fish Speech V1.2 is a text-to-speech (TTS) model trained on 300,000 hours of English, Chinese and Japanese audio data. This model represents the latest progress in speech synthesis technology and can provide high-quality speech output and is suitable for multiple language environments.

Azure Cognitive Services Speech

Azure Cognitive Services Speech is a speech recognition and synthesis service launched by Microsoft that supports speech-to-text and text-to-speech functions in more than 100 languages and dialects. It improves the accuracy of your transcriptions by creating custom speech models that handle specific terminology, background noise, and accents. In addition, the service also supports real-time speech-to-text, speech translation, text-to-speech and other functions, and is suitable for a variety of business scenarios, such as subtitle generation, post-call transcription analysis, video translation, etc.

OpenVoice

OpenVoice is an open source voice cloning technology that can accurately clone reference timbres and generate voices in multiple languages and accents. It can flexibly control speech style parameters such as emotion and accent, as well as rhythm, pauses and intonation, etc. It implements zero-shot cross-language speech cloning, that is, neither the language of the generated speech nor the reference speech needs to appear in the training data.

Mixboard

Mixboard is an innovative AI tool designed to help users with concept development and creative expansion. It allows users to explore, expand and refine ideas through an AI-powered interface for designers, creatives and teamwork. The tool is seamlessly integrated, easy to use, and suitable for all types of users, whether individuals or teams can benefit from it.

AstroChart.ai

AstroChart.ai is an artificial intelligence platform that provides personalized horoscope and birth chart readings. By integrating traditions such as Western astrology, Indian astrology, Chinese astrology and body design, it helps users gain a deeper understanding of their own cosmic journey.

Brooke & Jubal in the Morning

Brooke and Jubal Update is a website that tells the complete story of radio morning duo Brooke and Jubal, telling their split, personal moves, and current activities. The website presents the story of this well-known morning duo in the broadcast industry by introducing in detail the past, current situation and important program clips of the two hosts.

SpatialChat

SpatialChat is an AI-driven event and webinar platform designed to increase engagement, increase interactivity, and provide a seamless virtual experience. The main advantages of this platform include powerful AI technology support, rich functions, strong customizability, multiple integration options, etc.

Base44

Base44 is a platform for quickly building apps without coding or setup. It provides powerful tools and functions to help users easily transform ideas into practical applications without complex technical knowledge and programming experience.

Destiny Matrix Chart Calculator

Matrix Destiny Chart is a powerful system that combines numerology, tarot, archetypes and energy work to reveal your soul's journey and reveal your strengths, challenges and purpose. It calculates a personalized matrix to reveal 22 key locations representing different aspects of your life, from your core essence to relationships, career paths and spiritual growth.