LLaMA Assistant for Mac

A simple assistant for Mac, built on llama-cpp-python.

Product Details

LLaMA Assistant for Mac is a desktop client built on the llama-cpp-python library that helps users carry out predefined tasks. It reuses much of its code from other projects but replaces the Ollama components with llama-cpp-python, which makes the solution more consistent with a Pythonic programming style.
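To illustrate the approach described above, here is a minimal sketch of how a llama-cpp-python backend could answer predefined requests. The task names, prompt templates, and helpers (`PRESET_PROMPTS`, `build_messages`, `make_llama_backend`, `run_task`) are hypothetical and not taken from the project. Only `Llama` and `create_chat_completion` come from llama-cpp-python, and the model path is a placeholder for any local GGUF file.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical predefined tasks; the real project's presets may differ.
PRESET_PROMPTS: Dict[str, str] = {
    "summarize": "Summarize the following text in one paragraph:\n\n{text}",
    "explain_code": "Explain what this code does:\n\n{text}",
}


def build_messages(task: str, text: str) -> List[Message]:
    """Turn a predefined task plus user input into chat messages."""
    if task not in PRESET_PROMPTS:
        raise KeyError(f"unknown task: {task!r}")
    return [
        {"role": "system", "content": "You are a helpful assistant running locally on a Mac."},
        {"role": "user", "content": PRESET_PROMPTS[task].format(text=text)},
    ]


def make_llama_backend(model_path: str) -> Callable[[List[Message]], str]:
    """Load a local GGUF model with llama-cpp-python and return a chat function."""
    from llama_cpp import Llama  # imported lazily so the helpers above work without it

    llm = Llama(model_path=model_path, n_ctx=4096, verbose=False)

    def chat(messages: List[Message]) -> str:
        result = llm.create_chat_completion(messages=messages, max_tokens=512)
        return result["choices"][0]["message"]["content"]

    return chat


def run_task(task: str, text: str, chat: Callable[[List[Message]], str]) -> str:
    """Run one predefined task through any chat backend."""
    return chat(build_messages(task, text))
```

Usage would look like `run_task("summarize", some_text, make_llama_backend("/path/to/model.gguf"))`. Injecting the `chat` function keeps the prompt logic testable without loading a model.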

Target Users

LLaMA Assistant for Mac is aimed primarily at Mac users, especially developers who are comfortable with Python and want to automate or customize assistant features on their Mac.

Examples

Developers can use LLaMA Assistant for Mac to automate routine programming tasks.

Users can set up LLaMA Assistant for Mac to manage files and applications on their Mac.

Programmers can use LLaMA Assistant for Mac to code more efficiently and insert code snippets quickly.
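As one hypothetical way to handle the file and application use case, an assistant could ask the model to reply with a small JSON action and then translate it into a macOS `open` command. The JSON shape, the action names, and `action_to_argv` are assumptions for illustration, not the project's actual mechanism.

```python
import json
from typing import List

# Hypothetical whitelist of actions the model may request.
ALLOWED_ACTIONS = {"open_app", "reveal_file"}


def action_to_argv(model_reply: str) -> List[str]:
    """Turn a JSON action emitted by the model into a macOS `open` command line.

    The command is only built, not executed, so the caller can show it to the user first.
    """
    request = json.loads(model_reply)
    action = request.get("action")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"action not allowed: {action!r}")
    if action == "open_app":
        return ["open", "-a", request["name"]]  # launch an application by name
    return ["open", "-R", request["path"]]  # reveal a file in Finder
```

On a Mac, the returned list could be passed to `subprocess.run(argv, check=True)` once the user confirms it. Building the command separately from running it keeps a whitelist and a confirmation step between the model and the system.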

Related Recommendations

rag-chatbot

rag-chatbot is an AI chatbot that lets users query multiple PDF files in natural language. It uses Hugging Face and Ollama to process PDF content and generate answers, which makes it useful for getting quick answers from large collections of documents. It is a free, open-source project aimed at making document processing more efficient, and it targets mainly developers and technology enthusiasts.
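The retrieve-then-generate loop behind tools like rag-chatbot can be sketched in a few lines. This is a simplified sketch, not the project's code: it swaps the Hugging Face embeddings for a dependency-free bag-of-words cosine similarity, and all function names are hypothetical. The resulting prompt would then be sent to a local model, for example one served by Ollama.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(text: str, size: int = 50) -> List[str]:
    """Split extracted PDF text into chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    """Ground the model's answer in the retrieved chunks."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"
```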

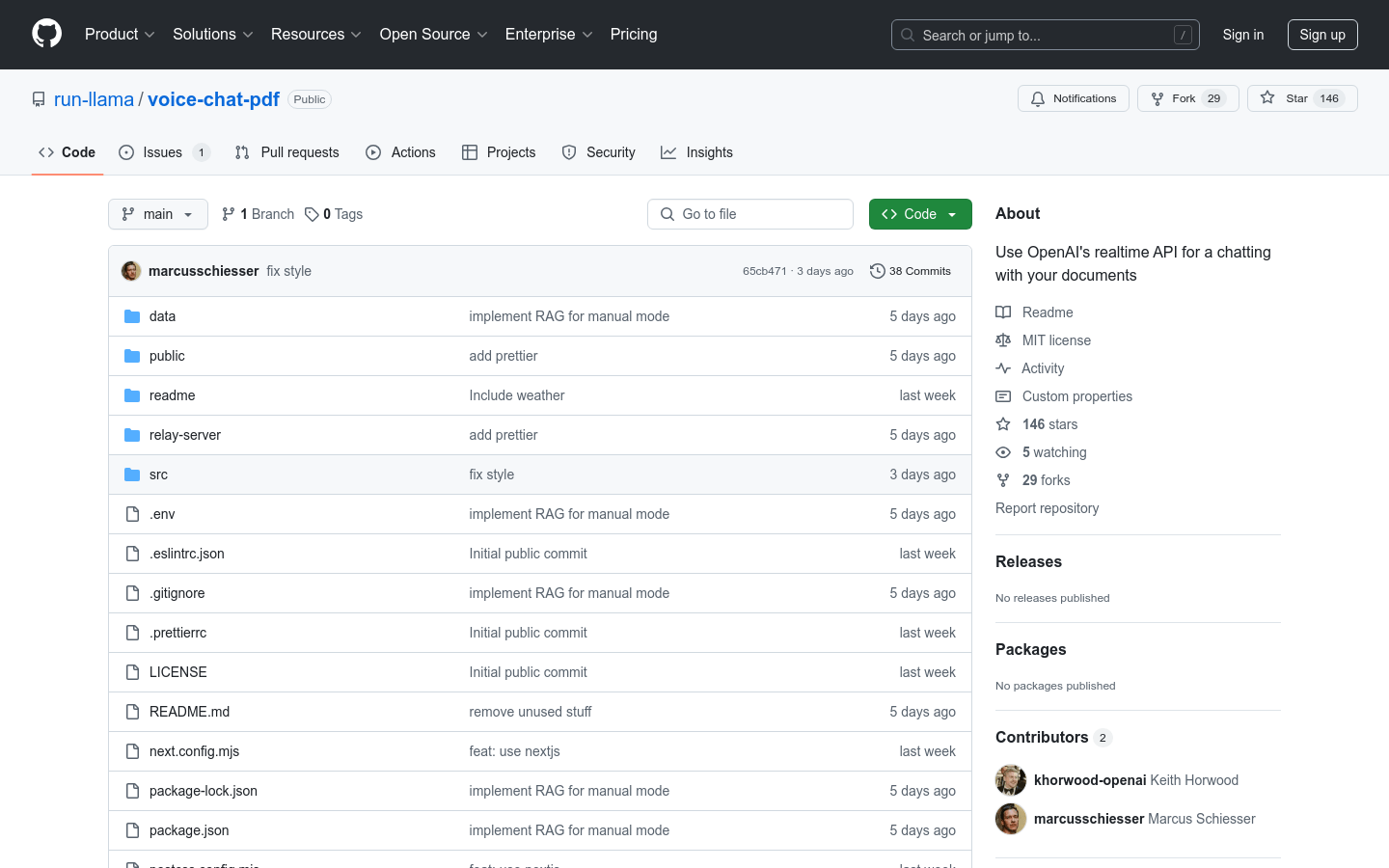

voice-chat-pdf

voice-chat-pdf is an example project built with LlamaIndex and Next.js. It lets users talk to PDF documents by voice through a simple RAG system. It requires an OpenAI API key, which is used to access the Realtime API and to generate embeddings for the project's documents. It demonstrates how these machine learning techniques can make interacting with documents faster and more convenient.

gradio-bot

gradio-bot turns Hugging Face Spaces or Gradio apps into Discord bots. With a few command-line operations, developers can deploy existing machine learning models or applications to Discord for automated interaction. This makes the applications more accessible and gives developers a direct channel to their users.

curiosity

curiosity is a chatbot project based on the ReAct framework that explores building a Perplexity-like user experience with a LangGraph and FastHTML stack. At its core is a simple ReAct agent that uses Tavily search to enhance text generation. It supports three LLMs (large language models): OpenAI's gpt-4o-mini, Groq's llama3-groq-8b-8192-tool-use-preview, and Ollama's llama3.1. The front end is built with FastHTML, which gives a fast user experience overall, although debugging can be challenging.
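A ReAct agent alternates between model reasoning steps and tool calls until the model produces a final answer. The loop below is a minimal sketch under assumed conventions: the `Action: tool[input]` and `Final Answer:` text format, the `react` function, and the `search` tool (a stand-in for Tavily) are illustrative and do not reflect curiosity's LangGraph implementation.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)", re.DOTALL)


def react(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Run a Thought -> Action -> Observation loop until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model emits a thought plus an action or final answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if not action:
            raise ValueError(f"could not parse model step: {step!r}")
        name, arg = action.groups()
        observation = tools[name](arg)  # run the tool and feed the result back
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")
```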

MemoryScope

MemoryScope is a framework that gives large language model (LLM) chatbots long-term memory. Chatbots store and retrieve memory fragments through a memory database and a worker library, which enables personalized interactions. Through operations such as memory retrieval and memory consolidation, it lets the bot learn and remember the user's habits and preferences, making conversations more personalized and coherent. MemoryScope supports several model APIs, including OpenAI and DashScope, and can be combined with existing agent frameworks such as AutoGen and AgentScope, which makes it highly customizable and extensible.
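The store-and-retrieve idea behind long-term chatbot memory can be illustrated with a toy class. `MemoryStore` is hypothetical and not MemoryScope's API: it ranks stored facts by word overlap with the query rather than the model-based retrieval a real system would use, and its only "consolidation" is skipping exact duplicates.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class MemoryStore:
    """Toy long-term memory: stores facts and recalls those sharing words with a query."""

    facts: List[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        if fact not in self.facts:  # naive consolidation: skip exact duplicates
            self.facts.append(fact)

    def recall(self, query: str, k: int = 3) -> List[str]:
        q = _words(query)
        scored = [(len(q & _words(f)), i, f) for i, f in enumerate(self.facts)]
        scored = [s for s in scored if s[0] > 0]  # drop facts with no shared words
        scored.sort(key=lambda s: (-s[0], -s[1]))  # most overlap first; newer facts win ties
        return [f for _, _, f in scored[:k]]
```

Recalled facts would typically be prepended to the chatbot's system prompt so the model can take the user's preferences into account.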

kotaemon

kotaemon is an open-source RAG (retrieval-augmented generation) tool for chatting with your documents. It supports multiple LLM API providers as well as local models, and offers a clean, customizable user interface that lets end users run document Q&A and developers build their own RAG pipelines.

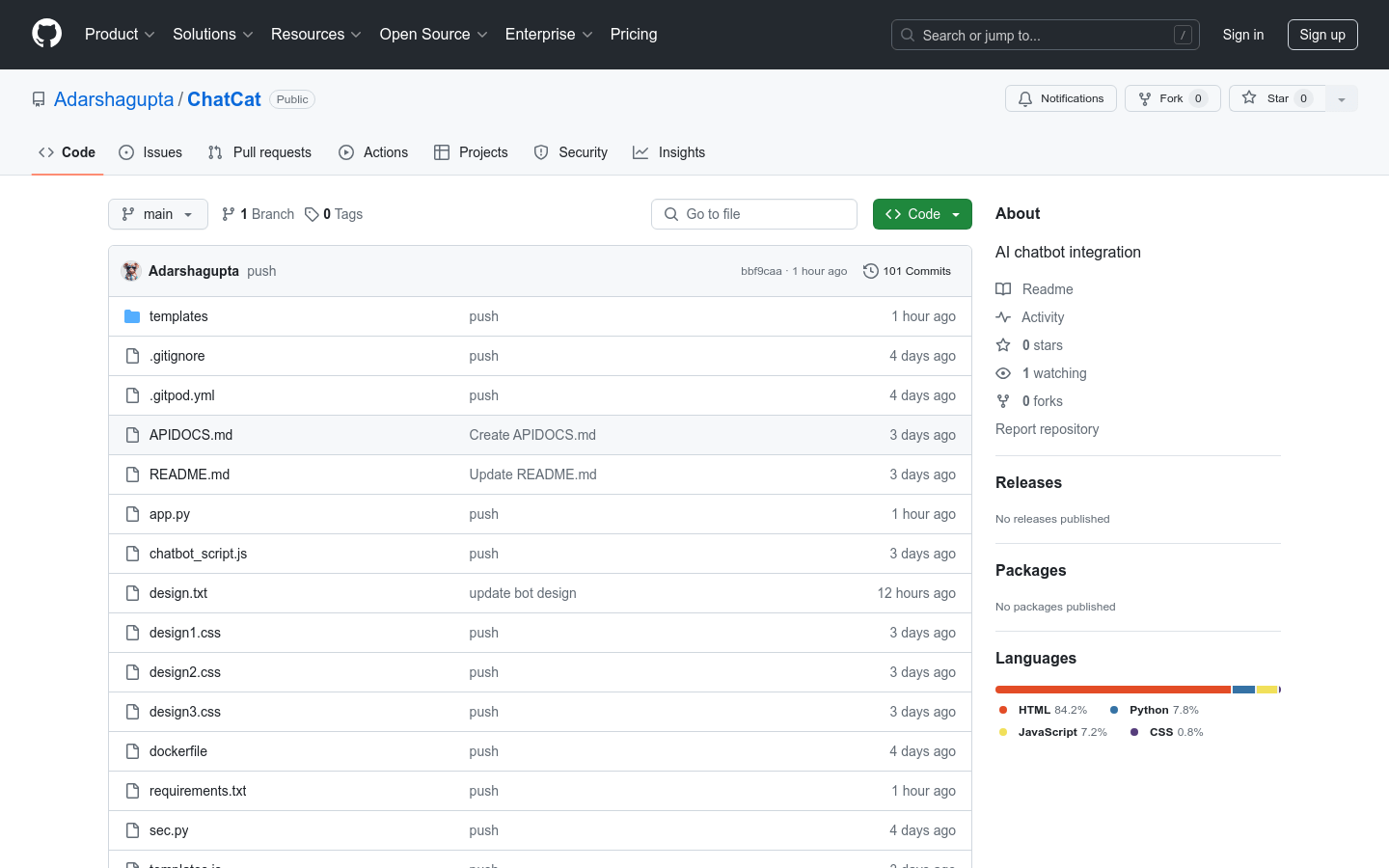

ChatCat

ChatCat is a web application for creating, deploying, and managing AI-powered chatbots. The chatbots draw on content extracted from user-provided URLs and give real-time, context-aware responses. The application uses the Together API for its AI capabilities.

Meta-Llama-3.1-405B-Instruct

Meta Llama 3.1 is a collection of multilingual, pretrained and instruction-tuned generative models in 8B, 70B, and 405B sizes. The models are optimized for multilingual dialogue and outperform many open and closed-source chat models on common industry benchmarks. They use an optimized transformer architecture and are tuned with supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to align with human preferences for helpfulness and safety.

Meta-Llama-3.1-8B-Instruct

Meta Llama 3.1 is a collection of pretrained and instruction-tuned multilingual large language models (LLMs) that support 8 languages. The models are optimized for dialogue use cases, and their safety and helpfulness are improved through supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF).

Typebot.io

Typebot is an open-source chatbot builder that lets users visually create advanced chatbots, embed them in any web or mobile application, and collect results in real time. It provides more than 34 building blocks (text, images, videos, audio, conditional branching, logic scripts, and more) and supports integrations such as webhooks, OpenAI, and Google Sheets. Typebot supports custom themes to match a brand's identity and provides in-depth analytics on chatbot performance.

Enchanted

Enchanted is an open-source, Ollama-compatible app for macOS, iOS, and visionOS that lets users chat with private, self-hosted language models such as Llama 2, Mistral, and Vicuna. It is essentially a ChatGPT-style interface connected to a private model. Enchanted aims to provide an unfiltered, secure, private, and multimodal experience across all devices in the iOS ecosystem (macOS, iOS, Watch, Vision Pro).

TalkWithGemini

TalkWithGemini is a cross-platform application that can be deployed for free with one click and lets users interact with Gemini models. It supports multimodal interaction such as image recognition and voice conversation to boost productivity.

GLM-4V-9B

GLM-4V-9B is a new-generation pretrained model from Zhipu AI. It supports multi-turn dialogue in Chinese and English at a high resolution of 1120×1120, along with visual understanding. In multimodal evaluations, GLM-4V-9B outperformed GPT-4-turbo-2024-04-09, Gemini 1.0 Pro, Qwen-VL-Max, and Claude 3 Opus.

GPT Computer Assistant

gpt-computer-assistant is an alternative ChatGPT application for Windows, macOS, and Ubuntu. It can be installed easily as a Python package, and native installers (.exe) are planned. It is powered by Upsonic Tiger, a platform that provides a feature hub for large language model (LLM) agents. Its key benefits are cross-platform compatibility, easy installation and use, and planned support for native models.

GLM-4-9B-Chat

GLM-4-9B-Chat is the open-source chat model in Zhipu AI's new-generation pretrained GLM-4 series. It offers advanced features such as multi-turn dialogue, web browsing, code execution, custom tool calling, and long-text reasoning. It supports 26 languages, including Japanese, Korean, and German, and a variant supporting a 1M context length has also been released.

CogVLM2

CogVLM2 is a second-generation multimodal pretrained dialogue model developed by a team at Tsinghua University. It shows significant improvements on multiple benchmarks and supports an 8K content length and 1344×1344 image resolution. The CogVLM2 series includes open-source versions supporting Chinese and English, with performance comparable to some closed-source models.