Cheating LLM Benchmarks

Research project exploring cheating in automated language model benchmarks.

Product Details

Cheating LLM Benchmarks is a research project that explores cheating in automatic large language model (LLM) benchmarks by building so-called "null models": models that return the same constant response regardless of the input. The project found experimentally that even such simple null models can achieve high win rates on these benchmarks, which calls the validity and reliability of existing benchmarks into question. This research matters for understanding the limitations of current language model evaluation and for improving benchmarking methods.
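To make the idea concrete, here is a minimal sketch, assuming a null model is one that ignores the prompt and always returns a fixed response. The `NullModel` class, the `toy_judge` function (a deliberately flawed judge that simply prefers longer answers), and the sample prompts are all hypothetical illustrations, not the project's actual code or a real LLM judge.

```python
from dataclasses import dataclass


@dataclass
class NullModel:
    """A 'null model': ignores the prompt and always returns one fixed response."""
    constant_response: str

    def generate(self, prompt: str) -> str:
        return self.constant_response


def toy_judge(prompt: str, answer_a: str, answer_b: str) -> str:
    """Hypothetical, deliberately flawed judge: prefers the longer answer.

    Real benchmarks use an LLM as the judge; this stand-in only illustrates
    how a judge with an exploitable bias can be gamed.
    """
    return "a" if len(answer_a) > len(answer_b) else "b"


def win_rate(model: NullModel, baseline_answers: dict[str, str]) -> float:
    """Fraction of prompts on which the judge prefers the model over the baseline."""
    wins = sum(
        toy_judge(prompt, model.generate(prompt), baseline) == "a"
        for prompt, baseline in baseline_answers.items()
    )
    return wins / len(baseline_answers)


# Made-up baseline answers that are correct but short.
baseline_answers = {
    "What is 2 + 2?": "4",
    "Name the capital of France.": "Paris",
    "Translate 'hello' into Spanish.": "Hola",
}
null_model = NullModel(
    "This response is identical for every prompt and is padded to look thorough."
)
rate = win_rate(null_model, baseline_answers)
print(f"Null model win rate: {rate:.0%}")
```

In this toy setup the constant response wins every comparison even though it answers none of the prompts, which is the kind of failure the project sets out to expose in automatic judges.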

Main Features

How to Use

Target Users

The target audience is primarily researchers and developers in natural language processing (NLP), along with technology enthusiasts interested in how language model performance is evaluated. The project gives them a way to test and understand how existing language models score on benchmarks and to explore how these testing methods can be improved.

Examples

Researchers use the project to test and analyze the performance of different language models on specific tasks.

Developers leverage the project's code and tools to build and evaluate their own language models.

Educational institutions may use this project as a teaching case to help students understand the complexities of language model evaluation.

Related Recommendations

Discover more similar quality AI tools

AutoArena

AutoArena is an automated generative AI evaluation platform focused on evaluating large language models (LLMs), retrieval-augmented generation (RAG) systems, and generative AI applications. It provides trusted assessments through automated head-to-head judgment, helping users find the best version of their system quickly, accurately, and cost-effectively. The platform supports the use of judgment models from different vendors, such as OpenAI, Anthropic, etc., and can also use locally run open source weight judgment models. AutoArena also provides Elo scores and confidence interval calculations to help users convert multiple head-to-head votes into leaderboard rankings. Additionally, AutoArena supports fine-tuning of custom judgment models for more accurate, domain-specific assessments and can be integrated into continuous integration (CI) processes to automate the assessment of generative AI systems.
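As a rough illustration of how head-to-head votes can be turned into a leaderboard, the sketch below applies the standard Elo update rule. It is not AutoArena's implementation: the function names, the starting rating of 1000, the K-factor of 32, and the sample votes are all assumptions made for this example, and confidence intervals are not covered.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation: probability that A beats B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Update both ratings in place after one head-to-head vote."""
    expected_win = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - expected_win)  # larger gain for an unexpected win
    ratings[winner] += delta
    ratings[loser] -= delta


# Hypothetical votes as (winner, loser) pairs from a judge.
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]

# Every system starts from the same assumed rating.
ratings = {name: 1000.0 for name in ("model_a", "model_b", "model_c")}
for winner, loser in votes:
    update_elo(ratings, winner, loser)

# Sort system names from highest to lowest rating.
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
print(leaderboard)
```

Because each update moves the same number of points from the loser to the winner, the total rating across all systems stays constant, and only the relative order changes as votes accumulate.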

SWE-bench Verified

SWE-bench Verified is a human-validated subset of SWE-bench released by OpenAI, designed to evaluate more reliably how well AI models solve real-world software issues. Given a code base and an issue description, the model must generate a patch that resolves the described problem. It was developed to improve the accuracy of assessments of a model's ability to complete software engineering tasks autonomously, and it is a key component of the Medium risk level in the OpenAI Preparedness Framework.

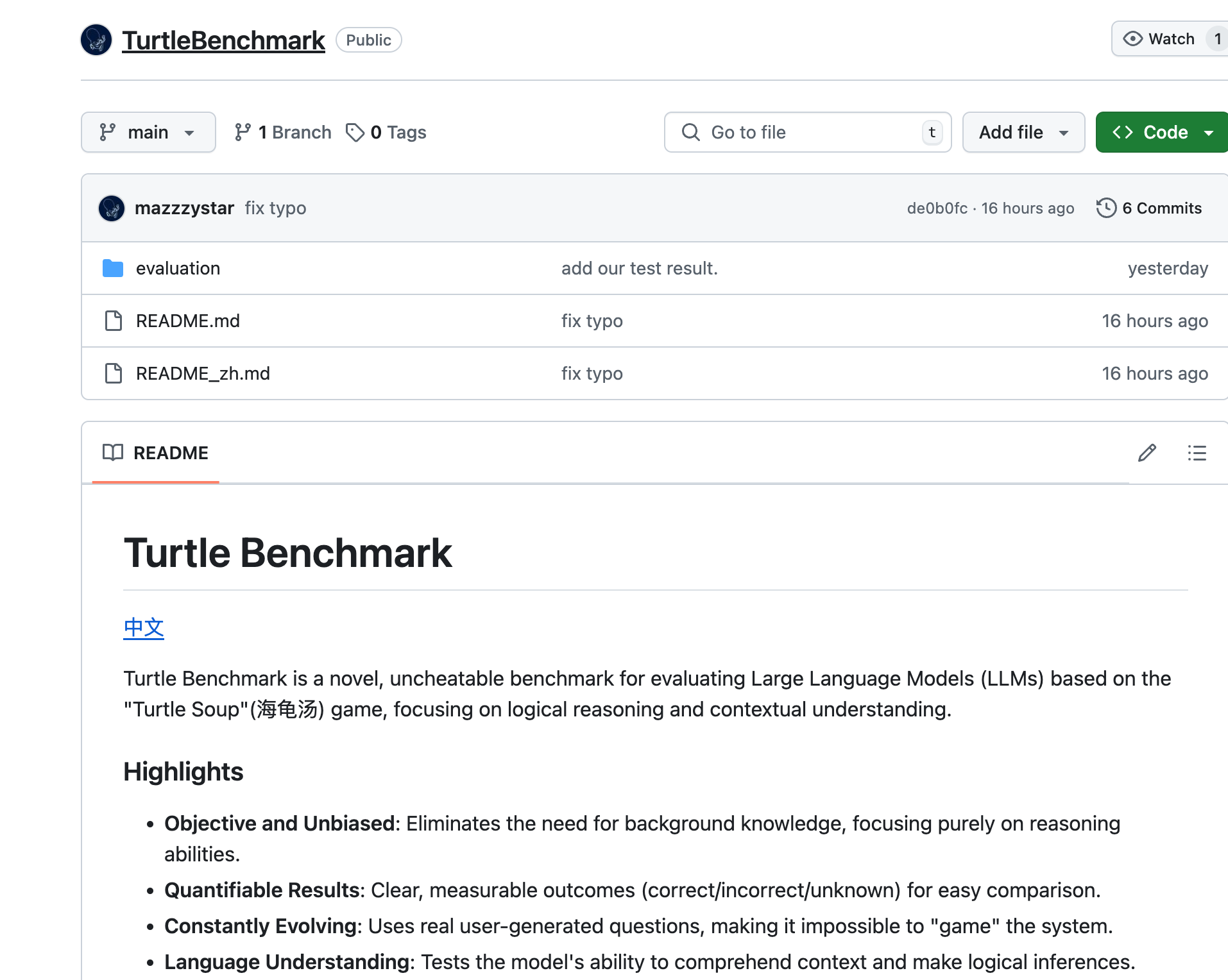

Turtle Benchmark

Turtle Benchmark is a new, uncheatable benchmark based on the 'Turtle Soup' game that evaluates the logical reasoning and context understanding of large language models (LLMs). It aims to provide objective, unbiased results: it requires no background knowledge, its results are quantifiable, and it uses real user-generated questions so that the benchmark cannot be gamed.

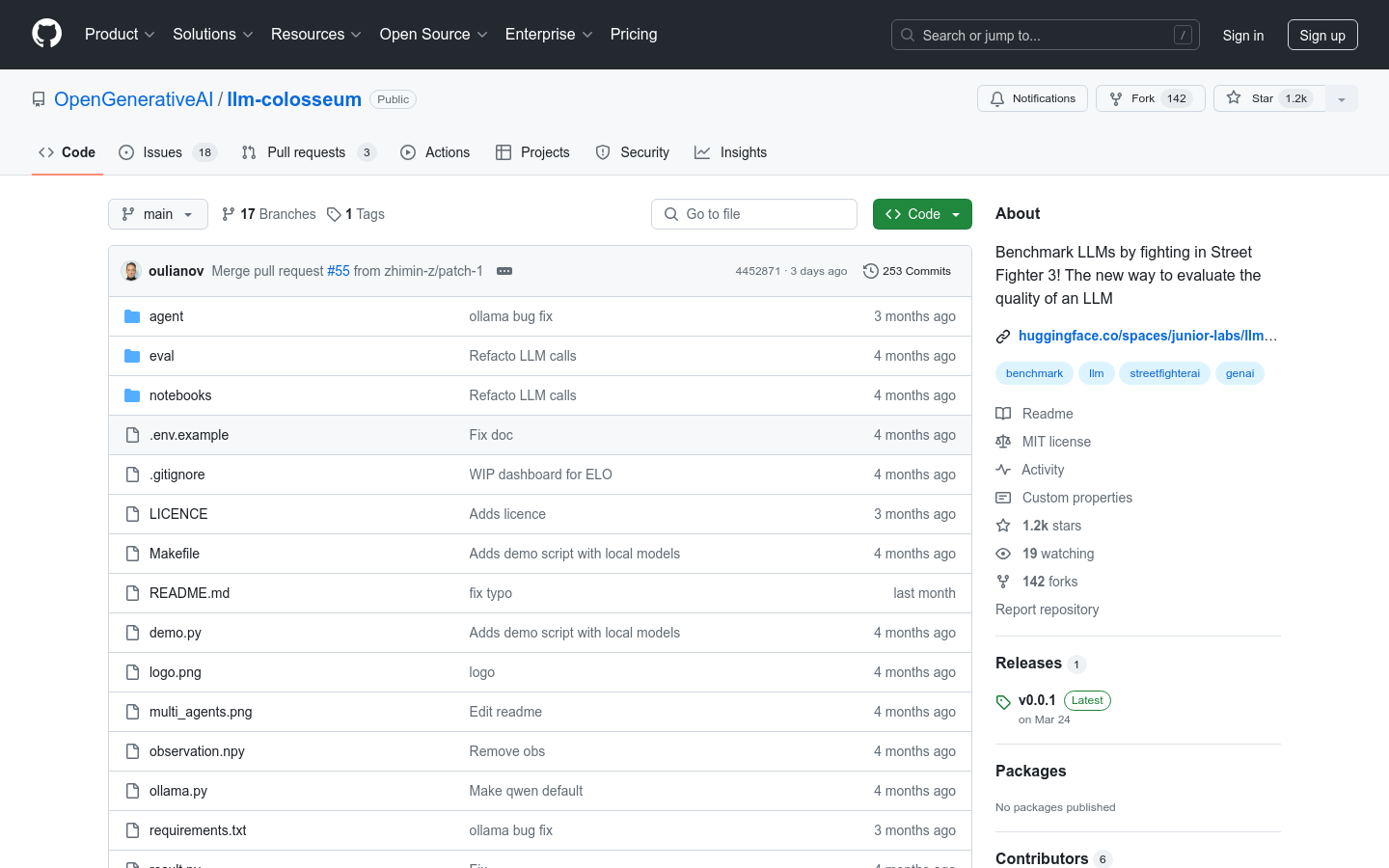

llm-colosseum

llm-colosseum is an innovative benchmarking tool that uses the Street Fighter 3 game to evaluate the real-time decision-making capabilities of large language models (LLMs). Unlike traditional benchmarks, this tool tests a model's quick reactions, smart strategies, innovative thinking, adaptability, and resilience by simulating actual game scenarios.

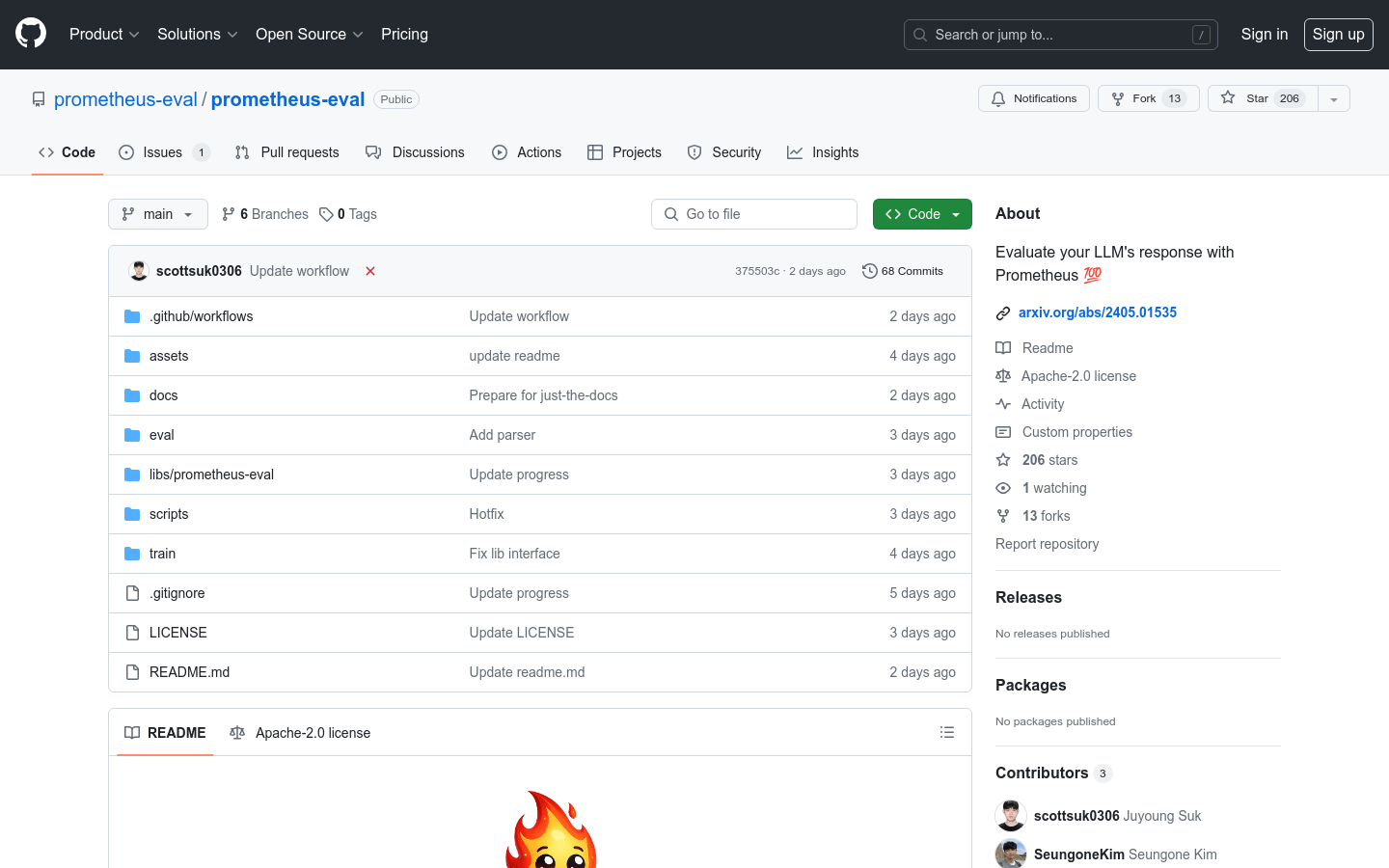

Prometheus-Eval

Prometheus-Eval is an open-source toolset for evaluating the performance of large language models (LLMs) on generation tasks. It provides a simple interface for evaluating instruction and response pairs using Prometheus models. The Prometheus 2 model supports direct assessment (absolute scoring) and pairwise ranking (relative scoring), can simulate both human judgment and evaluation based on proprietary language models, and addresses concerns about fairness, controllability, and affordability.

Deepmark AI

Deepmark AI is a benchmarking tool for evaluating large language models (LLMs) against a variety of task-specific metrics on your own data. It comes pre-integrated with leading generative AI APIs such as GPT-4, Anthropic, GPT-3.5 Turbo, Cohere, AI21, and more.

deepeval

DeepEval provides a range of metrics for evaluating an LLM's answers to questions, checking that the answers are relevant, consistent, unbiased, and non-toxic. The metrics integrate well with CI/CD pipelines, allowing machine learning engineers to quickly check whether an LLM application is performing well as they improve it. DeepEval offers a Python-friendly offline evaluation method to ensure your pipeline is ready for production. It is like "Pytest for your pipelines", making the process of producing and evaluating your pipelines as simple and straightforward as passing all your tests.

Cognitora

Cognitora is a next-generation cloud platform designed for AI agents. Unlike traditional container platforms, it uses high-performance micro-virtual machines such as Cloud Hypervisor and Firecracker to provide a secure, lightweight, and fast AI-native computing environment. It can execute AI-generated code, automate intelligent workloads at scale, and bridge the gap between AI inference and real-world execution, giving AI agents the computing and operational support they need to run more efficiently and safely. Key benefits include high performance, secure isolation, fast boot times, multi-language support, and advanced SDKs and tools. The platform is aimed at AI developers and enterprises. In terms of pricing, users who register receive 5,000 free points for testing.

Macroscope

Macroscope is a programming efficiency tool for R&D teams. It has received US$30 million in Series A financing and has been publicly launched. Its core functions focus on code management and R&D process optimization. By analyzing the code base to build a knowledge graph and integrating with a multi-tool ecosystem, it addresses two pain points: engineers being burdened with non-development work, and managers having difficulty tracking R&D progress. Its technical advantage lies in multi-model collaboration (such as the combination of OpenAI o4-mini-high and Anthropic Opus 4) to ensure the accuracy of code review. Customer data is isolated and encrypted, the service is SOC 2 Type II compliant, and the company promises not to use customer code to train models. Pricing is divided into Teams ($30/developer/month, at least 5 seats) and Enterprise (custom pricing) packages, targeting small and medium-sized R&D teams and large enterprises with customization needs.

100 Vibe Coding

100 Vibe Coding is an educational programming website focused on quickly building small web projects through AI technology. It skips complicated theories and focuses on practical results, making it suitable for beginners who want to quickly create real projects.

iFlow CLI

iFlow CLI is an interactive terminal command line tool designed to simplify the interaction between developers and terminals and improve work efficiency. It supports a variety of commands and functions, allowing users to quickly perform commands and management tasks. The key benefits of iFlow CLI include ease of use, flexibility, and customizability, making it suitable for a variety of development environments and project needs.

Never lose your work again

Claude Code Checkpoint is a companion app for developers who work with Claude AI. It keeps your code safe by seamlessly tracking all code changes, so that work is never lost.

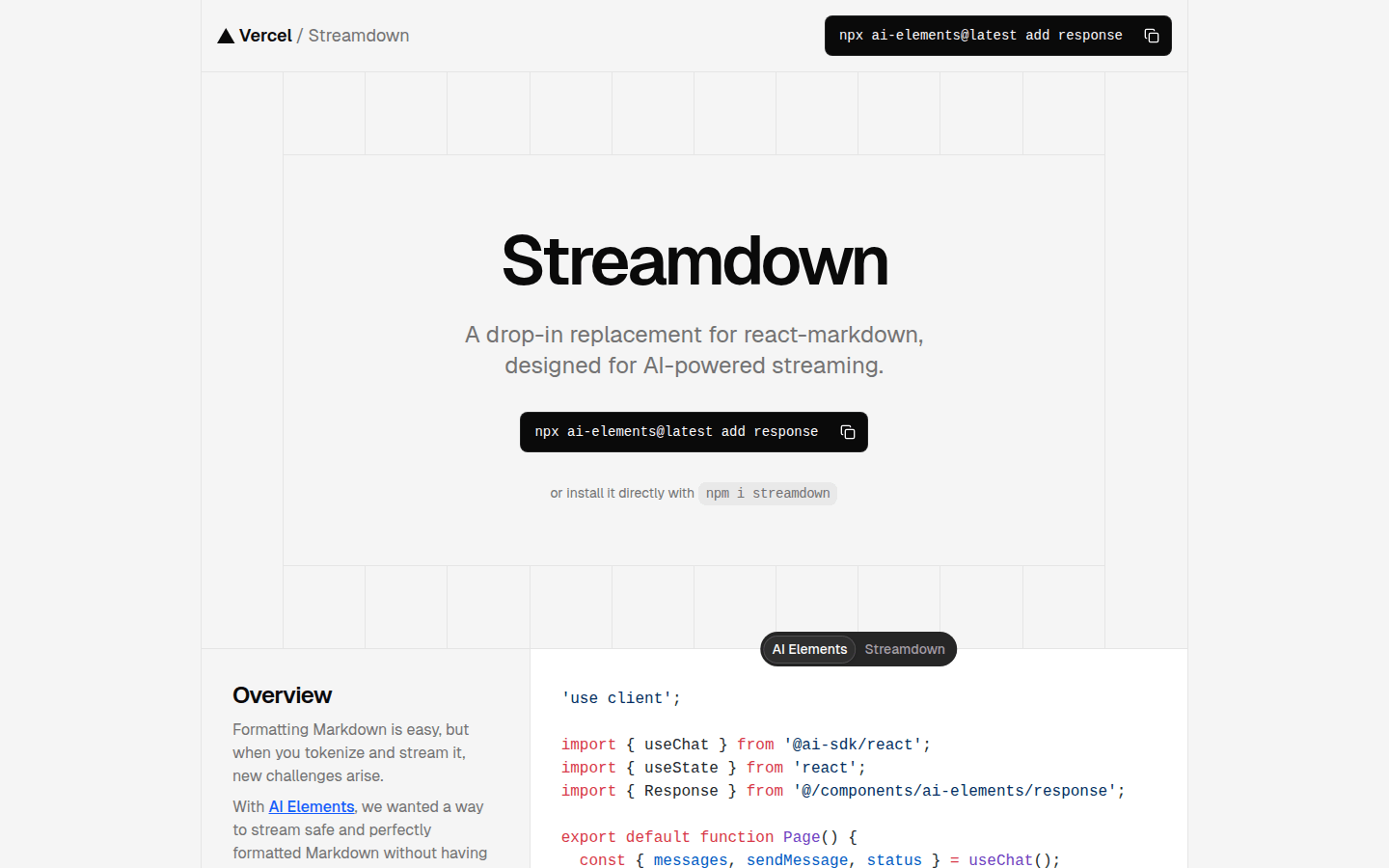

Streamdown

Streamdown is a drop-in replacement for React Markdown designed for AI-driven streaming. It addresses the new challenges that arise when Markdown is rendered while it is still streaming, ensuring safe and well-formatted Markdown content. Key advantages include AI-driven streaming, built-in security, support for GitHub Flavored Markdown, and more.

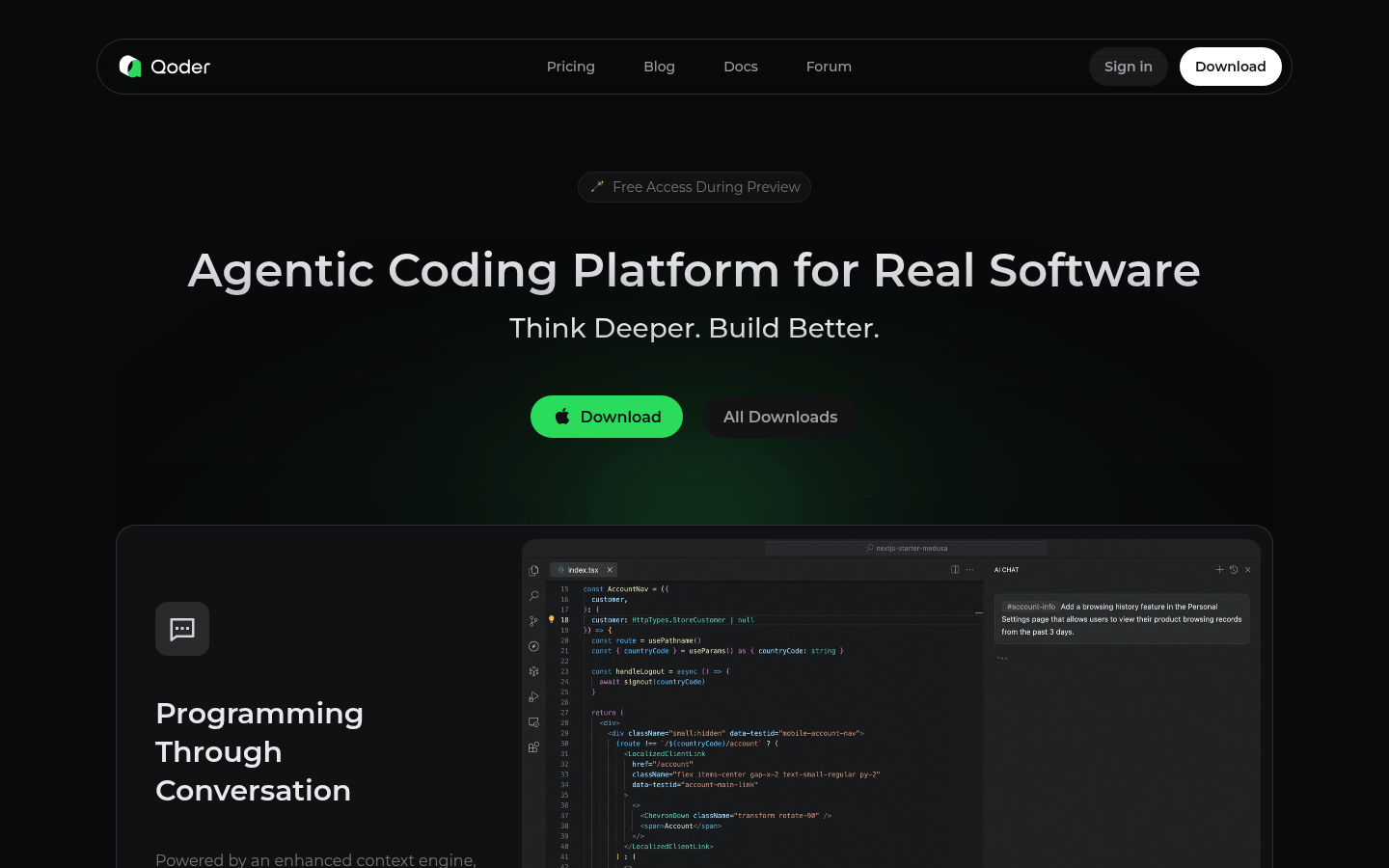

Qoder

Qoder is an agentic coding platform that combines an enhanced context engine with intelligent agents to gain a comprehensive understanding of your code base and handle software development tasks systematically. It supports leading AI models such as Claude, GPT, and Gemini, and is available for Windows and macOS.

Compozy

Compozy is an enterprise-grade platform that uses declarative YAML to provide scalable, reliable and cost-effective distributed workflows, simplifying complex fan-out, debugging and monitoring for production-ready automation.