Animate-X

A universal character image animation framework that supports animation generation of multiple character types.

Product Details

Animate-X is a universal LDM-based animation framework for various character types (collectively referred to as X), including human mimic characters. This framework enhances motion representation by introducing pose indicators, which can more comprehensively capture motion patterns from driving videos. Key benefits of Animate-X include in-depth modeling of motion, the ability to understand the motion patterns driving video and flexibly apply them to target characters. In addition, Animate-X introduces a new Animated Anthropomorphic Benchmark (A2Bench) to evaluate its performance on general and widely applicable animated images.

Main Features

How to Use

Target Users

The target audience of Animate-X is mainly professionals in the gaming, entertainment and animation production industries, as well as academics conducting related research. These users usually need to convert static character images into dynamic videos to enhance the expressiveness and interactivity of the characters. The advanced animation generation functions provided by Animate-X enable these users to easily create realistic animations for various characters, thus improving their work efficiency and product quality.

Examples

Game developers use Animate-X to create dynamic promotional videos for game characters.

Animators use Animate-X to transform comic book characters into dynamic images for social media promotion.

The film production team used Animate-X to create realistic character animation effects for movie trailers.

Quick Access

Visit Website →Categories

Related Recommendations

Discover more similar quality AI tools

DressRecon

DressRecon is a method for reconstructing temporally consistent 4D human models from monocular videos, focusing on handling very loose clothing or handheld object interactions. The technique combines general prior knowledge of the human body (learned from large-scale training data) with specific "bag-of-skeleton" deformations for individual videos (fitted through test-time optimization). DressRecon separates body and clothing deformations by learning a neural implicit model as separate motion model layers. To capture the subtle geometry of clothing, it leverages image-based prior knowledge such as human pose, surface normals, and optical flow to adjust during the optimization process. The generated neural fields can be extracted into temporally consistent meshes or further optimized into explicit 3D Gaussians to improve rendering quality and enable interactive visualization. DressRecon delivers higher 3D reconstruction fidelity than previous techniques on datasets containing highly challenging clothing deformations and object interactions.

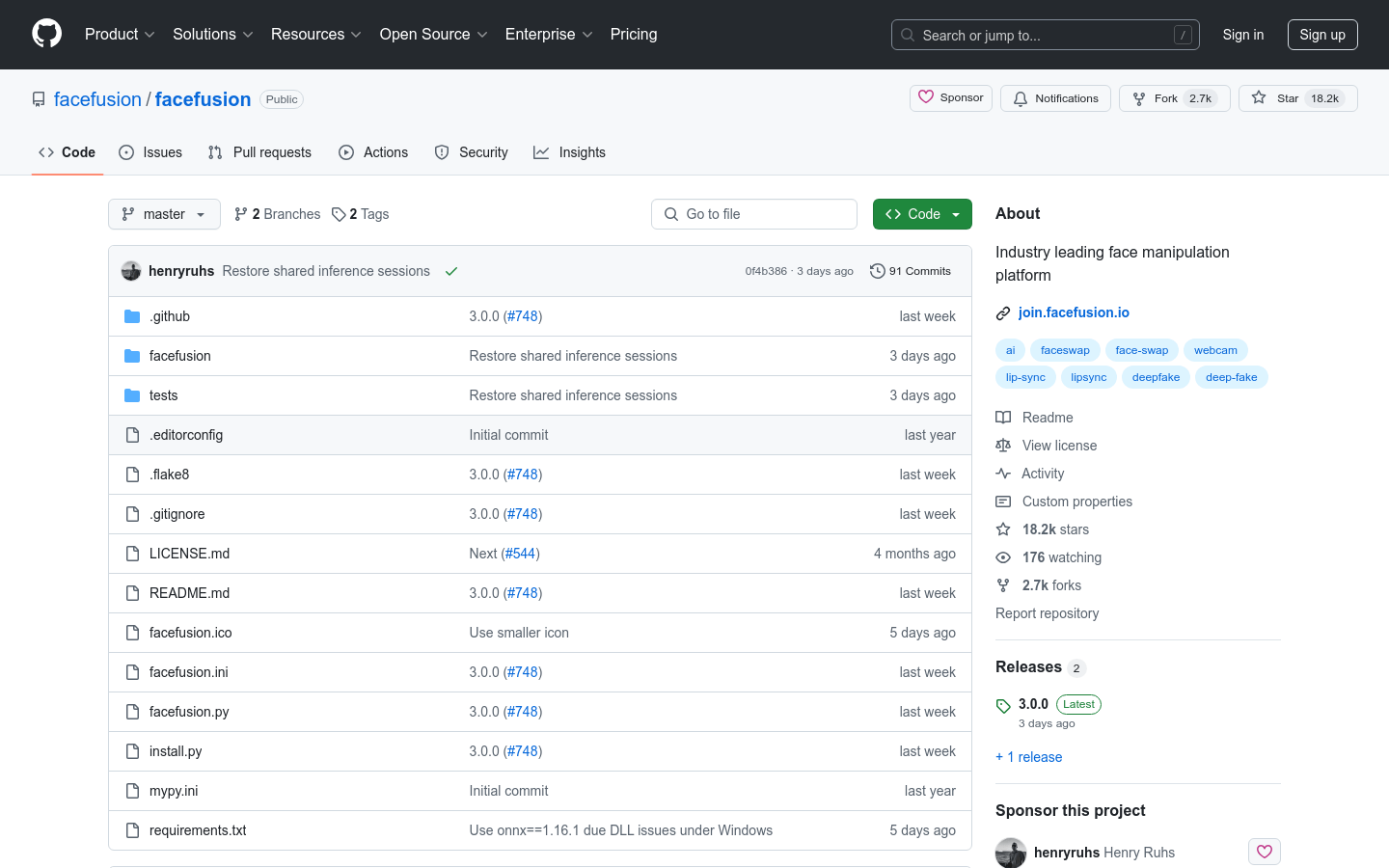

FaceFusion

FaceFusion is an industry-leading facial manipulation platform specializing in face swapping, lip sync, and deep manipulation technologies. It utilizes advanced artificial intelligence technology to provide users with a highly realistic facial operation experience. FaceFusion has a wide range of applications in image processing and video production, especially in the entertainment and media industries.

PortraitGen

PortraitGen is a 2D portrait video editing tool based on multi-modal generation priors. It can upgrade 2D portrait videos to 4D Gaussian fields to achieve multi-modal portrait editing. This technology can quickly generate and edit 3D portraits by tracking SMPL-X coefficients and using a neural Gaussian texture mechanism. It also proposes an iterative dataset update strategy and multi-modal face-aware editing module to improve expression quality and maintain personalized facial structure.

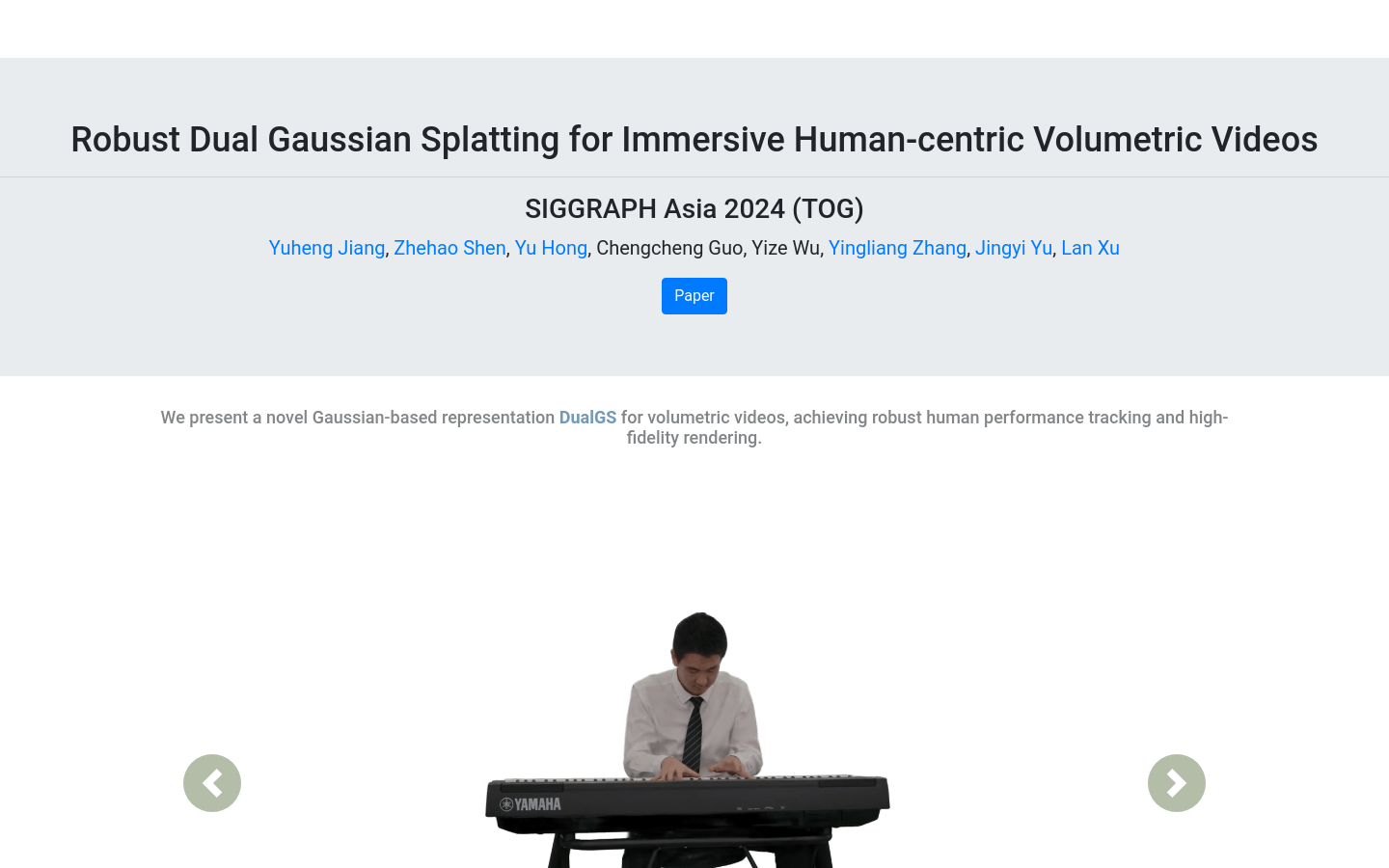

DualGS

Robust Dual Gaussian Splatting (DualGS) is a novel Gaussian-based volumetric video representation method that captures complex human performances by optimizing joint Gaussians and skin Gaussians and achieves robust tracking and high-fidelity rendering. Demonstrated at SIGGRAPH Asia 2024, the technology enables real-time rendering on low-end mobile devices and VR headsets, providing a user-friendly and interactive experience. DualGS achieves a compression ratio of up to 120 times through a hybrid compression strategy, making the storage and transmission of volumetric videos more efficient.

Svd Keyframe Interpolation

Svd Keyframe Interpolation is a keyframe interpolation model based on singular value decomposition (SVD) technology, which is used to automatically generate intermediate frames in animation production, thereby improving the work efficiency of animators. This technology automatically calculates the images of intermediate frames by analyzing the characteristics of key frames, making the animation more smooth and natural. Its advantage is that it reduces the animator's workload of manually drawing in-between frames while maintaining high-quality animation effects.

Generative Keyframe Interpolation with Forward-Backward Consistency

This product is an image-to-video diffusion model that can generate continuous video sequences with coherent motion from a pair of key frames through lightweight fine-tuning technology. This method is particularly suitable for scenarios where a smooth transition animation needs to be generated between two static images, such as animation production, video editing, etc. It leverages the power of large-scale image-to-video diffusion models by fine-tuning them to predict videos between two keyframes, achieving forward and backward consistency.

ComfyUI-AdvancedLivePortrait

ComfyUI-AdvancedLivePortrait is an advanced tool for real-time preview and editing of facial expressions. It allows users to track and edit faces in videos, insert expressions into videos, and even extract expressions from sample photos. This project simplifies the installation process by automating the installation using ComfyUI-Manager. It combines image processing and machine learning technologies to provide users with a powerful tool for creating dynamic and interactive media content.

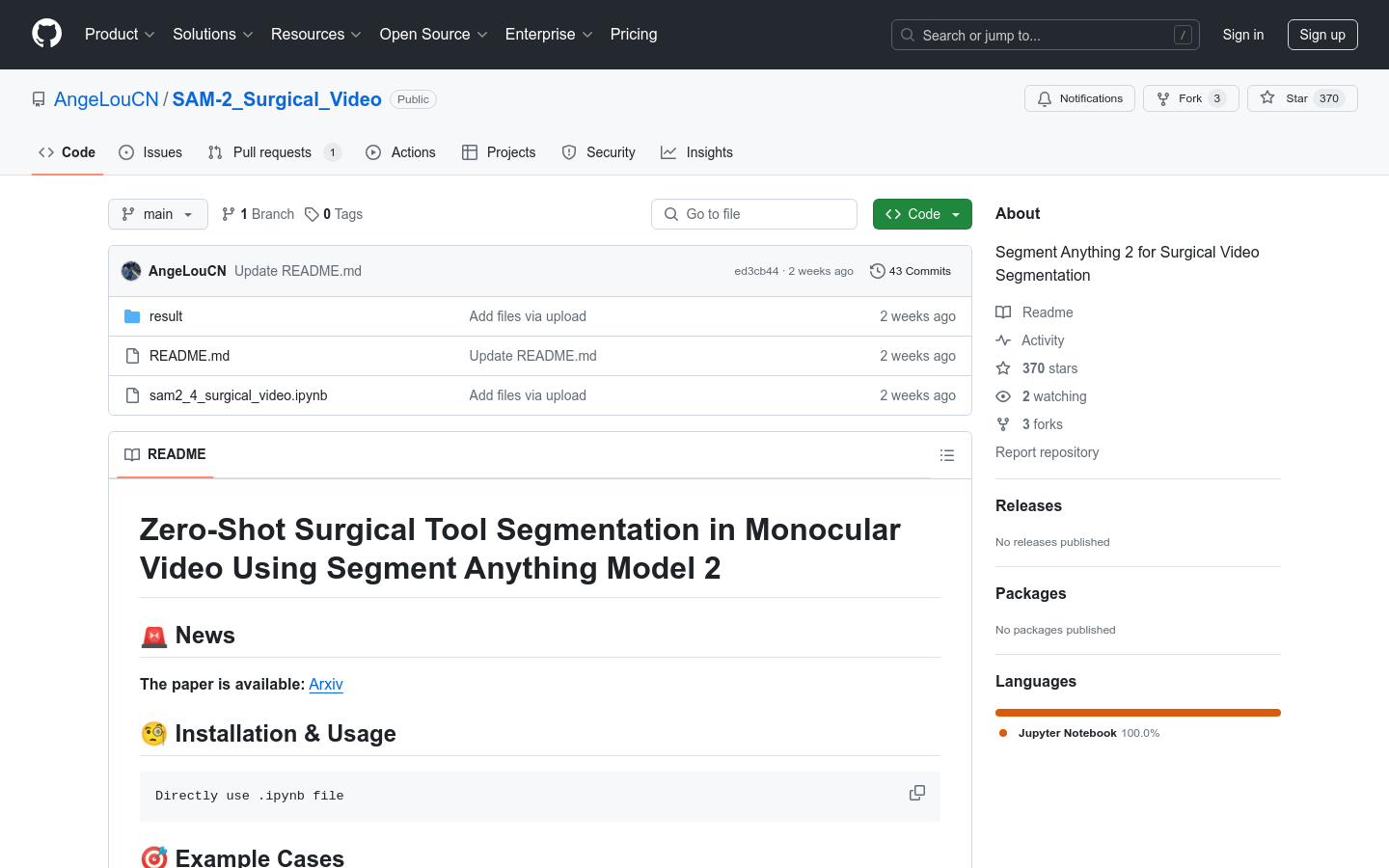

Segment Anything 2 for Surgical Video Segmentation

Segment Anything 2 for Surgical Video Segmentation is a surgical video segmentation model based on Segment Anything Model 2. It uses advanced computer vision technology to automatically segment surgical videos to identify and locate surgical tools, improving the efficiency and accuracy of surgical video analysis. This model is suitable for various surgical scenarios such as endoscopic surgery and cochlear implant surgery, and has the characteristics of high accuracy and high robustness.

Live_Portrait_Monitor

Live_Portrait_Monitor is an open source project designed to animate portraits via a monitor or webcam. This project is based on the LivePortrait research paper and uses deep learning technology to efficiently implement portrait animation through splicing and redirection control. The author is actively updating and improving this project and is for research use only.

Comfyui LivePortrait

LivePortrait is an efficient tool for portrait animation that transforms static images into vivid animations through splicing and redirection control technology. This technology is of great significance in the fields of image processing and animation production, and can greatly improve the efficiency and quality of animation production. Product background information shows that it was developed by shadowcz007 and used in conjunction with comfyui-mixlab-nodes to better achieve portrait animation effects.

MASA

MASA is an advanced model for object matching in video frames, which is capable of handling multi-object tracking (MOT) in complex scenes. MASA does not rely on domain-specific annotated video data sets, but learns instance-level correspondences through rich object segmentation through the Segment Anything Model (SAM). MASA has designed a universal adapter that can be used with basic segmentation or detection models to achieve zero-shot tracking capabilities that can perform well even in complex domains.

CameraCtrl

CameraCtrl is dedicated to providing precise camera pose control for text generation video models, and achieves camera control in the video generation process by training camera encoders to achieve parameterized camera trajectories. By comprehensively studying the effects of various data sets, the product proves that videos with diverse camera distributions and similar appearances can enhance controllability and generalization capabilities. Experiments have proven that CameraCtrl is very effective in achieving precise, domain-adaptive camera control, and is an important advance in enabling dynamic, customized video storytelling from text and camera gesture input.

GoEnhance

GoEnhance AI is a video-to-video, image enhancement and upscaling platform. It can convert your videos into many different styles of animation, including pixel and flat animation. Through AI technology, it is able to enhance and upgrade images to the ultimate level of detail. Whether it's personal creation or commercial application, GoEnhance AI provides you with powerful image and video editing tools.

Move API

Move API can convert videos containing human body movements into 3D animation assets, supports converting video files to usdz, usdc and fbx file formats, and provides preview videos. Ideal for integrating into production workflow software, enhancing application motion capture capabilities, or creating new experiences.

VisFusion

VisFusion is a technology that uses video data for online 3D scene reconstruction. It can extract and reconstruct a 3D environment from videos in real time. This technology combines computer vision and deep learning to provide users with a powerful tool for creating accurate 3D models.

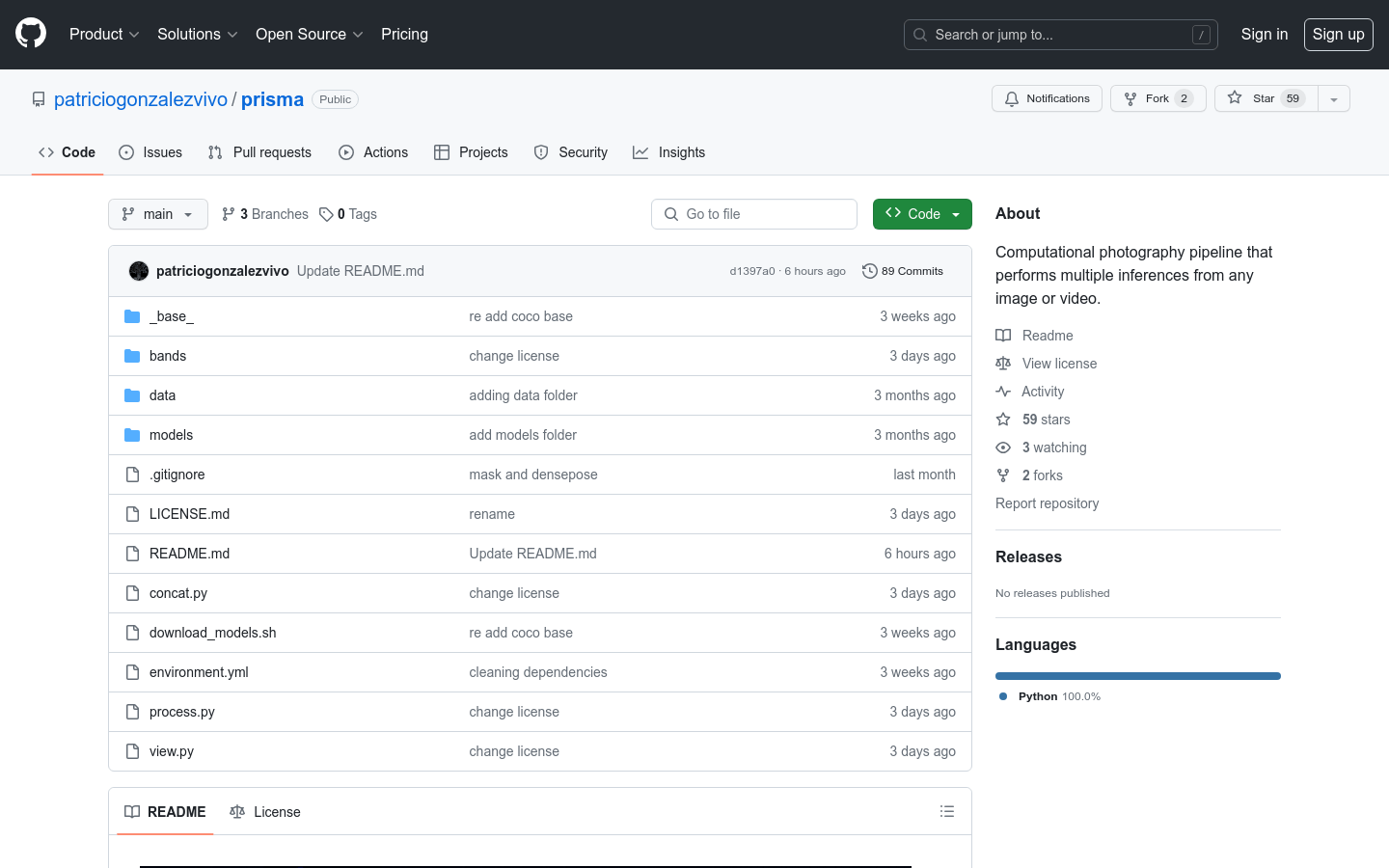

PRISMA

PRISMA is a computational photography pipeline that can perform multiple inferences from any image or video. Just like light refracted into different wavelengths through a prism, this pipeline expands the image into data that can be used for 3D reconstruction or real-time post-processing operations. It combines different algorithms and open source pre-trained models, such as monocular depth (MiDAS v3.1, ZoeDepth, Marigold, PatchFusion), optical flow (RAFT), segmentation mask (mmdet), camera pose (colmap), etc. The resulting bands are stored in a folder with the same name as the input files, with each band stored individually as a .png or .mp4 file. For videos, in the last step, it tries to perform sparse reconstruction, which can be used for NeRF (such as NVidia's Instant-ngp) or Gaussian diffusion training. The inferred depth information is exported by default as a heat map that can be decoded in real time using LYGIA's heatmap GLSL/HLSL sampling, while the optical flow is encoded as HUE (angle) and saturation, which can also be decoded in real time using LYGIA's optical flow GLSL/HLSL sampler.