GPTACG

Stable and reliable relay API service

Product Details

The GPTACG relay API forwards requests to the official OpenAI API. It focuses on stability, which makes it suitable for applications with high stability requirements. The service aims to give users enterprise-grade stability, access free of regional restrictions, very high concurrency, and good value for money, and it promises not to collect users' requests or responses. Pricing is tiered by purchase amount: for example, single purchases under $500 and single purchases of $500 or more are charged at different rates.
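Because the service forwards to the official OpenAI API, a client would typically keep the OpenAI request format and change only the base URL. The sketch below builds such a request with Python's standard library without sending it. The relay URL `https://relay.example.com/v1` is a placeholder, since the listing gives no endpoint. The `/chat/completions` path, Bearer header, and example model name follow OpenAI's public API conventions and are not taken from any GPTACG documentation.

```python
import json
import urllib.request

# Hypothetical relay base URL: the real GPTACG endpoint is not given in the listing.
RELAY_BASE_URL = "https://relay.example.com/v1"


def build_chat_request(api_key: str, prompt: str, model: str = "gpt-4o-mini",
                       base_url: str = RELAY_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,  # example model name; use whatever the relay supports
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("sk-your-key", "Hello!")
print(req.full_url)  # https://relay.example.com/v1/chat/completions
# urllib.request.urlopen(req) would actually send it; omitted here.
```

Keeping the base URL as a parameter means switching between the relay and the official endpoint is a one-argument change.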

Target Users

The target audience is enterprises and developers who need stable API services, especially those with high requirements for API stability. The GPTACG relay API's high stability, high-concurrency handling, and technical support make it an ideal choice for these users.

Examples

Enterprise users use the GPTACG relay API for big data analysis and processing.

Developers use the API to build chatbots with intelligent conversation features.

Researchers use the API for natural language processing research, improving research efficiency.

Related Recommendations

Discover more AI tools of similar quality

Ministral-8B-Instruct-2410

Ministral-8B-Instruct-2410 is a large language model developed by the Mistral AI team for local intelligence, on-device computing, and edge use cases. It performs well among models of similar size, supports a 128k context window with an interleaved sliding-window attention mechanism, was trained on multilingual and code data, supports function calling, and has a vocabulary of 131k tokens. It scores well across benchmarks covering knowledge and commonsense, code and mathematics, and multilingual tasks. It performs particularly well on chat/arena evaluations judged by GPT-4o, where it handles complex conversations and tasks.
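Sliding-window attention restricts each query position to the most recent keys instead of the whole prefix. The sketch below builds a causal sliding-window mask. The "interleaved" part is illustrated by letting different layers use different window sizes. The per-layer window values (4 and 8) and the alternating pattern are hypothetical and are not Ministral's actual configuration.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j:
    causal (j <= i) and within the last `window` positions."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


# Hypothetical interleaving: layers alternate between a short and a longer window.
LAYER_WINDOWS = [4, 8]


def window_for_layer(layer: int) -> int:
    return LAYER_WINDOWS[layer % len(LAYER_WINDOWS)]


mask = sliding_window_mask(6, window_for_layer(0))
for row in mask:
    print("".join("x" if ok else "." for ok in row))
```

Each row has at most `window` allowed keys, so per-token attention cost stays bounded even as the sequence grows.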

Aria

Aria is a native multimodal mixture-of-experts (MoE) model with strong performance on multimodal, language, and coding tasks. It excels at video and document understanding, supports multimodal inputs of up to 64K tokens, and can caption a 256-frame video in 10 seconds. Aria has 25.3B parameters and can be loaded in bfloat16 precision on a single A100 (80GB) GPU. It was developed to meet the need for multimodal data understanding, particularly in video and document processing, and is released as an open-source model intended to advance multimodal AI.
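The single-GPU claim can be sanity-checked with back-of-the-envelope arithmetic. bfloat16 stores each parameter in 2 bytes, so the weights alone take about 50.6 GB. That leaves roughly 29 GB of the A100's 80 GB for activations and caches. This counts weights only and is an estimate, not a measured footprint.

```latex
\underbrace{25.3 \times 10^{9}}_{\text{parameters}} \times \underbrace{2\ \text{bytes}}_{\text{bfloat16}}
  = 50.6 \times 10^{9}\ \text{bytes} \approx 50.6\ \text{GB} < 80\ \text{GB}
```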

Open-O1

Open O1 is an open-source project that aims to match the capabilities of OpenAI's proprietary o1 model through open-source innovation. By curating a set of o1-style thinking data to train LLaMA and Qwen models, the project gives these smaller models stronger long-chain reasoning and problem-solving abilities. As the project progresses, the team plans to keep pushing what is possible with large language models. Its stated vision is a model that not only achieves o1-like performance but also leads in test-time scalability, making advanced AI capabilities available to everyone. Through community-driven development and a commitment to ethical practices, the project aims to become a cornerstone of AI progress and to keep the technology's future development open and beneficial to all.

GRIN-MoE

GRIN-MoE is a mixture-of-experts (MoE) model developed by Microsoft that focuses on improving model performance in resource-constrained environments. It uses SparseMixer-v2 to estimate the gradient of expert routing. Compared with conventional MoE training, GRIN-MoE scales model training without relying on expert parallelism or token dropping. It performs especially well on coding and math tasks and suits scenarios that require strong reasoning.
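To make "expert routing" concrete, here is a generic top-k MoE forward step: a router scores every expert, only the k best run, and their outputs are mixed by renormalized weights. This is a plain top-2 router for illustration. It is not SparseMixer-v2, which concerns how gradients are estimated through this discrete selection during training. The toy experts and logits are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-probability experts,
    with weights renormalized to sum to 1 over the selected experts."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of only the selected experts (sparse compute)."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy scalar "experts" standing in for feed-forward blocks.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
logits = [0.1, 2.0, 1.0, -1.0]
print(top_k_route(logits))
print(moe_forward(3.0, experts, logits))
```

The selection step (`sorted(...)[:k]`) has no useful gradient, which is the problem that gradient estimators such as SparseMixer-v2 address.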

OneGen

OneGen is an efficient one-pass generation and retrieval framework for fine-tuning large language models (LLMs) on generation, retrieval, or hybrid tasks. Its core idea is to unify generation and retrieval in the same context. Retrieval is assigned to special retrieval tokens that are generated autoregressively, so the LLM performs both tasks in a single forward pass. This reduces deployment costs and also significantly reduces inference costs, because it avoids running two separate forward passes over the query.
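The single-pass idea can be sketched as follows: after one forward pass, the hidden state at each retrieval-token position is reused directly as a query embedding and matched against document embeddings. The token name `[RQ]`, the 2-dimensional hidden states, and the document vectors are all hypothetical stand-ins for a real model's outputs. Cosine similarity is an assumed scoring choice.

```python
import math

RETRIEVAL_TOKEN = "[RQ]"  # hypothetical name for the special retrieval token


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve_from_pass(tokens, hidden_states, doc_embeddings):
    """Given the tokens and per-position hidden states of ONE forward pass, use
    the hidden state at each retrieval token as a query; return best doc ids."""
    hits = []
    for tok, h in zip(tokens, hidden_states):
        if tok == RETRIEVAL_TOKEN:
            best = max(doc_embeddings, key=lambda d: cosine(h, doc_embeddings[d]))
            hits.append(best)
    return hits


tokens = ["Who", "wrote", "Hamlet", "?", RETRIEVAL_TOKEN]
hidden = [[0.1, 0.0], [0.0, 0.2], [0.9, 0.1], [0.0, 0.0], [0.8, 0.2]]  # toy values
docs = {"shakespeare": [1.0, 0.1], "python": [0.0, 1.0]}
print(retrieve_from_pass(tokens, hidden, docs))  # ['shakespeare']
```

No second encoder pass over the query is needed, because the query vector is already a by-product of generation.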

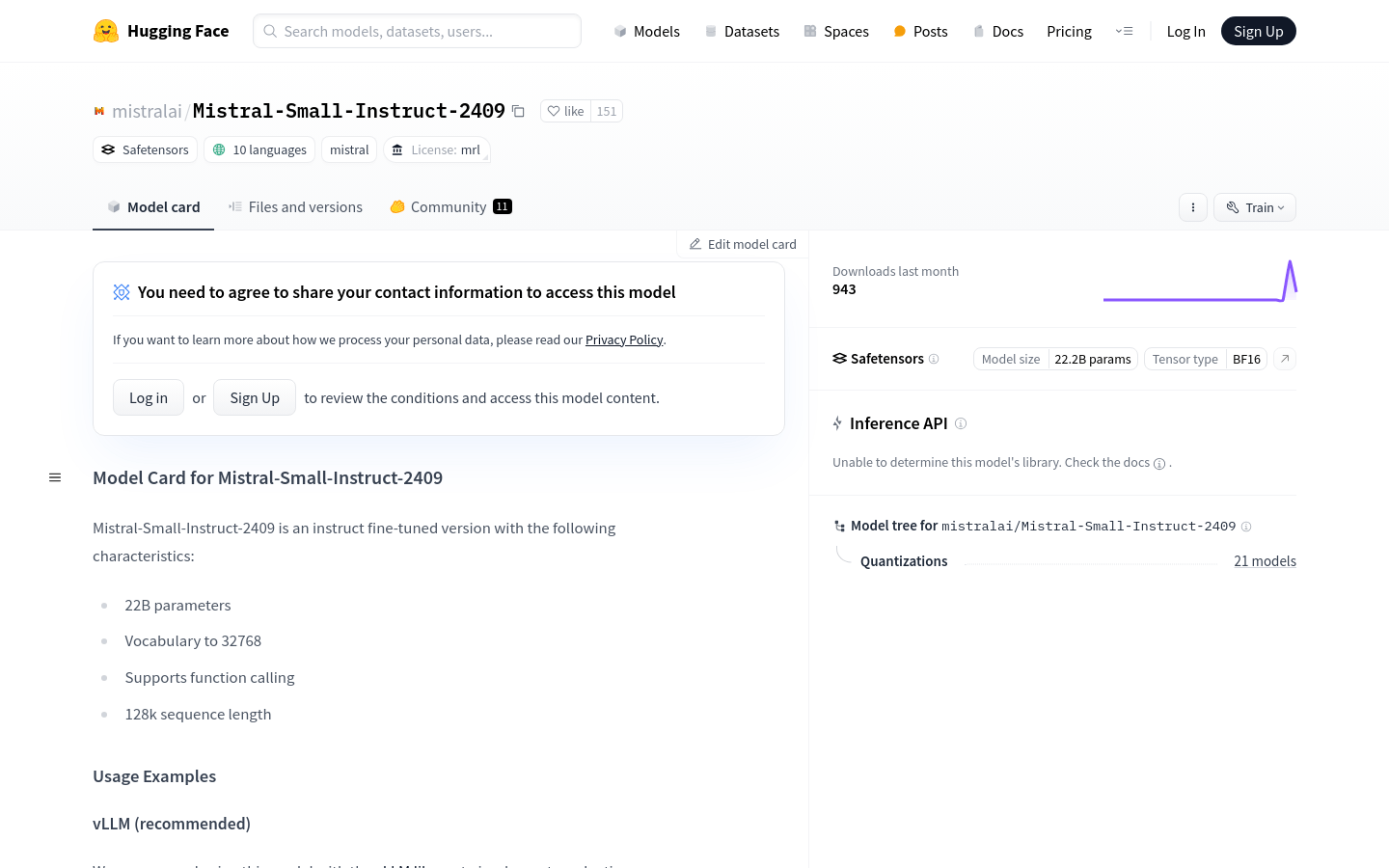

Mistral-Small-Instruct-2409

Mistral-Small-Instruct-2409 is an instruction-fine-tuned model with 22B parameters developed by the Mistral AI team. It is multilingual and supports sequence lengths of up to 128k tokens. It is particularly suited to scenarios that require long-text processing and complex instruction understanding, in fields such as natural language processing and machine learning.

g1

g1 is an experimental project that uses the Llama-3.1 70b model on Groq hardware to produce reasoning chains similar to those of OpenAI's o1 model. It shows that prompting alone, without complex training, can significantly improve an existing open-source model's performance on logic problems. By making the model's reasoning steps visible, g1 helps it reason more accurately about logical problems, which matters for improving the logical reasoning ability of AI systems.
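A prompting-only reasoning chain can be sketched as a loop. The model is asked for one structured step at a time, each step is kept for display, and the loop stops when the model signals a final answer. The JSON field names (`title`, `content`, `next_action`) are assumed to resemble g1's format, and the prompt text is illustrative rather than g1's actual prompt. `fake_model` is a hypothetical stub in place of a Llama-3.1 call.

```python
import json


def reasoning_chain(ask_model, question, max_steps=10):
    """Ask the model for one JSON reasoning step at a time until it signals a
    final answer. `ask_model(messages) -> str` stands in for an LLM call."""
    messages = [
        {"role": "system",
         "content": "Reason step by step. Reply with one JSON object per step: "
                    '{"title": ..., "content": ..., "next_action": "continue" or "final_answer"}'},
        {"role": "user", "content": question},
    ]
    steps = []
    for _ in range(max_steps):
        reply = ask_model(messages)
        step = json.loads(reply)
        steps.append(step)  # every step is kept so it can be shown to the user
        messages.append({"role": "assistant", "content": reply})
        if step["next_action"] == "final_answer":
            break
    return steps


def fake_model(messages):
    """Hypothetical stub: one reasoning step, then a final answer."""
    done = sum(1 for m in messages if m["role"] == "assistant")
    if done == 0:
        return json.dumps({"title": "Spell it out",
                           "content": "s-t-r-a-w-b-e-r-r-y contains r three times",
                           "next_action": "continue"})
    return json.dumps({"title": "Answer", "content": "3", "next_action": "final_answer"})


steps = reasoning_chain(fake_model, "How many r's are in 'strawberry'?")
for s in steps:
    print(f'{s["title"]}: {s["content"]}')
```

The `max_steps` cap keeps a model that never emits `final_answer` from looping forever.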

Skywork-Reward-Llama-3.1-8B

Skywork-Reward-Llama-3.1-8B is an advanced reward model built on the Meta-Llama-3.1-8B-Instruct architecture. It was trained on the Skywork Reward Data Collection, which contains 80K high-quality preference pairs. The model handles preferences in complex scenarios well, including challenging preference pairs, across domains such as mathematics, coding, and safety. As of September 2024, it ranked third on the RewardBench leaderboard.
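Reward models trained on preference pairs commonly use the Bradley-Terry pairwise loss, which pushes the chosen response's score above the rejected one's. The listing does not state Skywork's exact objective, so treat this as the standard technique rather than the model's confirmed recipe. The reward values are toy numbers.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z)) = log(1 + exp(-z))."""
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))


def pairwise_reward_loss(pairs):
    """Mean Bradley-Terry loss over (reward_chosen, reward_rejected) pairs.
    It is small when the chosen response outscores the rejected one."""
    return sum(neg_log_sigmoid(rc - rr) for rc, rr in pairs) / len(pairs)


print(pairwise_reward_loss([(2.0, 0.0)]))  # correct order, clear margin: low loss
print(pairwise_reward_loss([(0.0, 2.0)]))  # wrong order: high loss
```

Only the reward difference matters, so the model learns a relative ranking rather than absolute scores.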

Flux Gym

Flux Gym is a simple web UI for training FLUX LoRA models, designed especially for devices with only 12GB, 16GB, or 20GB of VRAM. It combines the ease of use of the AI-Toolkit project with the flexibility of Kohya Scripts, so users can train models without complex terminal work. Through a simple interface, users upload images, add captions, and then start training.

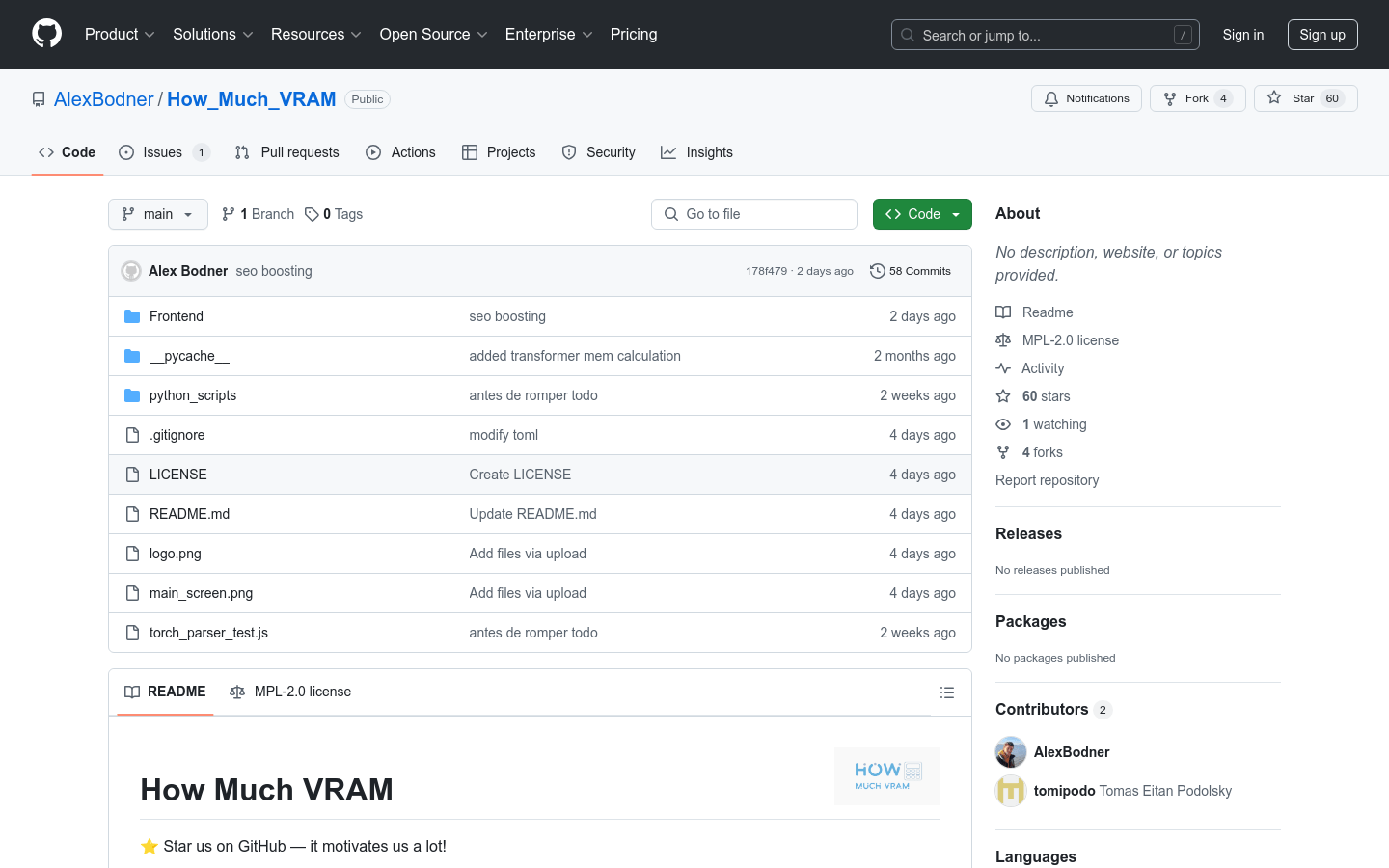

How Much VRAM

How Much VRAM is an open-source project that helps users estimate how much video memory (VRAM) their models need for training or inference. This lets users settle on a hardware configuration without trying several configurations first. For developers and researchers who train deep learning models, this reduces the trial-and-error cost of hardware selection. The project is released free of charge under the MPL-2.0 license.
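A common first-order estimate multiplies the parameter count by bytes per parameter. For full fine-tuning with Adam in fp32, that means about 16 bytes per parameter: weights, gradients, and two optimizer moments. This is a rough rule of thumb, not How Much VRAM's method, and it ignores activations, KV cache, and framework overhead. The 7B model size is just an example.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1, "int4": 0.5}


def inference_weights_gb(n_params: float, dtype: str = "bf16") -> float:
    """Memory for the weights alone, in GB (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def full_finetune_adam_gb(n_params: float) -> float:
    """fp32 weights (4) + fp32 gradients (4) + Adam first and second moments
    (4 + 4) = 16 bytes per parameter, before activations."""
    return n_params * 16 / 1e9


print(inference_weights_gb(7e9, "bf16"))  # 14.0
print(full_finetune_adam_gb(7e9))         # 112.0
```

The gap between the two numbers is why inference often fits on one consumer GPU while full fine-tuning of the same model does not.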

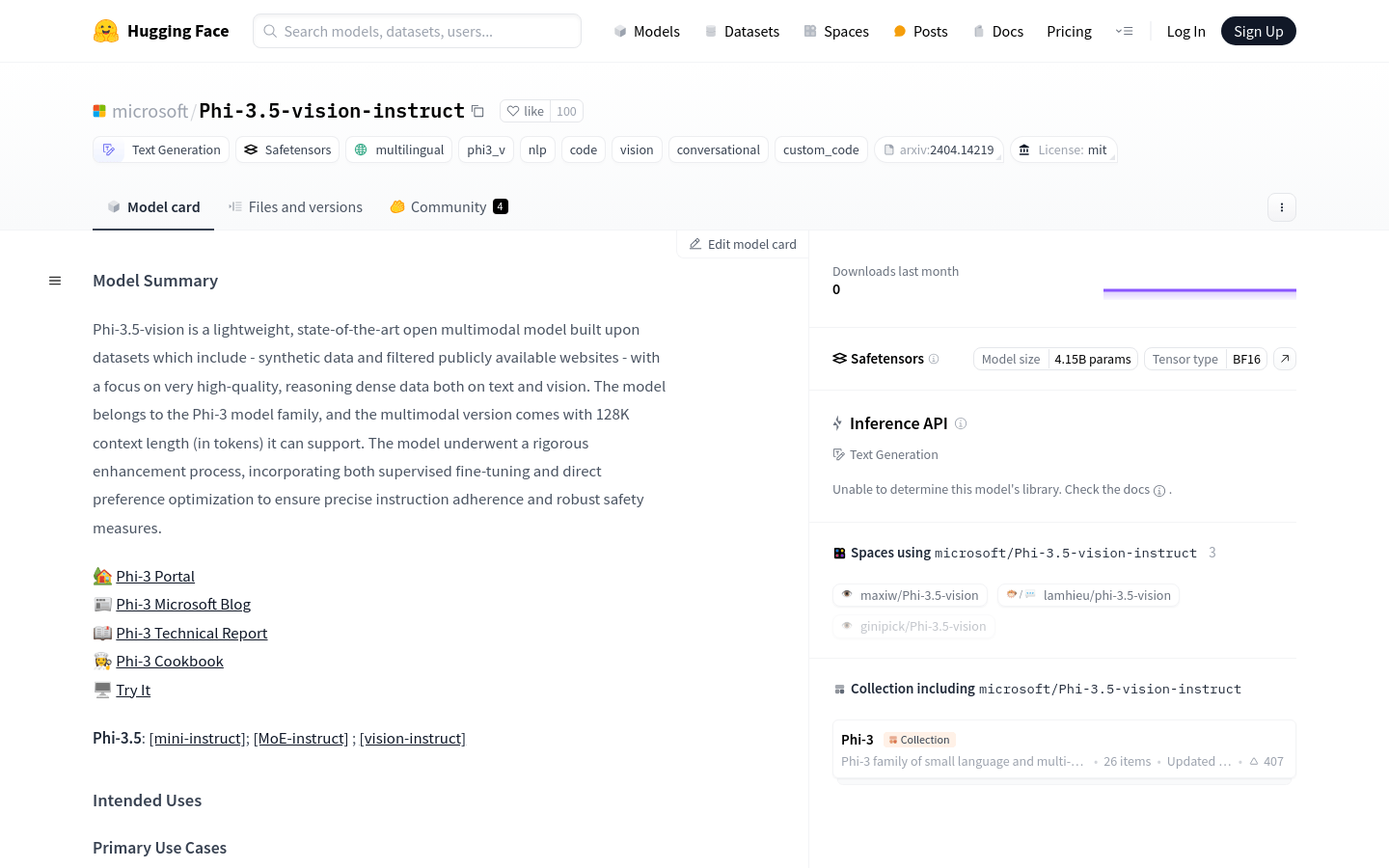

Phi-3.5-vision

Phi-3.5-vision is a lightweight, latest-generation multimodal model developed by Microsoft. It was built on datasets that include synthetic data and filtered publicly available websites, with a focus on high-quality, reasoning-dense text and vision data. The model belongs to the Phi-3 model family and went through a rigorous enhancement process that combines supervised fine-tuning and direct preference optimization to ensure precise instruction following and robust safety measures.
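Direct preference optimization (DPO), mentioned above, trains the policy directly on preference pairs. For each pair, the loss compares how much the policy raised the log-probability of the chosen response relative to a frozen reference model, versus the rejected one. Below is the standard per-pair DPO loss. The log-probabilities and `beta=0.1` are illustrative values, not Phi-3.5's training settings.

```python
import math


def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities
    under the policy (pi_*) and the frozen reference model (ref_*)."""
    z = beta * ((pi_chosen - ref_chosen) - (pi_rejected - ref_rejected))
    # -log(sigmoid(z)), written to avoid overflow for large |z|
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))


print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # policy equals reference: log(2)
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))   # policy favors chosen: below log(2)
```

Unlike RLHF with PPO, no separate reward model or sampling loop is needed. The reference log-probabilities act as the anchor.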

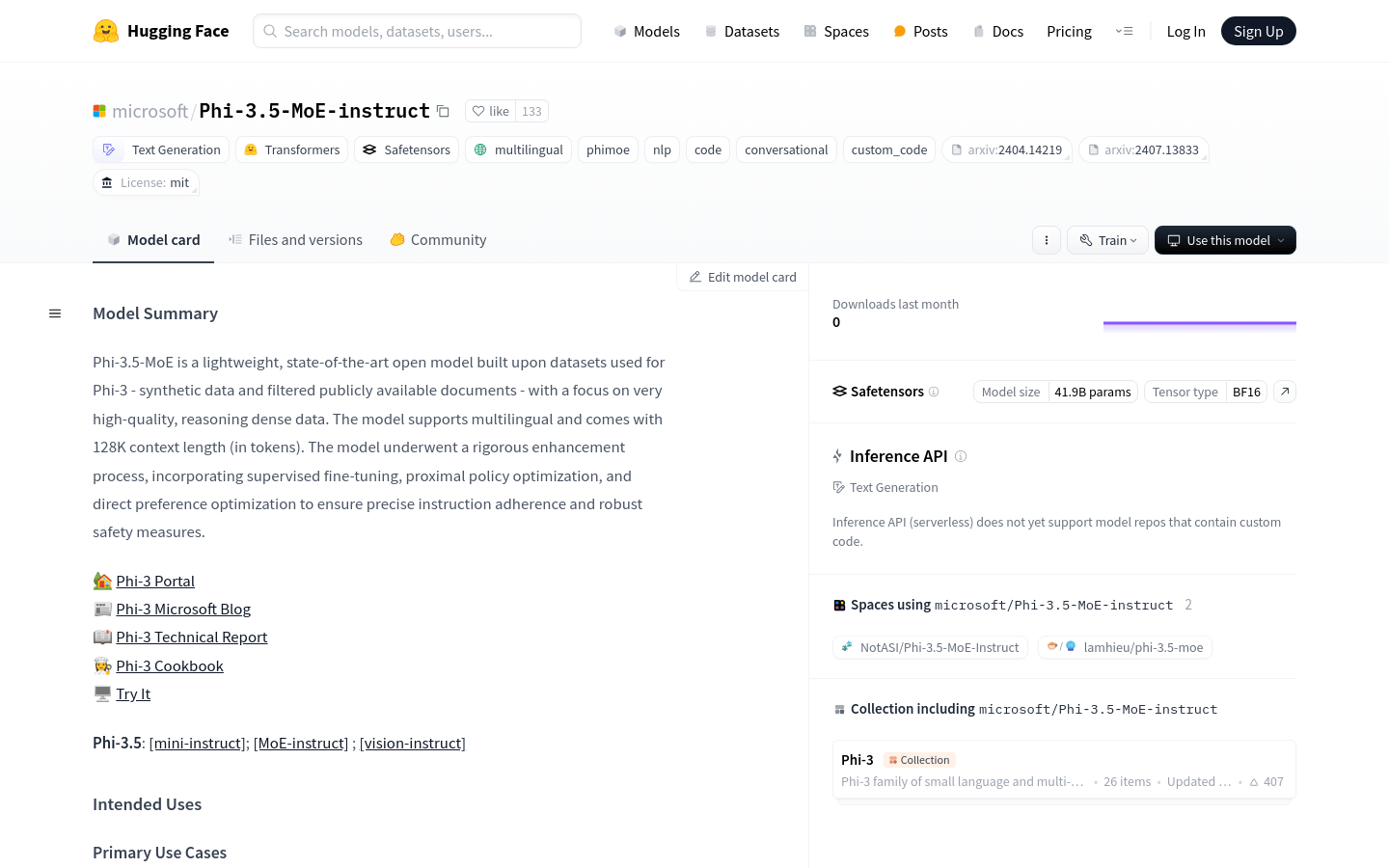

Phi-3.5-MoE-instruct

Phi-3.5-MoE-instruct is a lightweight multilingual model developed by Microsoft. It is built on high-quality, reasoning-dense data and supports a 128K context length. The model went through a rigorous enhancement process, including supervised fine-tuning, proximal policy optimization, and direct preference optimization, to ensure precise instruction following and robust safety measures. It is designed to accelerate research on language and multimodal models, serving as a building block for generative AI features.
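Proximal policy optimization (PPO), listed above, limits how far each update can move the policy. It does this by clipping the probability ratio between the new and old policy. The per-sample clipped surrogate objective below is the standard PPO formulation. The `eps=0.2` default and the sample values are illustrative, not Phi-3.5-MoE's settings.

```python
def ppo_clip_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Per-sample clipped surrogate min(r*A, clip(r, 1-eps, 1+eps)*A), to be
    maximized. ratio = pi_new(a|s) / pi_old(a|s); advantage = A(s, a)."""
    clipped = max(1 - eps, min(ratio, 1 + eps))
    return min(ratio * advantage, clipped * advantage)


print(ppo_clip_objective(1.5, 2.0))   # gain capped at (1 + eps) * A
print(ppo_clip_objective(0.5, -1.0))  # pessimistic: uses (1 - eps) * A
```

Taking the minimum makes the objective pessimistic. The policy gets no extra credit for moving the ratio beyond the clip range.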

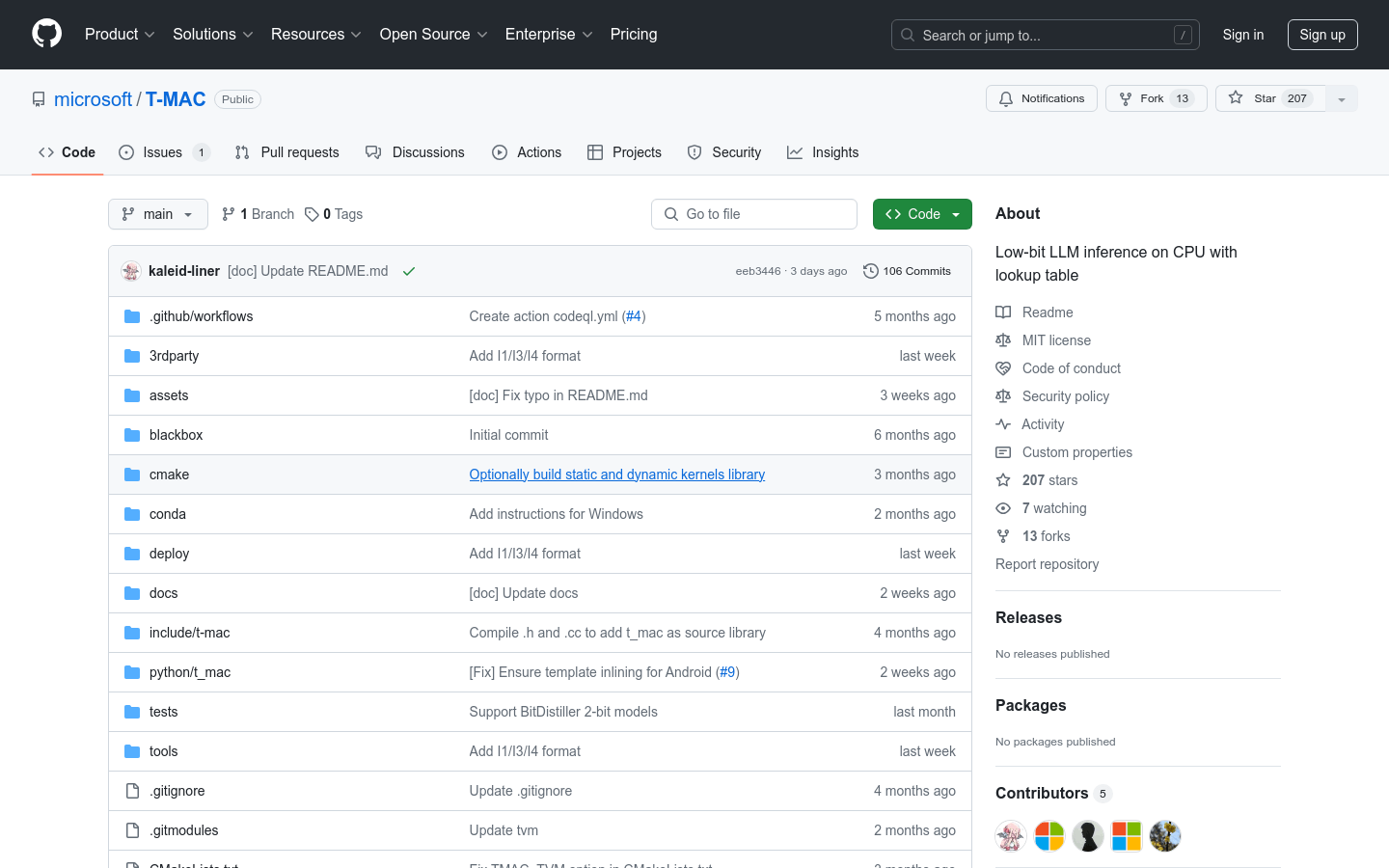

T-MAC

T-MAC is a kernel library that accelerates low-bit large language model inference on CPUs. It performs mixed-precision matrix multiplication directly through lookup tables, without dequantization. Supported formats include W4A16 (GPTQ/gguf), W2A16 (BitDistiller/EfficientQAT), and BitNet W1(.58)A8, on ARM and Intel CPUs under OSX, Linux, and Windows. On a Surface Laptop 7, T-MAC generates tokens for a 3B BitNet model at 20 tokens per second on a single core and 48 tokens per second on four cores. That is 4 to 5 times faster than llama.cpp, the most advanced existing CPU low-bit framework.
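The lookup-table idea can be sketched as follows. Each b-bit weight is split into bit planes. For every group of 4 activations, all 16 possible subset sums are precomputed once. Each weight row then only looks up and accumulates table entries, scaled by 2^k per bit plane, with no weight dequantization. This is a simplified illustration with unsigned weights and no scales or zero points. The group size of 4 is an assumption, and this is not T-MAC's actual kernel code.

```python
G = 4  # activations per lookup group (illustrative choice)


def build_luts(acts):
    """For each group of G activations, precompute all 2**G subset sums."""
    luts = []
    for start in range(0, len(acts), G):
        group = acts[start:start + G]
        luts.append([sum(a for j, a in enumerate(group) if (pattern >> j) & 1)
                     for pattern in range(2 ** G)])
    return luts


def lut_row_dot(luts, row, bits):
    """Dot product of one row of unsigned `bits`-bit integer weights with the
    activations behind `luts`, using lookups instead of multiplications."""
    total = 0.0
    for k in range(bits):  # one pass per weight bit plane
        plane_sum = 0.0
        for g, table in enumerate(luts):
            group_w = row[g * G:(g + 1) * G]
            pattern = sum(((w >> k) & 1) << j for j, w in enumerate(group_w))
            plane_sum += table[pattern]
        total += (1 << k) * plane_sum  # bit plane k contributes with weight 2**k
    return total


def lut_matvec(weight_rows, acts, bits):
    luts = build_luts(acts)  # built once, reused for every weight row
    return [lut_row_dot(luts, row, bits) for row in weight_rows]


acts = [0.5, -1.0, 2.0, 0.25, 1.5, -0.5, 0.0, 3.0]
W = [[3, 1, 0, 2, 1, 3, 2, 0],
     [0, 0, 1, 1, 2, 2, 3, 3]]  # 2-bit unsigned weights
print(lut_matvec(W, acts, bits=2))
print([sum(a * w for a, w in zip(acts, row)) for row in W])  # reference result
```

The table cost is paid once per activation vector, while every output row reuses it, which is where the savings come from for large matrices.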

Falcon Mamba

Falcon Mamba is the first attention-free 7B large-scale model, released by the Technology Innovation Institute (TII) in Abu Dhabi. Because it does not use attention, it avoids the compute and memory costs that grow with sequence length in attention-based models. On long sequences, its performance remains comparable to existing state-of-the-art models.
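The reason state-space models avoid this growth is that they carry a fixed-size state forward instead of caching every past token. The sketch is a simplified, non-selective scalar recurrence. Mamba's actual layer makes its parameters depend on the input ("selective") and keeps a vector state per channel, neither of which is modeled here. The constants `a`, `b`, `c` are illustrative.

```python
def linear_recurrence(xs, a=0.9, b=1.0, c=1.0):
    """Scalar state-space recurrence:
        h_t = a * h_{t-1} + b * x_t
        y_t = c * h_t
    Only h is carried between steps, so memory per step is constant in t,
    unlike attention, whose key/value cache grows with every token."""
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


print(linear_recurrence([1.0, 0.0, 0.0]))  # an impulse decays geometrically
```

Generating each new token costs the same regardless of how long the context already is.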

Gemma Scope

Gemma Scope is a set of sparse autoencoders built for the 2B and 9B models of Gemma 2. Much as biologists use a microscope to study the cells of plants and animals, these autoencoders let researchers inspect the activations inside a model to understand the concepts behind them.
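A sparse autoencoder maps a model activation into a wider set of features, most of which are zero, and then reconstructs the activation from them. The few active features are what researchers inspect. The sketch uses a thresholded activation (JumpReLU-style), which is assumed here to match the Gemma Scope variant. The 2-dimensional activation, 3 features, and all weights and thresholds are toy values.

```python
def jumprelu(z, theta):
    """Pass z through unchanged if it exceeds the threshold theta, else output 0."""
    return z if z > theta else 0.0


def sae_encode(x, W_enc, b_enc, thresholds):
    # f_i = JumpReLU(sum_j W_enc[i][j] * x[j] + b_enc[i]; theta_i)
    return [jumprelu(sum(w * xj for w, xj in zip(row, x)) + b, t)
            for row, b, t in zip(W_enc, b_enc, thresholds)]


def sae_decode(f, W_dec, b_dec):
    # x_hat[j] = sum_i f[i] * W_dec[i][j] + b_dec[j]
    return [sum(fi * W_dec[i][j] for i, fi in enumerate(f)) + b_dec[j]
            for j in range(len(b_dec))]


x = [1.0, 0.2]                                   # toy model activation
W_enc, b_enc = [[1, 0], [0, 1], [1, 1]], [0, 0, -1]
thresholds = [0.5, 0.5, 0.5]
W_dec, b_dec = [[1, 0], [0, 1], [0.5, 0.5]], [0, 0]

f = sae_encode(x, W_enc, b_enc, thresholds)
x_hat = sae_decode(f, W_dec, b_dec)
print(f)      # only one feature is active
print(x_hat)  # reconstruction of x from that feature
```

Training trades reconstruction error against sparsity, so that each active feature tends to correspond to an interpretable concept.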

Meta-Llama-3.1-405B-Instruct-FP8

The Meta Llama 3.1 series is a set of pretrained and instruction-tuned multilingual large language models (LLMs) in three sizes: 8B, 70B, and 405B. The instruction-tuned models are optimized for multilingual dialogue use cases and outperform many open-source and closed-source chat models on common industry benchmarks.