Tabled

Tools to detect and extract tables into Markdown and CSV formats

Product Details

Tabled is a Python library for detecting and extracting tables. It uses surya to find tables in PDFs, identify their rows and columns, and format the cells as Markdown, CSV, or HTML. It is useful for data scientists and researchers who need to extract tabular data from PDF documents for further analysis. Its main advantages are accurate table detection and extraction, support for multiple output formats, and an easy-to-use command-line interface. It also provides an interactive app for trying Tabled on images or PDF files.
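
As a rough illustration of the final formatting step, the sketch below renders an already-extracted grid of cells as Markdown and CSV. It uses only the Python standard library and is not Tabled's API; the function names `to_markdown` and `to_csv` and the sample rows are hypothetical.

```python
import csv
import io


def to_markdown(rows):
    """Render rows (first row is the header) as a Markdown table."""
    header, *body = rows

    def fmt(cells):
        # Escape pipes so cell text cannot break the table layout.
        return "| " + " | ".join(str(c).replace("|", "\\|") for c in cells) + " |"

    lines = [fmt(header), "| " + " | ".join("---" for _ in header) + " |"]
    lines.extend(fmt(row) for row in body)
    return "\n".join(lines)


def to_csv(rows):
    """Render rows as CSV text; the csv module quotes cells that contain commas."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()


# Hypothetical cells, standing in for the output of table detection.
rows = [["Year", "Revenue"], ["2023", "1,200"], ["2024", "1,450"]]
markdown_table = to_markdown(rows)
csv_text = to_csv(rows)
```

Keeping the cell grid separate from the output format means one extraction result can be written to any of the supported formats.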

Main Features

How to Use

Target Users

Tabled's target audience is primarily data scientists, researchers, and developers who need to extract tabular data from PDF documents for data analysis or further processing. This tool is suitable for them as it provides high-accuracy table detection and extraction, supports multiple output formats, and is easy to integrate into existing workflows.

Examples

Researchers use Tabled to extract data from PDFs of academic papers for statistical analysis.

Data scientists use Tabled to convert tabular data in market research reports into CSV format for use in economic forecasting models.

Developers integrate Tabled into their software products to provide automated PDF table data processing capabilities.

Related Recommendations

Discover more similar quality AI tools

Prisma Optimize

Prisma Optimize is a tool that uses artificial intelligence technology to analyze and optimize database queries. It accelerates applications by providing in-depth insights and actionable recommendations to make database queries more efficient. Prisma Optimize supports a variety of databases, including PostgreSQL, MySQL, SQLite, SQL Server, CockroachDB, PlanetScale, and Supabase, and can be seamlessly integrated into existing technology stacks without the need for large-scale modifications or migrations. The main advantages of the product include improving database performance, reducing query latency, optimizing query patterns, etc. This is a powerful tool for developers and database administrators to help them manage and optimize databases more effectively.

Knowledge Table

Knowledge Table is an open source toolkit designed to simplify the process of extracting and exploring structured data from unstructured documents. It enables users to create structured knowledge representations such as tables and charts through a natural language query interface. The toolkit features customizable extraction rules, fine-tuned formatting options, and data provenance displayed through the UI to accommodate a variety of use cases. Its goal is to provide business users with a familiar spreadsheet interface, while providing developers with a flexible and highly configurable backend, ensuring seamless integration with existing RAG workflows.

VARAG

VARAG is a system that supports multiple retrieval techniques, optimized for different use cases in text, image, and multi-modal document retrieval. It simplifies the traditional retrieval process by embedding document pages as images and encoding them with vision-language models, which improves retrieval accuracy and efficiency. The main advantage of VARAG is its ability to handle complex visual and textual content in document retrieval.

GraphReasoning

GraphReasoning is a project that uses generative artificial intelligence to transform 1,000 scientific papers into a knowledge graph. Structural analysis of the graph (computing node degrees, identifying communities and connectivity, and evaluating the clustering coefficients and betweenness centrality of key nodes) reveals its knowledge architecture. The graph is scale-free and highly interconnected, and it can be used for graph reasoning that exploits transitive and isomorphic properties to reveal interdisciplinary relationships. These relationships support answering questions, identifying knowledge gaps, proposing new materials designs, and predicting material behavior.

AgentRE

AgentRE is an agent-based framework designed for relation extraction in complex information environments. It processes and analyzes large-scale data sets by simulating the behavior of intelligent agents that identify and extract relations between entities. This is valuable in natural language processing and information retrieval, especially when large amounts of unstructured data must be processed. The main advantages of AgentRE are its scalability, flexibility, and ability to handle complex data structures. The framework is open source, so researchers and developers can freely use and modify it to suit different applications.

magic-html

magic-html is a Python library for extracting the main body content from HTML. It aims to provide a convenient and efficient interface for both complex HTML structures and simple web pages. It supports multi-modal extraction and multiple layout extractors, including articles, forums, and WeChat articles, and it also supports extracting and converting LaTeX formulas.

TAG-Bench

TAG-Bench is a benchmark used to evaluate and study the performance of natural language processing models in answering database queries. It builds on the BIRD Text2SQL benchmark and increases query complexity by adding requirements for world knowledge or semantic reasoning beyond explicit information in the database. TAG-Bench aims to promote the integration of AI and database technology and provide researchers with a platform to challenge existing models by simulating real database query scenarios.

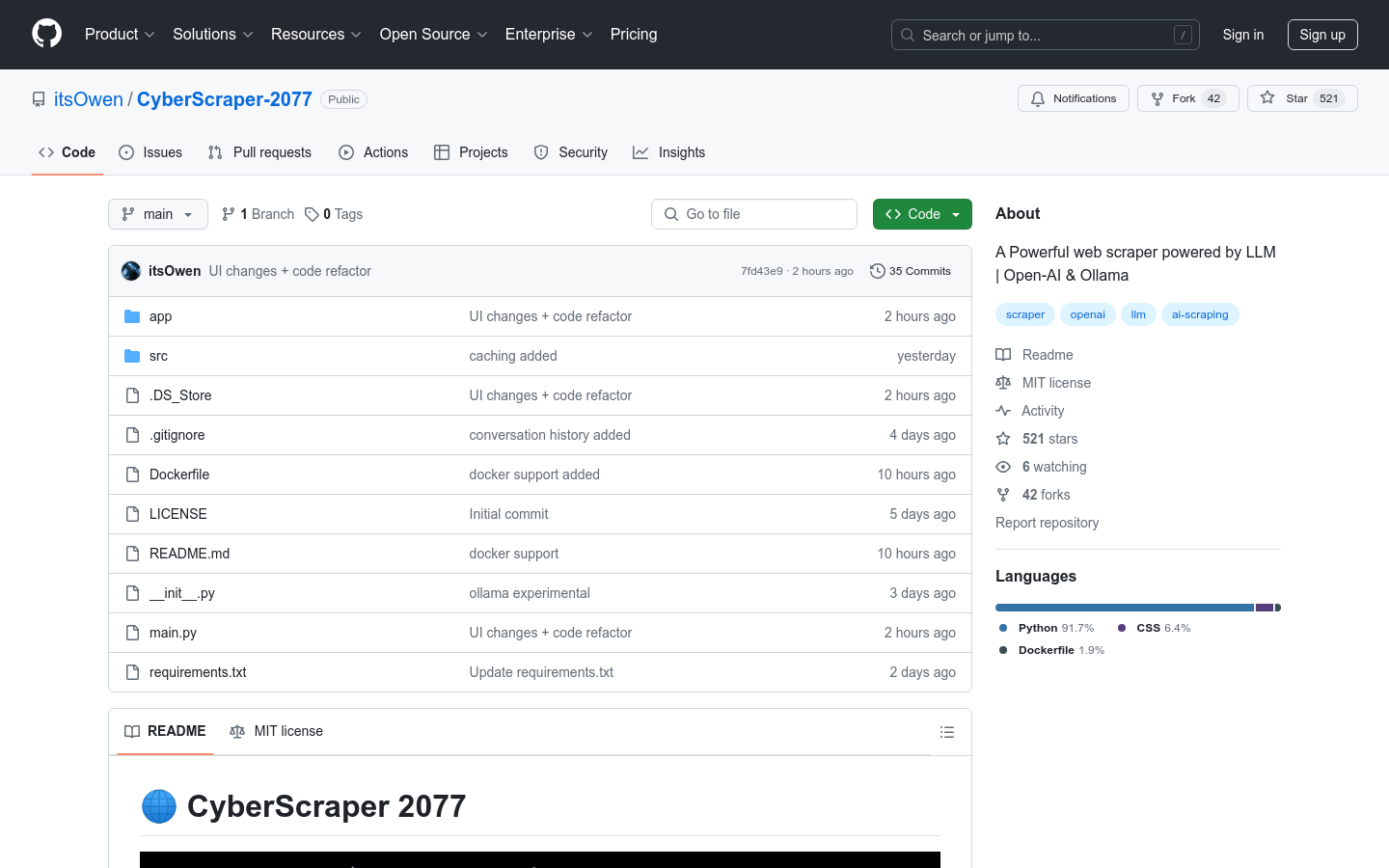

CyberScraper 2077

CyberScraper 2077 is an AI-based web scraping tool that uses large language models (LLMs), accessed through OpenAI and Ollama, to parse web content and extract data. It has a user-friendly graphical interface and supports multiple export formats, including JSON, CSV, HTML, SQL, and Excel. It also features a stealth mode that reduces the risk of being detected as a bot, as well as ethical crawling features that adhere to robots.txt and website policies.
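
Adhering to robots.txt can be illustrated with Python's standard `urllib.robotparser`. This is a minimal sketch of the general technique, not CyberScraper 2077's own code; the rules, the user agent name `example-bot`, and the URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def make_parser(robots_text):
    """Build a parser from robots.txt text without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = make_parser(ROBOTS_TXT)

# Check each URL before fetching it.
allowed = parser.can_fetch("example-bot", "https://example.com/articles/1")
blocked = parser.can_fetch("example-bot", "https://example.com/private/data")
```

A crawler would skip any URL for which `can_fetch` returns `False`.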

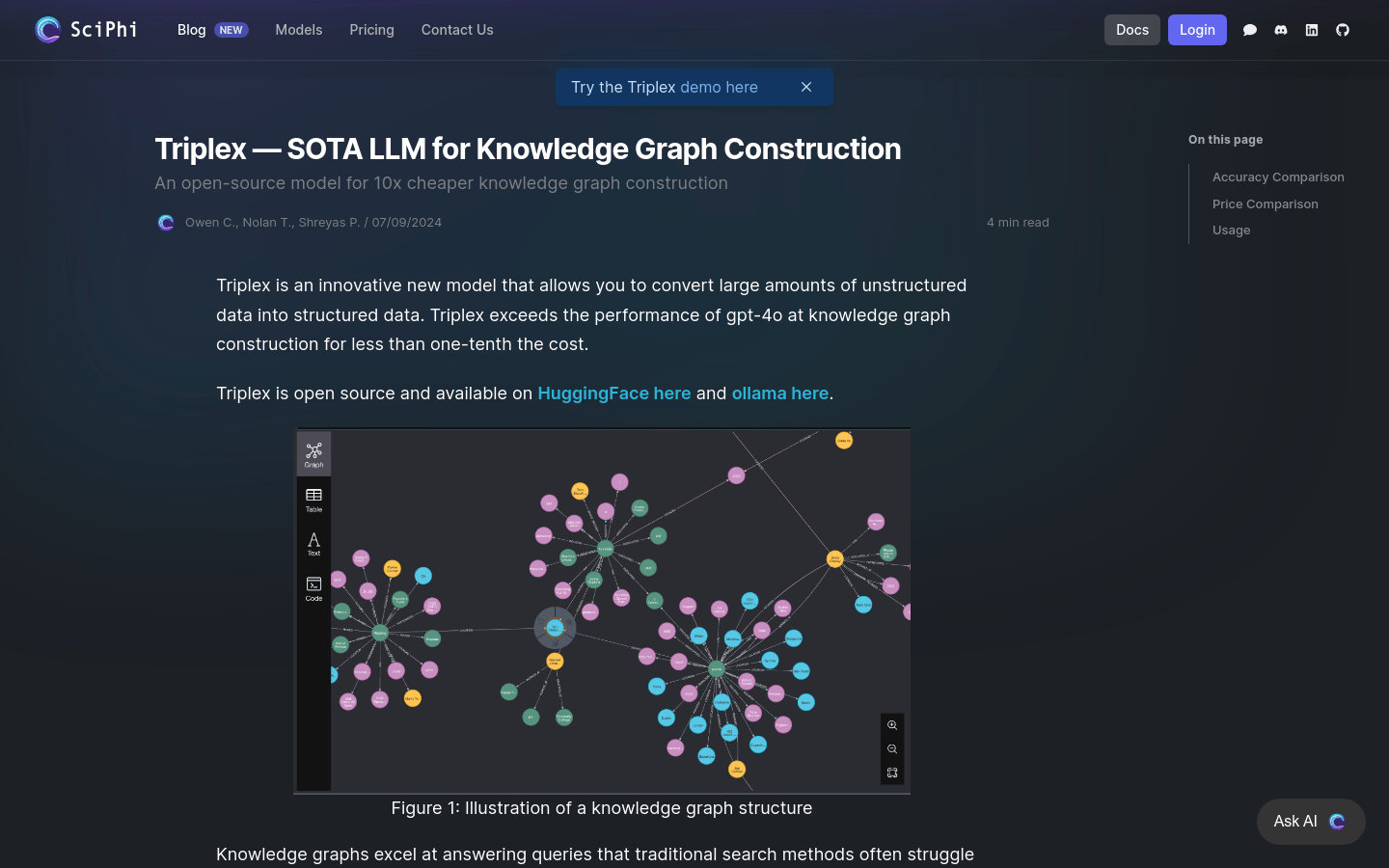

Triplex

Triplex is an open source model that converts large amounts of unstructured data into structured data. It outperforms GPT-4o at building knowledge graphs at one-tenth of the cost. It greatly reduces the cost of generating knowledge graphs by efficiently converting unstructured text into semantic triples, the basis for knowledge graph construction.
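
To show why semantic triples are the basis for a knowledge graph, the sketch below turns a list of (subject, predicate, object) triples into an adjacency structure. The triples are hand-written examples, not Triplex output, and `build_graph` is a hypothetical helper.

```python
from collections import defaultdict

# Illustrative triples of the form (subject, predicate, object).
triples = [
    ("Marie Curie", "discovered", "polonium"),
    ("Marie Curie", "born_in", "Warsaw"),
    ("polonium", "is_a", "chemical element"),
]


def build_graph(triples):
    """Map each subject to its outgoing (predicate, object) edges."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return dict(graph)


graph = build_graph(triples)
```

Each triple becomes one labeled, directed edge, so extracting more triples from text grows the graph directly.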

Datalore

Datalore is an AI-driven data analysis tool that integrates Anthropic's Claude API and multiple data analysis libraries. It provides an interactive interface that enables users to perform data analysis tasks using natural language commands.

Korvus

Korvus is a search SDK built on Postgres that unifies the entire RAG (Retrieval-Augmented Generation) process into a single database query. It provides high-performance, customizable search while minimizing infrastructure overhead. Korvus uses PostgresML's pgml extension together with the pgvector extension to run the whole RAG pipeline inside Postgres. It offers SDKs in several languages, including Python, JavaScript, Rust, and C, so developers can integrate it into existing technology stacks.

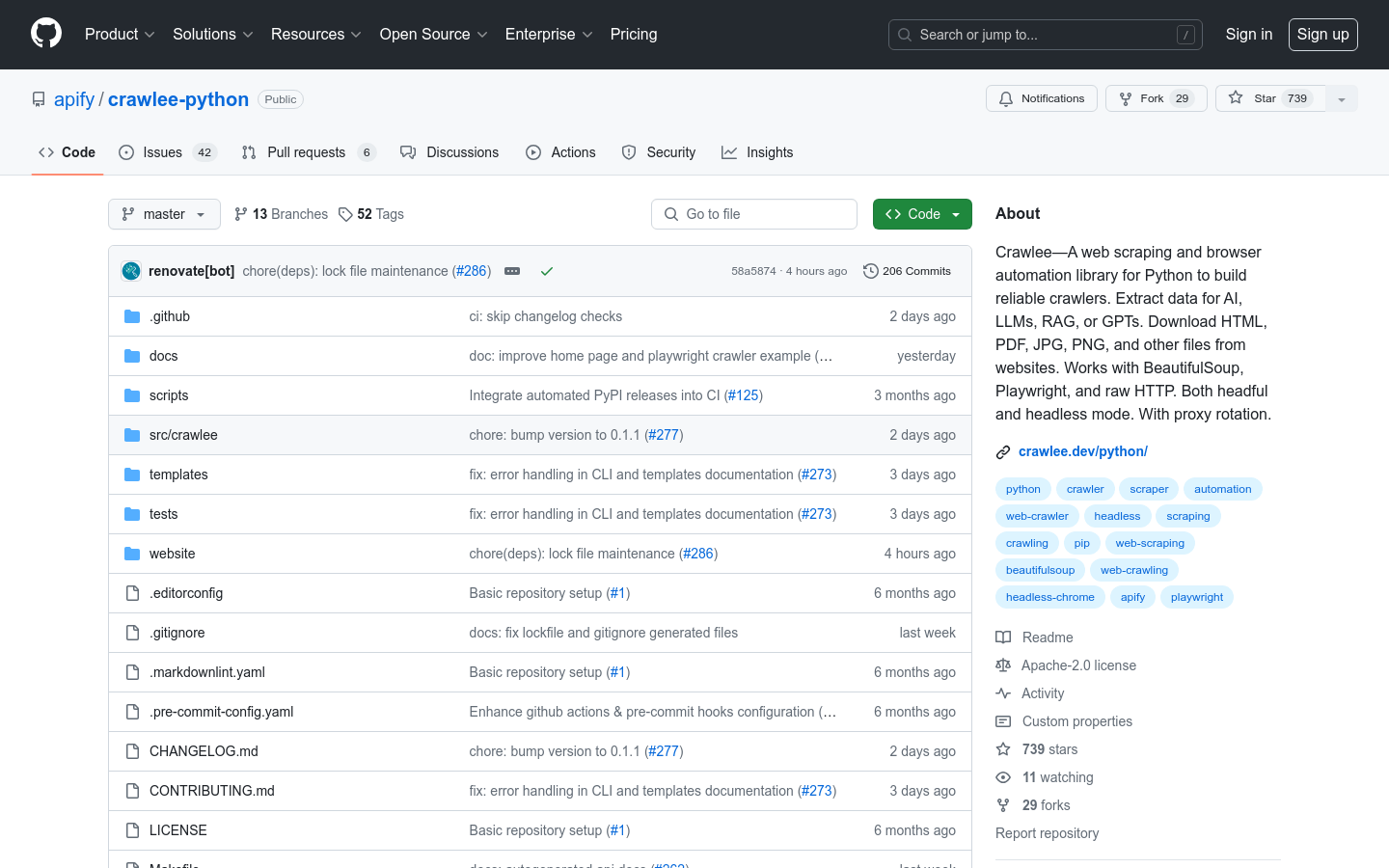

Crawlee

Crawlee is a Python web crawling and browser automation library for building reliable crawlers and extracting data for AI, LLMs, RAG, or GPTs. It provides a unified interface for HTTP and headless-browser crawling and supports automatic parallel crawling that scales with available system resources. It includes type hints to improve the development experience and reduce errors. It features automatic retries, integrated proxy rotation and session management, configurable request routing, persistent URL queues, pluggable storage options, and more. Compared with Scrapy, Crawlee provides native support for headless-browser crawling, has a simple and elegant interface, and is built entirely on Python's standard asyncio.

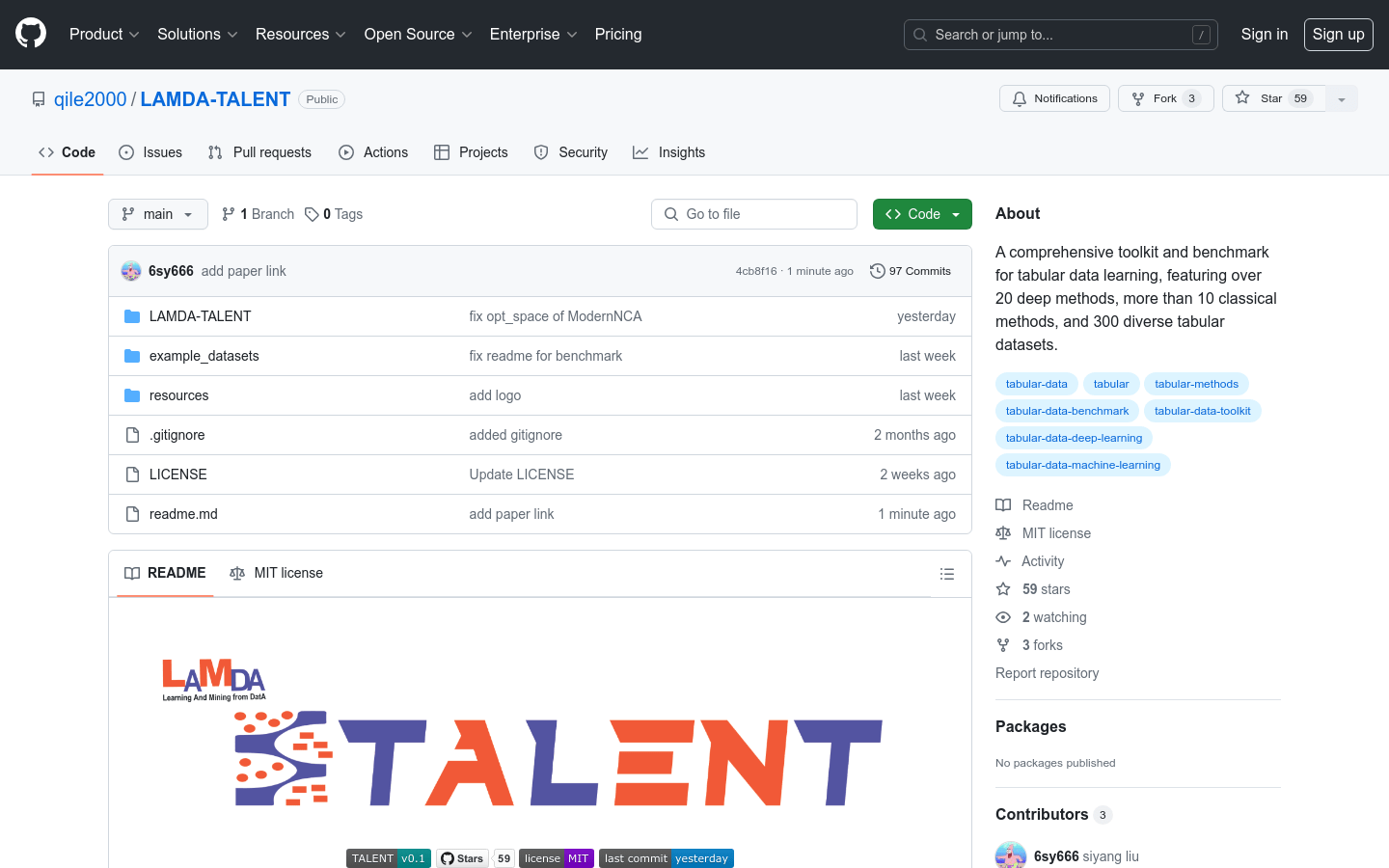

LAMDA-TALENT

LAMDA-TALENT is a comprehensive tabular data analysis toolbox and benchmarking platform that integrates more than 20 deep learning methods, more than 10 traditional methods, and more than 300 diverse tabular data sets. Designed to improve model performance on tabular data, the toolbox provides powerful preprocessing capabilities, optimizes data learning, and supports user-friendly and adaptable operations for both novice and expert data scientists.

APIGen

APIGen is an automated data generation pipeline designed to produce verifiable, high-quality data sets for function-calling applications. It ensures data reliability and correctness through a three-stage verification process: format checking, actual function execution, and semantic verification. APIGen can generate diverse data sets at scale in a structured manner, and it verifies the correctness of each generated function call by actually executing the API, which is crucial for improving the performance of function-calling agent models.
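
The three verification stages can be sketched as a small filter over generated samples. This is an illustrative outline under stated assumptions, not APIGen's implementation: the `FUNCTIONS` registry, the sample layout, and the status strings are hypothetical, and the semantic stage is a simple stand-in that compares the result with an expected value.

```python
import json


def add(a, b):
    return a + b


# Hypothetical registry of callable APIs.
FUNCTIONS = {"add": add}


def verify(sample):
    """Return 'ok' or the name of the first stage the sample fails."""
    # Stage 1: format check. The call must be valid JSON naming a known function.
    try:
        call = json.loads(sample["call"])
        name, args = call["name"], call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return "format_error"
    if name not in FUNCTIONS or not isinstance(args, dict):
        return "format_error"

    # Stage 2: execution check. The call must actually run.
    try:
        result = FUNCTIONS[name](**args)
    except Exception:
        return "execution_error"

    # Stage 3: semantic check (stand-in). The result must match the expectation.
    if result != sample["expected"]:
        return "semantic_mismatch"
    return "ok"


good = {"call": '{"name": "add", "arguments": {"a": 2, "b": 3}}', "expected": 5}
status = verify(good)
```

Ordering the stages from cheapest to most expensive lets malformed samples be discarded before any function is executed.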

DB-GPT

DB-GPT is an open source, AI-native data application development framework that uses AWEL (Agentic Workflow Expression Language) and agent technology to simplify the integration of large-model applications with data. It enables enterprises and developers to build customized applications with less code through capabilities such as multi-model management, Text2SQL optimization, RAG framework optimization, and multi-agent collaboration. In the Data 3.0 era, DB-GPT provides foundational data intelligence technology for building enterprise-level report analysis and business insights on top of models and databases.

Yayi Information Extraction Large Model

The Yayi Information Extraction Large Model (YAYI-UIE) was developed by the Zhongke Wenge algorithm team. It is instruction fine-tuned on millions of manually constructed, high-quality information extraction examples. It is trained jointly on several information extraction tasks, including Named Entity Recognition (NER), Relation Extraction (RE), and Event Extraction (EE), and covers structured extraction in scenarios such as general, security, finance, biology, medical, and business. The open-source release of this model aims to promote the Chinese pre-trained large model open source community and to build the Yayi large model ecosystem collaboratively.