CAT4D

4D scene creation tool using multi-view video diffusion models

Product Details

CAT4D is a method that uses multi-view video diffusion models to generate 4D (dynamic 3D) scenes from monocular video. It converts an input monocular video into multi-view video and then reconstructs a dynamic 3D scene from it. Its significance lies in recovering both spatial and temporal information from single-view footage, which supports applications in virtual reality, augmented reality, and 3D modeling. CAT4D was developed jointly by researchers from Google DeepMind, Columbia University, and UC San Diego, and is an example of recent research being turned toward practical use.

Main Features

How to Use

Target Users

The target users are 3D modelers, animators, game developers, and researchers in virtual and augmented reality. CAT4D gives them a way to create and modify dynamic 3D scenes from existing video footage, which can shorten production time and broaden creative options.

Examples

Case 1: Animators use CAT4D to extract character movements from historical videos and create new animation sequences.

Case 2: Game developers use CAT4D to turn footage of real-world landmarks into in-game virtual scenes.

Case 3: Researchers use CAT4D to analyze the movements of athletes in sports competitions to optimize training procedures.

Related Recommendations

Discover more similar quality AI tools

Create point AI

Quark·Zangdian AI is a platform that uses AI to generate images and videos from simple user input. Its main advantage is speed, which makes it suitable for designers, artists, and content creators who want to realize ideas quickly. A flexible pricing model gives users more options.

Photo to video ai

Image to Video AI Generator utilizes advanced AI models to convert static images into eye-catching videos, suitable for social media creators and anyone who wants to experience AI video generation. The product is positioned to simplify the video production process and improve efficiency.

AI Animate Image

AI Animate Image uses advanced AI technology to transform static images into vivid animations, providing professional-level animation quality and smooth dynamic effects.

Grok Imagine

Grok Imagine is an AI image and video generation platform powered by the Aurora engine that can generate multi-domain realistic images and dynamic video content. Its core technology is based on the Aurora engine's autoregressive image model, providing users with high-quality and diverse visual creation experiences.

MuAPI

WAN 2.1 LoRA T2V is a tool that generates videos from text prompts. By training custom LoRA modules, users can tailor the generated videos, which makes it suitable for brand narratives, fan content, and stylized animation.

Openjourney

Openjourney is an open source project that closely replicates Midjourney's interface and uses Google's Gemini SDK for AI image and video generation. It supports image generation with Imagen 4, as well as text-to-video and image-to-video conversion with Veo 2 and Veo 3. It is aimed at developers and creators who need image and video generation, and offers a user-friendly interface with real-time generation.

A2E Free and Uncensored AI Videos

a2e.ai is an AI tool that provides AI avatars, lip synchronization, voice cloning, text-to-video generation, and other functions. Its advantages are high definition, high consistency, and fast generation. It offers a complete set of avatar tools for a range of scenarios.

FlyAgt.ai

FlyAgt is an AI image and video generation platform that provides advanced AI tools from creation to editing to image enhancement. Its main advantages are its affordability, wide range of professional tools, and protection of user privacy.

DreamVid

iMyFone DreamVid is an AI image-to-video conversion tool. Users upload photos and the AI converts the static images into videos, with special effects such as hugs, kisses, and face swaps. The tool is affordable and targeted at individual users and small businesses.

Everlyn.AI

Everlyn AI describes itself as a leading AI video generator and free AI picture generator that turns ideas into visuals. Its stated performance figures include 15-second generation, a 25-fold cost reduction, and 8-fold higher efficiency.

Describe Anything

The Describe Anything Model (DAM) takes a specific region of an image or video and generates a detailed description of it. Its main advantage is that it produces high-quality localized descriptions from simple region prompts (points, boxes, scribbles, or masks), which improves fine-grained image understanding in computer vision. Developed jointly by NVIDIA and multiple universities, the model is suitable for research, development, and real-world applications.

vivago.ai

vivago.ai is a free AI generation tool and community that provides text-to-image, image-to-video, and other functions. Users can generate high-quality images and videos for free, and the platform offers a variety of AI editing tools that make it easy to create and share work. It is positioned as an easy-to-use toolset for creators' visual content needs.

Stable Virtual Camera

Stable Virtual Camera is a 1.3B-parameter generalist diffusion model developed by Stability AI. It is a Transformer-based image-to-video model. Its importance lies in supporting novel view synthesis (NVS): it generates 3D-consistent new views of a scene from the input views and a target camera. Its main advantages are the freedom to specify target camera trajectories, the ability to generate samples with large viewpoint changes and temporal smoothness, high consistency without additional Neural Radiance Field (NeRF) distillation, and high-quality seamless loop videos of up to half a minute. The model is free for research and non-commercial use only, and is aimed at researchers and non-commercial creators who need image-to-video generation.

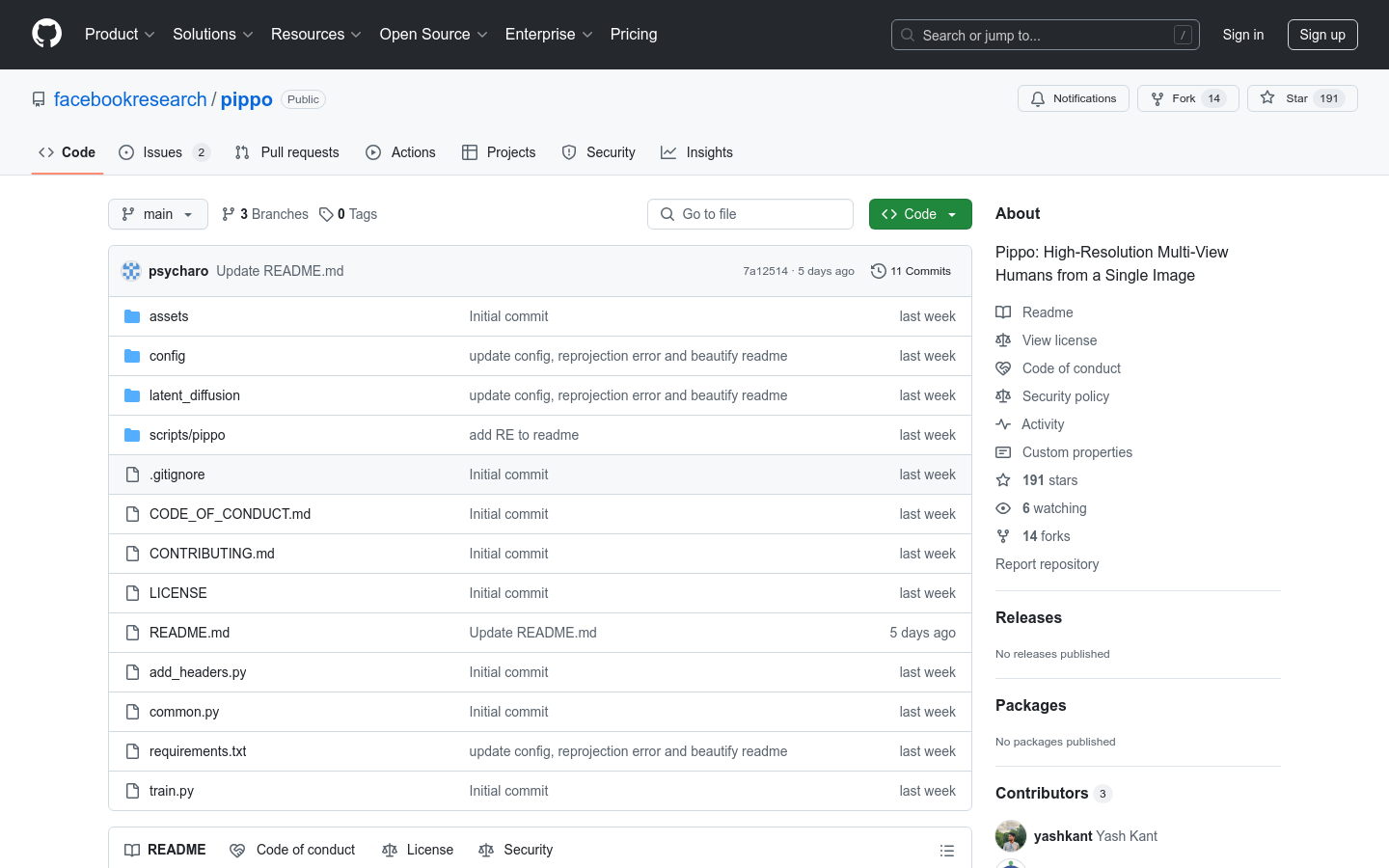

Pippo

Pippo is a generative model developed by Meta Reality Labs in cooperation with multiple universities. It generates high-resolution multi-view video from a single ordinary photo. Its core benefit is producing high-quality 1K-resolution video without additional inputs such as parametric models or camera parameters. It is based on a multi-view diffusion transformer architecture and has applications in areas such as virtual reality and film and television production. Pippo's code is open source but does not include pre-trained weights, so users need to train the model themselves.

Animate Anyone 2

Animate Anyone 2 is a diffusion-based character image animation method that generates animations adapted to the surrounding environment. Traditional methods often fail to relate characters plausibly to their environment; Animate Anyone 2 addresses this by extracting an environment representation and using it as a conditional input. Its main advantages are high fidelity, strong adaptability to the environment, and robust handling of dynamic motion. It suits work that needs high-quality animation, such as film and television production and game development, and helps creators quickly generate character animations with environmental interaction, saving time and cost.

X-Dyna

X-Dyna is a zero-shot human image animation method that generates realistic and expressive motion by transferring the facial expressions and body movements in a driving video to a single human image. It is based on a diffusion model. Its Dynamics-Adapter module integrates the reference appearance context into the spatial attention of the diffusion model while preserving the motion module's ability to synthesize smooth and complex dynamic details. Beyond body pose control, a local control module captures identity-disentangled facial expressions for precise expression transfer. X-Dyna is trained on a mixture of human and scene videos, so it learns both physical human motion and natural scene dynamics and can generate highly realistic and expressive animations.