Llama-3-Patronus-Lynx-8B-Instruct

Open Source Hallucination Evaluation Model

Product Details

Llama-3-Patronus-Lynx-8B-Instruct is a fine-tuned version of meta-llama/Meta-Llama-3-8B-Instruct, developed by Patronus AI and used mainly to detect hallucinations in RAG settings. It is trained on a mix of datasets, including CovidQA, PubmedQA, DROP, and RAGTruth, that combine manually annotated and synthetic data. Given a document, a question, and an answer, the model evaluates whether the answer is faithful to the document: the answer must not introduce information beyond the document and must not contradict it.
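The input/output contract described above can be sketched roughly as follows. This is a minimal illustration, not the official usage: the prompt wording, the `REASONING` and `SCORE` output keys, and the helper names `build_prompt` and `parse_verdict` are assumptions made for this example, and the model call itself is left out. Check the model card for the exact template before relying on it.

```python
import json

# Hypothetical prompt template (an assumption for illustration);
# the wording used by the released model may differ.
PROMPT_TEMPLATE = """Given the following QUESTION, DOCUMENT and ANSWER, decide whether the ANSWER is faithful to the DOCUMENT. The ANSWER must not add information beyond the DOCUMENT and must not contradict it.

QUESTION:
{question}

DOCUMENT:
{document}

ANSWER:
{answer}

Reply in JSON with the keys "REASONING" and "SCORE", where SCORE is PASS or FAIL."""


def build_prompt(question: str, document: str, answer: str) -> str:
    """Combine the three inputs into a single evaluation prompt."""
    return PROMPT_TEMPLATE.format(
        question=question.strip(),
        document=document.strip(),
        answer=answer.strip(),
    )


def parse_verdict(raw_output: str) -> tuple[bool, str]:
    """Extract (is_faithful, reasoning) from the model's raw text output.

    Assumes the output contains one JSON object with SCORE and REASONING keys.
    """
    start, end = raw_output.find("{"), raw_output.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw_output[start:end + 1])

    score = str(data.get("SCORE", "")).strip().upper()
    if score not in {"PASS", "FAIL"}:
        raise ValueError(f"unexpected SCORE value: {score!r}")

    reasoning = data.get("REASONING", "")
    if isinstance(reasoning, list):  # reasoning may arrive as a list of steps
        reasoning = " ".join(str(step) for step in reasoning)
    return score == "PASS", str(reasoning)


if __name__ == "__main__":
    prompt = build_prompt(
        question="When was the tower completed?",
        document="The tower was completed in 1889.",
        answer="It was completed in 1899.",
    )
    # A hand-written stand-in for what the model might return.
    sample_output = '{"REASONING": ["The document says 1889, the answer says 1899."], "SCORE": "FAIL"}'
    faithful, why = parse_verdict(sample_output)
    print(faithful, why)
```

In a real pipeline the string from `build_prompt` would be sent to the model (for example through a text-generation library), and the generated text would be passed to `parse_verdict`.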

Main Features

How to Use

Target Users

The target users are researchers, developers, and enterprises who need a model that can evaluate the faithfulness of AI-generated content and detect hallucinations, especially in fields where information accuracy is critical, such as medicine, finance, and academic research.

Examples

Researchers use the model to assess whether answers about the medical literature are faithful to the source documents.

Financial analysts use the model to check whether generated information about financial reports is accurate.

Academic institutions use the model to validate generated statements about data and conclusions in academic research.


Related Recommendations

Discover more similar quality AI tools

Firecrawl MCP Server

Firecrawl MCP Server is a plug-in that adds web crawling capabilities to LLM clients such as Cursor and Claude. It can crawl, search, and extract web content, and provides features such as automatic retries and rate limiting, making it suitable for developers and researchers. It is flexible and scalable, and can be used for batch crawling and in-depth research.

DeepSeek-Prover-V2-671B

DeepSeek-Prover-V2-671B is an advanced AI model designed to provide powerful reasoning capabilities. It builds on recent techniques and suits a variety of application scenarios. The model is open source and aims to lower technical barriers so that more developers and researchers can use AI technology to innovate and work more efficiently.

AoT

Atom of Thoughts (AoT) is a reasoning framework that turns the reasoning process into a Markov process by representing solutions as combinations of atomic problems. Through its decomposition and contraction mechanism, the framework significantly improves the performance of large language models on reasoning tasks while reducing wasted computation. AoT can be used as a standalone reasoning method or as a plug-in for existing test-time scaling methods, combining the advantages of different approaches. The framework is open source and implemented in Python, making it suitable for researchers and developers working on natural language processing and large language models.

ViDoRAG

ViDoRAG is a multimodal retrieval-augmented generation framework developed by Alibaba's natural language processing team, designed for complex reasoning tasks over visually rich documents. It significantly improves the robustness and accuracy of the generative model through dynamic iterative reasoning agents and a multimodal retrieval strategy driven by a Gaussian Mixture Model (GMM). Its main advantages include efficient processing of visual and textual information, support for multi-hop reasoning, and high scalability. The framework is suitable for scenarios where information must be retrieved from large document collections and used for generation, such as intelligent question answering, document analysis, and content creation. Its open source nature and modular design make it a useful tool for researchers and developers in multimodal generation.

Level-Navi Agent-Search

Level-Navi Agent is an open source, general-purpose web search agent framework that decomposes complex questions and searches the Internet step by step until it can answer the user's question. It also provides the Web24 dataset, a benchmark for evaluating model performance on search tasks that covers five major domains: finance, games, sports, movies, and events. The framework supports zero-shot and few-shot learning and serves as a reference for applying large language models to Chinese web search agents.

M2RAG

M2RAG is a benchmark and code library for retrieval-augmented generation in multimodal contexts. It has models answer questions by retrieving documents across multiple modalities, and evaluates how well multimodal large language models (MLLMs) leverage multimodal contextual knowledge. The evaluation covers tasks such as image captioning, multimodal question answering, fact verification, and image reranking, with the aim of improving models' in-context learning with multimodal inputs. M2RAG gives researchers a standardized testing platform that helps advance the development of multimodal language models.

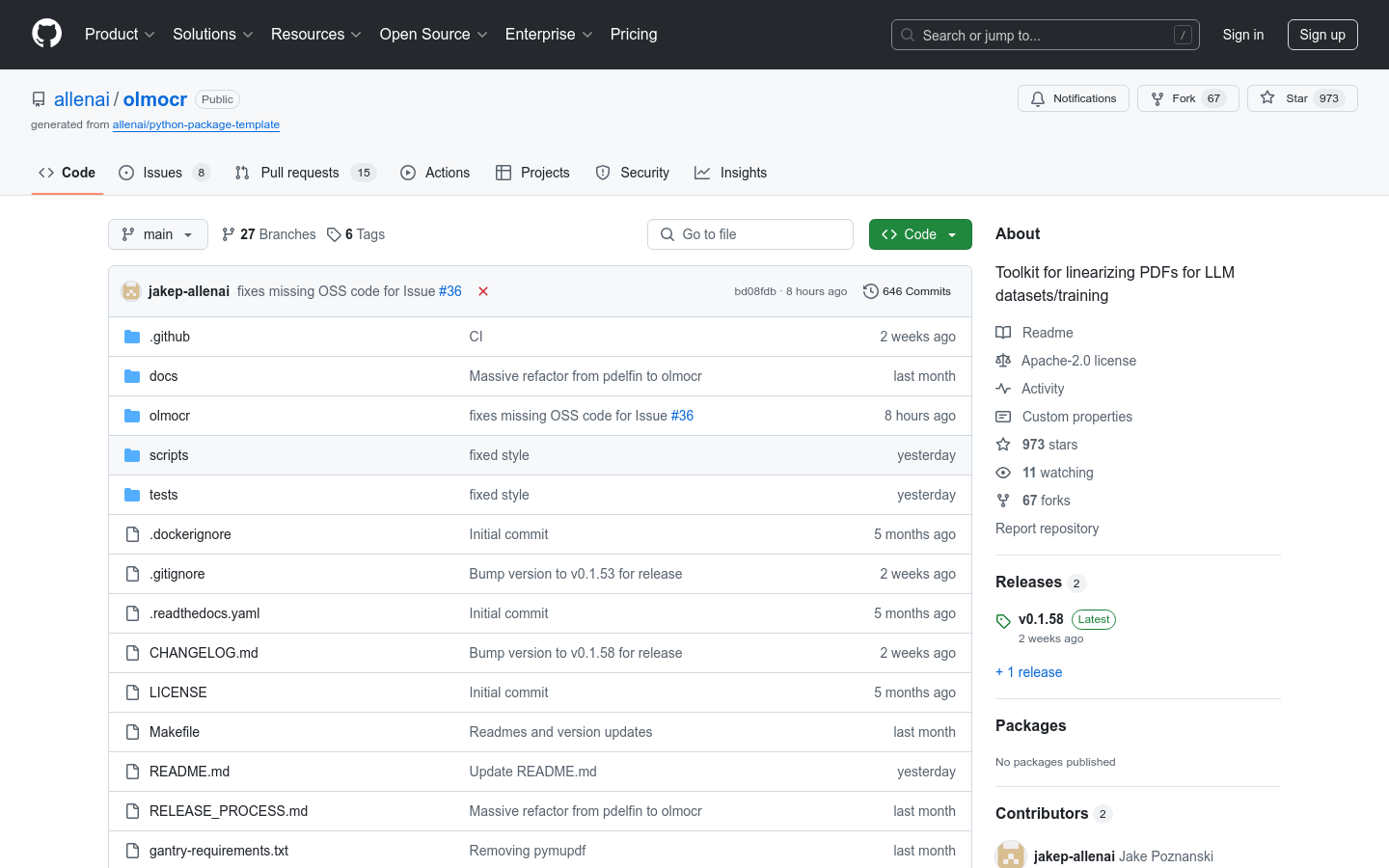

olmOCR

olmOCR is an open source toolkit developed by the Allen Institute for Artificial Intelligence (AI2), designed to linearize PDF documents for use in training large language models (LLMs). PDF documents have complex structures that make them hard to use directly for model training; the toolkit addresses this by converting them into a format suitable for LLM processing. It supports a variety of functions, including natural text parsing, multi-version comparison, language filtering, and SEO spam removal. Its main advantage is that it can process large numbers of PDF documents efficiently, improving the accuracy and efficiency of text parsing through optimized prompting strategies and model fine-tuning. The toolkit is intended for researchers and developers who need to process large amounts of PDF data, especially in natural language processing and machine learning.

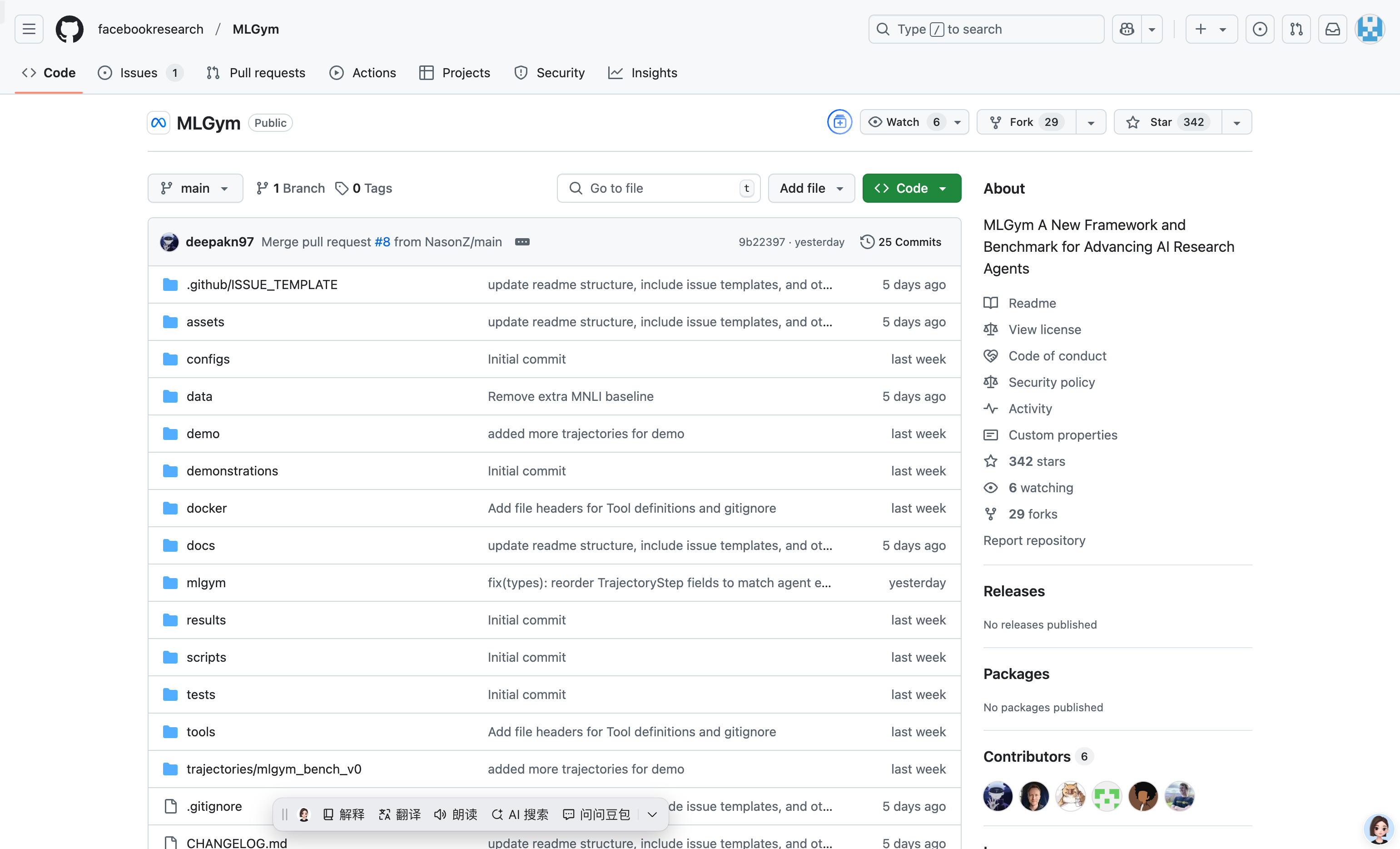

MLGym

MLGym is an open source framework and benchmark developed by Meta's GenAI team and the UCSB NLP team for training and evaluating AI research agents. By providing diverse AI research tasks, it supports the development of reinforcement learning algorithms and helps researchers train and evaluate models in realistic research scenarios. The framework covers tasks in computer vision, natural language processing, and reinforcement learning, and aims to provide a standardized testing platform for AI research.

PIKE-RAG

PIKE-RAG is a domain-knowledge and reasoning-augmented generation system developed by Microsoft, designed to enhance the capabilities of large language models (LLMs) through knowledge extraction, knowledge storage, and reasoning logic. Its multi-module design lets it handle complex multi-hop question-answering tasks, and it significantly improves question-answering accuracy in fields such as industrial manufacturing, mining, and pharmaceuticals. Its main advantages include efficient knowledge extraction, strong integration of information from multiple sources, and multi-step reasoning, so it performs well in scenarios that require deep domain knowledge and complex logical reasoning.

SWE-Lancer

SWE-Lancer is a benchmark launched by OpenAI to evaluate the performance of frontier language models on real-world freelance software engineering tasks. The benchmark covers independent engineering tasks ranging from a $50 bug fix to a $32,000 feature implementation, as well as management tasks in which the model chooses between technical implementation options. By mapping model performance to monetary value, SWE-Lancer offers a new perspective on the economic impact of AI model development and advances related research.

Goedel-Prover

Goedel-Prover is an open source large language model focused on automated theorem proving. It translates mathematical problems stated in natural language into a formal language (such as Lean 4) and generates formal proofs, significantly improving the efficiency of automated proving. The model achieved a success rate of 57.6% on the miniF2F benchmark, surpassing other open source models. Its main advantages include high performance, open source scalability, and deep understanding of mathematical problems. Goedel-Prover aims to advance automated theorem proving and to provide tool support for mathematical research and education.

OpenThinker-32B

OpenThinker-32B is an open source reasoning model developed by the Open Thoughts team. It achieves strong reasoning capabilities by scaling up the data, verifying reasoning paths, and scaling up the model size. The model outperforms existing open-data reasoning models on reasoning benchmarks in mathematics, code, and science. Its main advantages include open source data, high performance, and scalability. The model is fine-tuned from Qwen2.5-32B-Instruct on large-scale datasets and aims to give researchers and developers a powerful reasoning tool.

RAG-FiT

RAG-FiT is a library designed to improve the capabilities of large language models (LLMs) through retrieval-augmented generation (RAG). It helps models make better use of external information by creating specialized RAG-augmented datasets, and it supports the entire process from data preparation to model training, inference, and evaluation. Its main advantages include modular design, customizable workflows, and support for multiple RAG configurations. RAG-FiT is released under an open source license and is suitable for researchers and developers doing rapid prototyping and experimentation.

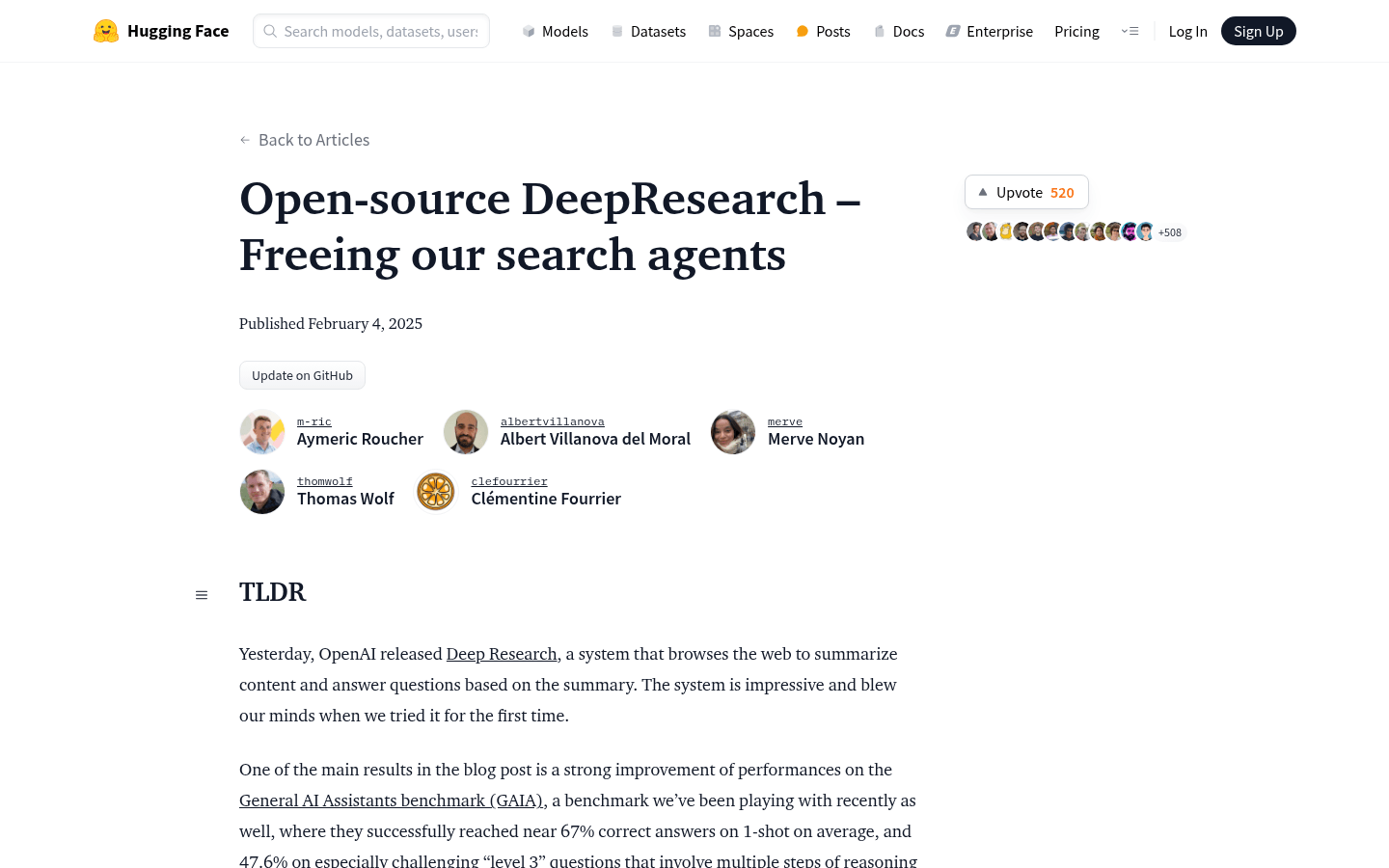

Open-source DeepResearch

Open-source DeepResearch is an open source project that aims to reproduce functionality similar to OpenAI Deep Research using open source frameworks and tools. The project is built on the Hugging Face platform and uses open source large language models (LLMs) and agent frameworks to perform complex multi-step reasoning and information retrieval through code agents and tool calls. Its main advantages are that it is open source, highly customizable, and able to draw on the community for continuous improvement. The goal is to let everyone run DeepResearch-style agents locally with their preferred models, fully local and customizable.

node-DeepResearch

node-DeepResearch is a deep research tool based on Jina AI technology that finds answers to questions by continuously searching and reading web pages. It uses the LLM capabilities provided by Gemini and the web search capabilities of Jina Reader to handle complex queries, generating answers through multi-step reasoning and information integration. Its main advantages are strong information retrieval and reasoning and the ability to handle problems that require multi-step solutions. It suits scenarios that call for in-depth research and information mining, such as academic research and market analysis. The project is open source, and users can obtain the code from GitHub and deploy it themselves.

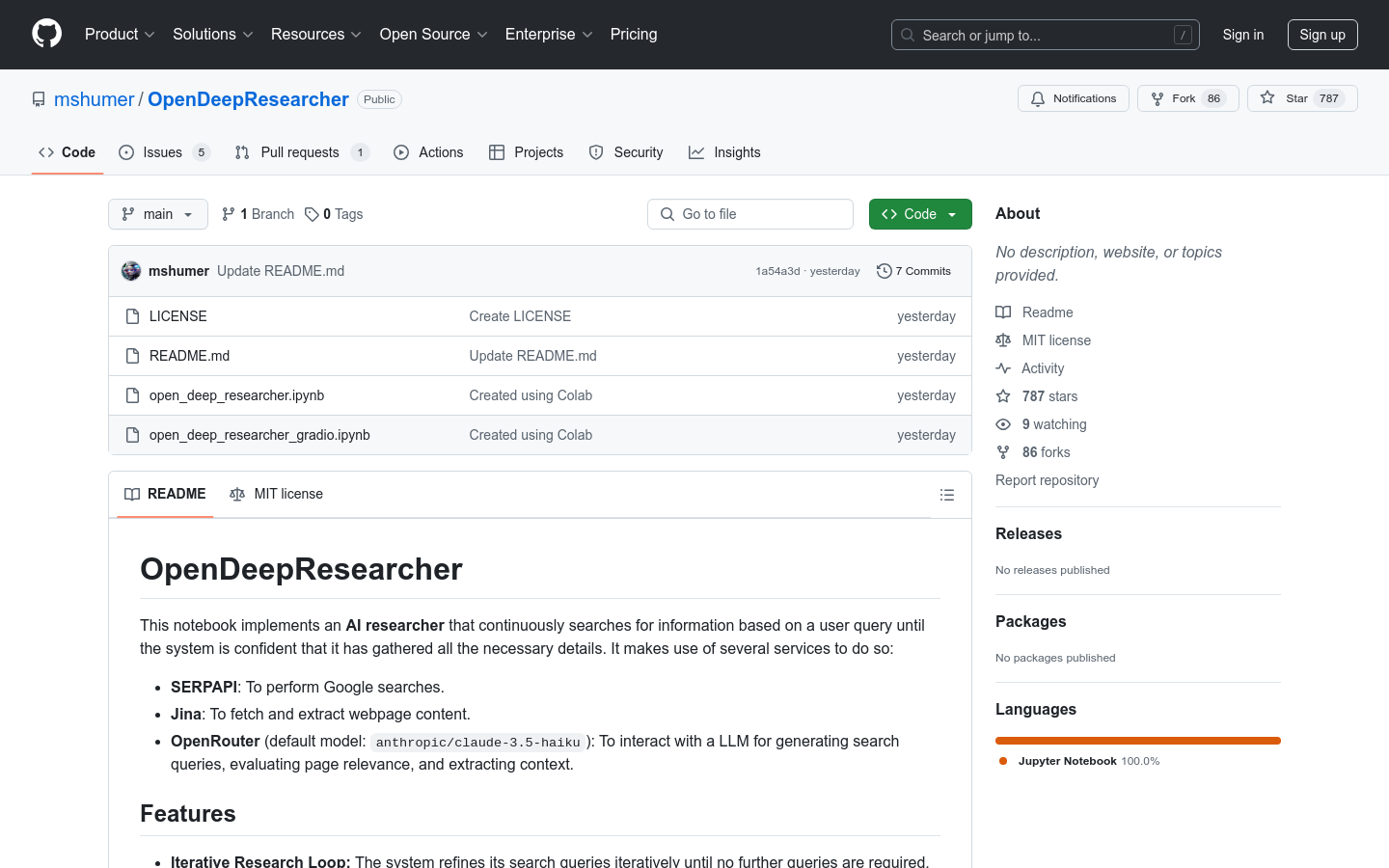

OpenDeepResearcher

OpenDeepResearcher is an AI-based research tool that combines services such as SerpAPI, Jina, and OpenRouter to run multiple rounds of iterative searches on a user's query topic until enough information has been collected, and then generates a final report. Its core advantages are efficient asynchronous processing, deduplication, and LLM-based decision support, which can significantly improve research efficiency. It is aimed mainly at researchers, students, and professionals who need to conduct large-scale literature searches and organize information, helping them obtain high-quality research material quickly. The tool is open source, and users can deploy and use it as needed.