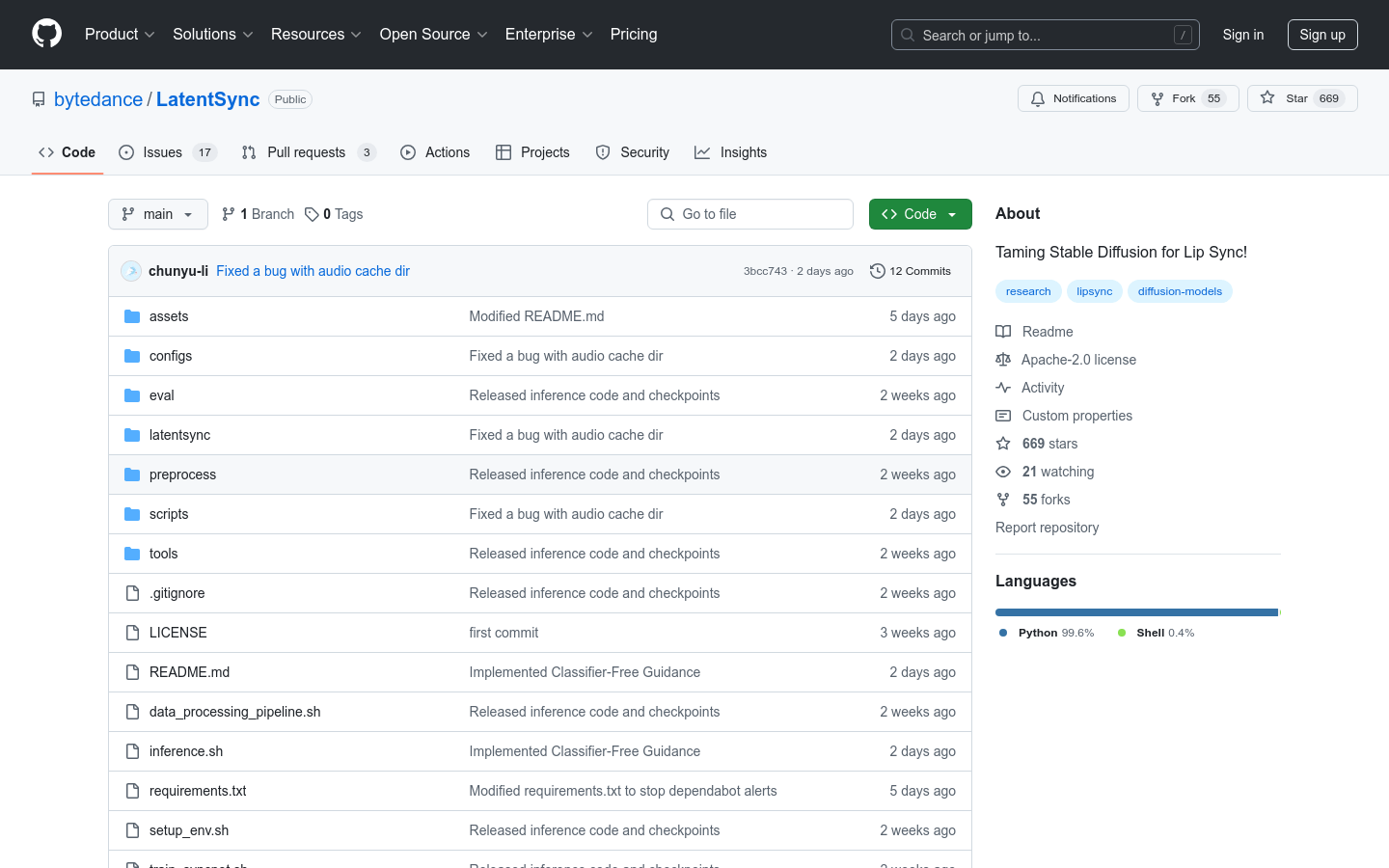

LatentSync

A lip synchronization framework based on an audio-conditioned latent diffusion model

Product Details

LatentSync is a lip sync framework developed by ByteDance and built on an audio-conditioned latent diffusion model. It uses Stable Diffusion directly to model complex audio-visual correlations, without any intermediate motion representation. Its proposed temporal representation alignment (TREPA) technique improves the temporal consistency of generated video frames while preserving lip sync accuracy. The framework is useful in video production, virtual anchoring, and animation, where it can raise production efficiency, reduce labor costs, and give viewers a more realistic and natural audio-visual experience. Because LatentSync is open source, it can also be adopted in both academic research and industrial practice, which supports further development of related technologies.
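One way to picture the temporal alignment idea is as a loss that compares how a generated clip changes over time with how a reference clip changes. The Python sketch below is purely illustrative and is not LatentSync's implementation: simple frame-to-frame differences stand in for a learned temporal representation, frames are assumed to be flat lists of numbers, and the names `temporal_representation` and `alignment_loss` are hypothetical.

```python
from typing import List, Sequence

# Assumption: each frame (or frame latent) is flattened into a vector of floats.
Frame = Sequence[float]


def temporal_representation(frames: Sequence[Frame]) -> List[List[float]]:
    """Stand-in temporal encoder: differences between consecutive frames.

    A real system would use a learned video model here; frame differences
    are only a toy substitute that captures change over time.
    """
    return [
        [cur_v - prev_v for prev_v, cur_v in zip(prev, cur)]
        for prev, cur in zip(frames, frames[1:])
    ]


def alignment_loss(generated: Sequence[Frame], reference: Sequence[Frame]) -> float:
    """Mean squared distance between the temporal representations of two clips."""
    if len(generated) != len(reference) or len(generated) < 2:
        raise ValueError("clips must have the same length and at least two frames")

    gen_rep = temporal_representation(generated)
    ref_rep = temporal_representation(reference)

    total = 0.0
    count = 0
    for gen_row, ref_row in zip(gen_rep, ref_rep):
        for g, r in zip(gen_row, ref_row):
            total += (g - r) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    reference = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]  # steady motion
    jittery = [[0.0, 0.0], [2.0, 2.0], [2.0, 2.0]]    # jumps, then stalls
    print(alignment_loss(reference, reference))
    print(alignment_loss(jittery, reference))
```

In this toy version a clip that moves exactly like the reference scores zero, while a clip that reaches the same end state through uneven motion is penalized. Because only change over time is compared, a separate objective would still be needed to judge per-frame content such as lip shape.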

Main Features

How to Use

Target Users

LatentSync suits professionals who need lip synchronization, such as video producers, animators, virtual anchor developers, game developers, and film and television visual effects artists, as well as academic researchers and enthusiasts interested in lip sync technology.

Examples

When making virtual anchor videos, use LatentSync to automatically generate realistic lip movements based on the anchor's voice, making the video more realistic and interactive.

Animation production companies can use LatentSync to automatically generate matching lip animations when dubbing characters, saving the time and cost of traditional manual lip animation production.

Film and television visual effects teams can use LatentSync to repair or improve the lip synchronization of on-screen characters, raising the overall visual quality of the footage.


Related Recommendations

Discover more similar quality AI tools

Kling 2.5 AI

Kling 2.5 Turbo is an AI video generation model with a markedly better grasp of complex causal relationships and temporal instructions, which improves motion smoothness and camera stability. Generation is also cheaper: a 5-second high-quality video costs 25 points instead of 35, a reduction of roughly 30%. It is also billed as the world's first model to output native 10-, 12-, and 16-bit HDR video in EXR format for professional studio workflows and pipelines, with a draft mode that generates 20 times faster for quick iteration. Pricing plans include a free entry version, a $29 professional version, and a $99 studio version, covering users from individual creators to corporate teams.

iMideo

iMideo is an AI video generation platform with multiple advanced AI models such as Veo3 and Seedance. Its main advantage is that it can quickly convert still pictures into high-quality AI videos without complex editing skills, and it supports multiple aspect ratios and resolution settings. The platform provides a free version, allowing users to try the image-to-video function for free first. The paid plan starts at US$5.95 per month, which is suitable for all types of creators to easily produce professional-level video content.

Ray 3 AI

Ray 3 is the first video AI reasoning model launched by Lumakey, capable of generating true 10-, 12-, and 16-bit HDR video in EXR format. It gives the film, television, and advertising industries a new tool for high-quality video production. Its main advantages are high-bit-depth HDR output, which delivers better color and brightness for high-end projects, and support for high-resolution video production that meets professional needs. Pricing is not stated in the documentation. The product is positioned for high-end film, television, and advertising production.

Luma Ray3AI

Ray3 is described as the world's first video model with reasoning capabilities, powered by Luma Ray3. It can think, plan, and create professional-grade content, with native HDR generation and an intelligent draft mode for rapid iteration. Key benefits include reasoning intelligence that deeply understands prompts, plans complex scenes, and checks its own output; native 10-, 12-, and 16-bit HDR video for professional studio workflows; and a draft mode that generates 20 times faster, making it easy to refine concepts quickly. Pricing includes a free version, a $29 professional version, and a $99 studio version, covering user groups from exploration to professional commercial use.

Ray3

Ray3 is described as the world's first AI video model with reasoning intelligence and 16-bit HDR output. It offers an advanced video generation solution for film and television producers, advertising companies, and studios. Its main advantages are:

- output video with high fidelity, consistency, and controllability;
- 16-bit HDR support, providing professional-level color depth and dynamic range;
- reasoning intelligence that understands scene context to keep each frame logically consistent and physically accurate;
- compatibility with Adobe software, so it integrates into existing production workflows;
- a 5x-speed draft mode for rapid creative testing.

The product is positioned for professional video production. No specific price is given, but a "trial" option is offered, which suggests a free trial followed by a paid plan.

Lucy Edit AI

Lucy Edit AI is described as the first foundation model for text-guided video editing, released as open source by DecartAI. It changes how videos are created by letting creators edit footage with text commands alone, without complicated operations. Key benefits include fast processing, high accuracy, broad creative potential, and a simple, intuitive interface, and the listing says it is trusted by content creators around the world. The product is free to use and is positioned to help users complete professional video editing efficiently and conveniently.

Ray 3

Ray 3 AI Video Generator is a video generation platform driven by Ray 3 AI technology, described as the world's first AI video model with HDR generation and intelligent reasoning capabilities. It gives professional creators and enterprises a video production tool that can quickly convert text into high-quality 4K HDR videos. Its main advantages include intelligent reasoning that interprets user intent, support for multiple video styles, and practical functions such as voice narration and smart subtitles. It was developed to meet market demand for efficient, high-quality video creation. Pricing includes a free version, a professional version ($29.9 per month), and an enterprise version ($999). It is positioned to serve creators and enterprises worldwide in professional HDR video creation.

Hailuo 02 fast

Hailuo 02 is an AI video generator that uses MoE technology to convert text and images into 720P videos. Its main advantages are high-definition video generation and text-to-video and image-to-video conversion.

Wan 2.2

Wan 2.2 is an AI video generator that uses advanced MoE technology to convert text and images into 720P videos. It supports consumer-grade GPUs and can generate professional videos in real time.

Veo 5 AI

Veo 5 AI Video Generator is a next-generation AI video generator based on Veo 5 technology that can quickly create ultra-realistic videos. It uses the latest Veo 5 AI model for intelligent scene understanding, natural motion synthesis, and context-aware rendering.

LTXV 13B

LTXV 13B is an AI video generation model with 13 billion parameters, developed by Lightricks. Released in May 2025, it is a significant upgrade over its predecessor, the LTX video model, improving both the quality and speed of video generation. It supports real-time, high-quality video generation and suits all types of creative content production. The model uses multi-scale rendering to generate video 30 times faster than similar models, and it runs smoothly on consumer hardware.

Veozon AI Video Generator

Veo3 AI Video Generator is a tool that uses Google's Veo3 AI model to generate 4K videos from text. It features advanced physics simulation and realistic visual effects for turning ideas into cinematic content. Price: Paid.

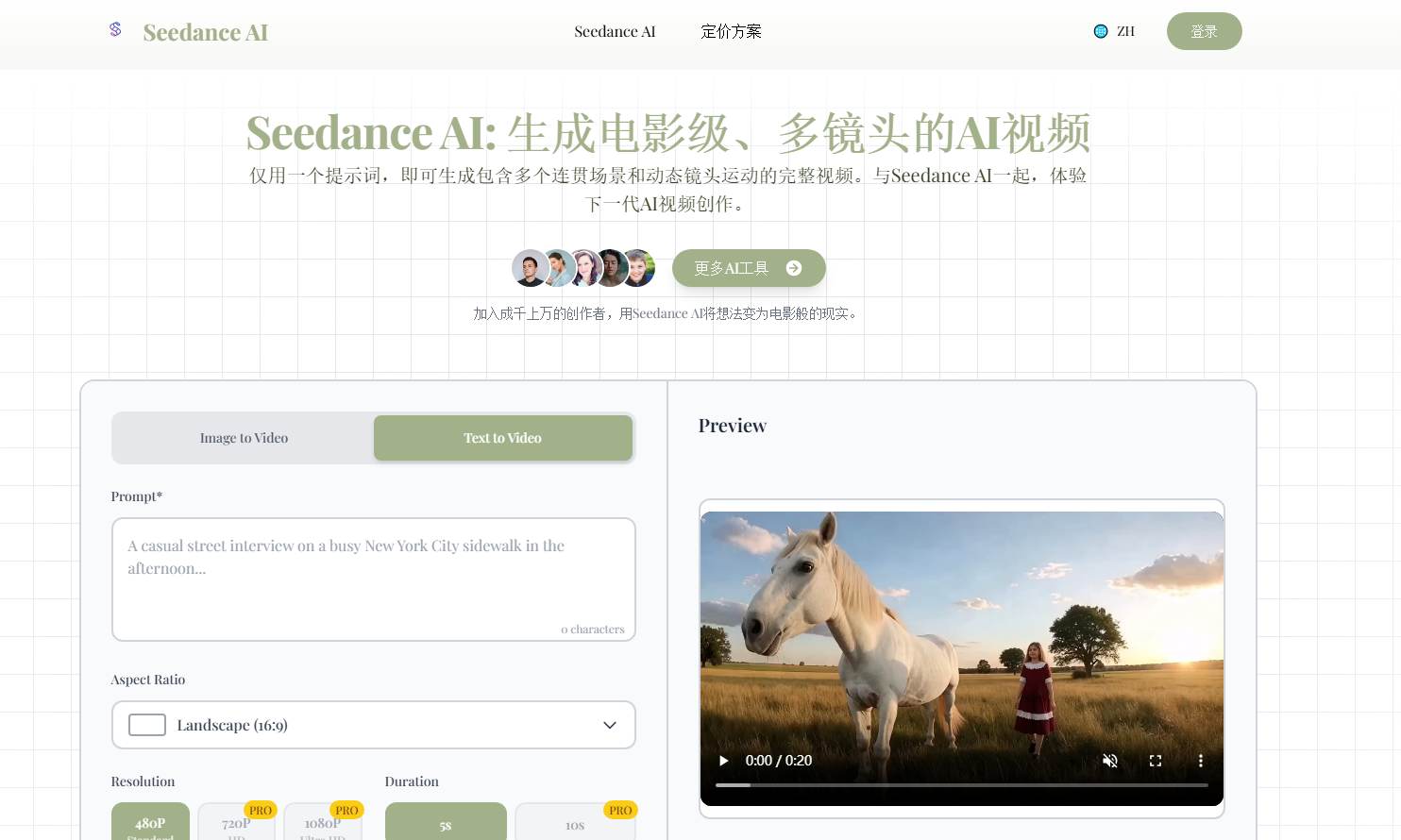

Seedance AI

Seedance AI is a video model that generates high-quality narrative videos from simple text prompts. Its features include dynamic camera movement and 1080p high-definition output, making it easier for users to create cinematic-quality videos.

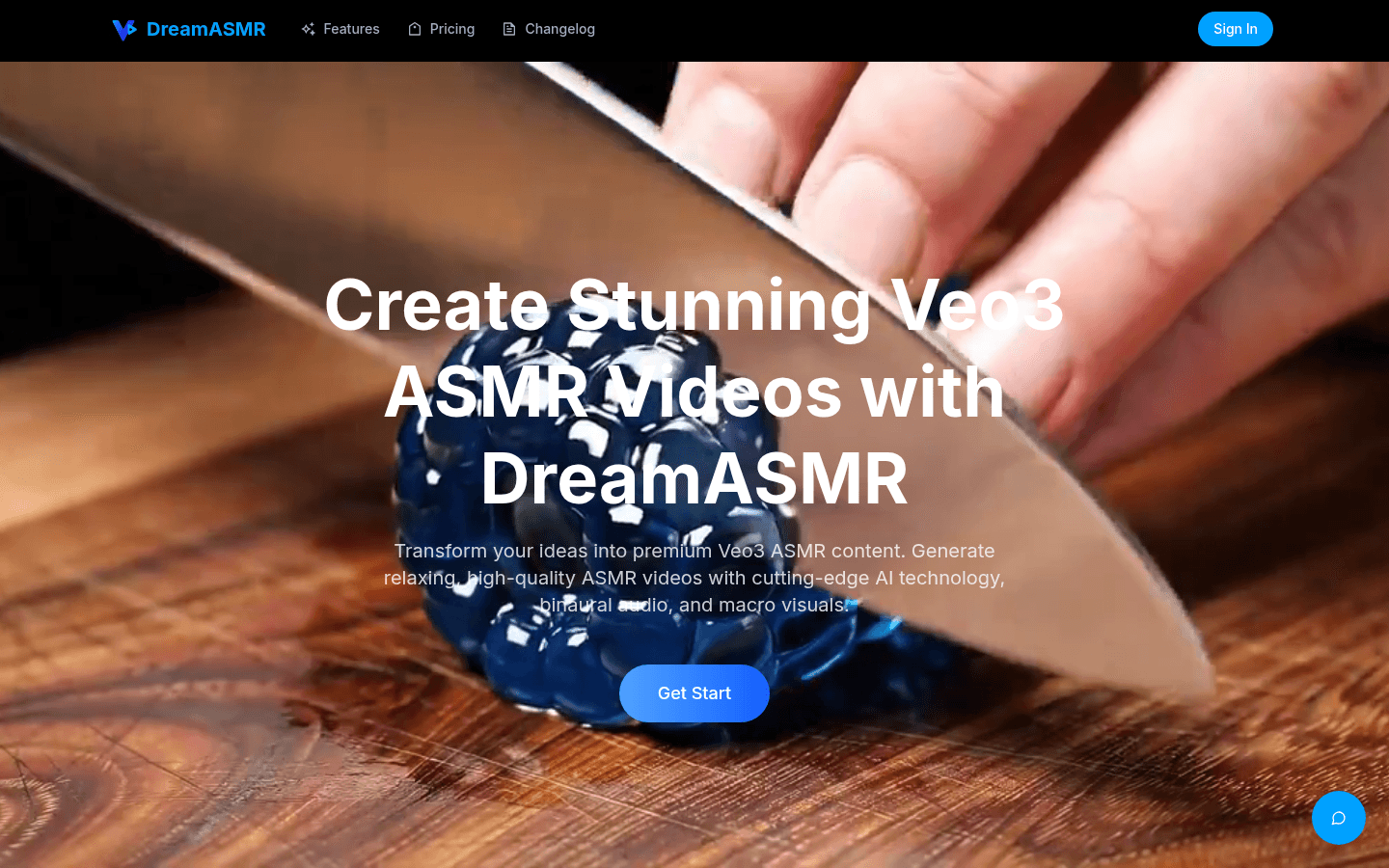

DreamASMR

DreamASMR uses Veo3 ASMR technology to create relaxing video content, combining AI video generation with binaural sound and carefully detailed visuals for an immersive ASMR experience.

LIP

LIP Sync AI is an AI technology that uses a global audio perception engine to turn still photos into lifelike talking videos. Its main advantage is efficient, realistic generation that animates photos with accurate lip synchronization. The product is positioned as a high-quality lip sync video generation service.

Veo3Video

Veo3 Video is a platform that uses the Google Veo3 model to generate high-quality videos. It keeps audio and lip movements synchronized during generation and delivers consistent video quality.