FlashInfer

FlashInfer is a high-performance GPU kernel library for serving large language models.

Product Details

FlashInfer is a high-performance GPU kernel library designed for serving large language models (LLMs). It improves LLM inference and deployment performance by providing efficient sparse and dense attention kernels, load-balanced scheduling, and memory-efficiency optimizations. FlashInfer offers PyTorch, TVM, and C++ APIs, making it easy to integrate into existing projects. Its main advantages are efficient kernel implementations, flexible customization, and broad compatibility. FlashInfer was developed to meet the growing demands of LLM applications for more efficient and reliable inference.

Main Features

How to Use

Target Users

FlashInfer is suitable for developers and researchers who need high-performance LLM inference and deployment, especially those running large-scale language model inference on GPUs.

Examples

In natural language processing tasks, FlashInfer accelerates large language model inference and shortens model response times.

In machine translation applications, FlashInfer optimizes the model's attention mechanism to improve translation quality and efficiency.

In intelligent question-answering systems, FlashInfer's efficient kernels enable fast text generation and retrieval.

Related Recommendations

Discover more high-quality AI tools like this one

100 Vibe Coding

100 Vibe Coding is an educational programming website focused on quickly building small web projects through AI technology. It skips complicated theories and focuses on practical results, making it suitable for beginners who want to quickly create real projects.

iFlow CLI

iFlow CLI is an interactive command-line tool designed to simplify how developers work in the terminal and improve their efficiency. It supports a variety of commands and functions, letting users run commands and management tasks quickly. Its key benefits are ease of use, flexibility, and customizability, making it suitable for a variety of development environments and project needs.

Never lose your work again

Claude Code Checkpoint is a companion app for developers working with Claude AI. It keeps your code safe by seamlessly tracking every code change, so work is never lost.

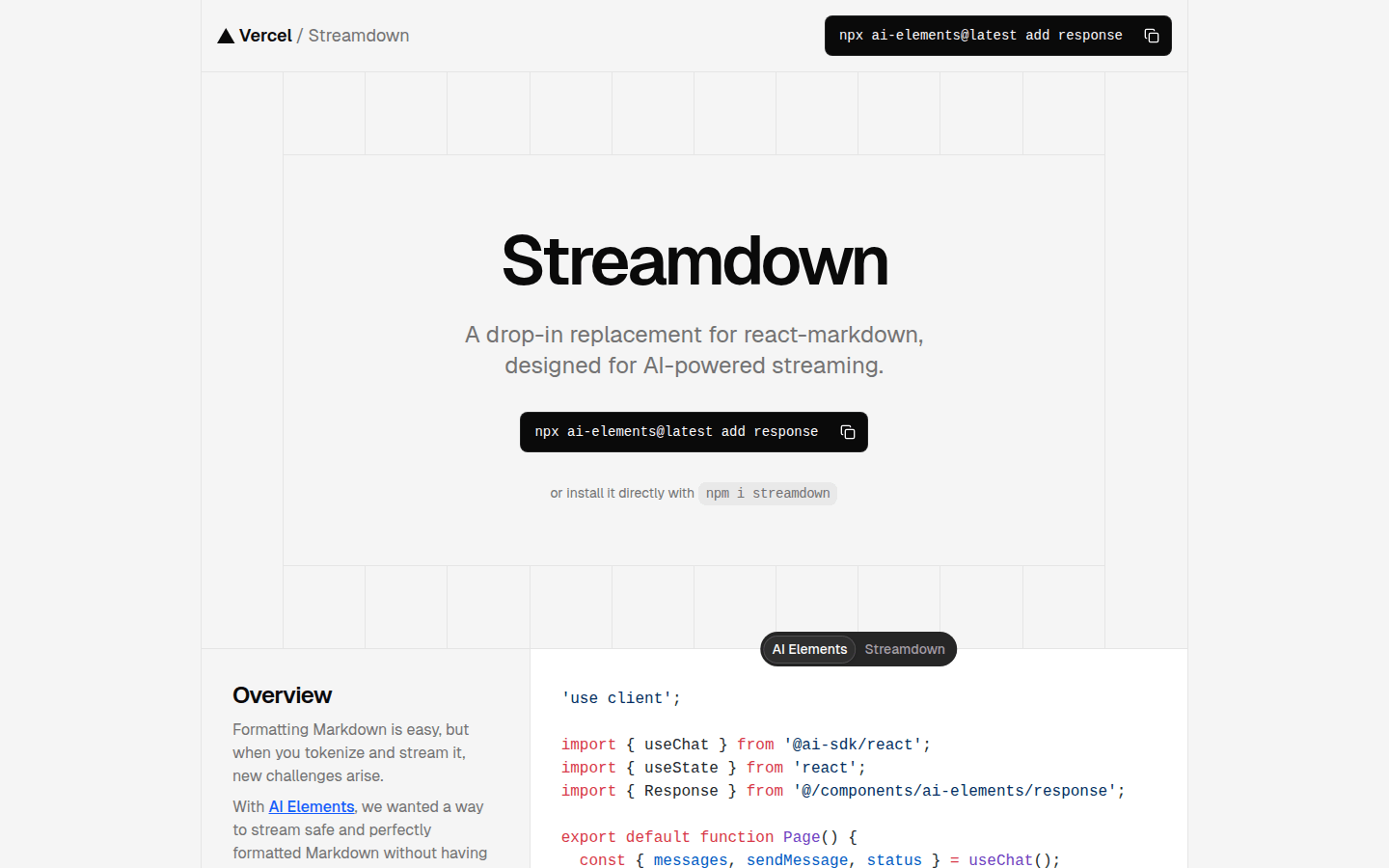

Streamdown

Streamdown is a drop-in replacement for React Markdown designed for AI-driven streaming. It addresses the challenges that arise when Markdown is rendered while it is still streaming, keeping the content safe and correctly formatted. Key advantages include AI-driven streaming, built-in security, and support for GitHub Flavored Markdown.

Compozy

Compozy is an enterprise-grade platform that uses declarative YAML to provide scalable, reliable and cost-effective distributed workflows, simplifying complex fan-out, debugging and monitoring for production-ready automation.

Dereference

Dereference is an IDE that integrates with CLI AI tools such as Claude Code and Gemini CLI. Its main advantages are multi-session orchestration and atomic branching, which aim to improve developer productivity. The product is aimed at developers who want to deliver quickly.

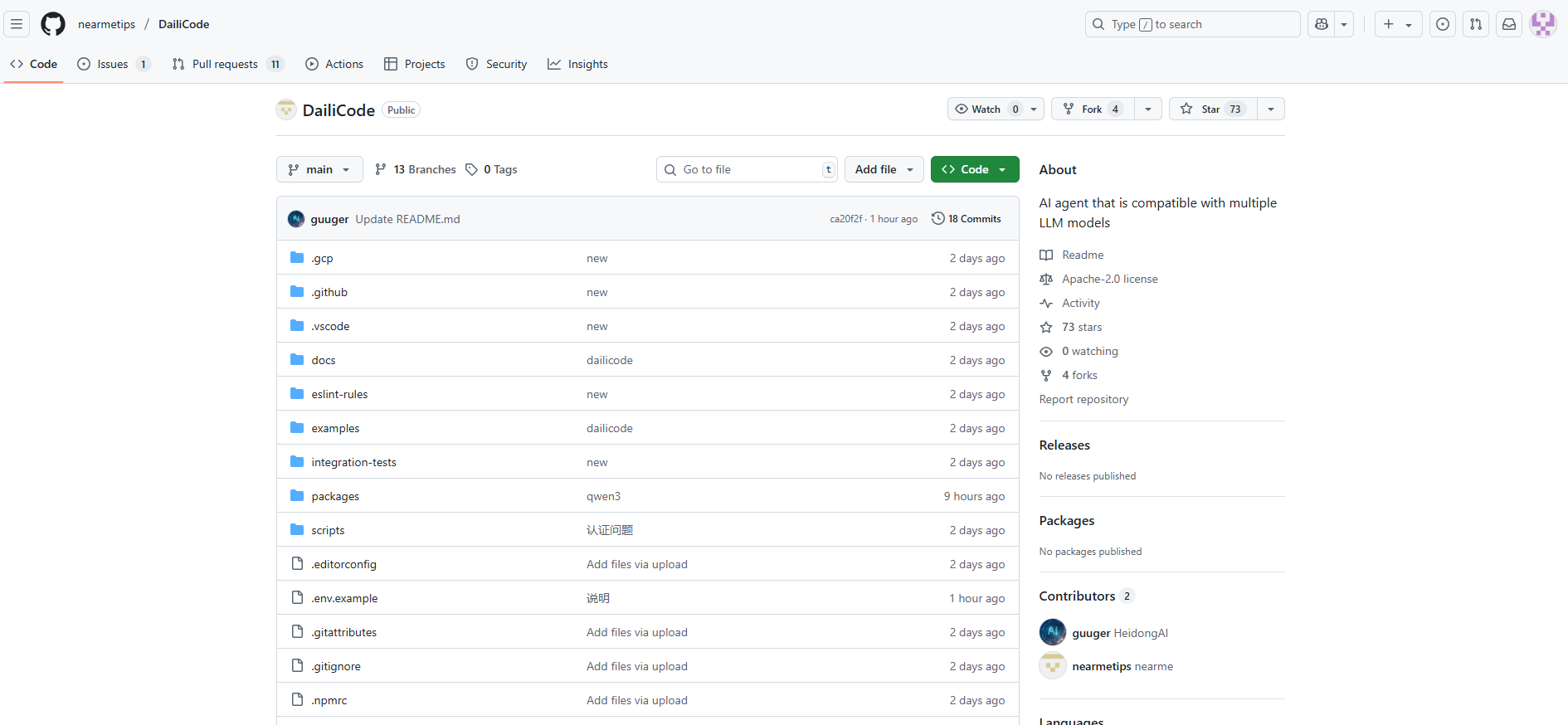

DailiCode

DailiCode is an open-source command-line AI tool that works with multiple large language models and can connect to your tools, understand code, and accelerate workflows. It supports multiple LLM providers, offers automation and multimodal capabilities, and is suitable for developers and other technical users.

CodeBuddy IDE

CodeBuddy IDE is an AI-integrated development tool designed to improve developers' efficiency and collaboration. Through intelligent code completion, design generation, and seamless back-end integration, it helps developers move from design to code faster while providing a secure development environment. The product is aimed at professional developers and offers a 30-day free trial, followed by a paid subscription.

Uncursor

Uncursor is an AI-powered vibe coding platform: you tell an AI agent what you want to build, and it builds it for you. Its main advantage is that users can code from anywhere, saving time and increasing efficiency. Uncursor is aimed at users who want to build applications and websites quickly.

Vibecode

VibeCode is a tool that helps users quickly transform ideas into mobile applications. Its main advantage is a fast, simple and efficient development process coupled with powerful functionality and flexible customization options.

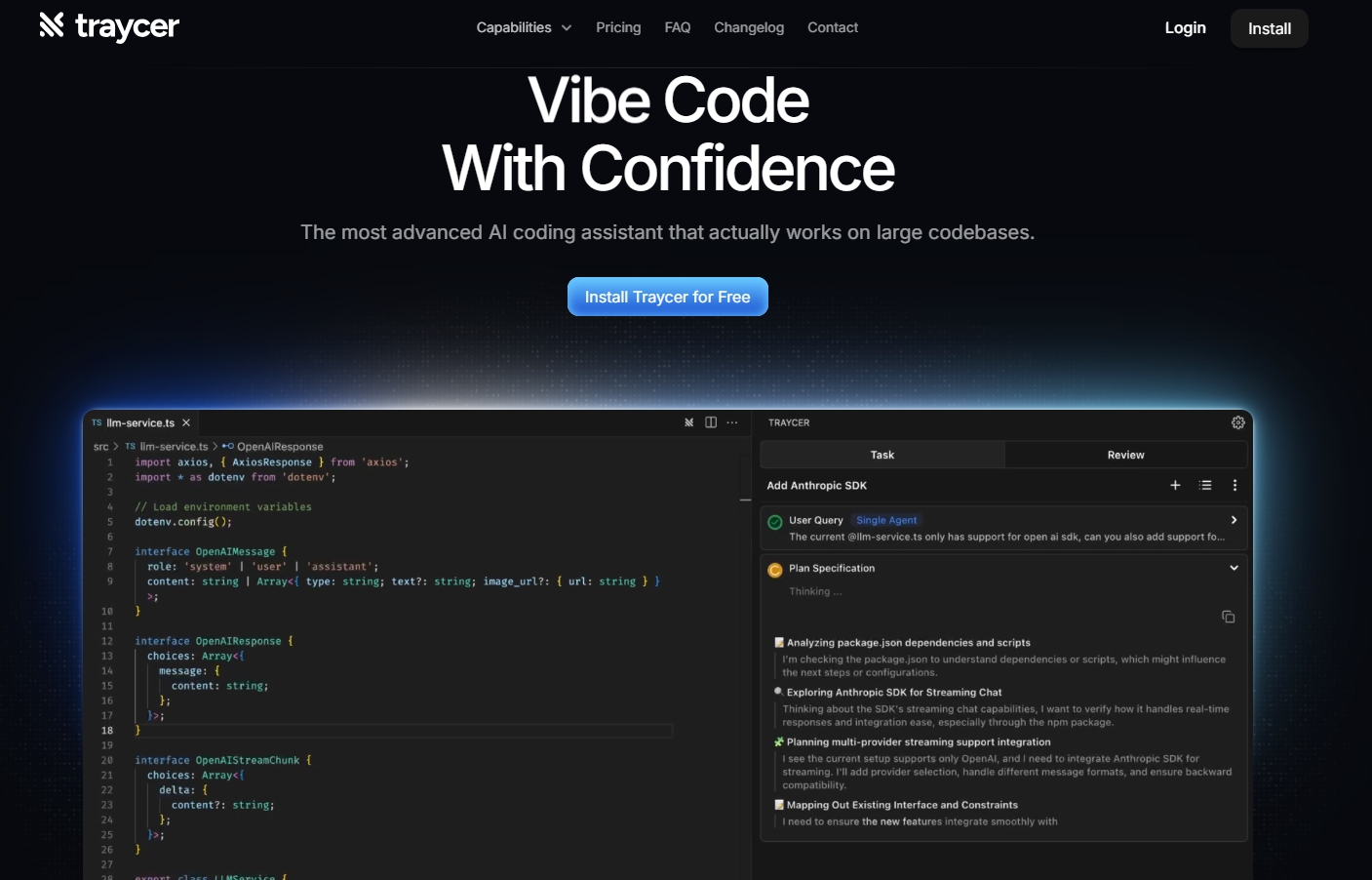

Traycer

Traycer is a coding assistant designed to make collaboration between developers and AI coding agents more efficient. Its scheduling capabilities help you manage coding projects step by step, and its intuitive interface and one-click handover make it easy to work with any major AI coding agent. The product is positioned as a productivity tool for modern software development.

Dualite

Dualite is an AI-based development tool. Its core product, Alpha, is an AI front-end engineer that helps developers quickly build scalable web and mobile applications. The tool is designed to provide secure, smart solutions for SaaS companies and small and medium-sized enterprises.

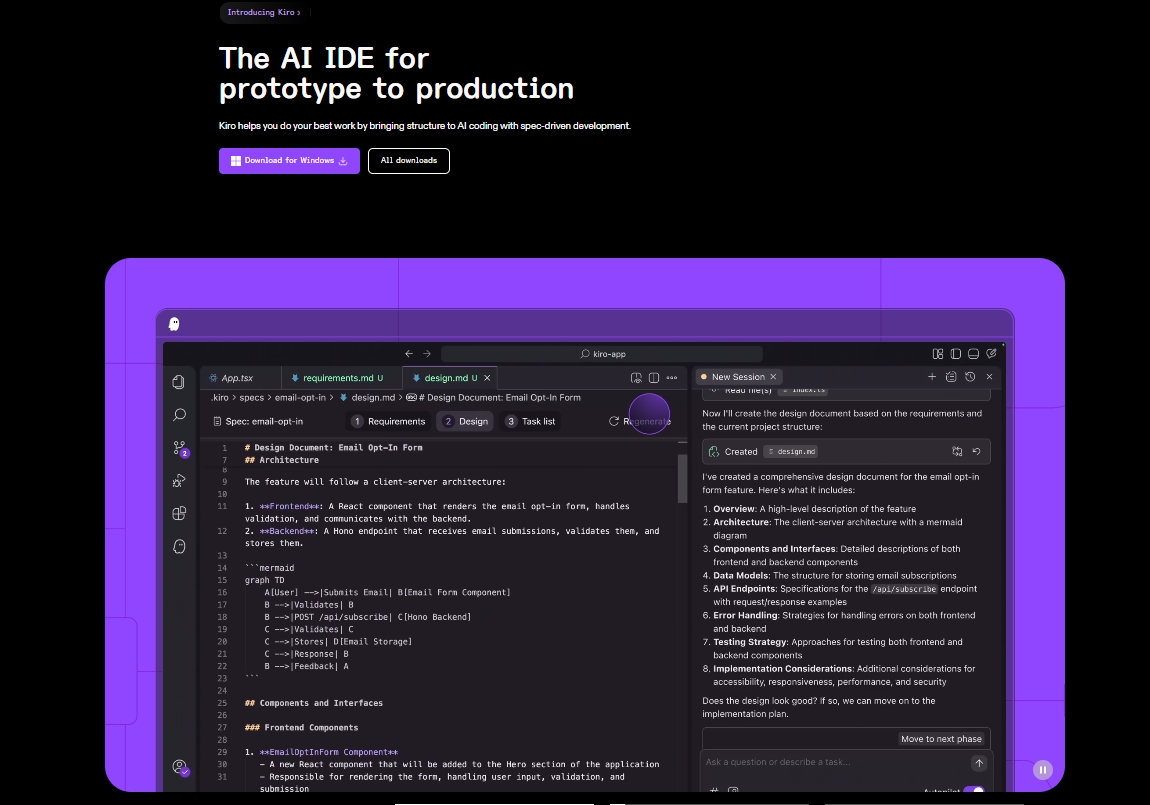

Kiro AI

Kiro AI is an integrated development environment built around specification-driven development. Unlike traditional coding tools, it turns your ideas into structured requirements, system designs, and production-ready code. Built on open-source VS Code and powered by Claude models on AWS Bedrock, Kiro AI bridges the gap between rapid prototyping and maintainable production systems.

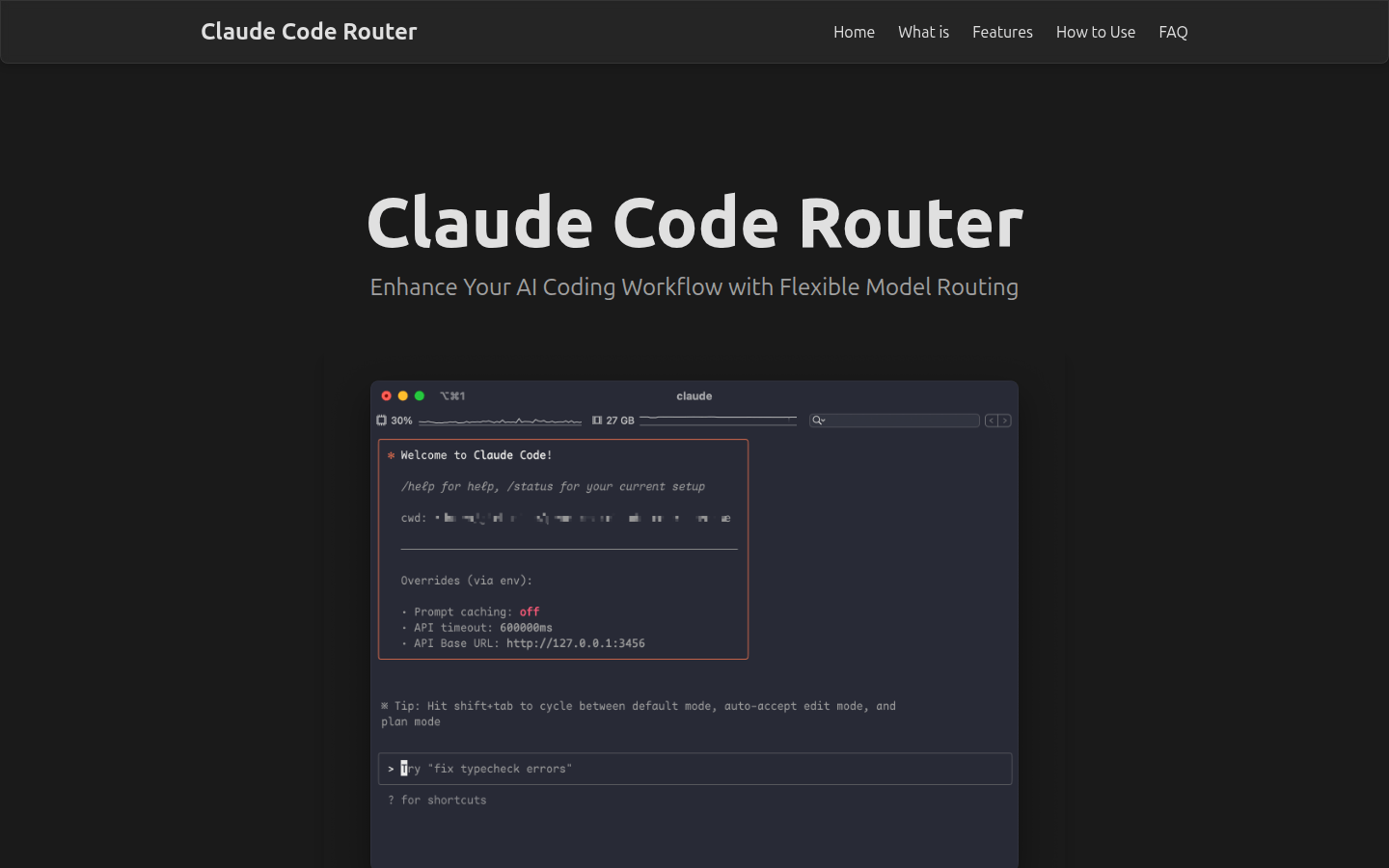

Claude Code Router

Claude Code Router is a tool built on Claude Code that lets users route coding requests to different AI models, providing greater flexibility and customization. Through a JSON configuration file, users can specify which model handles default requests, background tasks, reasoning, and long-context work.

Kiro

Kiro is an AI integrated development environment (IDE) that supports every stage of software development. It accepts multimodal input, understands context, and offers control over the full development lifecycle, much like working with a senior developer. Kiro's specification-driven approach lets users move quickly from concept to working prototype, improving development efficiency and quality.

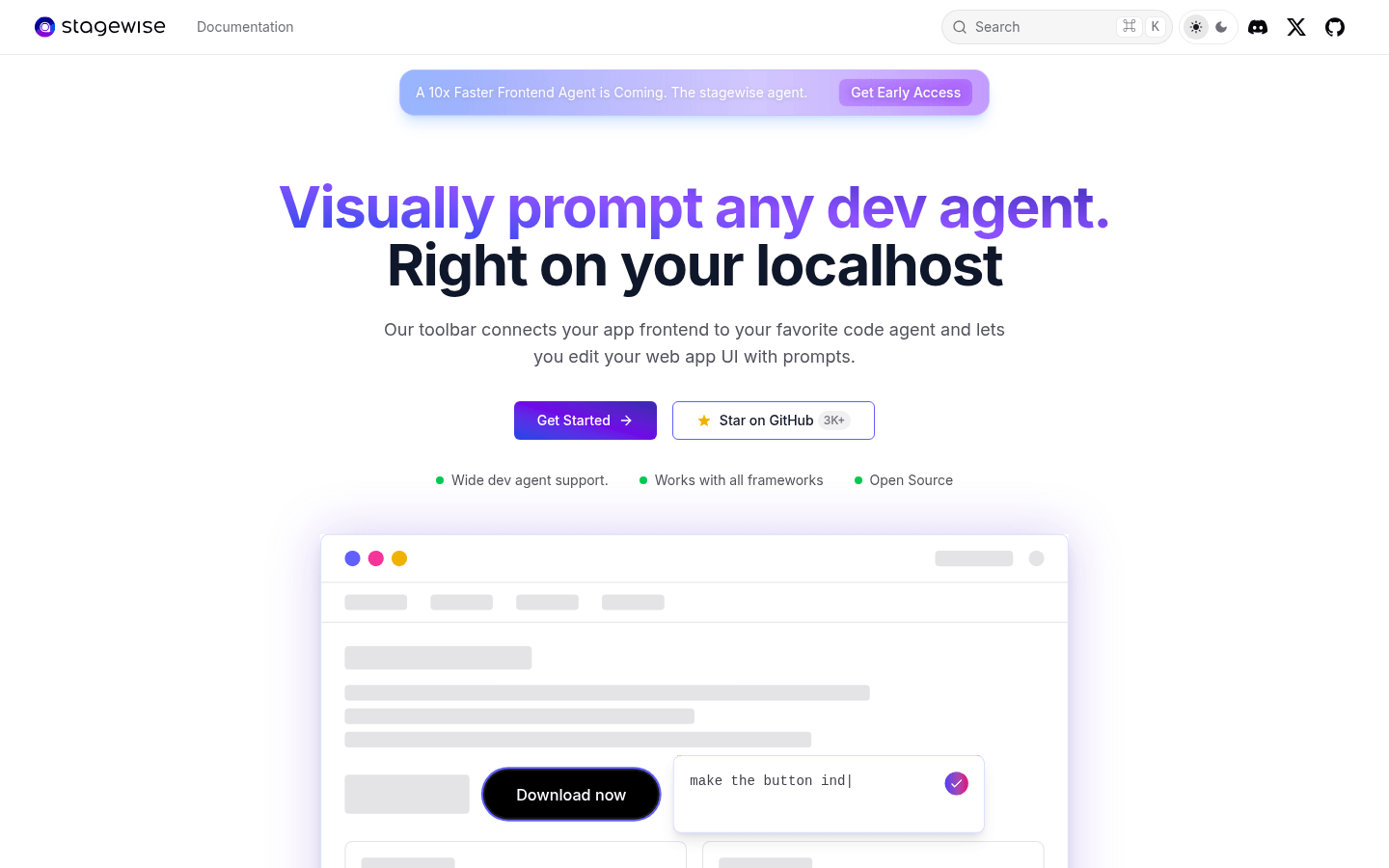

stagewise

Stagewise is a toolbar that connects your app's front end to your preferred coding agent, letting you edit your web app's UI with prompts. It gives your AI agents real-time context, which makes editing front-end code much easier.