Procyon AI Computer Vision Benchmark

A benchmarking tool for evaluating the performance of AI inference engines on Windows PCs and Apple Macs.

Product Details

Procyon AI Computer Vision Benchmark is a professional benchmarking tool from UL Solutions for evaluating the performance of AI inference engines on Windows PCs and Apple Macs. It runs a series of tests based on common machine vision tasks, using a range of advanced neural network models, to give engineering teams an independent, standardized assessment of the quality of their inference engine implementations and the performance of specialized hardware. The benchmark supports mainstream AI inference engines such as NVIDIA® TensorRT™ and Intel® OpenVINO™, and it can compare the performance of floating-point and integer-optimized models. Its main advantages are easy installation and operation, no need for complex configuration, and the ability to export detailed result files. The product is aimed at professional users such as hardware manufacturers, software developers, and scientific researchers, and supports their AI research, development, and optimization work.

Target Users

This product is aimed mainly at professional users such as engineering teams, hardware manufacturers, software developers, and scientific researchers. These users need an independent, standardized tool for evaluating the performance of AI inference engines on different hardware platforms, so that product development, optimization, and selection decisions are backed by data. For example, hardware manufacturers can use the tool to test and optimize the performance of their AI acceleration hardware; software developers can compare the strengths and weaknesses of different inference engines and choose the right one for their AI applications; and scientific researchers can use it in research on AI performance.

Examples

A hardware manufacturer used the tool to test and optimize the performance of its newly launched AI accelerator card. By comparing the performance of different inference engines on the hardware and adjusting driver parameters, it significantly improved the card's inference performance, making it more competitive in the market.

A software development company planned to develop an AI-based image recognition application. It used Procyon AI Computer Vision Benchmark to test the performance of various inference engines on the target hardware platform. Based on the test results, it selected the most suitable engine for integration to ensure efficient operation of the application.

Researchers working on AI model optimization used the tool to compare the performance of floating-point and integer-optimized models under different hardware configurations, which gave them an empirical basis for choosing a model optimization strategy.
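The comparisons described in these examples can be sketched in a few lines of Python. This is only an illustration: the `Result` record, the engine names, and the scores are hypothetical placeholders rather than the benchmark's actual result format or measured data, and the sketch assumes a single overall score per run where higher is better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    engine: str      # inference engine name (hypothetical)
    precision: str   # "float32" or "integer"
    score: float     # assumed overall score; higher is better


def best_per_precision(results):
    """Return the highest-scoring result for each precision."""
    best = {}
    for r in results:
        if r.precision not in best or r.score > best[r.precision].score:
            best[r.precision] = r
    return best


def integer_speedup(results, engine):
    """Ratio of one engine's integer score to its float32 score."""
    by_precision = {r.precision: r.score for r in results if r.engine == engine}
    return by_precision["integer"] / by_precision["float32"]


# Placeholder numbers for illustration only, not measured results.
results = [
    Result("engine_a", "float32", 100.0),
    Result("engine_a", "integer", 250.0),
    Result("engine_b", "float32", 120.0),
    Result("engine_b", "integer", 180.0),
]

best = best_per_precision(results)
print("Best float32 engine:", best["float32"].engine)
print("Best integer engine:", best["integer"].engine)
print("engine_a integer/float32 ratio:", integer_speedup(results, "engine_a"))
```

In practice the scores would come from the exported result files, and the choice of engine would also depend on factors this sketch ignores, such as model accuracy after integer optimization.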

Related Recommendations

Discover more similar quality AI tools

Zread

Zread is an open source project exploration platform where users can discover, share and manage various open source repositories, helping developers and enthusiasts better understand and utilize open source resources. It supports multiple languages and technology stacks and is suitable for users with various technical backgrounds.

Dyad

Dyad is a powerful application building tool that uses open source technology so that users can freely customize and build AI applications. Its main advantages include high flexibility, powerful functions, and support for local development and customization.

Fastn UCL

Fastn UCL is a multi-tenant MCP gateway and orchestration layer that connects your AI agents to any user tool in minutes. It features AI-optimized models, flexible design, and operates across dynamic enterprise data.

OpenMemory MCP

OpenMemory is an open source personal memory layer that provides private, portable memory management for large language models (LLMs). It ensures that users have complete control over their data and can maintain data security while building AI applications. This project supports Docker, Python and Node.js, making it suitable for developers to develop personalized AI experiences. OpenMemory is especially suitable for users who want to use AI without revealing personal information.

grimly.ai

grimly.ai is a product designed to protect AI agents from jailbreaking, injection attacks, and abuse. It is tailored for developers, security teams and enterprise AI adopters to provide real-time protection.

parakeet-tdt-0.6b-v2

parakeet-tdt-0.6b-v2 is a 600-million-parameter automatic speech recognition (ASR) model designed for high-quality English transcription, with accurate timestamp prediction and automatic punctuation and capitalization. The model is based on the FastConformer architecture and can efficiently process audio clips up to 24 minutes long, making it suitable for developers, researchers, and applications in various industries.

mcpscan.ai

mcpscan.ai is a security scanning tool focused on Model Context Protocol (MCP) servers. It detects security vulnerabilities in MCP servers and helps secure the interaction between large language models (LLMs) and external tools. The product is designed to help developers identify and remediate potential security risks to protect sensitive data and systems from attacks. The core value of mcpscan.ai lies in security scanning built specifically for MCP implementations, providing real-time monitoring and detailed vulnerability analysis to support secure deployment.

MCP Security Checklist

The MCP Security Checklist is compiled and maintained by the SlowMist team to help developers identify and mitigate security risks during MCP implementation. With the rapid development of AI tools based on MCP standards, security issues have become increasingly important. This checklist provides detailed security guidance, covering the security requirements of MCP servers, clients, and multiple scenarios to protect user privacy and improve the stability and controllability of the overall system.

MCP Gateway

MCP Gateway is an advanced mediation solution for managing and enhancing Model Context Protocol (MCP) servers. Acting as an intermediary between large language models (LLMs) and other MCP servers, it provides configuration management, request/response interception, and a unified interface, which help protect sensitive information and keep AI services safe and efficient.

Arthur Engine

Arthur Engine is a tool designed to monitor and govern AI/ML workloads, leveraging popular open source technologies and frameworks. The enterprise version of the product offers better performance and additional features such as custom enterprise-grade safeguards and metrics designed to maximize the potential of AI for organizations. It can effectively evaluate and optimize models to ensure data security and compliance.

EasyControl Ghibli

EasyControl Ghibli is a newly released model based on the Hugging Face platform designed to simplify controlling and managing various artificial intelligence tasks. The model combines advanced technology with a user-friendly interface, allowing users to interact with the AI in a more intuitive way. Its main advantages are its ease of use and powerful functions, making it suitable for users from different backgrounds, whether beginners or professionals.

Mistral Small 3.1

Mistral-Small-3.1-24B-Base-2503 is an advanced open source model with 24 billion parameters that supports multiple languages and long-context processing and is suitable for text and vision tasks. It is the base model of Mistral Small 3.1, has strong multimodal capabilities, and is suited to enterprise needs.

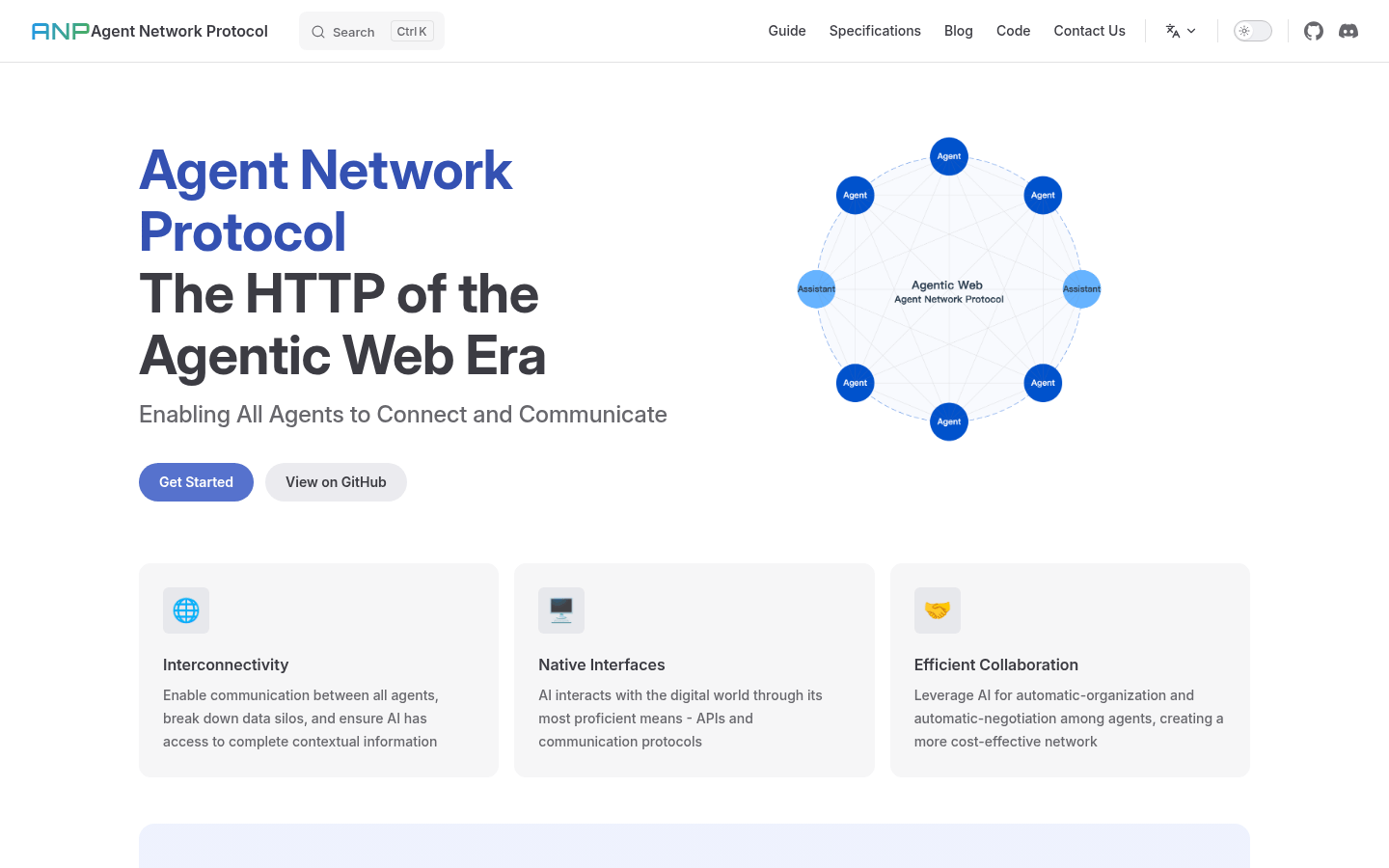

Agent Network Protocol

Agent Network Protocol (ANP) aims to define how intelligent agents connect and communicate with each other. It ensures data security and privacy protection through decentralized identity authentication and end-to-end encrypted communication. Its dynamic protocol negotiation function can automatically organize agent networks to achieve efficient collaboration. The goal of ANP is to break down data silos and enable AI to access complete contextual information, thus promoting the era of intelligent agents. This technology has the advantages of openness, security and efficiency, and is suitable for a variety of scenarios that require intelligent agent collaboration.

AI Infra Guard

AI Infra Guard is an AI infrastructure security assessment tool developed by Tencent. It focuses on discovering and detecting potential security risks in AI systems, supports 28 types of AI framework fingerprint recognition, and covers more than 200 security vulnerability databases. The tool is lightweight and easy to use, requires no complex configuration, and offers flexible matching syntax and cross-platform support. It provides an efficient way to assess the security of AI infrastructure and helps enterprises and developers protect their AI systems from security threats.

EPLB

Expert Parallelism Load Balancer (EPLB) is a load balancing algorithm for expert parallelism (EP) in deep learning. It balances load across GPUs by using a redundant-experts strategy together with a heuristic packing algorithm, and it uses group-limited expert routing to reduce inter-node data traffic. The algorithm is valuable for large-scale distributed training, where it can improve resource utilization and training efficiency.

DualPipe

DualPipe is a bidirectional pipeline parallelism algorithm developed by the DeepSeek-AI team. It significantly reduces pipeline bubbles and improves training efficiency by overlapping computation and communication. It performs well in large-scale distributed training and is especially suitable for deep learning tasks that require efficient parallelization. DualPipe is built on PyTorch and is easy to integrate and extend, making it suitable for developers and researchers who need high-performance computing.