DeepSeek-R1-Distill-Qwen-32B

DeepSeek-R1-Distill-Qwen-32B is a high-performance open source language model suitable for a variety of text generation tasks.

Product Details

DeepSeek-R1-Distill-Qwen-32B is a language model released by the DeepSeek team. It is built on the Qwen2.5-32B base model and fine-tuned on reasoning samples generated by DeepSeek-R1, a process DeepSeek calls distillation. The model scores well on several benchmarks, especially math, coding, and reasoning tasks. Its main advantages are strong reasoning, multilingual support, and openly released weights, which make it easy for researchers and developers to build on. It suits scenarios that need high-quality text generation, such as intelligent customer service, content creation, and code assistance.
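
The following is a minimal sketch of how an application might call the model and post-process its output. It assumes an OpenAI-compatible chat endpoint, such as one served locally by an inference server, and the Hugging Face model ID `deepseek-ai/DeepSeek-R1-Distill-Qwen-32B`. R1-style models write their reasoning between `<think>` and `</think>` tags before giving the final answer. The helper names `build_request` and `split_reasoning` are hypothetical. The sampling values (temperature 0.6, top-p 0.95, no system prompt) follow DeepSeek's published usage recommendations as we understand them.

```python
import re
from typing import Dict, Tuple

MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-32B"  # Hugging Face model ID
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_request(question: str) -> Dict:
    """Build an OpenAI-style chat request body (the endpoint format is an assumption)."""
    return {
        "model": MODEL_ID,
        # No system prompt: all instructions go in the user turn.
        "messages": [{"role": "user", "content": question}],
        "temperature": 0.6,  # suggested range is roughly 0.5 to 0.7
        "top_p": 0.95,
    }


def split_reasoning(text: str) -> Tuple[str, str]:
    """Split a completion into (reasoning, final answer)."""
    match = THINK_BLOCK.search(text)
    if match:
        return match.group(1).strip(), text[match.end():].strip()
    # If the chat template already inserted the opening <think> tag,
    # the completion may contain only the closing tag.
    reasoning, sep, answer = text.partition("</think>")
    if sep:
        return reasoning.strip(), answer.strip()
    return "", text.strip()  # no reasoning block found


if __name__ == "__main__":
    sample = "<think>\n2 + 2 equals 4.\n</think>\n\nThe answer is 4."
    print(split_reasoning(sample))
```

Separating the two parts lets an application show end users only the final answer while keeping the reasoning for logging or debugging.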

Target Users

This model suits enterprises and developers who need high-quality text generation, especially for intelligent customer service, content creation, and code assistance. Because its weights are openly released, it is also a good choice for researchers and developers who want to build on it.

Examples

Powering intelligent customer service systems that give users a natural, fluent conversational experience.

Helping content creators quickly draft high-quality articles, stories, and creative copy.

Helping developers generate and optimize code so they can work more efficiently.

Related Recommendations

Clacky

ClackyAI is an AI coding tool that uses AI agents to drive coding and automate the workflow from issue to pull request (PR). It aims to raise development efficiency and code quality while minimizing manual intervention, giving development teams higher productivity and a smoother collaboration experience.

VibeScan

VibeScan lets users upload code, detects problems, and fixes them with one click. Its main advantages are better code security and quality, performance checks, and pre-launch checks of the requirements a project must meet before going live.

LightLayer

LightLayer is an AI code review platform that, according to its makers, makes code review five times faster through natural voice conversation. It helps users review code efficiently and offers intelligent comments and suggestions.

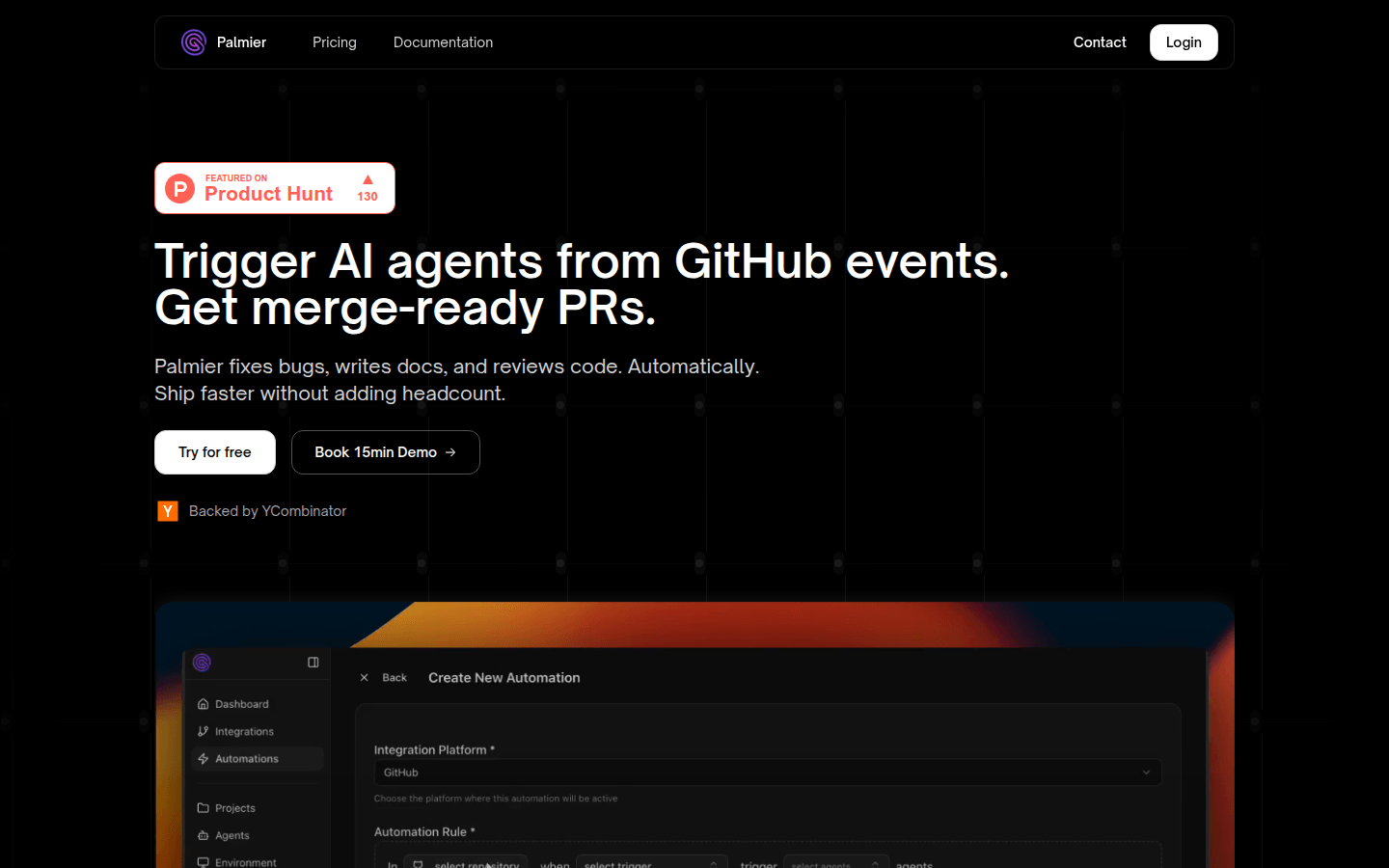

Palmier

Palmier is an autonomous AI software engineering assistant that can work on several tasks at once, including writing features, fixing bugs, and speeding up development. Its key benefits are intelligent code generation and review, which help developers be more productive.

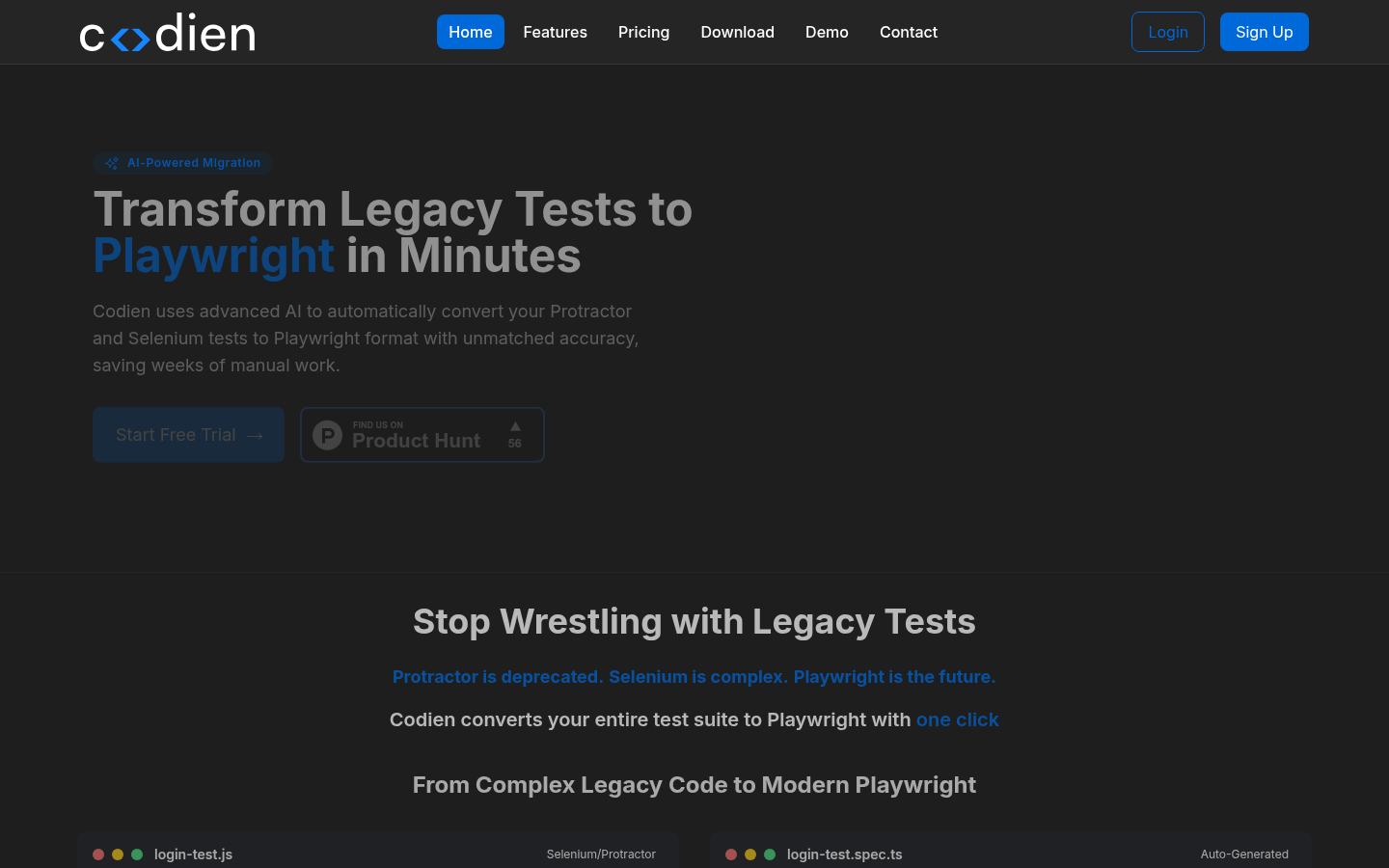

Codien

Codien is an AI-powered platform that automatically converts Protractor and Selenium tests to Playwright, saving substantial manual effort. It uses AI to help users migrate their tests quickly and accurately.

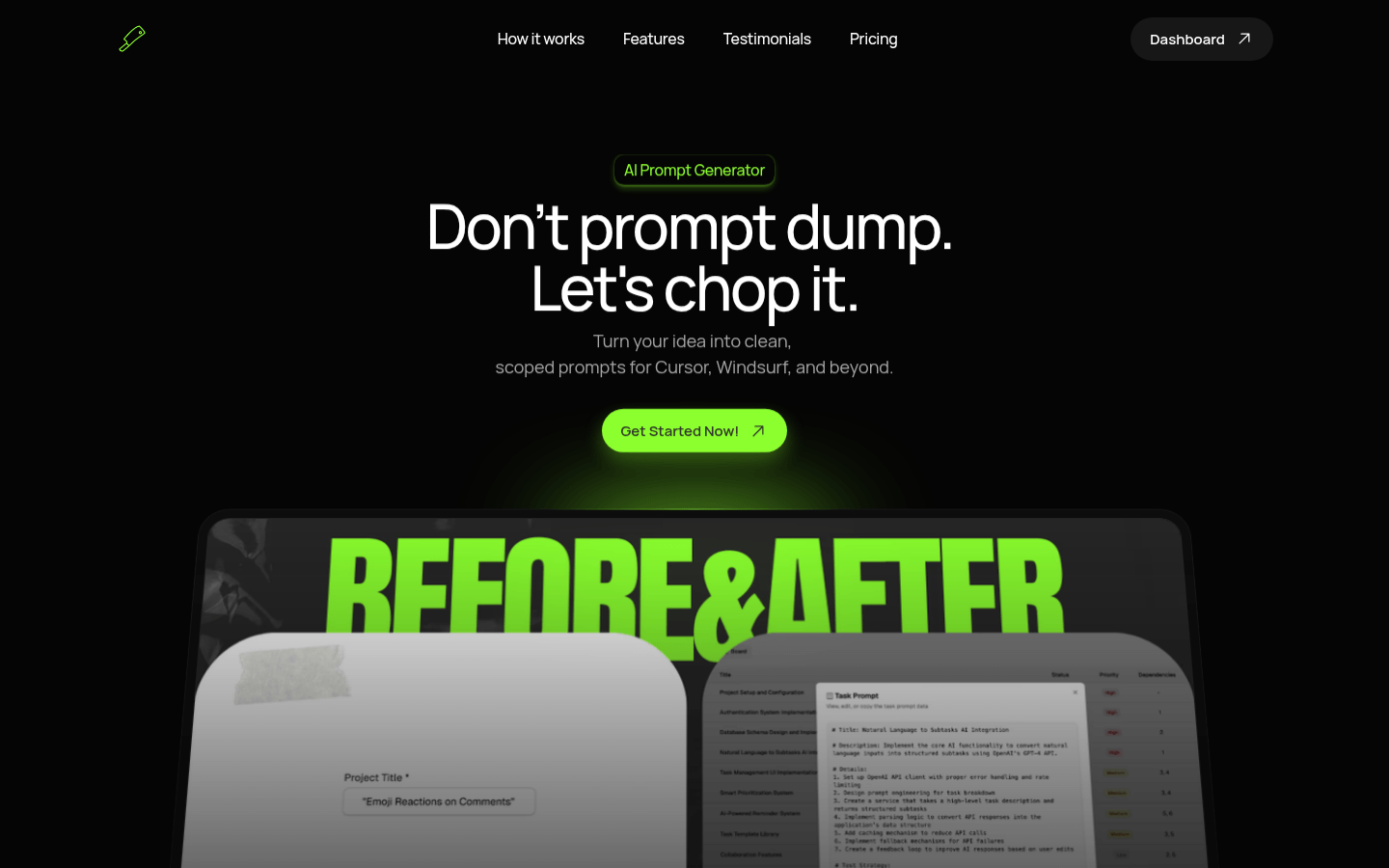

ChopTask

ChopTask is an automation tool that breaks projects down into development tasks and assigns each one a priority, a complexity score, its dependencies, and a prompt for an AI code editor. It helps users quickly turn ideas into concrete, actionable coding tasks.

Mendel Lab

Mendel uses AI to optimize workflows, automate code reviews, track team performance, and improve deployment efficiency, giving developers a faster and more secure way to ship code.

Data Science Agent in Colab

Data Science Agent in Colab is a Gemini-based tool from Google designed to simplify data science workflows. From a natural-language description, it generates a complete Colab notebook covering tasks such as data import, analysis, and visualization. Its main advantages are time savings, higher efficiency, and generated code that can be modified and shared. It is aimed at data scientists, researchers, and developers, especially those who want to get insights from their data quickly. The tool is currently free for eligible users.

Claude 3.7 Sonnet

Claude 3.7 Sonnet is Anthropic's latest hybrid reasoning model. It can switch between near-instant responses and extended, step-by-step thinking. It excels at programming and front-end development, and the API gives fine-grained control over how long the model thinks by setting a thinking token budget. Beyond stronger code generation and debugging, it handles complex tasks better, which makes it suitable for enterprise applications. Pricing matches its predecessor: $3 per million input tokens and $15 per million output tokens.
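
With the quoted prices, estimating cost is simple arithmetic. The sketch below assumes that extended-thinking tokens are billed at the output rate, which is how Anthropic documents it. It also shows the shape of a Messages API request body that enables thinking with a token budget. The helper names are hypothetical, and the model ID `claude-3-7-sonnet-20250219` should be checked against Anthropic's current model list.

```python
INPUT_USD_PER_MTOK = 3.0    # $3 per million input tokens
OUTPUT_USD_PER_MTOK = 15.0  # $15 per million output tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int, thinking_tokens: int = 0) -> float:
    """Estimate the cost of one request; thinking tokens are billed as output."""
    billed_output = output_tokens + thinking_tokens
    return (input_tokens * INPUT_USD_PER_MTOK + billed_output * OUTPUT_USD_PER_MTOK) / 1_000_000


def thinking_request(prompt: str, budget_tokens: int = 4000, max_tokens: int = 8000) -> dict:
    """Request body for a Messages API call with extended thinking enabled."""
    if budget_tokens >= max_tokens:
        # The thinking budget has to fit inside the overall output limit.
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


# 10,000 input tokens + 2,000 answer tokens + 4,000 thinking tokens:
# 10,000 * $3 / 1M + 6,000 * $15 / 1M = $0.03 + $0.09 = $0.12
print(f"${estimate_cost_usd(10_000, 2_000, 4_000):.2f}")
```

A larger thinking budget can improve answers on hard problems, but every thinking token is billed at the output rate, so the budget is also a cost control.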

Moonlight-16B-A3B

Moonlight-16B-A3B is a Mixture-of-Experts language model from Moonshot AI. It has 16B total parameters, about 3B of which are activated per token, and was trained with the Muon optimizer, which improves training efficiency. Its main advantages are an efficient optimizer design, fewer training FLOPs for comparable performance, and strong benchmark results. It suits workloads such as natural language processing, code generation, and multilingual dialogue, and its open-source implementation and pretrained checkpoints give researchers and developers a solid base to build on.
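
As described in its public reference implementation, Muon's core idea is to replace the momentum update of each 2-D weight matrix with an approximately orthogonalized version, computed by a few Newton-Schulz iterations. The NumPy sketch below illustrates that idea. The iteration coefficients come from the public Muon code. The `0.2 * sqrt(max(rows, cols))` scaling with decoupled weight decay follows Moonshot's description of how Moonlight adapts Muon to match AdamW's update size. This is not Moonshot's code: it uses plain momentum instead of the Nesterov variant, and the hyperparameter defaults are placeholders, not Moonlight's training settings.

```python
import numpy as np


def newton_schulz_orthogonalize(g: np.ndarray, steps: int = 5, eps: float = 1e-7) -> np.ndarray:
    """Approximate U @ V.T from the SVD of g with a quintic Newton-Schulz iteration."""
    if g.ndim != 2:
        raise ValueError("Muon-style updates apply to 2-D weight matrices")
    a, b, c = 3.4445, -4.7750, 2.0315  # coefficients from the public Muon reference code
    x = g / (np.linalg.norm(g) + eps)  # Frobenius scaling puts singular values in [0, 1]
    transposed = x.shape[0] > x.shape[1]
    if transposed:  # work on the wide orientation so the Gram matrix is the smaller one
        x = x.T
    for _ in range(steps):
        gram = x @ x.T
        # Each singular value s is mapped to a*s + b*s**3 + c*s**5, pushing it toward ~1.
        x = a * x + (b * gram + c * gram @ gram) @ x
    return x.T if transposed else x


def muon_step(w, grad, momentum, lr=0.02, beta=0.95, weight_decay=0.1):
    """One Muon-style update for a 2-D weight matrix (illustrative sketch)."""
    momentum = beta * momentum + grad
    update = newton_schulz_orthogonalize(momentum)
    # Rescale the orthogonalized update so its RMS is roughly comparable to AdamW's.
    scale = 0.2 * np.sqrt(max(w.shape))
    w = w - lr * (scale * update + weight_decay * w)  # decoupled weight decay
    return w, momentum


if __name__ == "__main__":
    g = np.diag([3.0, 2.0, 1.0])
    print(np.linalg.svd(newton_schulz_orthogonalize(g), compute_uv=False))
```

The iteration does not drive singular values to exactly 1. It leaves them in a band around 1, which is cheap to compute on accelerators and, according to Muon's authors, good enough for optimization.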

Gemini 2.0 Pro

Gemini 2.0 Pro is one of the most advanced AI models from Google DeepMind, designed for complex tasks and coding. It excels at code generation, understanding complex instructions, and multimodal interaction, accepting text, image, video, and audio input. It supports tool calling, such as Google Search and code execution, and has a context window of up to 2 million tokens, which makes it suitable for professionals and developers who need high-performance AI.

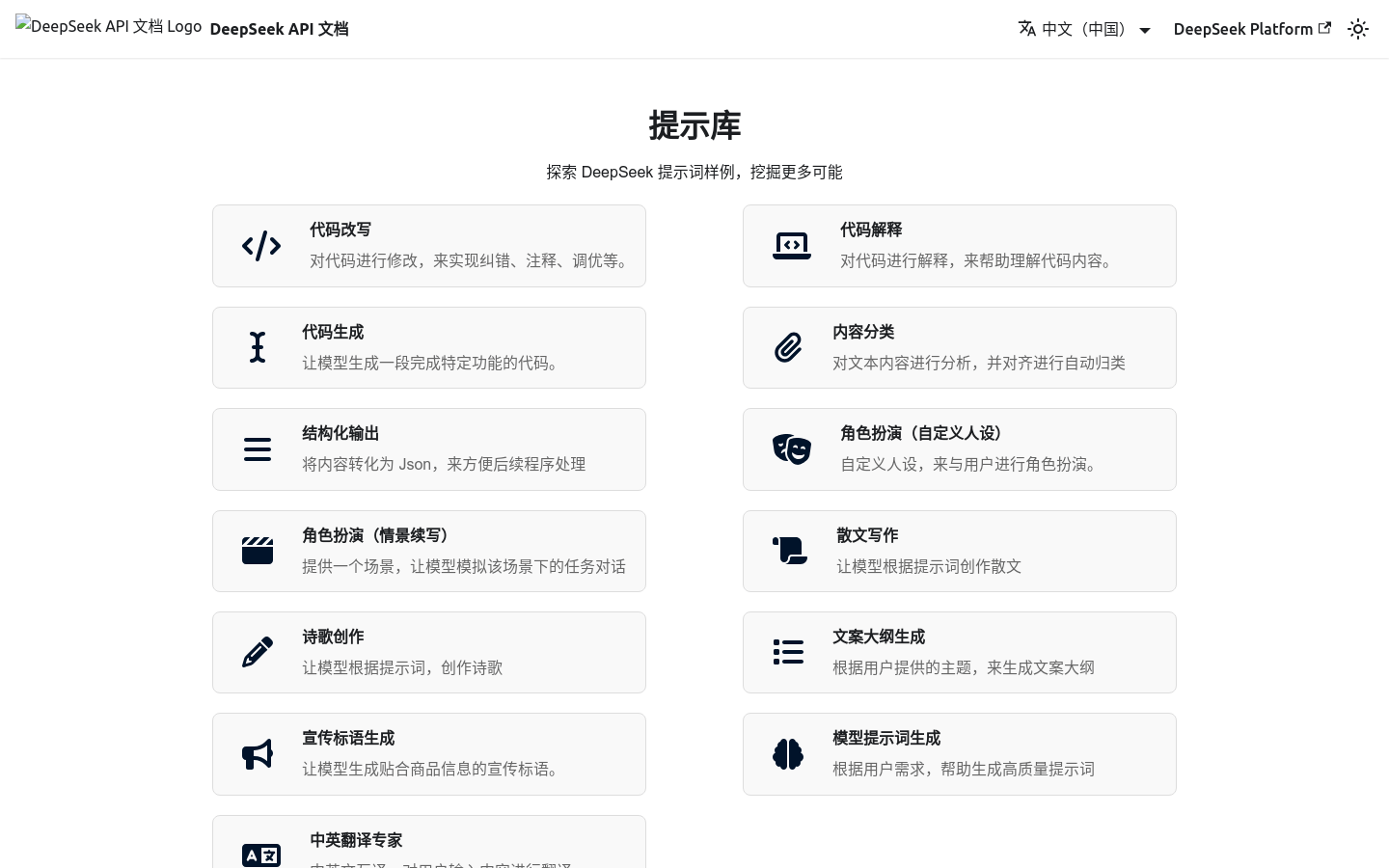

DeepSeek prompt library

DeepSeek prompt library is a collection of sample prompts that helps users quickly get started with code generation, rewriting, explanation, and similar tasks. It also covers scenarios such as content classification, structured output, and copywriting. Its main advantages are that it is efficient, flexible, and easy to use. The library serves developers, content creators, and anyone who needs to solve problems quickly. The product may require payment; check the official platform for current pricing.

DeepSeek-R1-Distill-Llama-8B

DeepSeek-R1-Distill-Llama-8B is a language model from the DeepSeek team, built by fine-tuning Llama-3.1-8B on reasoning samples generated by DeepSeek-R1 (distillation). It performs well on reasoning, code generation, and multilingual tasks. Its parent work, DeepSeek-R1-Zero, was presented as the first open research to show that a language model's reasoning can be improved through pure reinforcement learning, without supervised fine-tuning as a preliminary step. The DeepSeek-R1 series is released under the MIT License, which permits commercial use, modifications, and derivative works. Because this model is derived from Llama 3.1, it is also subject to the Llama 3.1 license. It suits both academic research and commercial applications.

ReaderLM v2

ReaderLM v2 is a 1.5B-parameter small language model from Jina AI, built specifically for HTML-to-Markdown conversion and HTML-to-JSON extraction, with high accuracy. It supports 29 languages and handles combined input and output lengths of up to 512K tokens. It uses a new training paradigm and higher-quality training data. Compared with its predecessor, it handles long content much better and uses Markdown syntax more skillfully, including complex elements. ReaderLM v2 also adds direct HTML-to-JSON generation: given a JSON schema, it extracts the requested information straight from the raw HTML, with no intermediate Markdown step.

Repo Prompt

Repo Prompt is a native macOS app that removes the friction of using the most capable language models with local files. Users select files and folders as context for a prompt, and saved prompts and repository maps guide the AI's output, whether the goal is to iterate on the files or to understand how they work. Key benefits include higher development efficiency, precise control over context, and the ability to review the changes the AI makes. It is aimed at developers and other technical users who want to streamline code and file workflows with current AI models. The product currently offers a free trial; specific pricing is not listed on the page.

Claude.ai analysis tool

Claude.ai's analysis tool is a built-in feature that lets Claude write and run JavaScript code to process data, perform analysis, and produce real-time insights. Besides making answers more accurate, it extends what teams can do: marketing, sales, product management, engineering, and finance teams can all benefit.