Google CameraTrapAI

AI model trained by Google to classify species in wildlife camera trap images.

Product Details

Google CameraTrapAI is a collection of AI models for classifying wildlife images. It identifies animal species in images captured by motion-triggered wildlife cameras (camera traps). This matters for wildlife monitoring and conservation, where researchers and conservation workers need to process large volumes of image data efficiently. The models are based on deep learning and are reported to classify species with high accuracy.

Target Users

CameraTrapAI is aimed at wildlife conservation workers, researchers, ecologists, and other individuals and institutions interested in wildlife monitoring. It helps them identify and classify wildlife images quickly and accurately, which supports the study of animal distribution, behavior and ecology, and in turn conservation efforts.

Examples

Wildlife conservation groups use CameraTrapAI to analyze camera trap images and quickly detect the presence of rare animals.

Researchers use the model to classify wildlife images from long-term monitoring in order to study animal population dynamics.

Eco-tourism projects use CameraTrapAI to display captured wildlife images and provide species information to tourists.
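As a rough sketch of how classification output might feed the use cases above, the snippet below tallies images per species after discarding low-confidence predictions. The record layout, the species labels, the sample values, and the `tally_species` helper are all assumptions made for illustration; they are not CameraTrapAI's actual output format or API.

```python
from collections import Counter

# Hypothetical per-image predictions: (image file, predicted species, confidence).
# These records are invented for illustration; they are not CameraTrapAI output.
predictions = [
    ("IMG_0001.jpg", "red fox", 0.97),
    ("IMG_0002.jpg", "red fox", 0.42),
    ("IMG_0003.jpg", "roe deer", 0.88),
    ("IMG_0004.jpg", "roe deer", 0.91),
    ("IMG_0005.jpg", "eurasian lynx", 0.76),
]


def tally_species(records, min_confidence=0.5):
    """Count images per species, keeping only predictions at or above the threshold."""
    counts = Counter()
    for _image, species, confidence in records:
        if confidence >= min_confidence:
            counts[species] += 1
    return counts


counts = tally_species(predictions)
for species, n in counts.most_common():
    print(f"{species}: {n}")
```

The confidence threshold is a tunable assumption: a lower value keeps more detections of rare species at the cost of more misclassifications to review by hand.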

Related Recommendations

Discover more high-quality AI tools similar to this one.

SRM

SRM is a spatial reasoning framework built on denoising generative models for reasoning over sets of continuous variables. It assigns an independent noise level to each variable and progressively infers a continuous representation of every unobserved variable. The approach handles complex distributions well and can reduce hallucinations during generation. SRM also shows for the first time that a denoising network can predict the generation order, which significantly improves accuracy on a specific reasoning task. The framework was developed at the Max Planck Institute for Informatics in Germany to advance research on spatial reasoning and generative models.

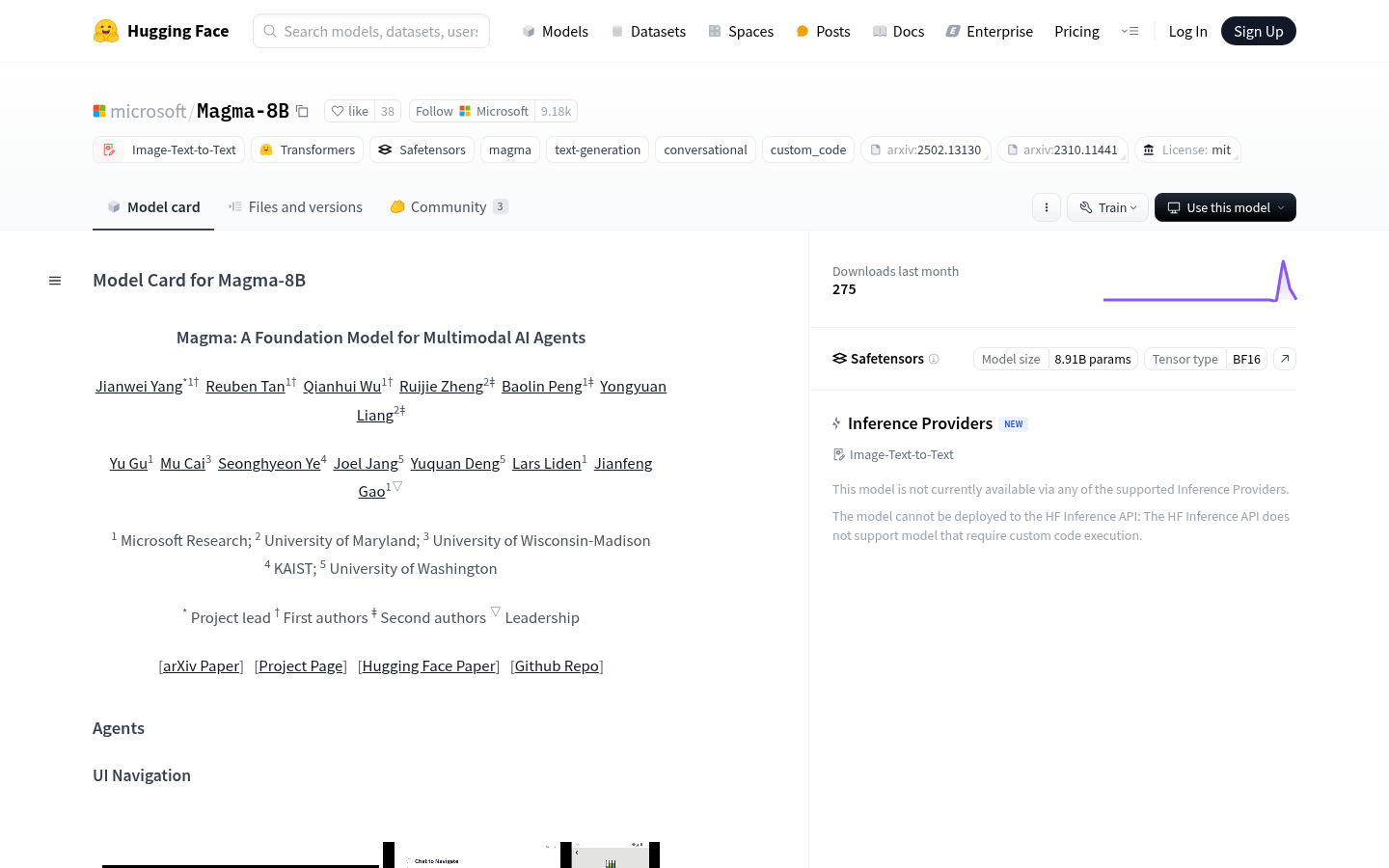

Magma-8B

Magma-8B is a multimodal foundation model developed by Microsoft for research on multimodal AI agents. It takes text and image inputs, generates text output, and offers visual planning and agent capabilities. The model uses Meta LLaMA-3 as its language backbone and CLIP-ConvNeXt-XXLarge as its vision encoder, and it can learn spatiotemporal relationships from unlabeled video data, which gives it strong generalization and multi-task adaptability. Magma-8B performs well on multimodal tasks, especially spatial understanding and reasoning, and supports research on complex interactions in virtual and real environments.

ZeroBench

ZeroBench is a benchmark for evaluating the visual understanding of large multimodal models (LMMs). It challenges the limits of current models with 100 carefully crafted, rigorously vetted questions, together with 334 sub-questions. The benchmark aims to fill gaps in existing visual benchmarks with a harder, higher-quality evaluation. Its main strengths are that it is difficult, lightweight, diverse and high quality, which lets it separate models by performance. The sub-question evaluations also help researchers understand a model's reasoning in more detail.

MILS

MILS is an open-source project from Facebook Research that demonstrates how large language models (LLMs) can handle visual and auditory tasks without any training. It generates descriptions of images, audio and video automatically by combining pre-trained models with optimization algorithms. The result offers a new direction for multimodal AI and shows the potential of LLMs in cross-modal tasks. MILS is aimed mainly at researchers and developers exploring multimodal applications. The project is free and open source, and is intended to support academic research and technology development.

InternVL2_5-26B-MPO

InternVL2_5-26B-MPO is a multimodal large language model (MLLM) that builds on InternVL2.5 and improves its performance through Mixed Preference Optimization (MPO). It processes multimodal data, including images and text, and is used for tasks such as image captioning and visual question answering. Its strength is understanding image content and generating text closely tied to it. The model performs strongly on multimodal tasks and has evaluation results on the OpenCompass Leaderboard. It gives researchers and developers a tool for exploring multimodal AI.

DeepSeek-VL2-Tiny

DeepSeek-VL2-Tiny is the smallest model in DeepSeek-VL2, a series of large Mixture-of-Experts (MoE) vision-language models that improves significantly on its predecessor, DeepSeek-VL. The series performs well on tasks such as visual question answering, optical character recognition, document/table/chart understanding, and visual grounding. It has three variants: DeepSeek-VL2-Tiny, DeepSeek-VL2-Small and DeepSeek-VL2, with 1.0B, 2.8B and 4.5B activated parameters respectively. DeepSeek-VL2 achieves competitive or state-of-the-art performance with similar or fewer activated parameters than existing open-source dense and MoE-based models.

DeepSeek-VL2

DeepSeek-VL2 is a series of large Mixture-of-Experts (MoE) vision-language models that improves significantly on its predecessor, DeepSeek-VL. The series performs well on tasks such as visual question answering, optical character recognition, document/table/chart understanding, and visual grounding. It has three variants: DeepSeek-VL2-Tiny, DeepSeek-VL2-Small and DeepSeek-VL2, with 1.0B, 2.8B and 4.5B activated parameters respectively. DeepSeek-VL2 achieves competitive or state-of-the-art performance with similar or fewer activated parameters than existing open-source dense and MoE-based models.

Aquila-VL-2B-llava-qwen

Aquila-VL-2B is a vision-language model (VLM) trained with the LLaVA-OneVision framework. It uses Qwen2.5-1.5B-instruct as the language model (LLM) and siglip-so400m-patch14-384 as the vision tower. The model is trained on the self-built Infinity-MM dataset of approximately 40 million image-text pairs, which combines open-source data collected from the internet with synthetic instruction data generated by an open-source VLM. Aquila-VL-2B is released as open source to advance multimodal performance, particularly the joint processing of images and text.

Sparsh

Sparsh is a family of general-purpose tactile representations trained with self-supervised algorithms such as MAE, DINO and JEPA. It produces useful representations for the DIGIT, Gelsight'17 and Gelsight Mini sensors and significantly outperforms end-to-end models on the downstream tasks proposed in TacBench. It also supports data-efficient training for new downstream tasks. The Sparsh project includes PyTorch implementations, pretrained models, and the datasets released with Sparsh.

DreamClear

DreamClear is a deep learning model for high-capacity real-world image restoration that offers an efficient super-resolution and restoration solution built on privacy-safe data curation. It was presented at NeurIPS 2024. Its main advantages are high capacity, privacy protection, and efficiency in practical use. DreamClear builds on earlier work, and several pre-trained models and the code are available to researchers and developers. It is free and targets image processing needs in research and industry.

DocLayout-YOLO

DocLayout-YOLO is a deep learning model for document layout analysis that improves both accuracy and speed through diverse synthetic data and global-to-local adaptive perception. Its Mesh-candidate BestFit algorithm generates the large, diverse DocSynth-300K dataset, which significantly improves fine-tuning performance across document types. The model also introduces a global-to-local controllable receptive field module to better handle the multi-scale variation of document elements. DocLayout-YOLO performs well on downstream datasets covering a variety of document types, with clear advantages in both speed and accuracy.

VisRAG

VisRAG is a Retrieval-Augmented Generation (RAG) pipeline built on vision-language models (VLMs). Unlike traditional text-based RAG, VisRAG uses a VLM to embed each document directly as an image, then retrieves those images to augment the VLM's generation. This preserves as much of the original document's information as possible and avoids the information loss introduced by parsing. Applied to multimodal documents, VisRAG shows strong potential for information retrieval and retrieval-augmented text generation.
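The retrieval step of an image-based RAG pipeline can be sketched with plain cosine similarity. In the minimal example below, the three-dimensional vectors stand in for embeddings that a VLM would produce for page images and for the query; the file names, the vectors, and the `retrieve` helper are hypothetical and are not taken from the VisRAG code base.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy stand-ins for VLM embeddings of document page images (assumed values).
page_embeddings = {
    "report_p1.png": [0.9, 0.1, 0.0],
    "report_p2.png": [0.1, 0.8, 0.3],
    "invoice_p1.png": [0.0, 0.2, 0.9],
}


def retrieve(query_embedding, pages, top_k=2):
    """Return the names of the top_k pages most similar to the query."""
    ranked = sorted(
        pages,
        key=lambda name: cosine(query_embedding, pages[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Toy stand-in for the embedding of a text query (assumed values).
query_embedding = [0.2, 0.9, 0.2]
top_pages = retrieve(query_embedding, page_embeddings)
print(top_pages)
```

In a full pipeline, the retrieved page images themselves, rather than parsed text, would be passed to the generating VLM along with the question.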

Long-LRM

Long-LRM is a 3D Gaussian reconstruction model that reconstructs large scenes from a sequence of input images. It processes 32 source images at 960x540 resolution in 1.3 seconds on a single A100 80G GPU. It combines recent Mamba2 blocks with traditional transformer blocks, and uses token merging and Gaussian pruning to improve efficiency while preserving quality. Unlike earlier feed-forward models, which reconstruct only a small part of a scene, Long-LRM reconstructs the entire scene at once. On large-scale scene datasets such as DL3DV-140 and Tanks and Temples, Long-LRM performs comparably to optimization-based methods while being two orders of magnitude more efficient.
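To put the quoted figures in perspective, the short calculation below converts 32 images at 960x540 in 1.3 seconds into input throughput. It uses only the numbers stated above; the variable names are illustrative, and the result describes input volume per second of reconstruction time, not any measured benchmark.

```python
# Figures quoted in the Long-LRM description above.
num_images = 32
width, height = 960, 540
seconds = 1.3

# Total input pixels consumed by one reconstruction.
total_pixels = num_images * width * height

# Input throughput implied by the quoted runtime.
images_per_second = num_images / seconds
megapixels_per_second = total_pixels / seconds / 1e6

print(f"total input pixels: {total_pixels:,}")
print(f"images per second: {images_per_second:.1f}")
print(f"megapixels per second: {megapixels_per_second:.2f}")
```

That works out to roughly 16.6 million input pixels per scene, or about 24.6 images per second.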

Proofig AI

Proofig AI is an automated, AI-based image proofing tool built for scientific publishing and used by researchers, publishers and research institutions. It detects image reuse and manipulation, including duplication between images and within a single image, and it handles altered copies produced by cloning, rotation, flipping, scaling and similar changes. Pricing is flexible, and the product targets the scientific research market with efficient, accurate image proofing.

HyFluid

HyFluid is a neural method for inferring fluid density and velocity fields from sparse multi-view videos. Unlike existing neural dynamic reconstruction methods, HyFluid accurately estimates density and recovers the underlying velocity, overcoming the inherent visual ambiguity of fluid velocity. It infers a physically plausible velocity field by introducing a set of physics-based losses. To handle the turbulent nature of fluid velocity, it uses a hybrid neural velocity representation: a base neural velocity field that captures most of the irrotational energy, plus vortex particle velocities that model the remaining turbulent velocity. The method supports a range of learning and reconstruction applications for 3D incompressible flows, including fluid re-simulation and editing, future prediction, and neural dynamic scene synthesis.

MyLens

MyLens is an AI-powered timeline product that helps users see where historical events intersect. Users can create, explore and connect stories to trace the links between different histories.