Masterpiece Studio

Make 3D design easy - create, edit, publish

Product Details

Masterpiece Studio is billed as the first complete VR 3D creative suite for independent creators. It uses generative AI to help users generate, edit, and publish 3D works, and it provides tools for quickly building virtual reality experiences. Its interface is designed to be intuitive and covers common 3D creation tasks, including modeling, texturing, and animation. Users can edit interactively in a VR headset and export their work to various platforms for display and publication. Masterpiece Studio also provides a material library and templates to help new users get started.

Main Features

Target Users

Masterpiece Studio suits independent creators, designers, and artists who want to create 3D works quickly and present them to an audience. Typical scenarios include game development, virtual reality experiences, and advertising design.

Related Recommendations

Discover more similar quality AI tools

3D Mesh Generation

3D Mesh Generation is an online 3D model generation tool from Anything World. It uses AI to generate 3D models from a short text description or an uploaded image, which simplifies model creation and lets users without professional modeling skills produce 3D content. Anything World positions its platform as a provider of 3D content creation solutions, and 3D Mesh Generation is part of that product line. Pricing plans are shown after registration.

MakerLab

MakerLab is an online platform that provides a variety of 3D model design tools, including a vase generator and a sign customizer. Users can quickly create personalized 3D models to suit their needs. The platform lets users start from templates and also offers an experimental area where they can try newer technologies such as an AI scanner. MakerLab is operated by Bambu Lab and aims to give users a space to create and share ideas freely. The platform currently offers both free and paid services.

3DTopia-XL

3DTopia-XL is a high-quality 3D asset generation model built on the Diffusion Transformer (DiT) and a novel 3D representation, PrimX. PrimX encodes 3D shape, texture, and material into a compact N x D tensor. Each token is a volumetric primitive anchored on the shape surface, with a voxelized payload that encodes the signed distance field (SDF), RGB, and material. Generating a 3D PBR asset from text or image input takes only 5 seconds, and the result is suitable for graphics pipelines.
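As a rough illustration of what a signed distance field stores, the sketch below evaluates the SDF of a single sphere. It is not PrimX and does not reflect the 3DTopia-XL code; the function name `sphere_sdf` and the sphere itself are assumptions chosen only to show the sign convention (negative inside, zero on the surface, positive outside).

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from `point` to the surface of a sphere.

    Hypothetical helper for illustration: negative inside the sphere,
    zero on the surface, positive outside.
    """
    return math.dist(point, center) - radius


inside = sphere_sdf((0.0, 0.0, 0.0))      # centre of the unit sphere
on_surface = sphere_sdf((1.0, 0.0, 0.0))  # exactly on the surface
outside = sphere_sdf((0.0, 3.0, 4.0))     # 5 units from the centre
```

A voxelized payload, as described above, would store samples of such a function on a small grid rather than a closed-form expression.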

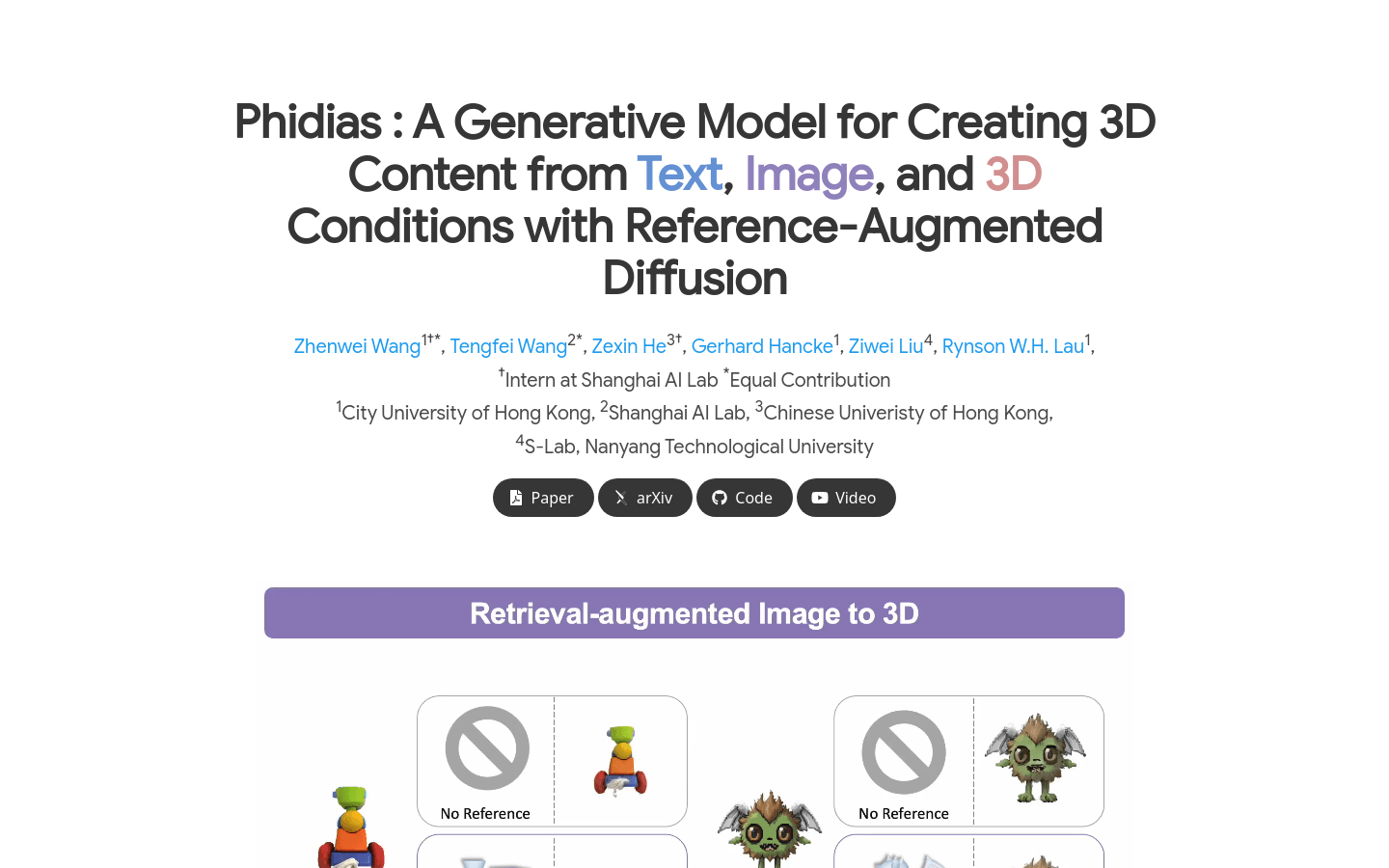

Phidias

Phidias is a generative model that uses diffusion for reference-augmented 3D generation. It produces high-quality 3D assets from image, text, or 3D conditions within seconds. Three components improve generation quality, generalization, and controllability: a Meta-ControlNet that dynamically adjusts conditioning strength, dynamic reference routing, and self-reference augmentation. Phidias provides a unified framework for 3D generation from text, images, and 3D conditions, and supports a variety of applications.

MeshAnything

MeshAnything is a model that uses an autoregressive transformer for artist-grade mesh generation. It converts a 3D asset in any 3D representation into an artist-created mesh (AM) that can be used directly in the 3D industry. The meshes it generates have far fewer faces, which significantly improves storage, rendering, and simulation efficiency while achieving accuracy comparable to previous methods.

MaPa

MaPa is an approach to generating materials for 3D meshes from text descriptions. It represents appearance with segment-wise procedural material graphs, which support high-quality rendering and offer substantial flexibility in editing. By using pre-trained 2D diffusion models, MaPa bridges the gap between text descriptions and material graphs without requiring large amounts of paired data. The method decomposes the shape into segments and uses a segment-controlled diffusion model to synthesize 2D images aligned with the mesh parts. It then initializes the material graph parameters from those images and fine-tunes them through a differentiable rendering module so that the resulting material conforms to the text description. Extensive experiments show that MaPa outperforms existing methods in fidelity, resolution, and editability.

Interactive3D

Interactive3D is a 3D generative model that gives users precise control through interactive design. It adopts a two-stage cascade, with each stage using a different 3D representation, so the user can modify and guide the result at any intermediate step of the generation process. This fine-grained control lets users create high-quality 3D models that meet specific needs.

GRM

GRM is a large-scale reconstruction model that can recover a 3D asset from sparse-view images in about 0.1 seconds and generate one in about 8 seconds. It is a feed-forward, Transformer-based model that efficiently fuses multi-view information to convert input pixels into pixel-aligned Gaussians. These Gaussians are unprojected into a dense set of 3D Gaussians that represents the scene. The Transformer architecture and the use of 3D Gaussians together yield a scalable and efficient reconstruction framework. The authors report extensive experiments showing better reconstruction quality and efficiency than alternative methods. They also demonstrate GRM on generative tasks such as text-to-3D and image-to-3D by combining it with existing multi-view diffusion models.
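The step of lifting per-pixel predictions into 3D can be sketched as follows. This is a minimal illustration under stated assumptions, not GRM's implementation: it assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and a per-pixel depth along the camera z-axis, and the function names are hypothetical. In a Gaussian-based pipeline each lifted point would be the center of one Gaussian and would carry further attributes (scale, rotation, opacity, color) that are omitted here.

```python
def unproject_pixel(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with a given depth to a 3D point in camera space.

    Assumes a pinhole camera model; all parameter names are illustrative.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def unproject_depth_map(depth_map, fx, fy, cx, cy):
    """Return one 3D center per pixel, in row-major order."""
    return [
        unproject_pixel(u, v, d, fx, fy, cx, cy)
        for v, row in enumerate(depth_map)
        for u, d in enumerate(row)
    ]


# A 2 x 2 "image" where every pixel is 2 units from the camera.
centers = unproject_depth_map(
    [[2.0, 2.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5
)
```

Because every input pixel yields one center, the number of 3D primitives grows with the number and resolution of the input views, which is what makes the resulting set dense.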

3D AI Studio

3D AI Studio is an online AI-based tool for generating customized 3D models. It is aimed at designers, developers, and other creative users who need high-quality digital assets. Users can create 3D models with the AI generator and export them in FBX, GLB, or USDZ format. 3D AI Studio advertises high performance, a user-friendly interface, and automatic generation of realistic textures, which can shorten modeling time and reduce costs.

CSM 3D Viewer

CSM 3D Viewer is an online 3D model viewer that allows users to view and interact with 3D models on the web. It supports a variety of 3D file formats and provides basic operations such as rotation and scaling, as well as more advanced viewing functions. CSM 3D Viewer is suitable for designers, engineers and 3D enthusiasts, helping them display and share 3D works more intuitively.

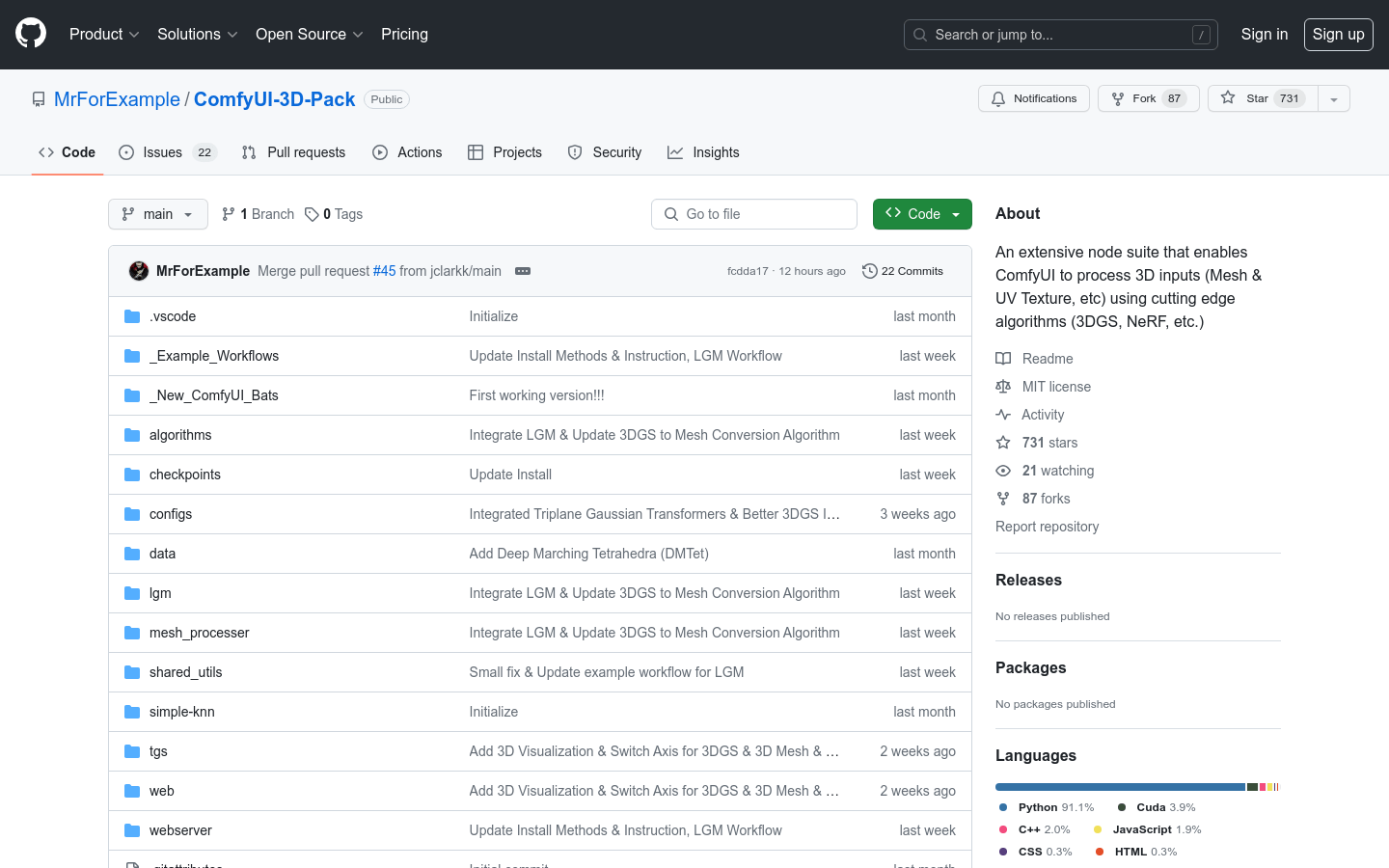

ComfyUI-3D-Pack

ComfyUI-3D-Pack is a collection of 3D processing plug-ins for ComfyUI. It lets ComfyUI process 3D assets (meshes, textures, etc.) and integrates recent 3D reconstruction and rendering algorithms such as 3D Gaussian Splatting, NeRF, and differentiable rendering. It can quickly reconstruct a 3D Gaussian model from a single-view image and convert it into a triangle mesh, and it provides an interactive 3D visualization interface.

ComfyUI3D Pack

ComfyUI-3D-Pack is a 3D processing node plug-in package for ComfyUI. It lets ComfyUI process 3D inputs (meshes, UV textures, etc.) using recent algorithms such as 3D Gaussian Splatting and neural radiance fields (NeRF). With it, users can generate a 3D Gaussian model from a single image and convert that model into a mesh to complete the 3D reconstruction. It also accepts multi-view images as input, so that textures rendered from multiple views can be mapped onto a given 3D mesh. The package is still under development and has not yet been officially released to the ComfyUI plug-in library, but it already supports features such as the Large Multi-View Gaussian Model, Triplane Gaussian Transformers, 3D Gaussian Splatting, depth mesh triangulation, and 3D file loading and saving. It aims to make ComfyUI a capable tool for handling 3D content.

BlockFusion

BlockFusion is a diffusion-based model that generates 3D scenes and can seamlessly integrate new blocks to extend a scene. It is trained on a dataset of 3D blocks randomly cropped from complete 3D scene meshes. Through per-block fitting, every training block is converted into a hybrid neural field: a tri-plane containing geometry features, followed by a multilayer perceptron (MLP) that decodes signed distance values. A variational autoencoder compresses the tri-planes into a latent tri-plane space, where the denoising diffusion process is performed. Applying diffusion to the latent representation enables high-quality and diverse 3D scene generation. To extend a scene during generation, empty blocks are appended so that they overlap the current scene, and the existing latent tri-planes are extrapolated to fill the new blocks. The extrapolation conditions the generation process on feature samples from the overlapping tri-planes during the denoising iterations, which produces semantically and geometrically meaningful transitions that blend with the existing scene. A 2D layout conditioning mechanism controls the placement and arrangement of scene elements. Experimental results show that BlockFusion can generate diverse, geometrically consistent, high-quality large-scale 3D scenes, both indoor and outdoor.
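The hybrid neural field described above, three axis-aligned feature planes (commonly called a tri-plane) plus a decoder that outputs a signed distance, can be sketched in a few lines. This is a simplified illustration, not BlockFusion's code: each plane cell holds a single scalar instead of a feature vector, lookup is nearest-neighbor rather than interpolated, and the MLP is replaced by a hypothetical single linear layer. All function names are assumptions.

```python
def make_plane(res, fill):
    """Build a res x res grid with one scalar feature per cell.

    Real tri-planes store a feature vector per cell; a scalar keeps the sketch short.
    """
    return [[fill for _ in range(res)] for _ in range(res)]


def sample_plane(plane, a, b):
    """Nearest-neighbor lookup for coordinates a, b in [0, 1]."""
    res = len(plane)
    i = min(int(a * res), res - 1)
    j = min(int(b * res), res - 1)
    return plane[i][j]


def triplane_features(planes, point):
    """Project a 3D point onto the XY, XZ and YZ planes and gather one feature from each."""
    x, y, z = point
    return (
        sample_plane(planes["xy"], x, y),
        sample_plane(planes["xz"], x, z),
        sample_plane(planes["yz"], y, z),
    )


def decode_sdf(features, weights=(1.0, 1.0, 1.0), bias=0.0):
    """Stand-in for the MLP decoder: one linear layer mapping features to a signed distance."""
    return sum(w * f for w, f in zip(weights, features)) + bias


planes = {
    "xy": make_plane(4, 0.1),
    "xz": make_plane(4, 0.2),
    "yz": make_plane(4, -0.5),
}
feats = triplane_features(planes, (0.3, 0.9, 1.0))
sdf_value = decode_sdf(feats)  # negative here, i.e. the query point counts as "inside"
```

Storing three 2D planes instead of a full 3D grid is what keeps the representation compact enough to compress and diffuse in a latent space.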

TIP-Editor

TIP-Editor is a 3D scene editing framework that accepts both text and image prompts, together with a 3D bounding box that specifies the editing region, so users can precisely control the appearance and position of the edit. A stepwise 2D personalization strategy helps it learn representations of the existing scene and the reference image, which enables precise appearance control through local editing. TIP-Editor uses explicit and flexible 3D Gaussian splatting as its 3D representation, so edits stay local and the background remains intact. Extensive experiments show that TIP-Editor edits accurately within the specified bounding box according to the text and image prompts, and that its editing quality and prompt alignment exceed the baselines both qualitatively and quantitatively.

3DTopia

3DTopia is a two-stage text-to-3D generative model. The first stage uses a diffusion model to quickly generate candidates. The second stage optimizes the assets selected in the first stage. This model enables high-quality text-to-3D generation in under 5 minutes.

Make-A-Shape

Make-A-Shape is a 3D generative model designed for efficient training at large scale, capable of using 10 million publicly available shapes. It introduces a wavelet-tree representation that compactly encodes a shape through a subband coefficient filtering scheme, and then lays the representation out in a low-resolution grid through a subband coefficient packing scheme so that a diffusion model can generate it. A subband adaptive training strategy enables the model to learn to generate both coarse and fine wavelet coefficients effectively. The framework is further extended with additional input conditions, so it can generate shapes from various modalities such as single-view or multi-view images, point clouds, and low-resolution voxels. The authors demonstrate applications including unconditional generation, shape completion, and conditional generation. They report that the method surpasses the state of the art in result quality and generates shapes efficiently, typically in about 2 seconds under most conditions.
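The split into coarse and fine wavelet coefficients can be illustrated with a one-level Haar transform on a 1D signal. This sketch is an assumption-laden simplification and does not reproduce Make-A-Shape's 3D wavelet-tree representation, its filtering, or its packing scheme; the function names are hypothetical, and the unnormalized average/difference form of the Haar transform is used for readability.

```python
def haar_decompose(signal):
    """One level of an unnormalized Haar transform on an even-length list.

    Returns (coarse, detail): pairwise averages and pairwise half-differences.
    """
    pairs = list(zip(signal[0::2], signal[1::2]))
    coarse = [(a + b) / 2 for a, b in pairs]
    detail = [(a - b) / 2 for a, b in pairs]
    return coarse, detail


def haar_reconstruct(coarse, detail):
    """Invert haar_decompose exactly."""
    out = []
    for c, d in zip(coarse, detail):
        out.extend([c + d, c - d])
    return out


signal = [4.0, 2.0, 5.0, 5.0]
coarse, detail = haar_decompose(signal)
restored = haar_reconstruct(coarse, detail)
```

Where the signal is locally flat, the detail coefficient is zero, which is why discarding or filtering small detail coefficients gives a compact encoding with little loss.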