💻

programming Category

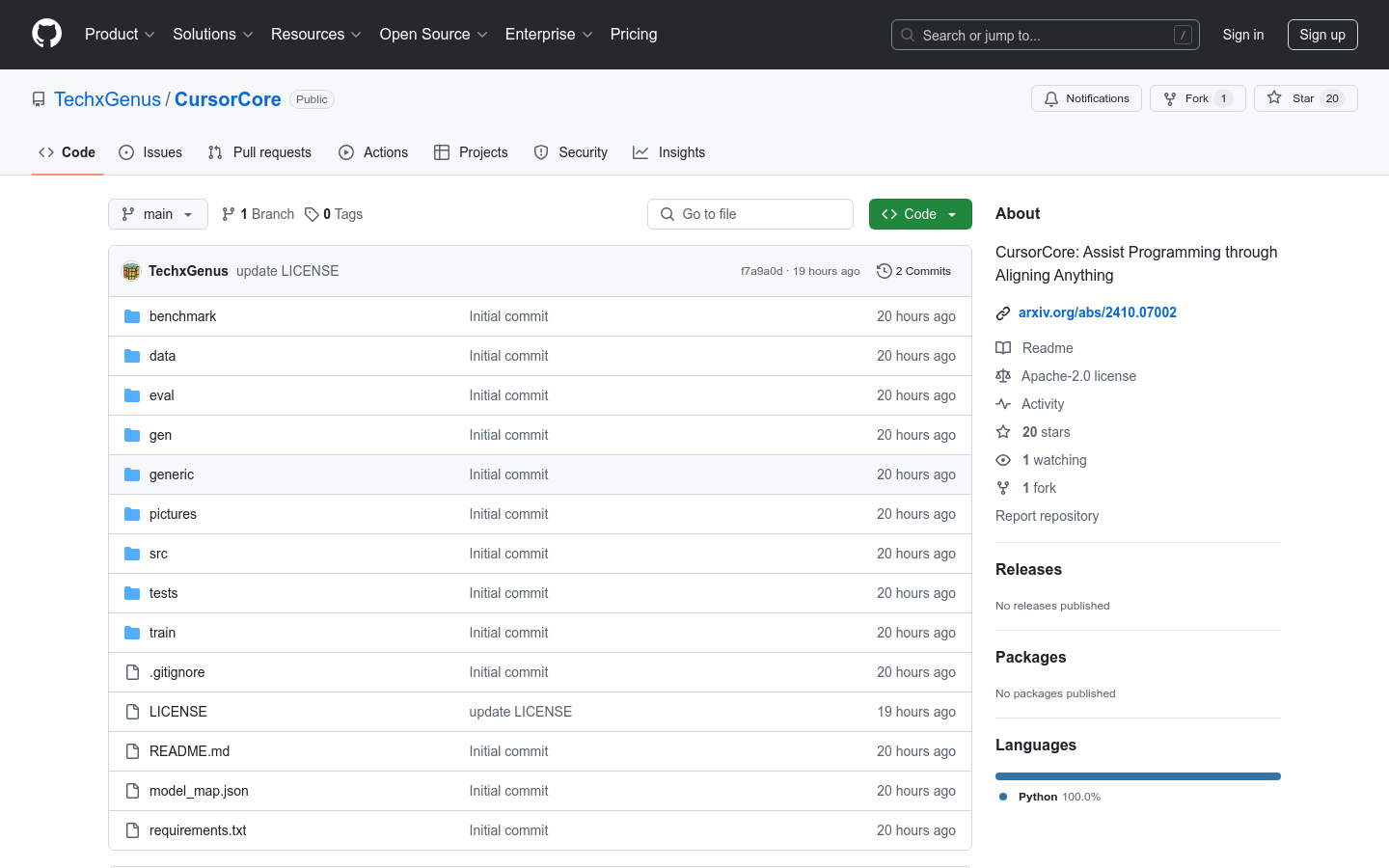

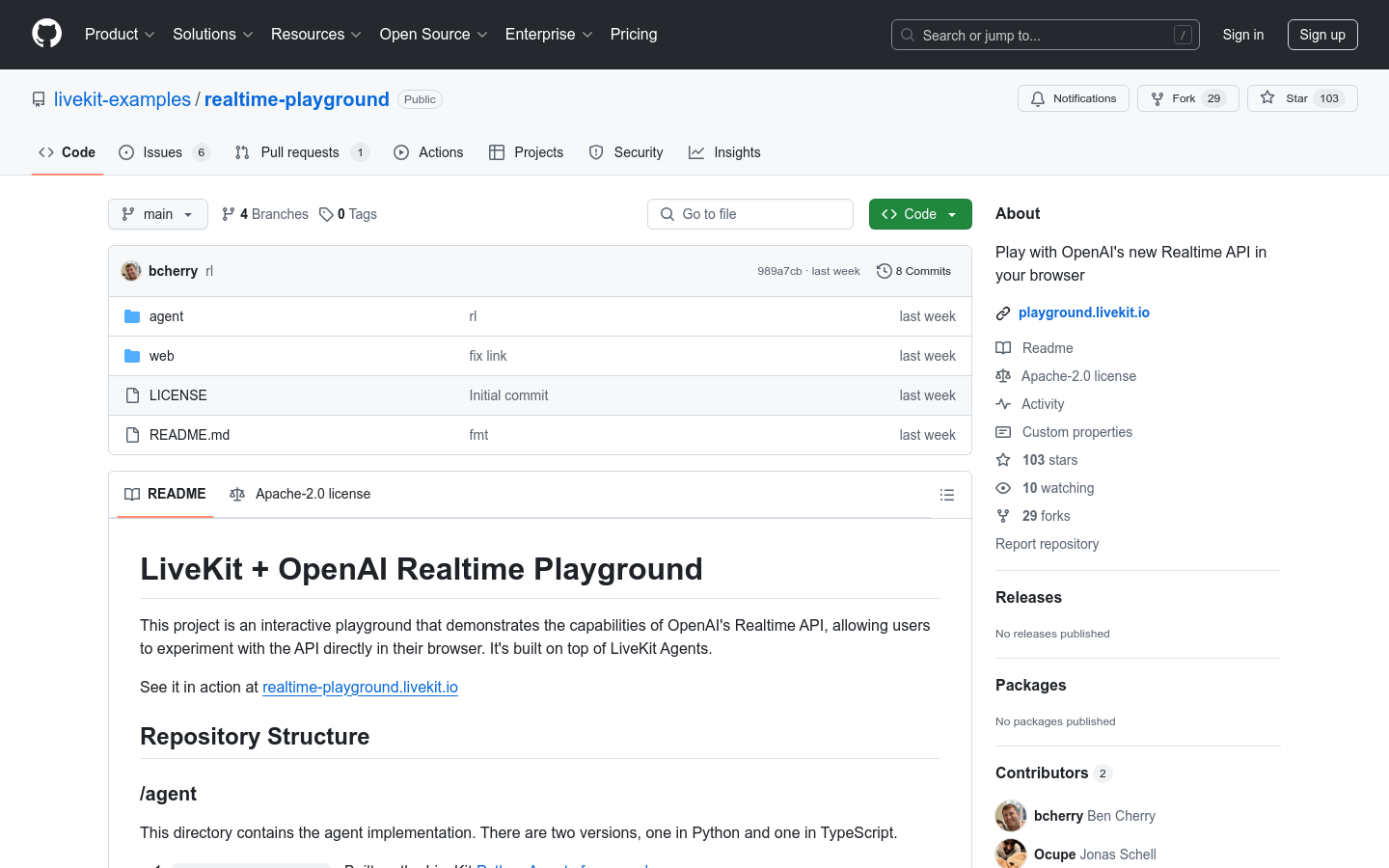

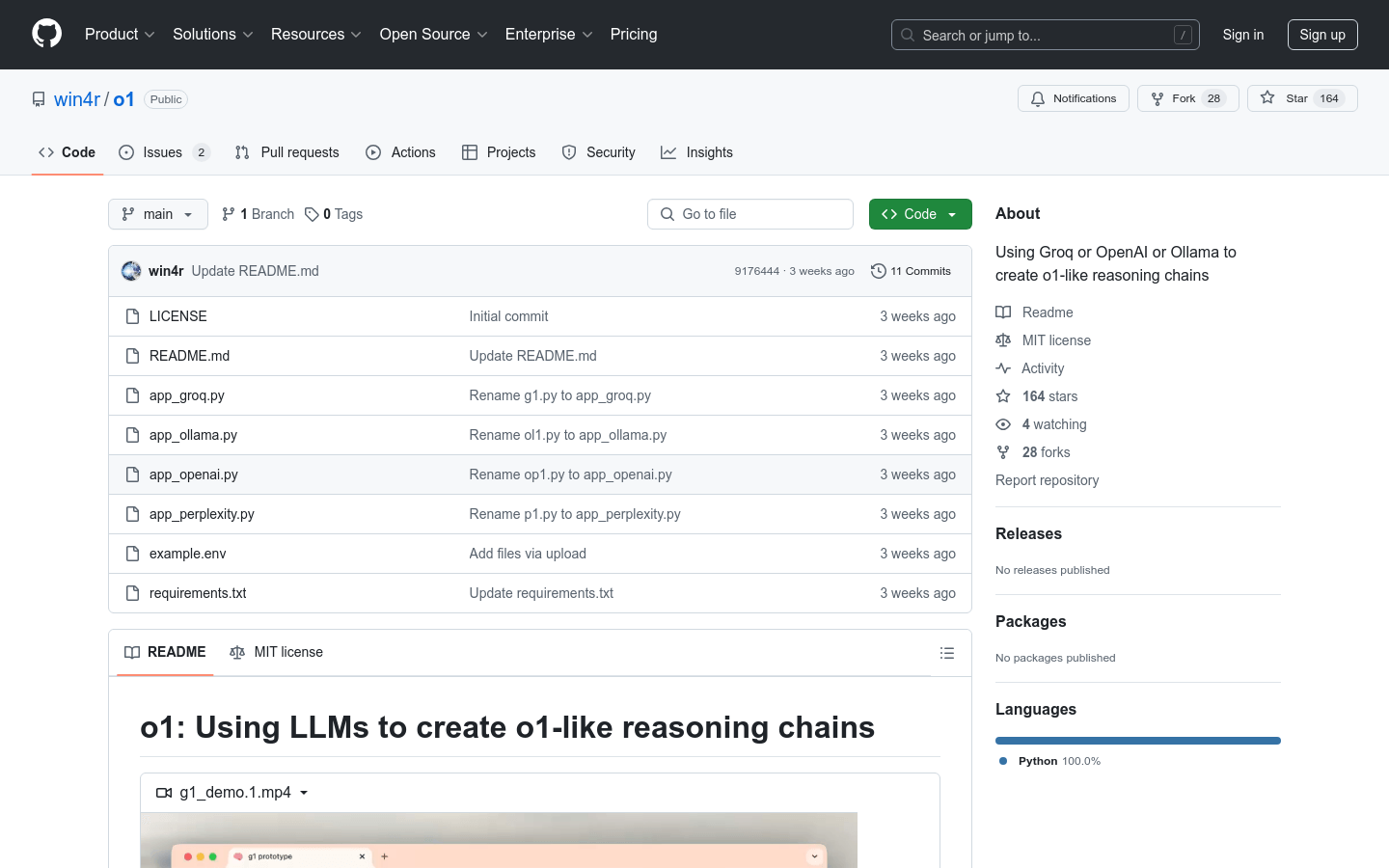

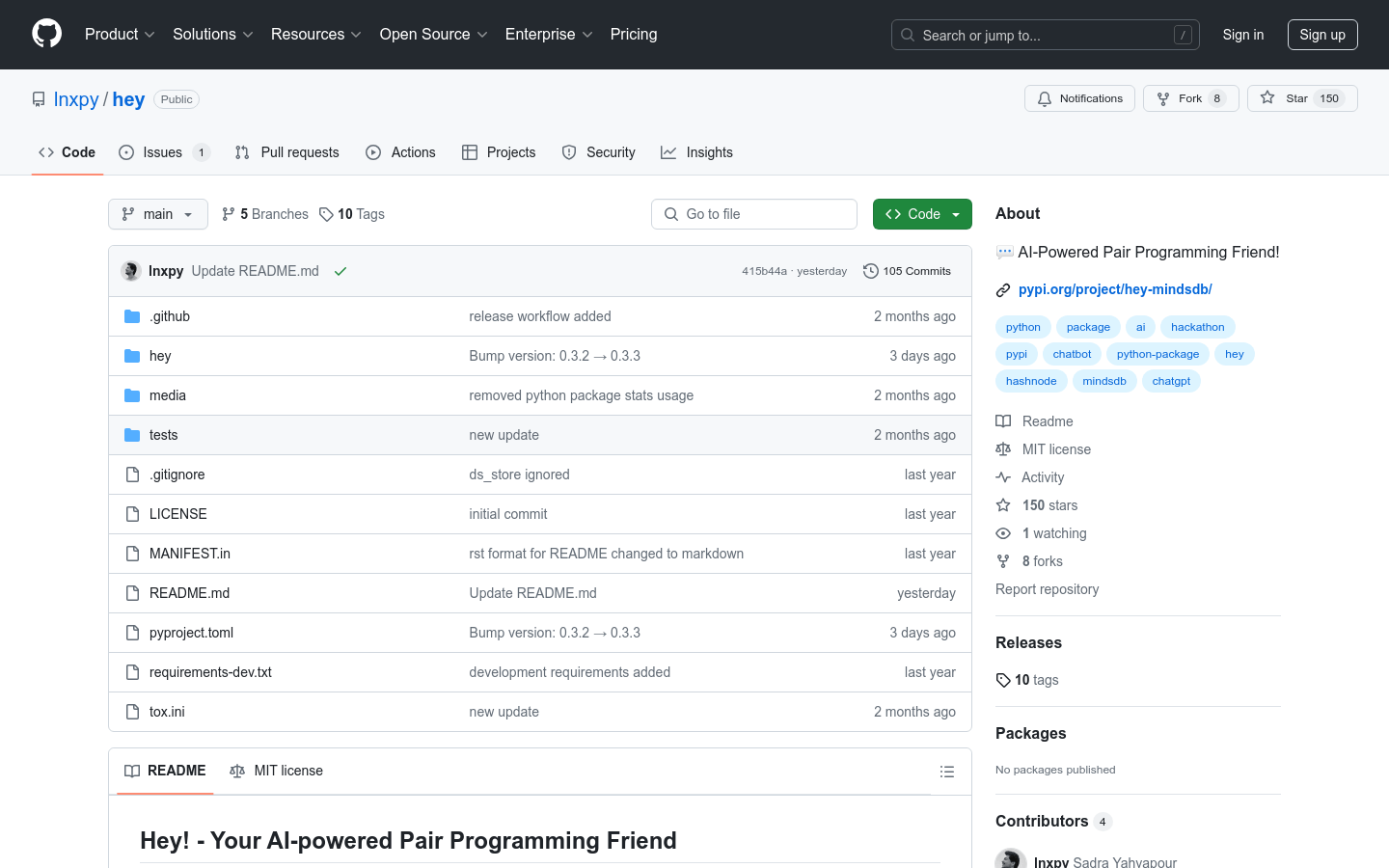

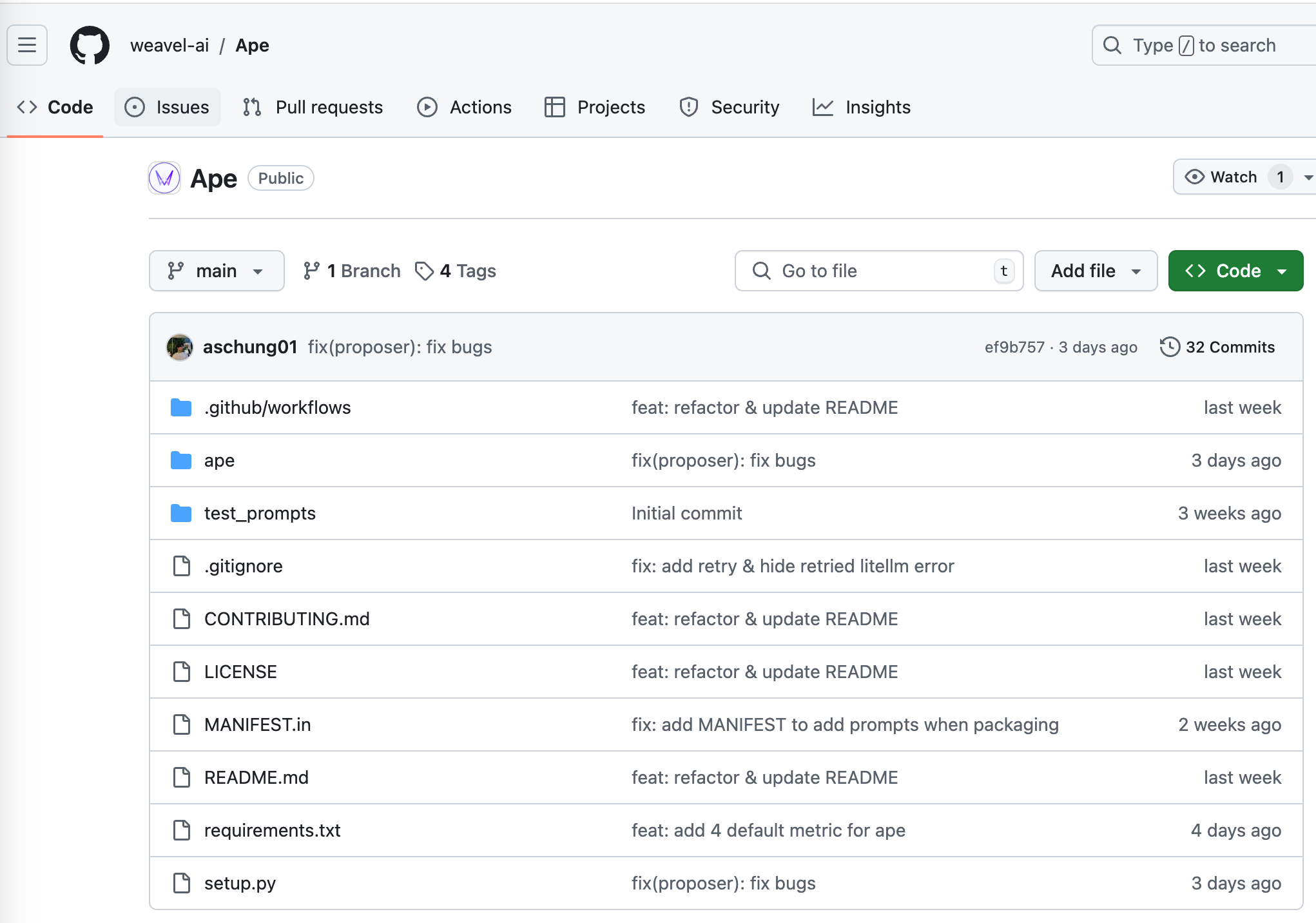

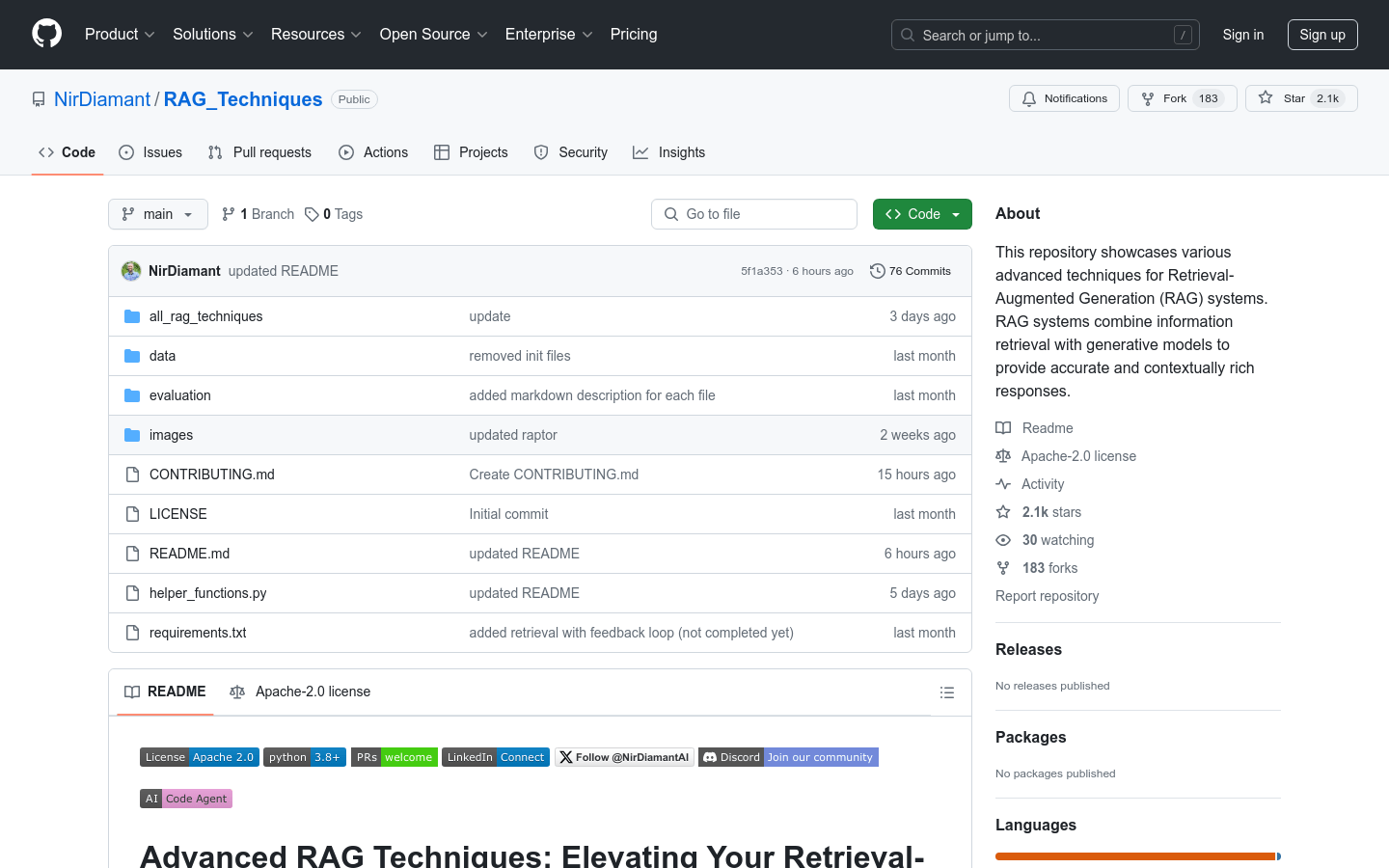

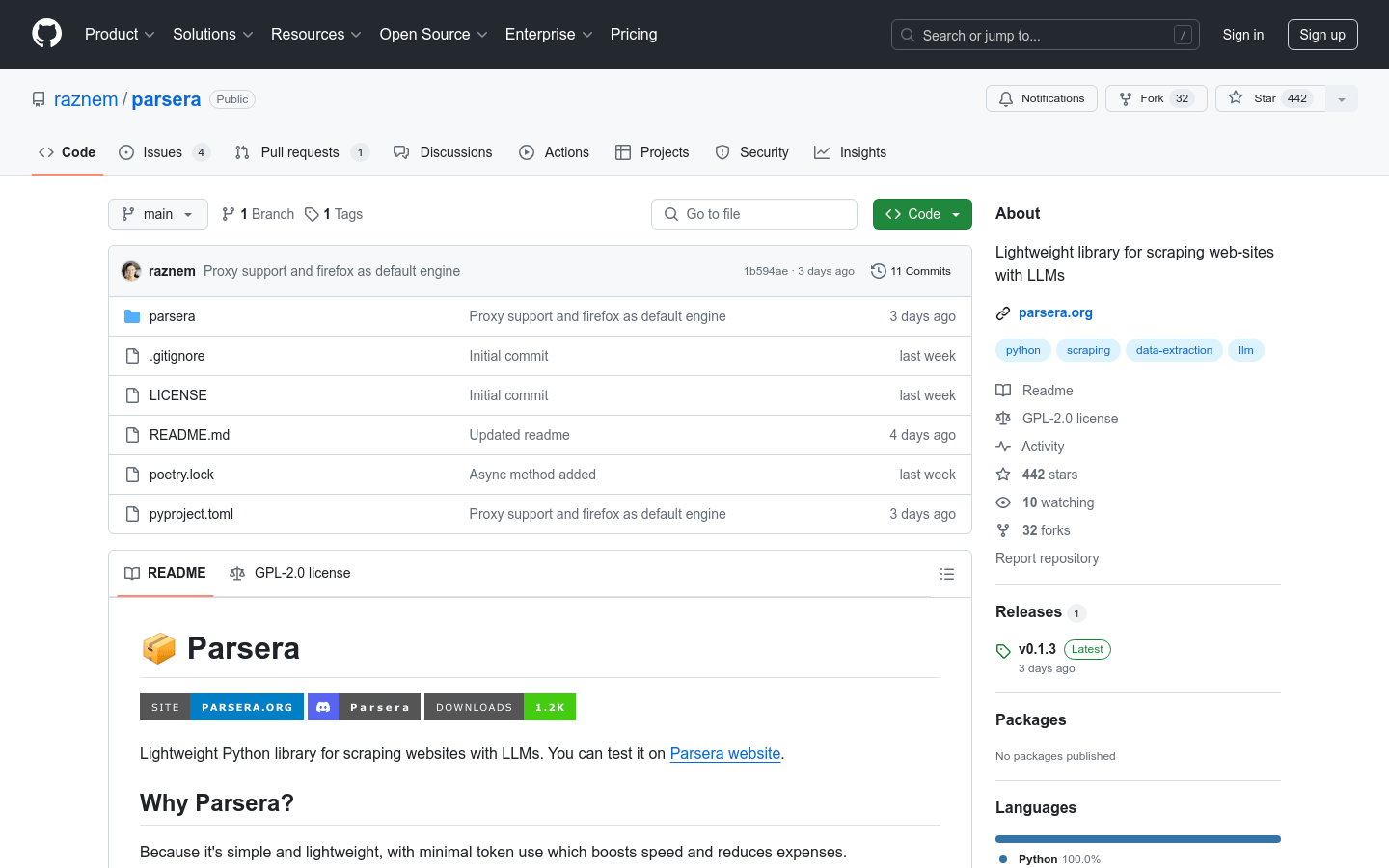

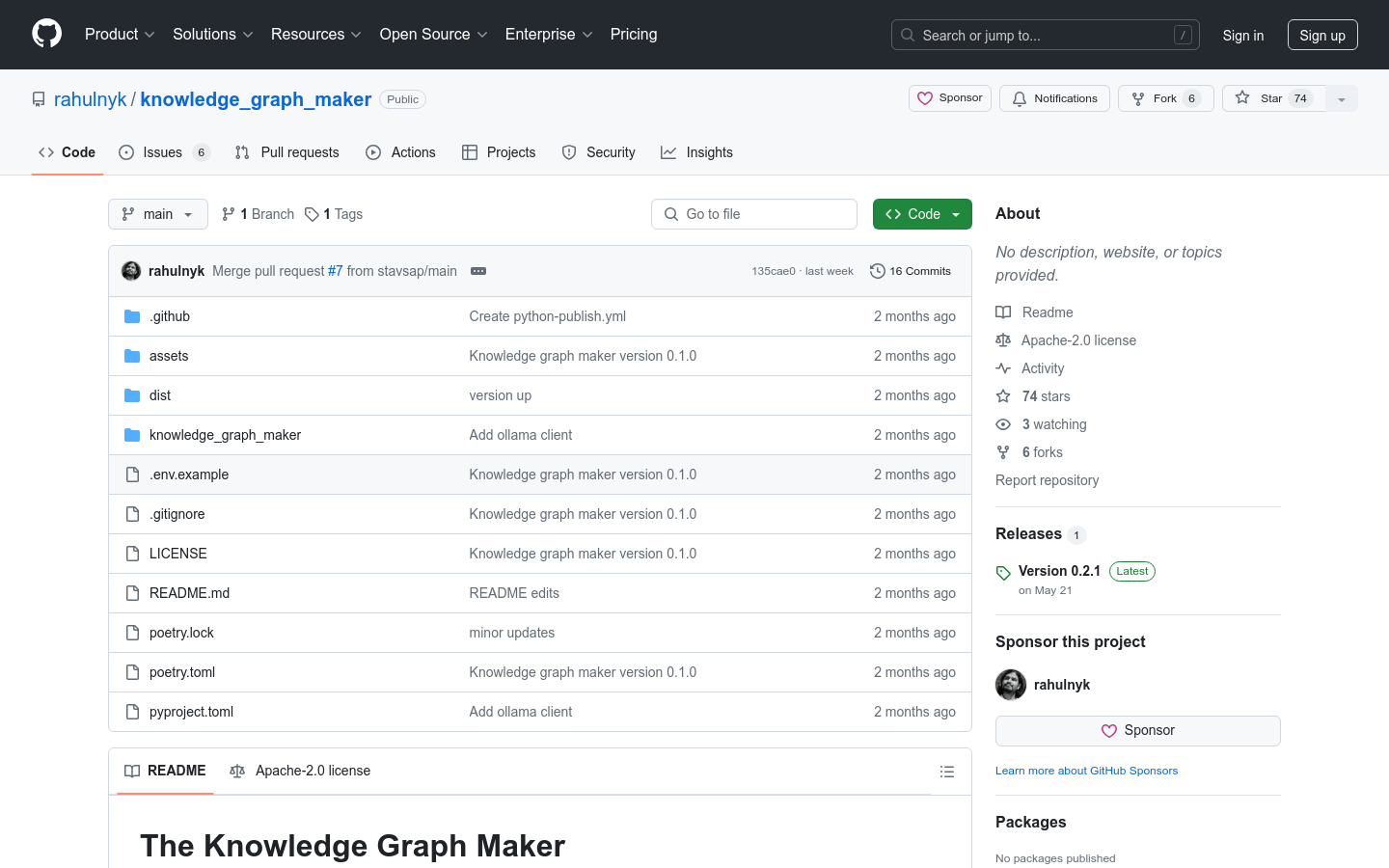

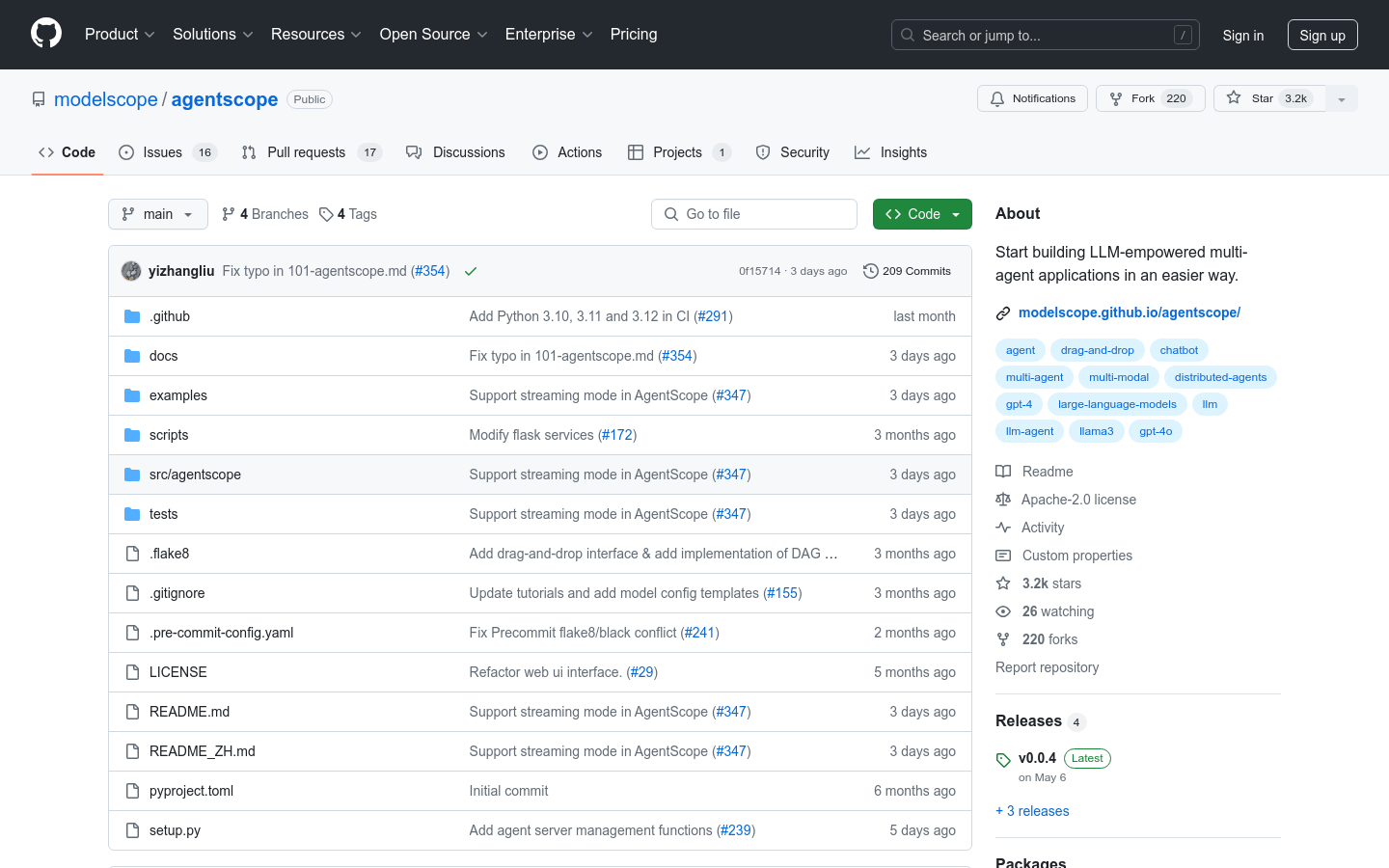

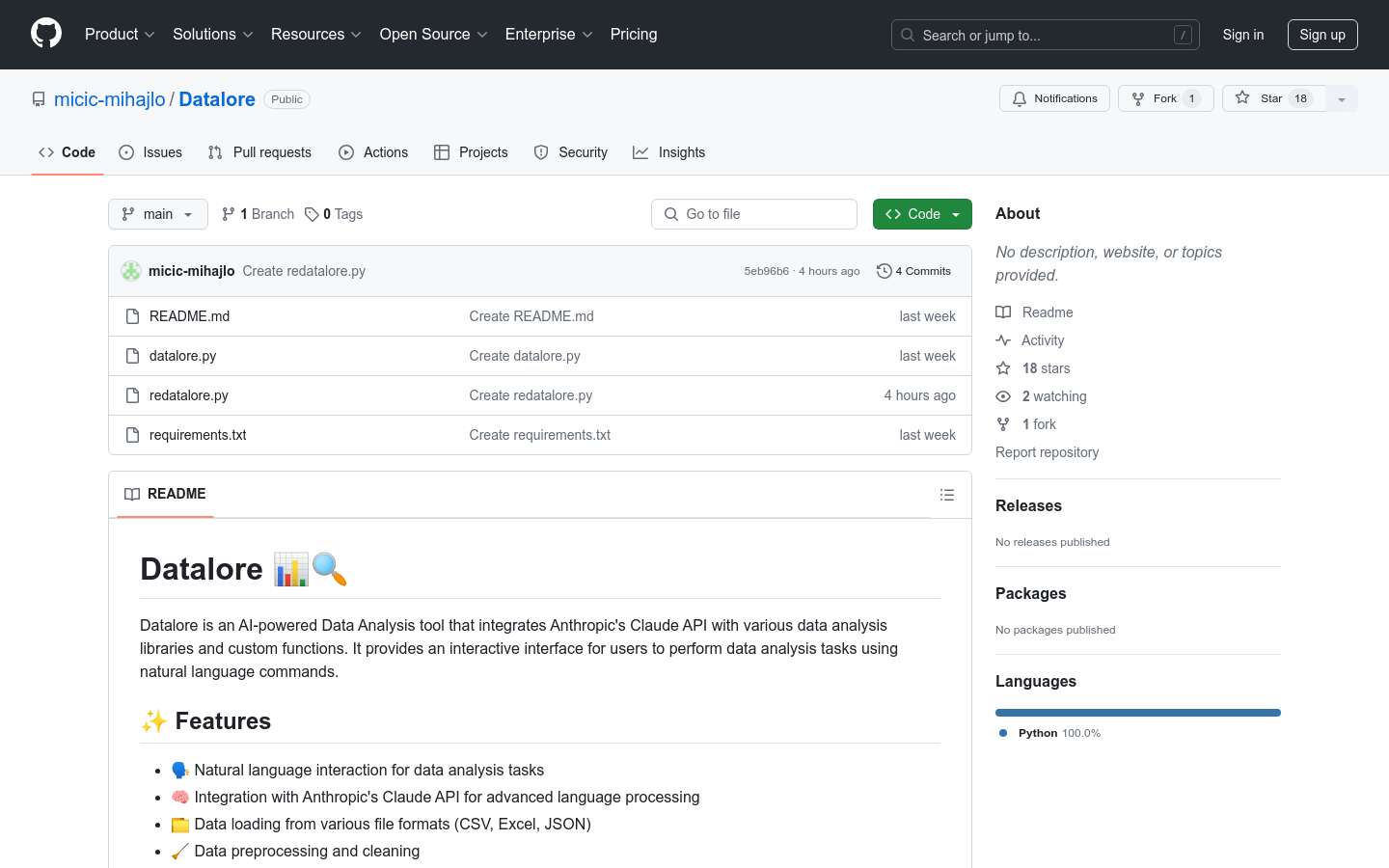

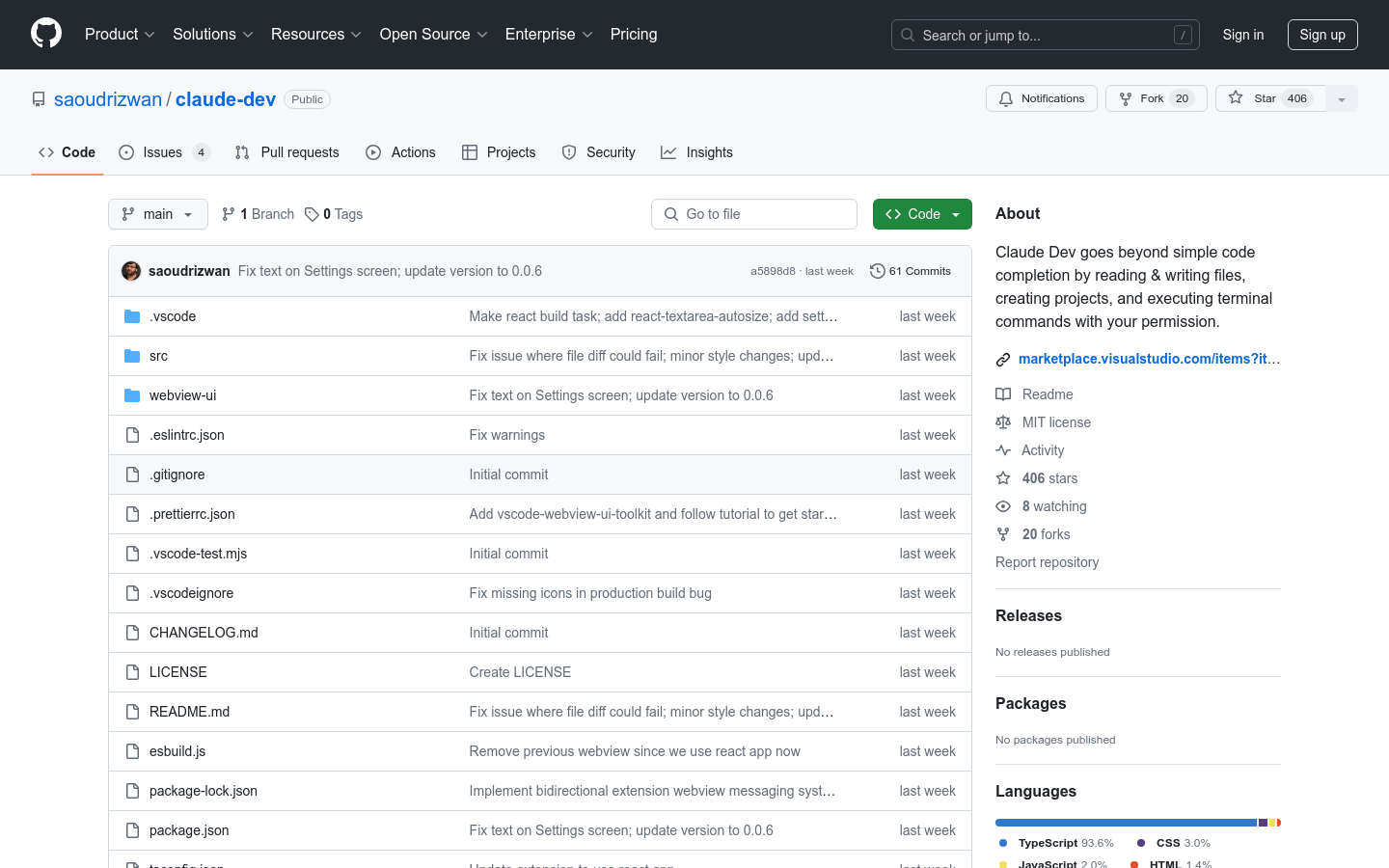

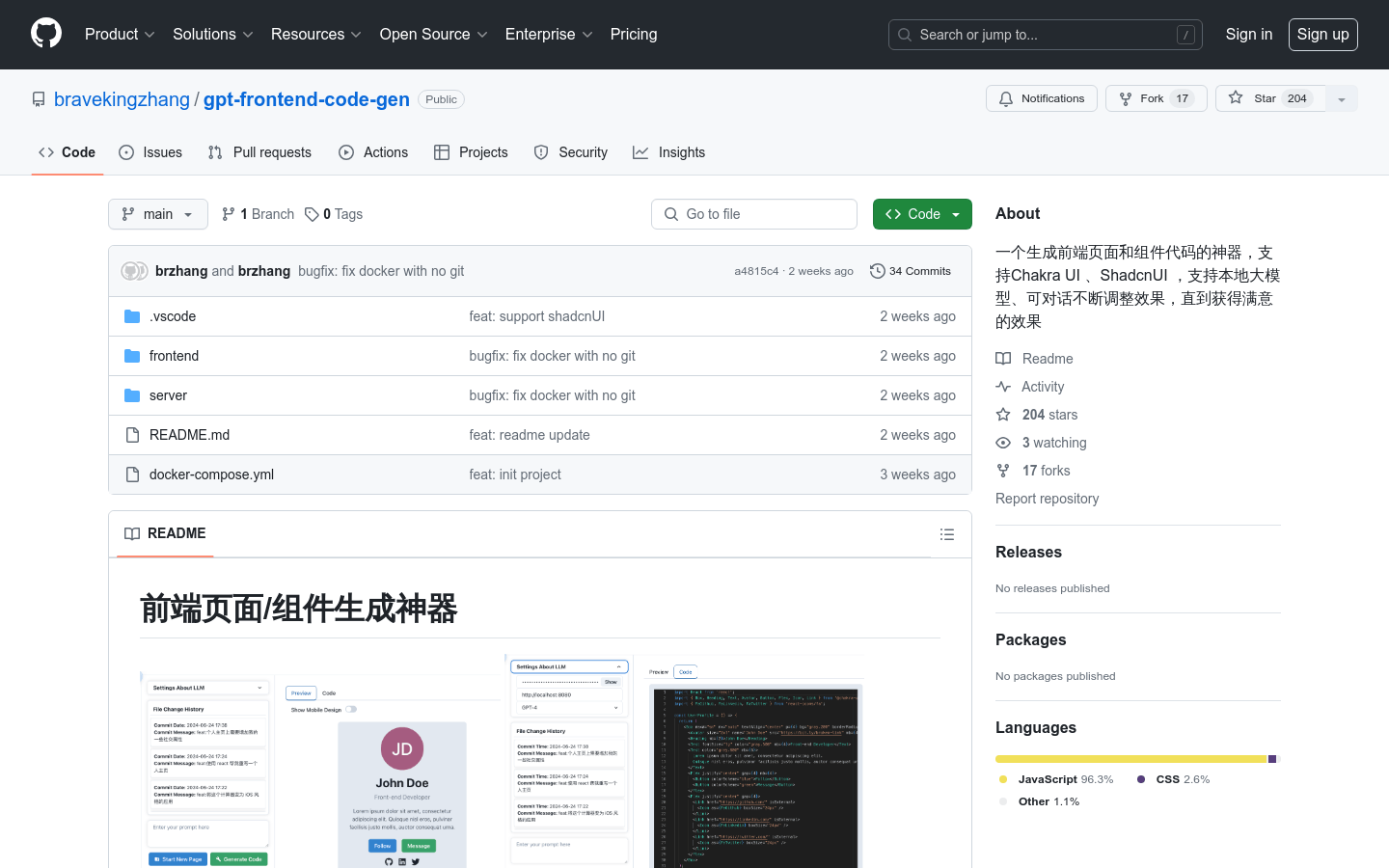

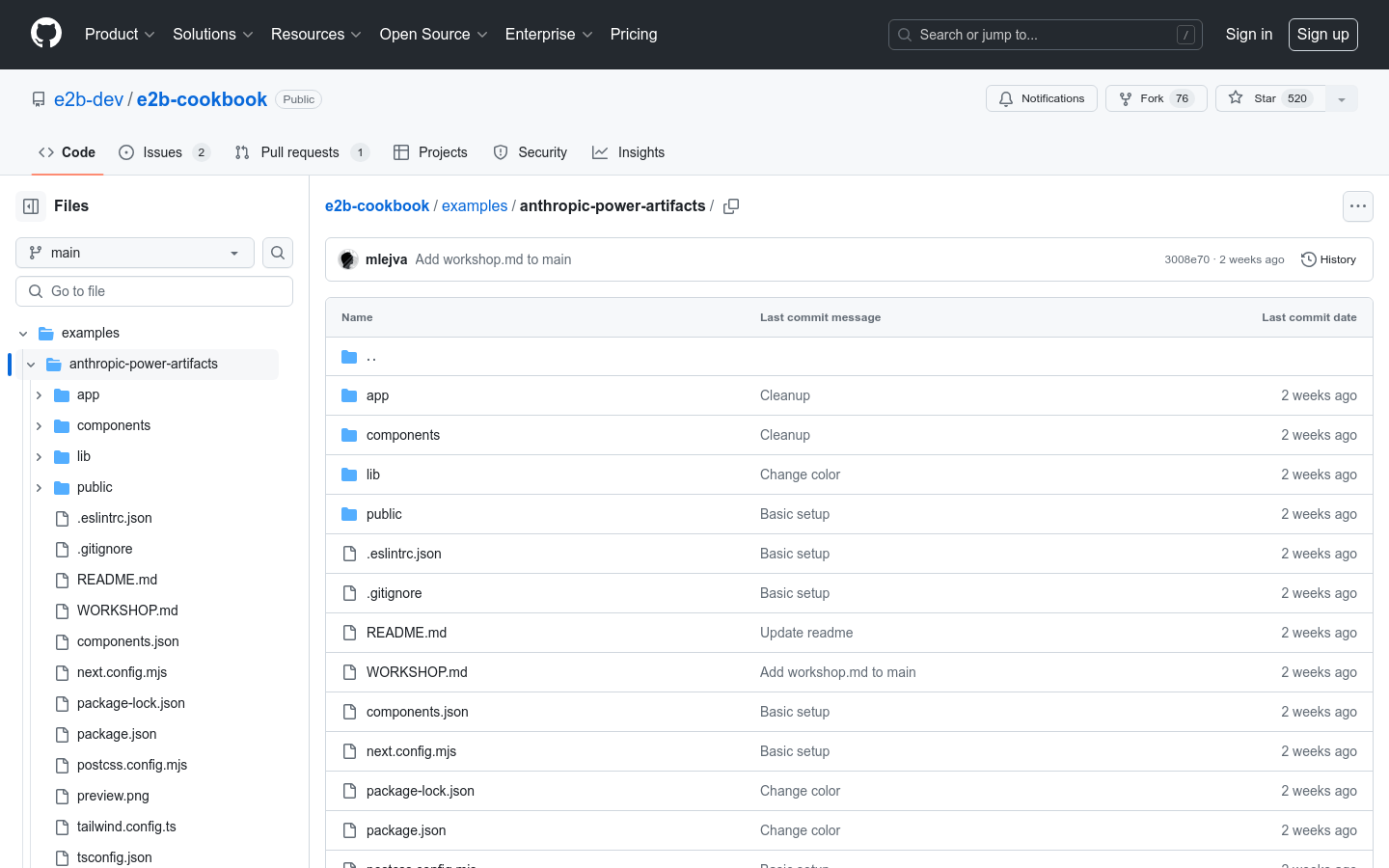

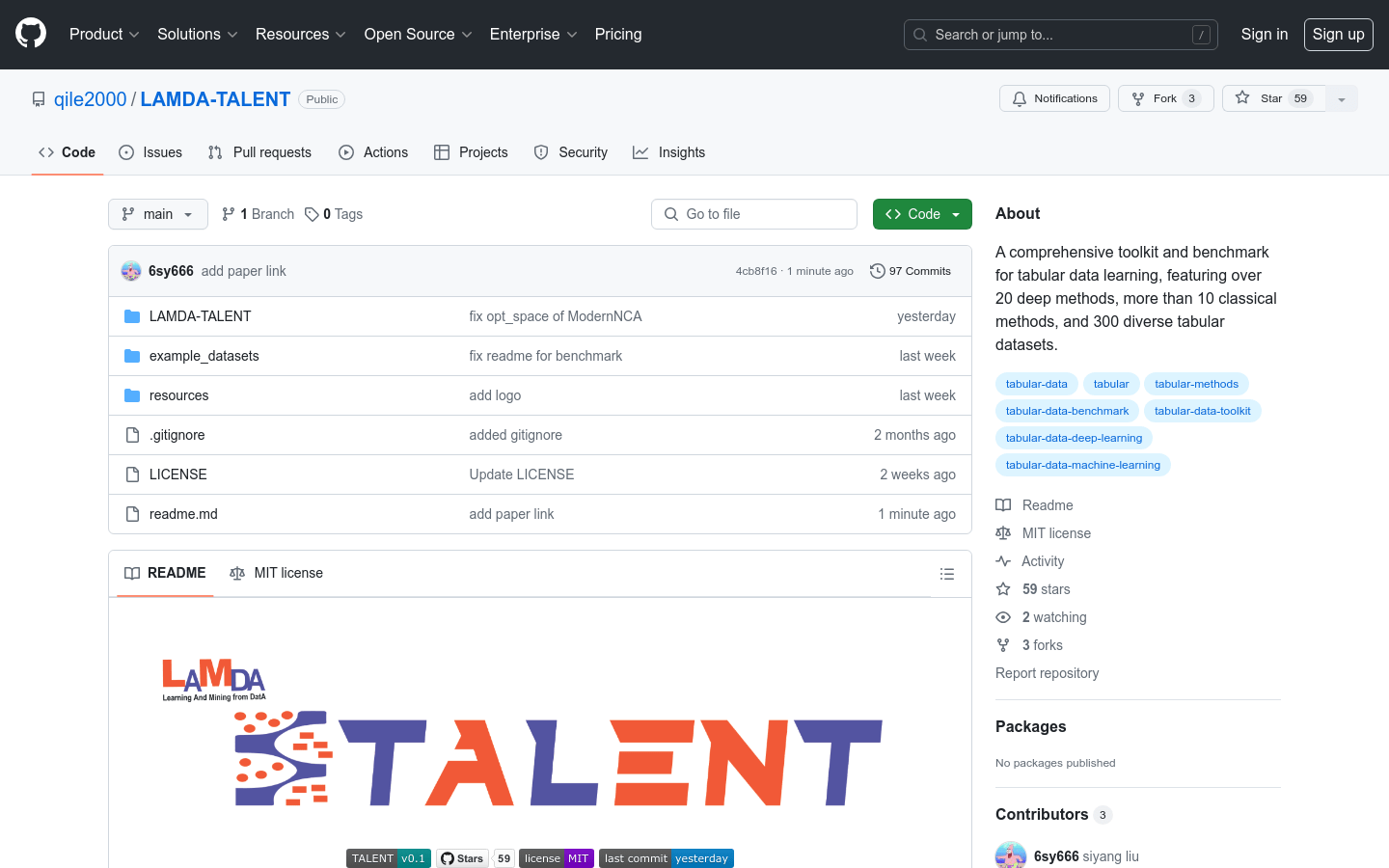

AI development assistant

Found 100 AI tools

Primary Category: programming

Subcategory: AI development assistant

Related AI Tools

Related Subcategories

Explore other subcategories under programming.

Other Categories

Explore More programming Tools

AI development assistant is a popular (hot) subcategory under programming, listing 294 quality AI tools.