Programming Category

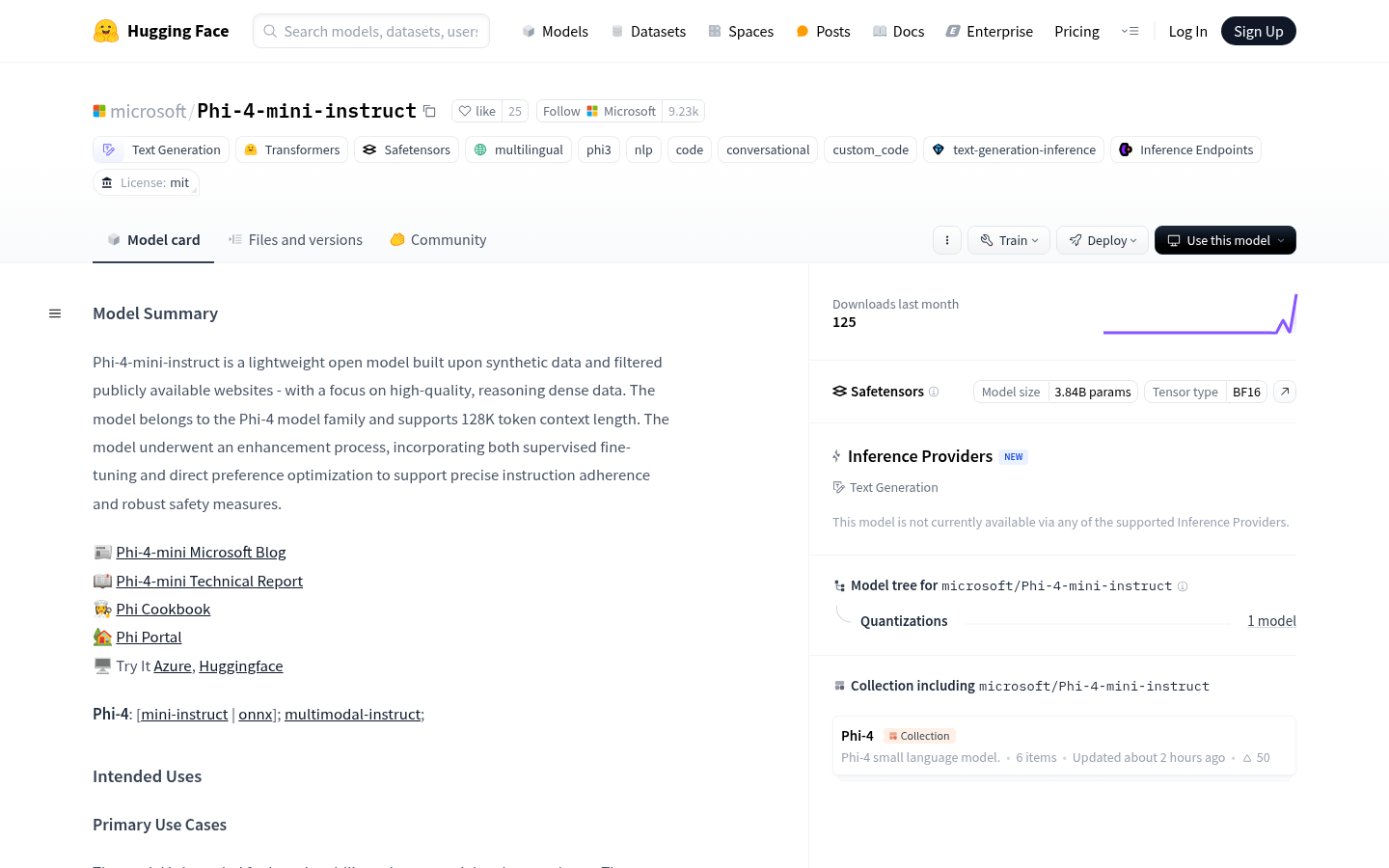

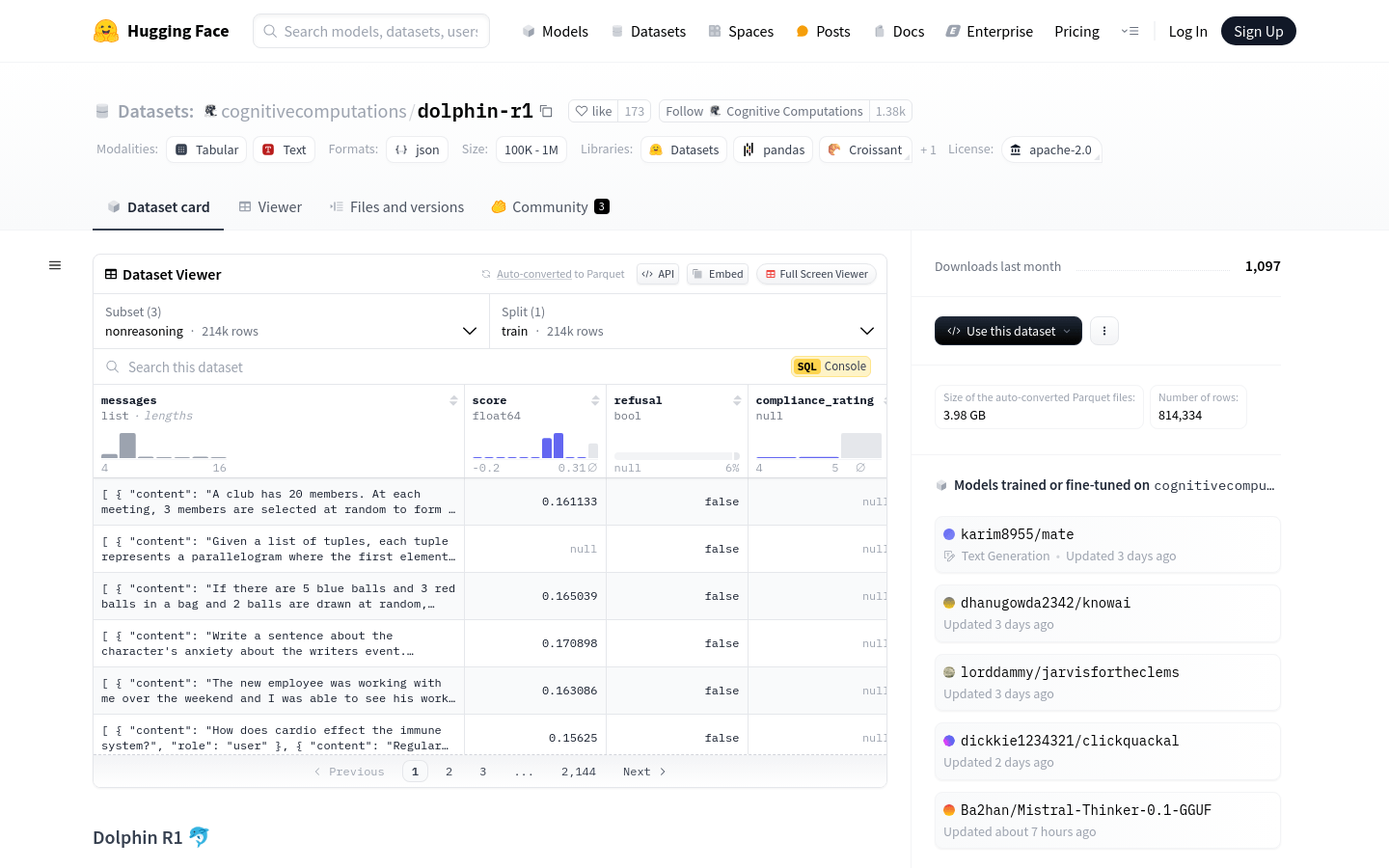

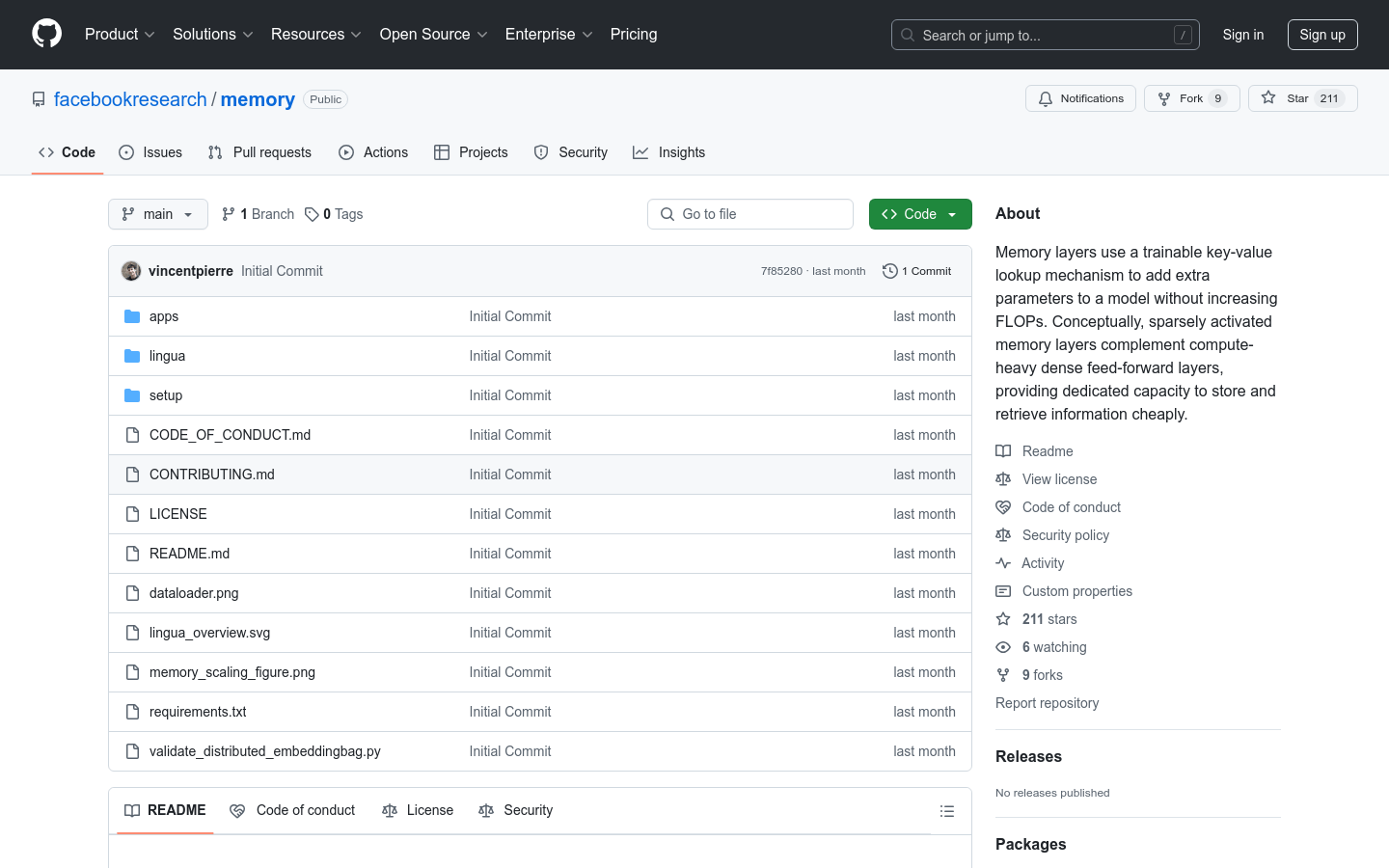

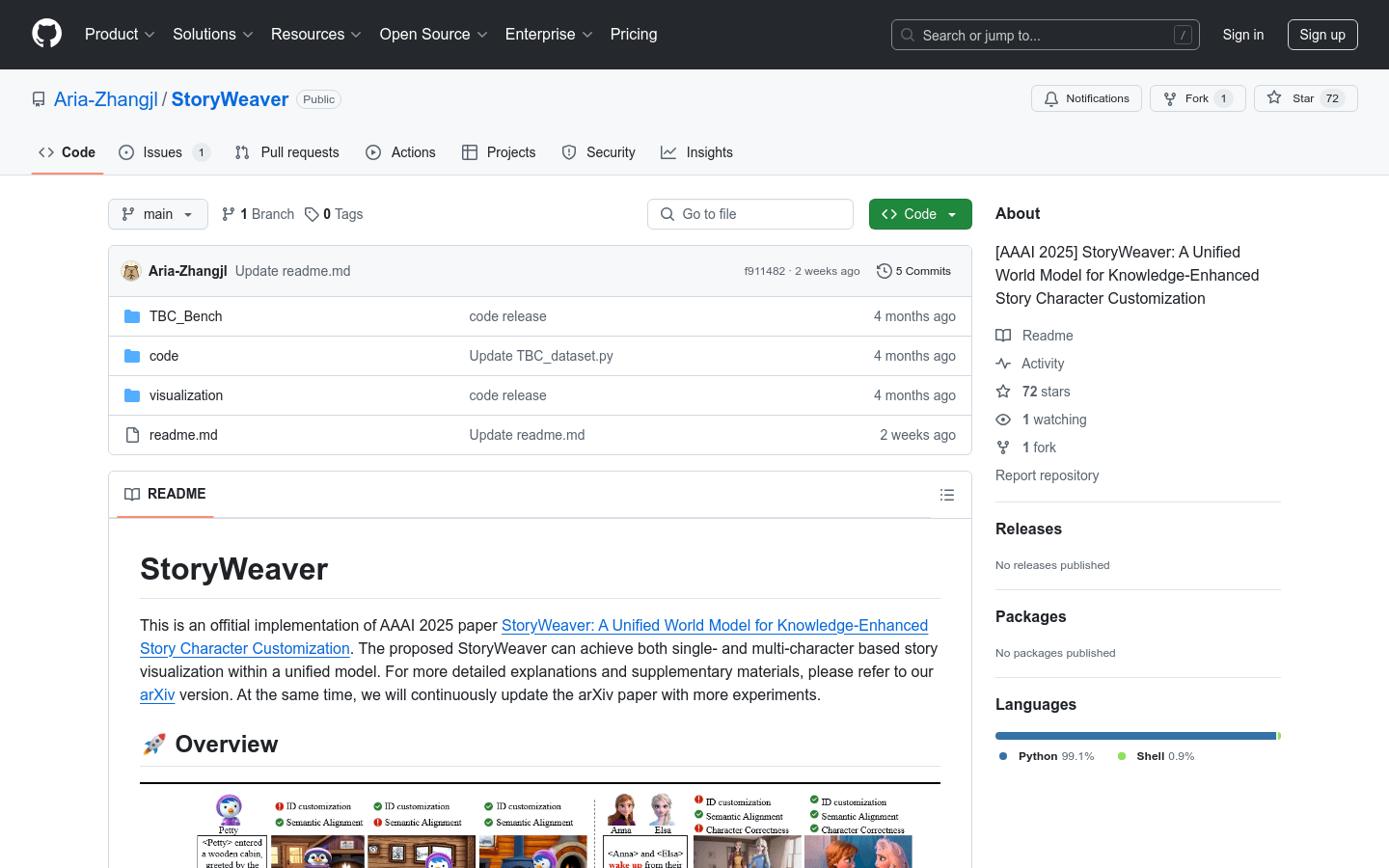

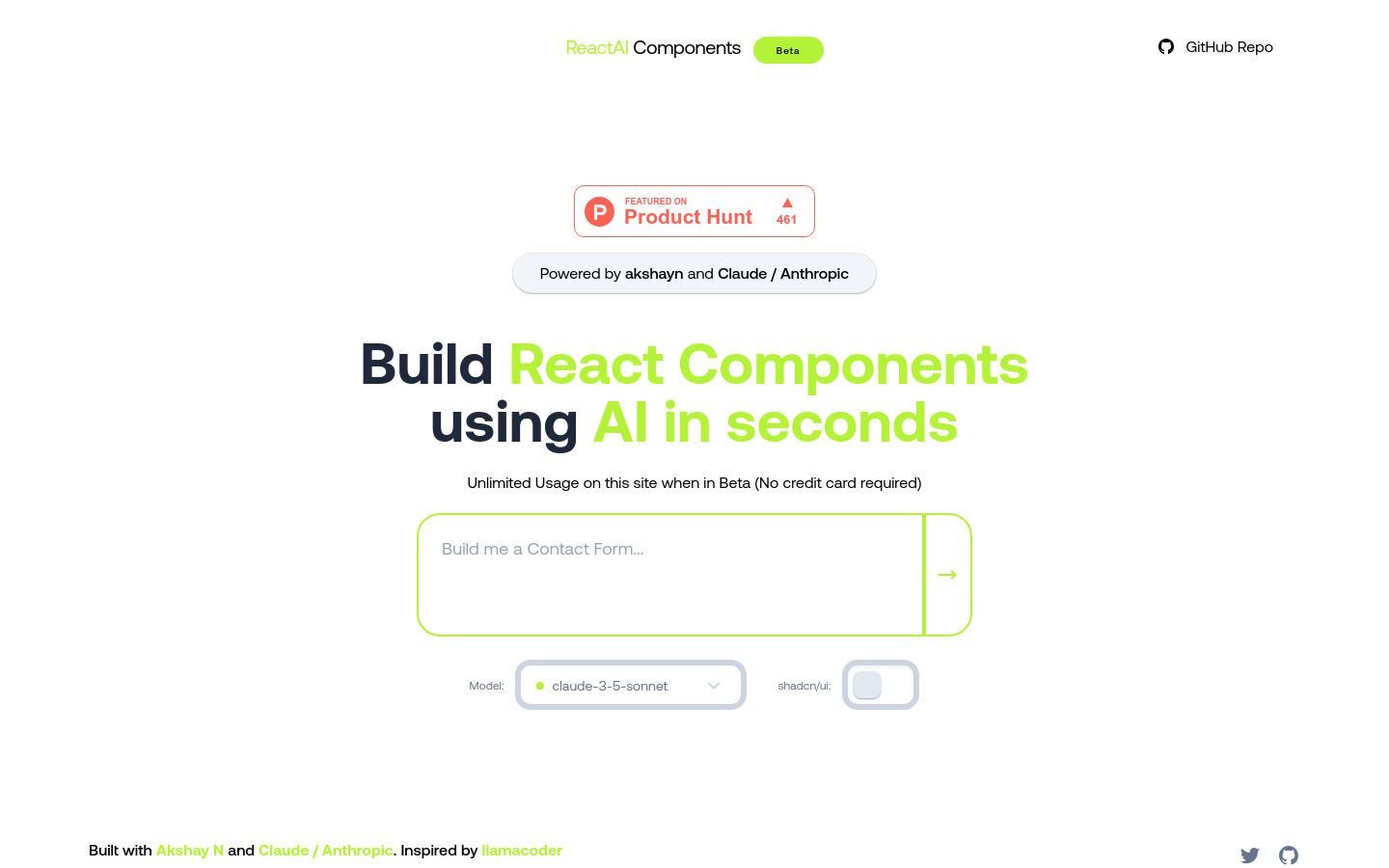

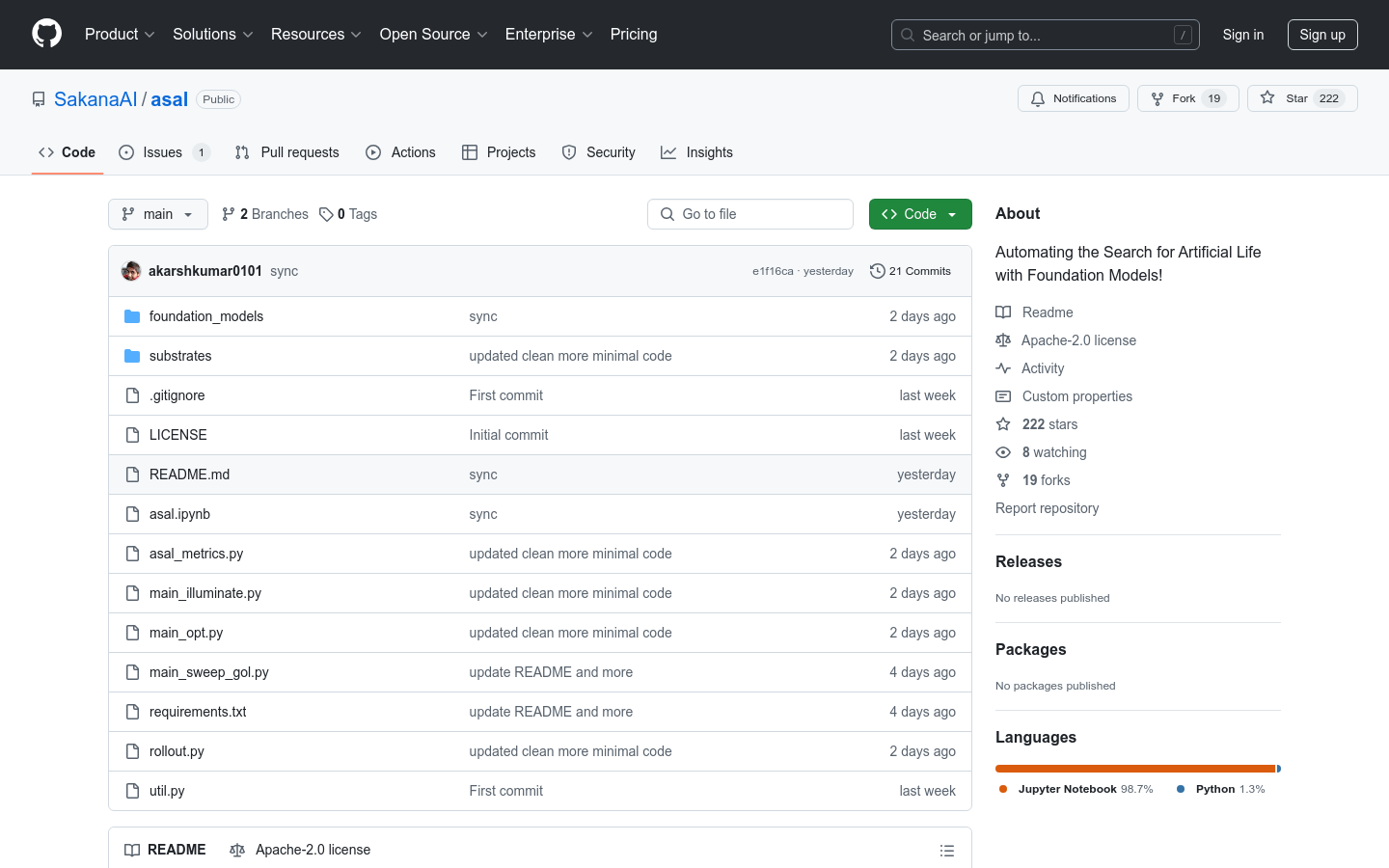

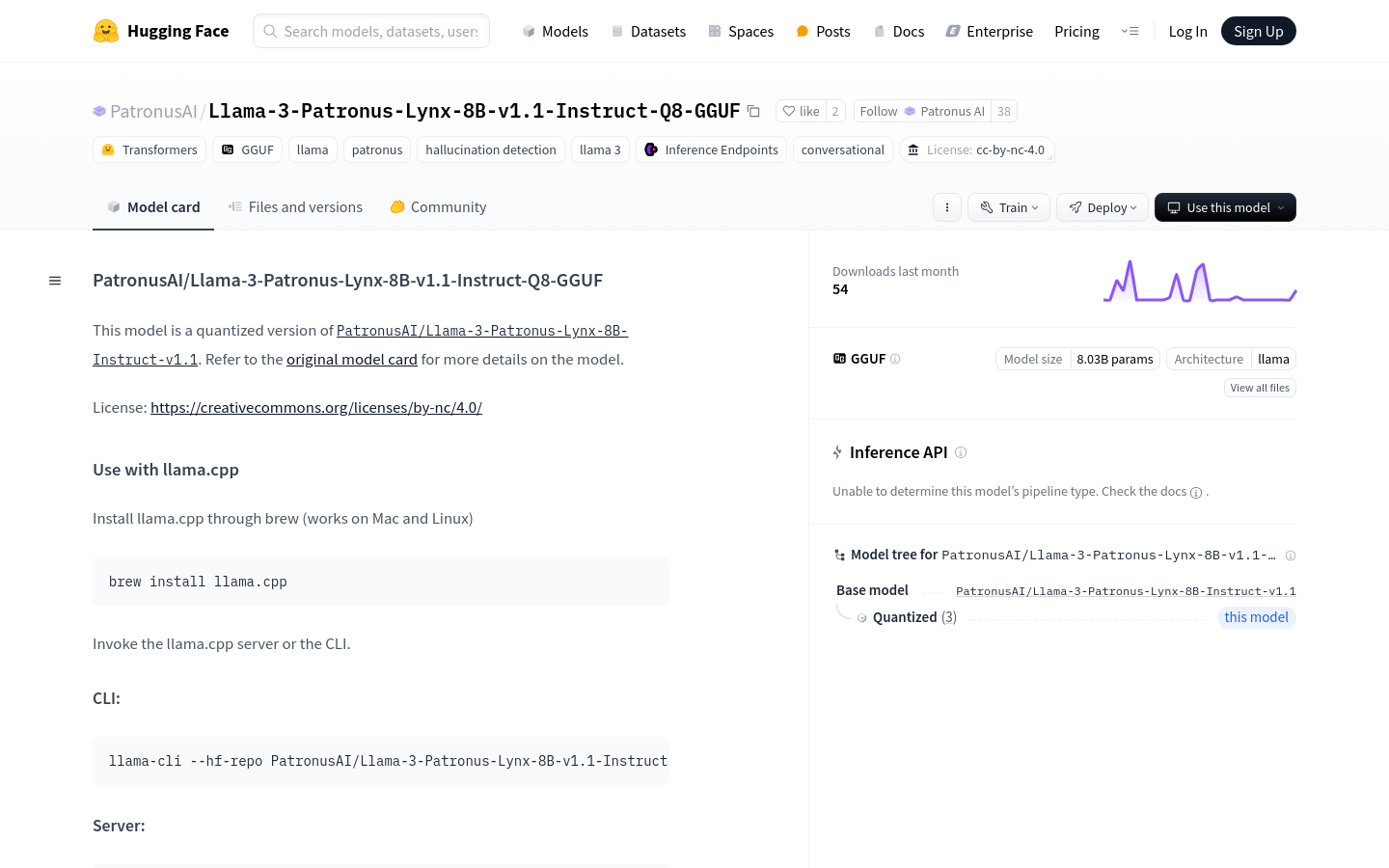

AI model

Found 100 AI tools

Primary Category: programming

Subcategory: AI model

AI model is a popular subcategory under programming, among 465 quality AI tools.