🎵

Music Category

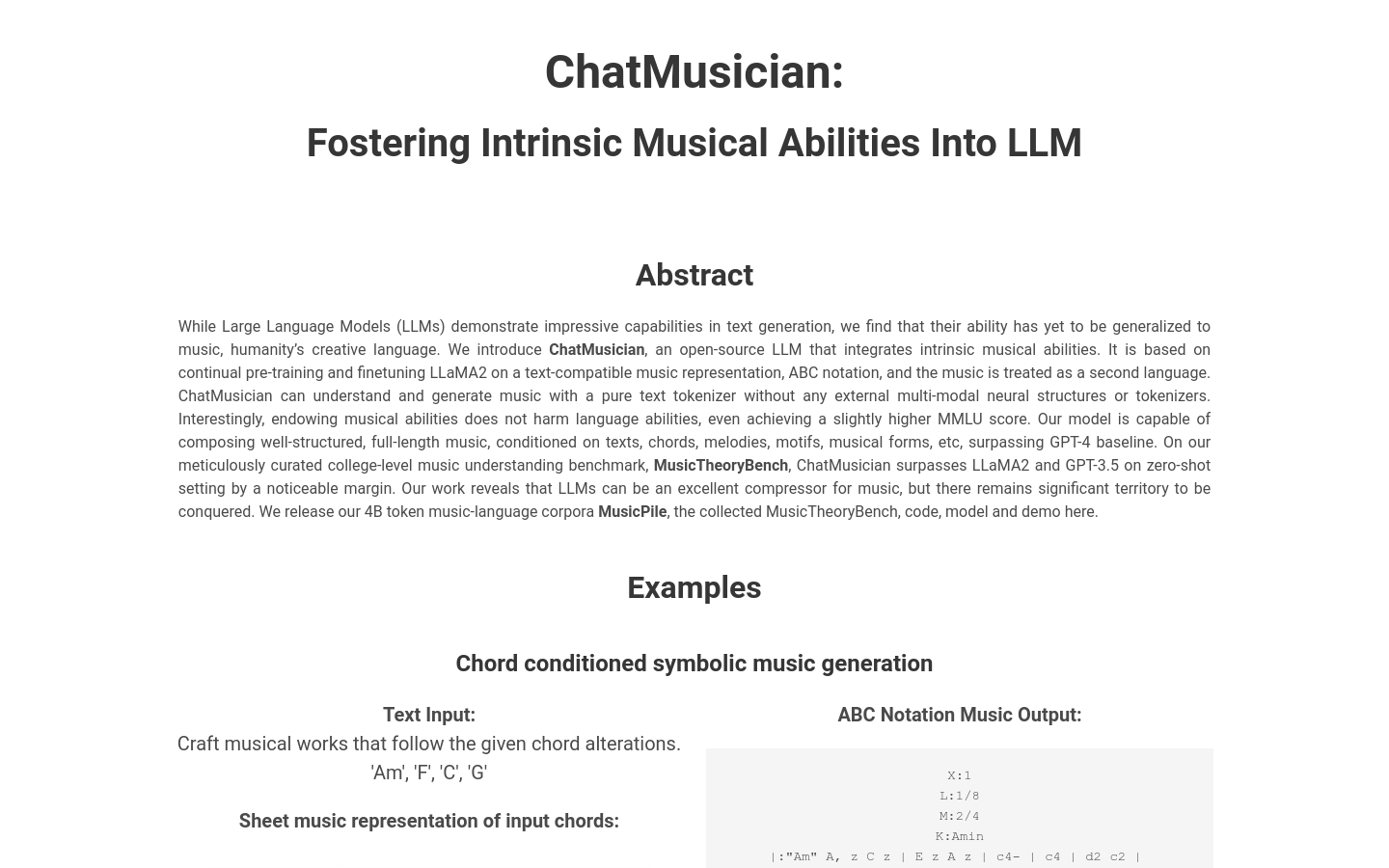

AI model

Found 44 AI tools


Primary Category: music

Subcategory: AI model



AI model is a popular subcategory under music, with 44 quality AI tools.