🎬

video Category

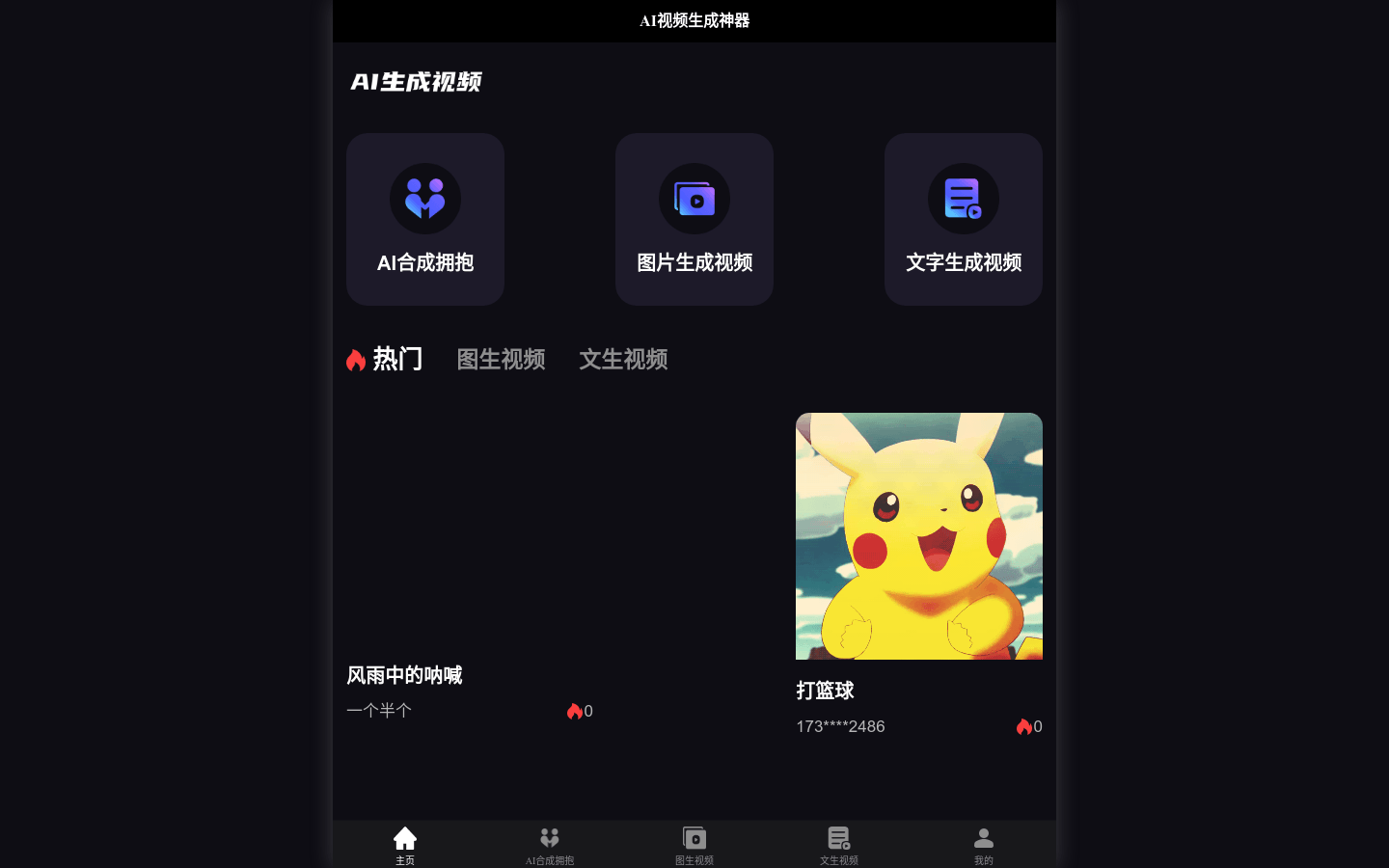

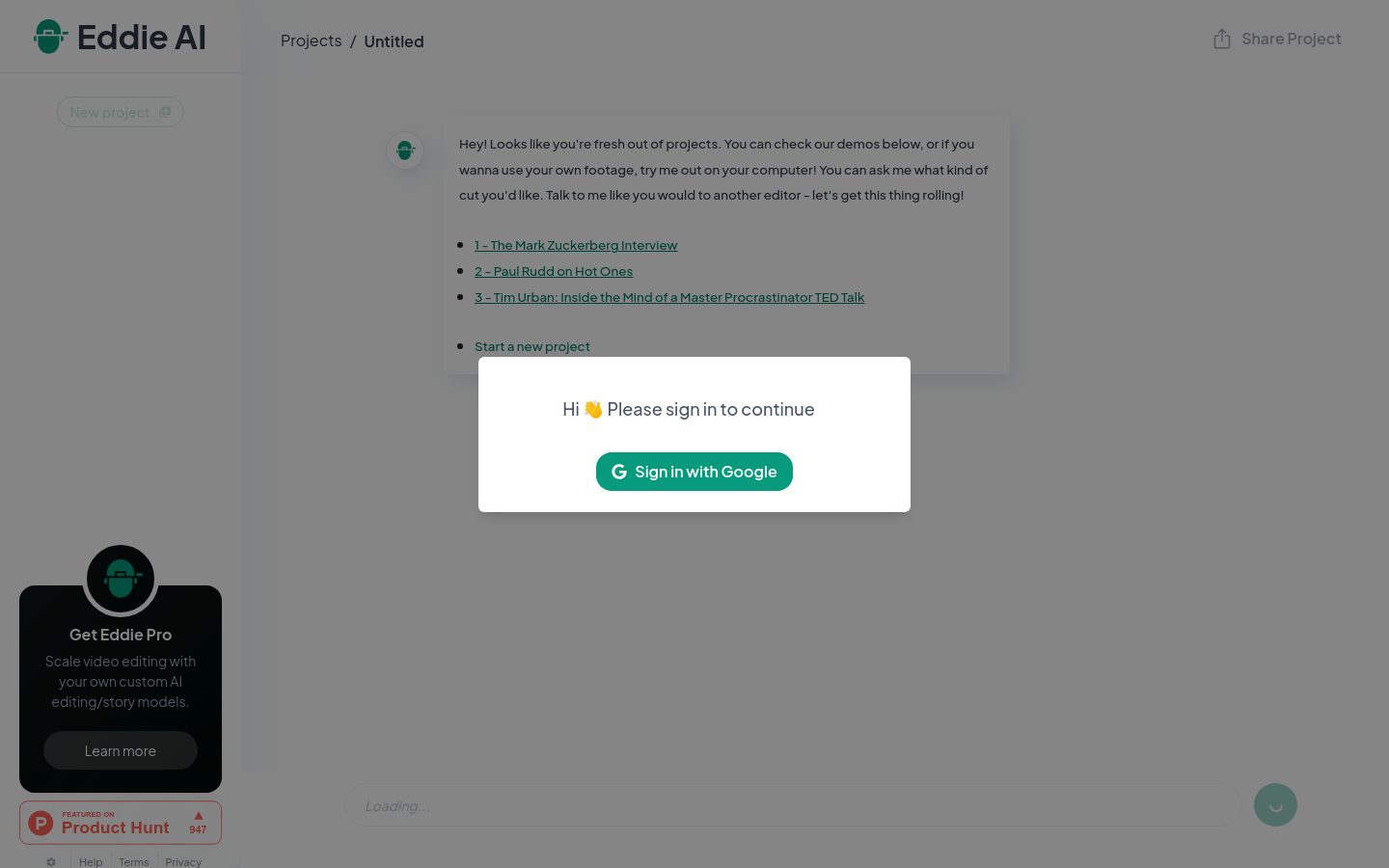

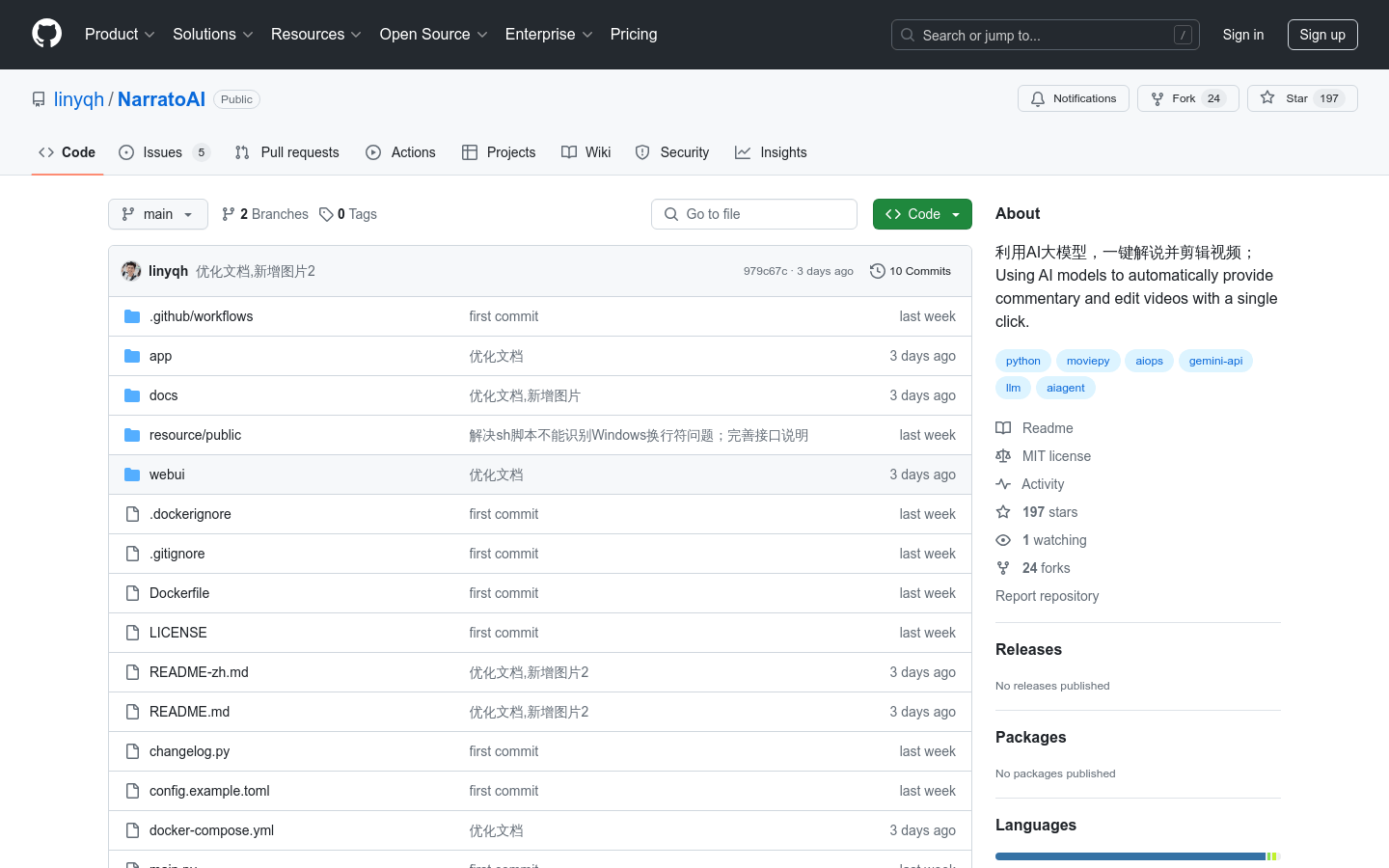

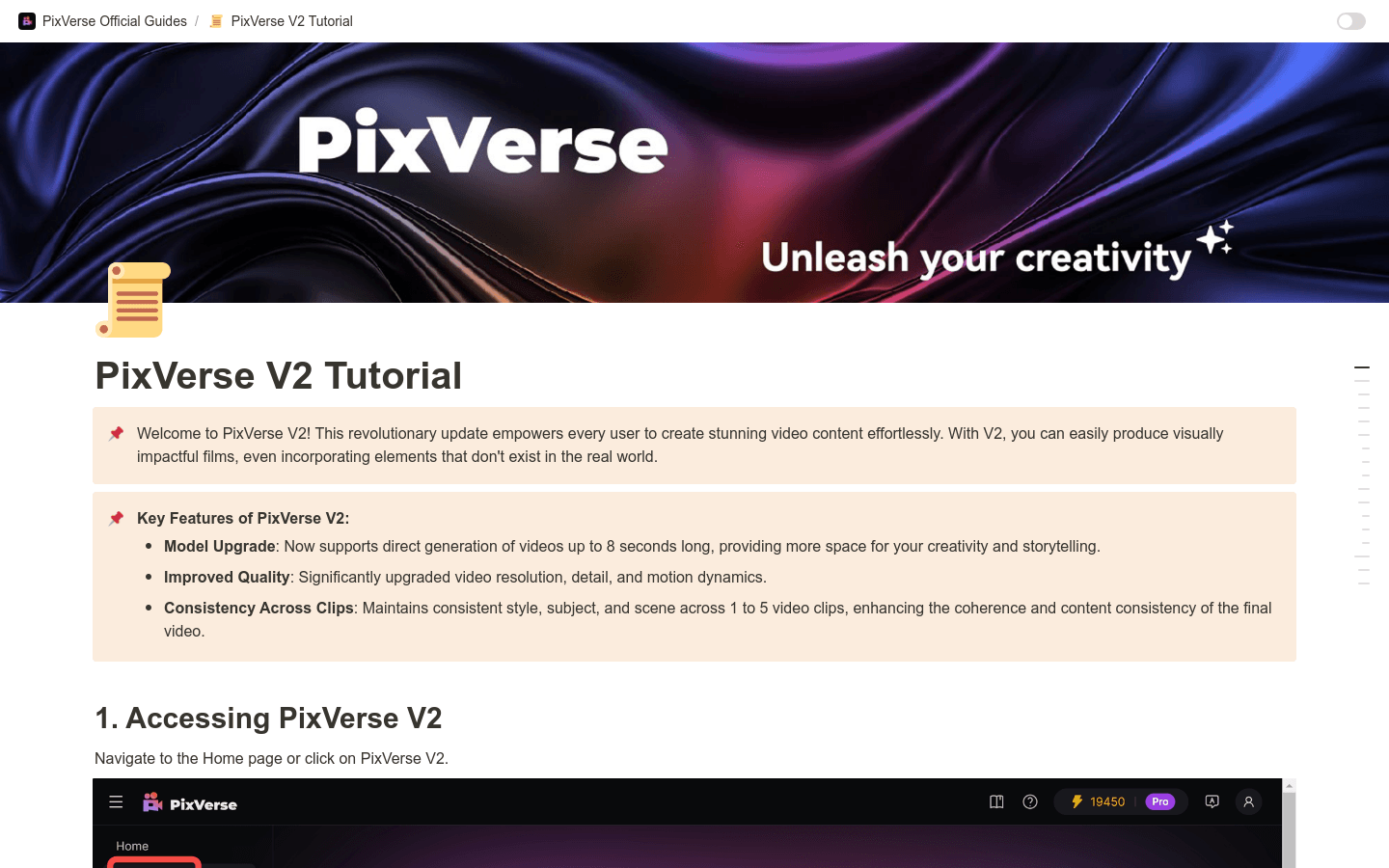

AI video editing

Found 100 AI tools


Primary Category: video

Subcategory: AI video editing


Related AI Tools


Related Subcategories

Explore other subcategories under video

Other Categories


Explore More video Tools

AI video editing (Hot, video): a popular subcategory with 124 quality AI tools