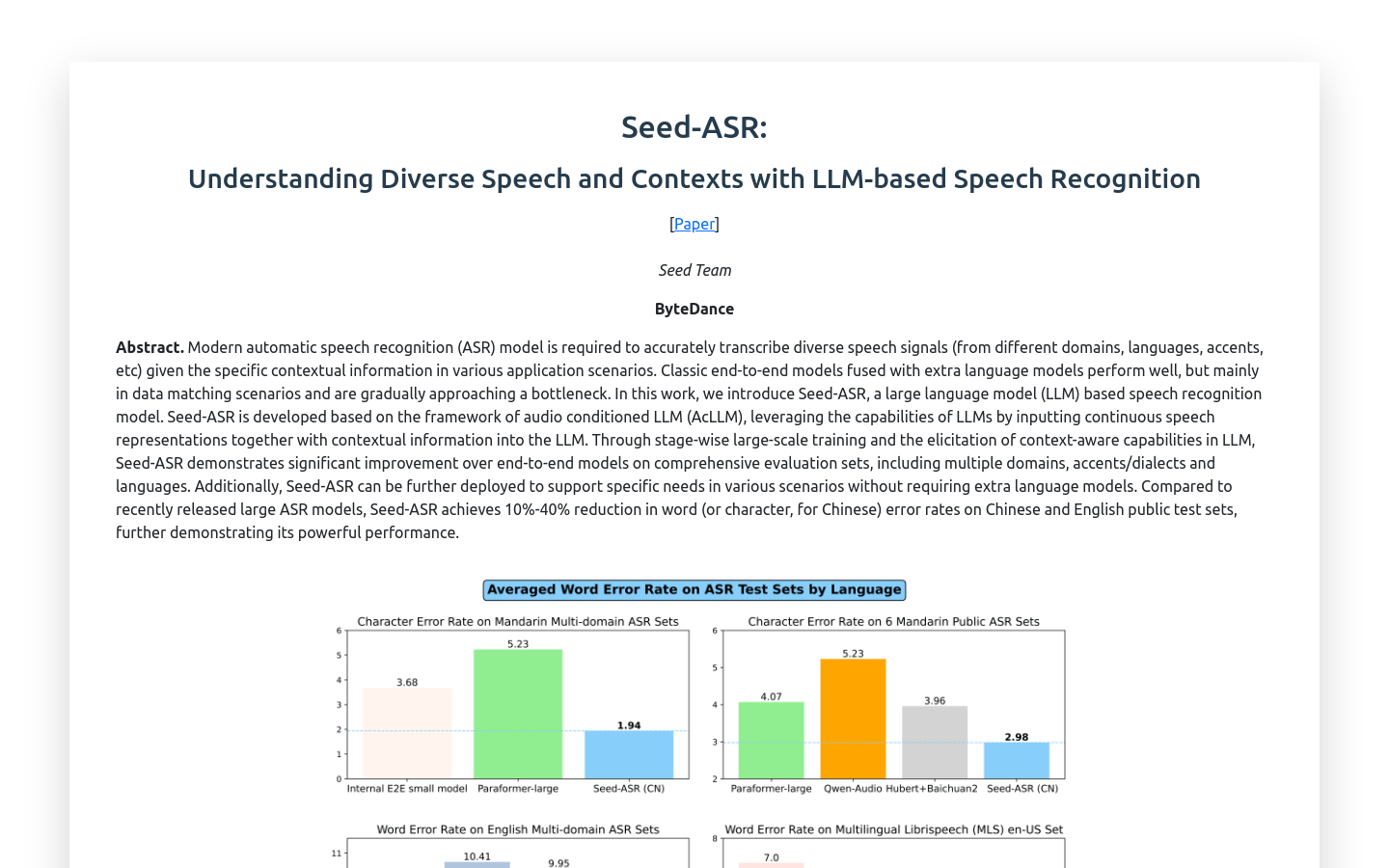

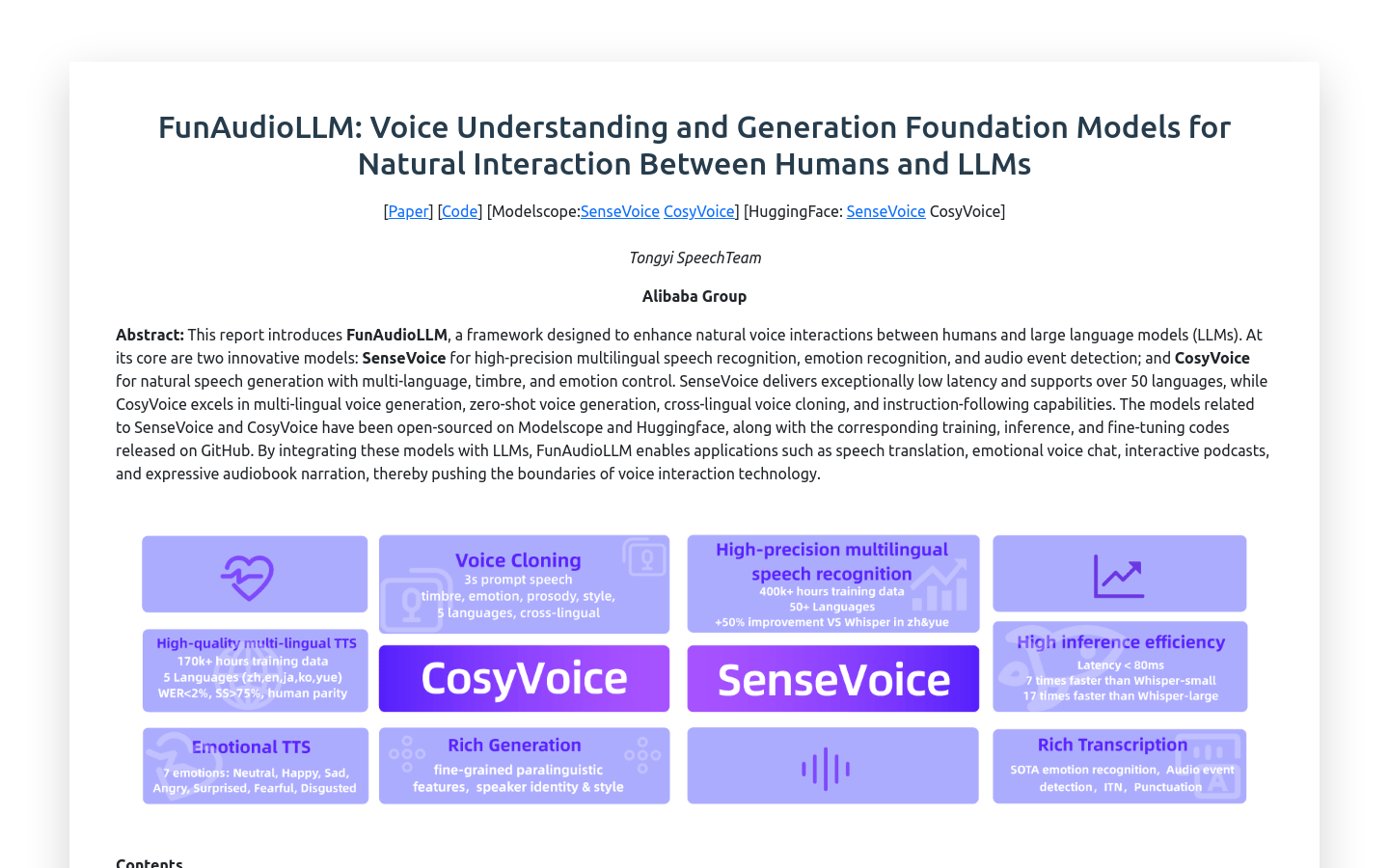

AI speech recognition

Found 7 AI tools

Primary Category: other

Subcategory: AI speech recognition

Related AI Tools

Related Subcategories

Explore other subcategories under the Other category.

AI speech recognition is a popular subcategory of the Other category, with 7 listed AI tools.