
productive forces Category

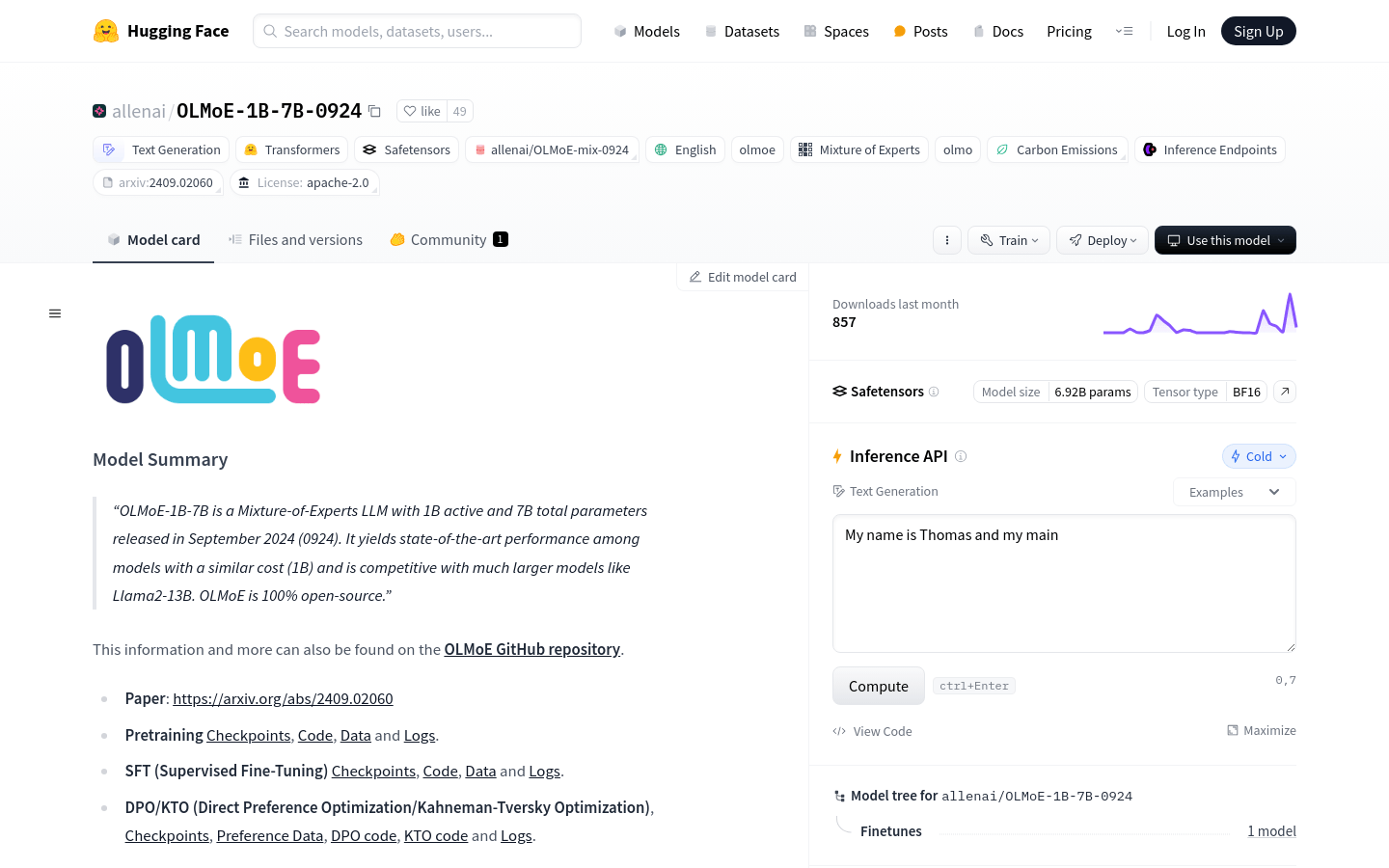

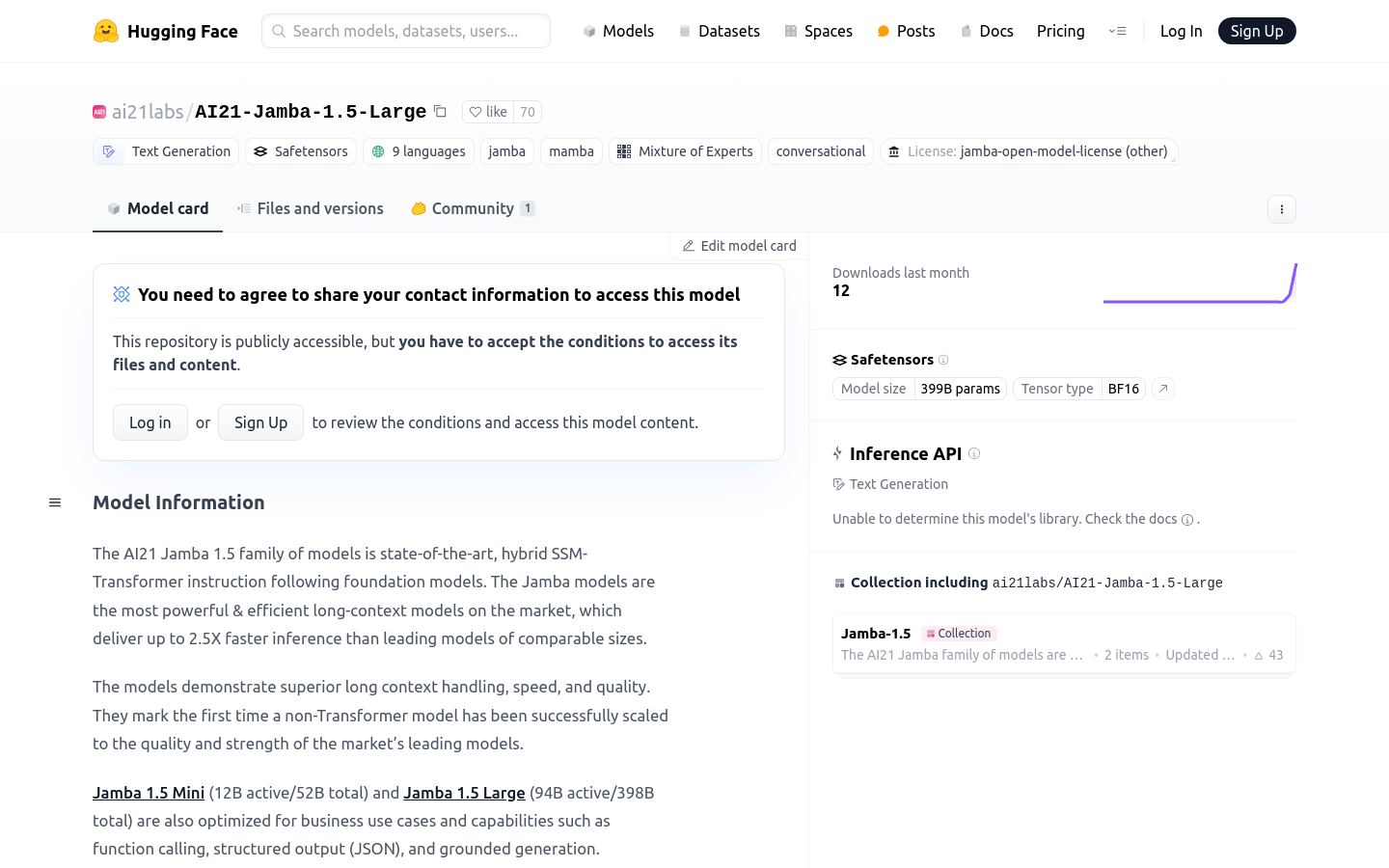

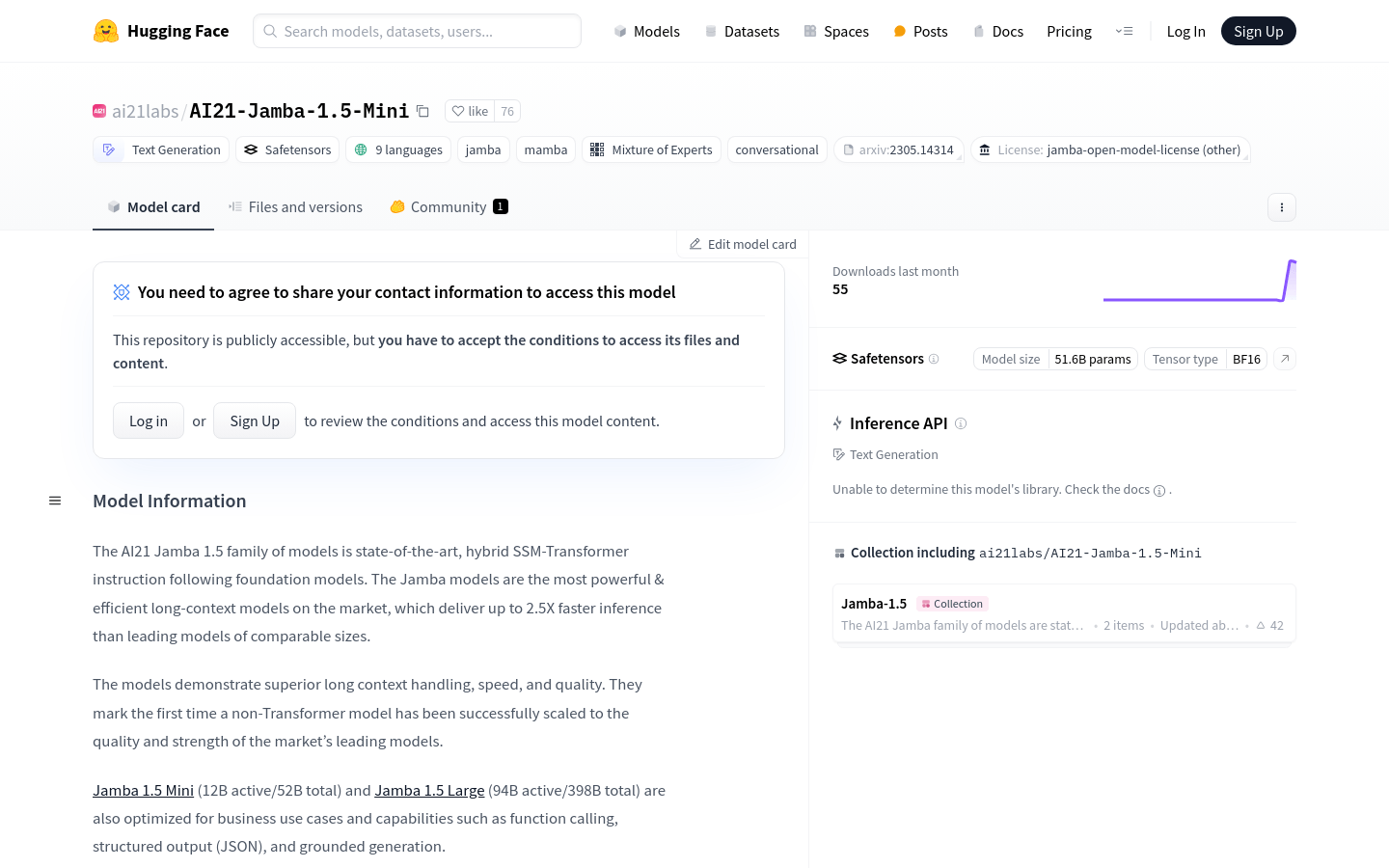

AI model inference training

Found 34 AI tools


Primary Category: productive forces

Subcategory: AI model inference training


Related AI Tools


Related Subcategories

Explore other subcategories under productive forces

Other Categories


Explore More productive forces Tools

AI model inference training is a popular subcategory under productive forces, with 34 quality AI tools.