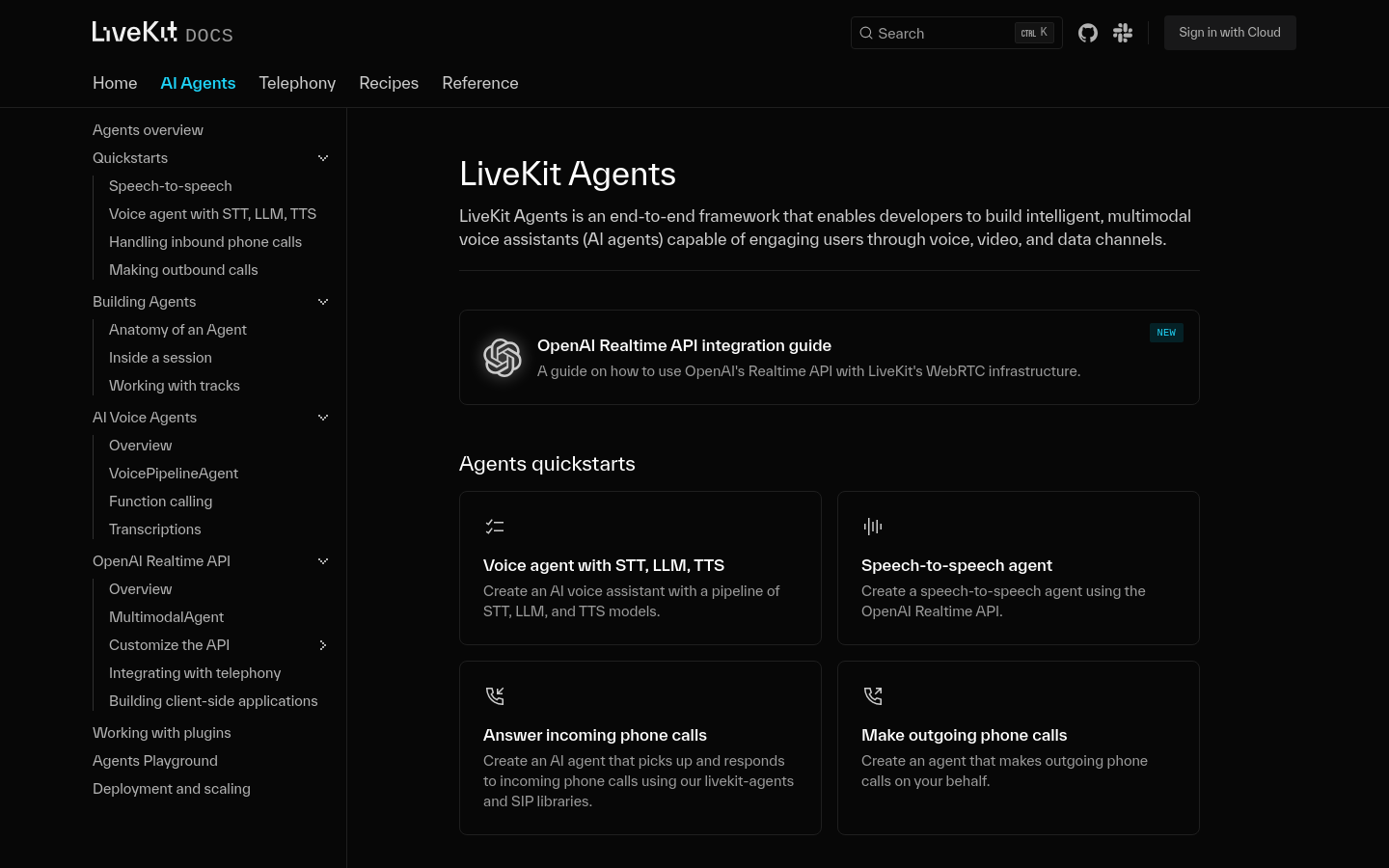

LiveKit Agents

An end-to-end framework for building intelligent multi-modal voice assistants.

Product Details

LiveKit Agents is an end-to-end framework for building intelligent multimodal voice assistants (AI agents) that interact with users over voice, video, and data channels. Its quick-start guide shows how to build a voice assistant that combines OpenAI's Realtime API with LiveKit's WebRTC infrastructure, and it provides pipelines that chain speech-to-text (STT), a large language model (LLM), and text-to-speech (TTS). It also supports voice-to-voice agents, can answer incoming calls, and can place calls on behalf of users.
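The STT → LLM → TTS chain can be illustrated with a minimal Python sketch of one conversational turn. This is not the LiveKit Agents API: `VoicePipeline`, `handle_turn`, and the `fake_stt`, `fake_llm`, and `fake_tts` stubs are hypothetical names used only to show how the three stages hand data to one another. In a real agent each stage would call a speech-recognition service, a language model, and a speech-synthesis service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stage signatures for illustration only (not the LiveKit Agents API).
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[List[Dict[str, str]]], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoicePipeline:
    """Runs one conversational turn through STT -> LLM -> TTS."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    history: List[Dict[str, str]] = field(default_factory=list)

    def handle_turn(self, audio_in: bytes) -> bytes:
        user_text = self.stt(audio_in)  # 1. speech -> text
        self.history.append({"role": "user", "content": user_text})
        reply = self.llm(self.history)  # 2. text -> reply, given the conversation so far
        self.history.append({"role": "assistant", "content": reply})
        return self.tts(reply)  # 3. reply text -> speech


# Stub stages so the sketch runs without any external services.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" bytes are already text


def fake_llm(messages: List[Dict[str, str]]) -> str:
    return "You said: " + messages[-1]["content"]


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(fake_stt, fake_llm, fake_tts)
print(pipeline.handle_turn(b"hello"))  # b'You said: hello'
```

A production voice agent usually streams audio and text through these stages incrementally rather than turn by turn. A speech-to-speech model such as OpenAI's Realtime API can replace all three stages with one.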

Target Users

The target audience is developers, especially those who want to build intelligent voice assistants or add real-time voice and video communication to their applications. LiveKit Agents provides the tools and guidance needed to build and deploy AI agents quickly, saving development time and resources.

Examples

Developers used LiveKit Agents to create an AI voice assistant that can automatically answer customer service calls.

An educational institution used LiveKit Agents to build a voice assistant that can answer student questions in real time.

An enterprise used LiveKit Agents to develop an AI agent that can record and summarize video conferences.

Related Recommendations

Swarm

Swarm is an experimental framework, maintained by the OpenAI Solutions team, for building, orchestrating, and deploying multi-agent systems. It coordinates agents through two abstractions: agents and handoffs. Swarm emphasizes being lightweight, highly controllable, and easy to test. It suits scenarios that involve a large number of independent functions and instructions, and it gives developers full transparency and fine-grained control over context, steps, and tool calls. Because the framework is still experimental, it is not recommended for production use.
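The agent-and-handoff idea can be illustrated in plain Python. This is a hypothetical sketch, not Swarm's API: `Agent`, `run`, and the keyword-matching handoff rules are invented for illustration. In Swarm, handoffs are driven by the model's function calls rather than by keyword matches.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Agent:
    """Hypothetical agent: a name, instructions, and handoff rules."""
    name: str
    instructions: str  # would be the system prompt in an LLM-backed agent
    # Each rule inspects the message and returns another Agent to hand off to, or None.
    handoffs: List[Callable[[str], Optional["Agent"]]] = field(default_factory=list)


def run(start: Agent, message: str, max_hops: int = 5) -> List[str]:
    """Follow handoffs until no rule fires; return the names of agents visited."""
    agent, visited = start, [start.name]
    for _ in range(max_hops):
        next_agent = None
        for rule in agent.handoffs:
            next_agent = rule(message)
            if next_agent is not None:
                break
        if next_agent is None:
            break  # the current agent keeps the conversation
        agent = next_agent
        visited.append(agent.name)
    return visited


refunds = Agent("Refunds", "Handle refund requests.")
sales = Agent("Sales", "Answer pricing questions.")
triage = Agent(
    "Triage",
    "Route the user to the right specialist.",
    handoffs=[
        lambda msg: refunds if "refund" in msg.lower() else None,
        lambda msg: sales if "price" in msg.lower() else None,
    ],
)

print(run(triage, "I want a refund"))  # ['Triage', 'Refunds']
```

Keeping handoffs as ordinary data on each agent is what makes this style easy to test: the routing can be checked without calling a model.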

curiosity

curiosity is a chatbot project built on the ReAct framework that explores how to create a Perplexity-like user experience with the LangGraph and FastHTML stack. At its core is a simple ReAct agent that uses Tavily search to augment its text generation. It supports three LLMs (large language models): OpenAI's gpt-4o-mini, Groq's llama3-groq-8b-8192-tool-use-preview, and Ollama's llama3.1. The front end is built with FastHTML, which delivers a fast user experience overall, although debugging can be challenging.
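The ReAct loop at the heart of such an agent alternates between model steps and tool calls, feeding each tool result back as an observation. The sketch below is a minimal, hypothetical version and is not curiosity's code. It does not use LangGraph or Tavily: `react_loop`, `scripted_model`, and `fake_search` are invented stand-ins, and the model's free-form "thought" text is omitted for brevity.

```python
from typing import Callable, Dict, List, Tuple

# A model step is either ("act", tool_name, tool_input) or ("answer", text, "").
Step = Tuple[str, str, str]


def react_loop(
    question: str,
    model: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate model steps and tool calls until the model returns an answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        kind, first, second = model(transcript)
        if kind == "answer":
            return first
        # The model chose an action: run the tool and append the observation.
        observation = tools[first](second)
        transcript.append(f"Action: {first}[{second}]")
        transcript.append(f"Observation: {observation}")
    return "No answer within step limit."


def fake_search(query: str) -> str:
    """Stand-in for a web search tool such as Tavily; always returns canned text."""
    return "LiveKit Agents is built on WebRTC."


def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for an LLM: search once, then answer from the last observation."""
    if not any(line.startswith("Observation: ") for line in transcript):
        return ("act", "search", "LiveKit Agents transport")
    return ("answer", transcript[-1][len("Observation: "):], "")


answer = react_loop(
    "What does LiveKit Agents use for transport?",
    scripted_model,
    {"search": fake_search},
)
print(answer)  # LiveKit Agents is built on WebRTC.
```

The `max_steps` cap keeps a model that never commits to an answer from looping indefinitely.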

RD-Agent

RD-Agent is an automated research and development tool from Microsoft Research Asia. Built on the capabilities of large language models, it offers a new model for automating AI-driven R&D processes: by integrating data-driven R&D systems, it uses AI to automate innovation and development. Beyond improving R&D efficiency, its intelligent decision-making and feedback mechanisms are intended to open up new possibilities for cross-domain innovation and knowledge transfer.

sentient

Sentient is a framework/SDK that lets developers build agents that control a browser in three lines of code. It uses current AI techniques to carry out complex web interactions and automation tasks through simple code. Sentient supports models from a variety of providers, including OpenAI and Together AI, and can be customized to users' specific needs.

muAgent

muAgent is an agent framework driven by a knowledge-graph engine that supports multi-agent orchestration and collaboration. It combines LLMs with an EKG (Eventic Knowledge Graph, which carries industry knowledge) and capabilities such as FunctionCall and CodeInterpreter, so that complex SOP (standard operating procedure) workflows can be automated through drag-and-drop on a canvas and lightweight text authoring. muAgent is compatible with various agent frameworks on the market and offers core capabilities such as complex reasoning, online collaboration, human interaction, and ready-to-use knowledge. It has been validated in multiple complex DevOps scenarios at Ant Group.

GenAgent

GenAgent is a framework for building collaborative AI systems. It creates workflows and converts them into code so that large language model (LLM) agents can understand them more easily. GenAgent can learn from human-designed workflows and create new ones, and the generated workflows can be interpreted as collaborative systems that complete complex tasks.

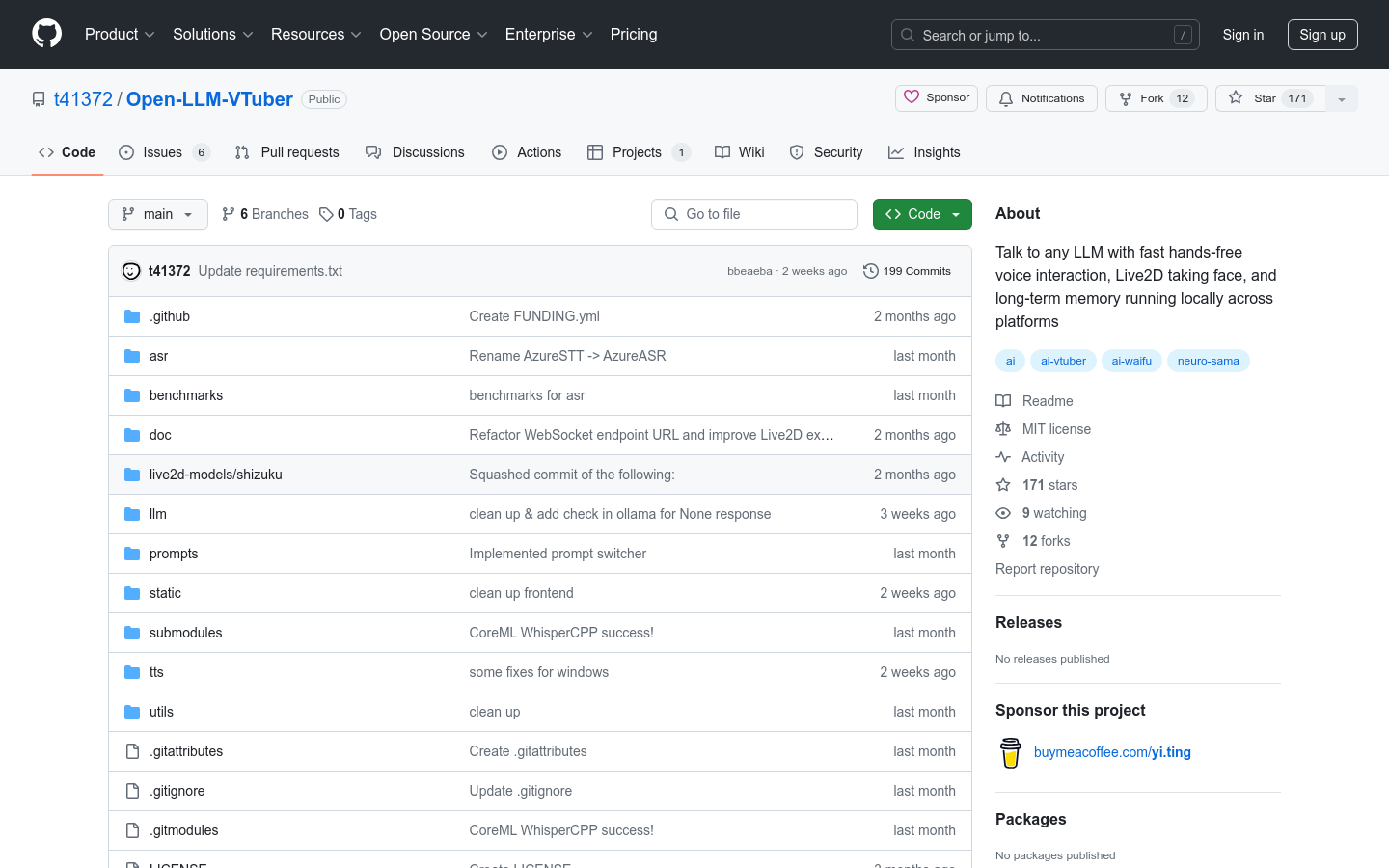

Open-LLM-VTuber

Open-LLM-VTuber is an open-source project for talking to large language models (LLMs) by voice, with real-time Live2D facial capture and cross-platform long-term memory. It runs on macOS, Windows, and Linux, and lets users choose among different speech recognition and speech synthesis backends as well as custom long-term memory solutions. It is particularly suited to developers and enthusiasts who want natural-language conversations with AI across different platforms.

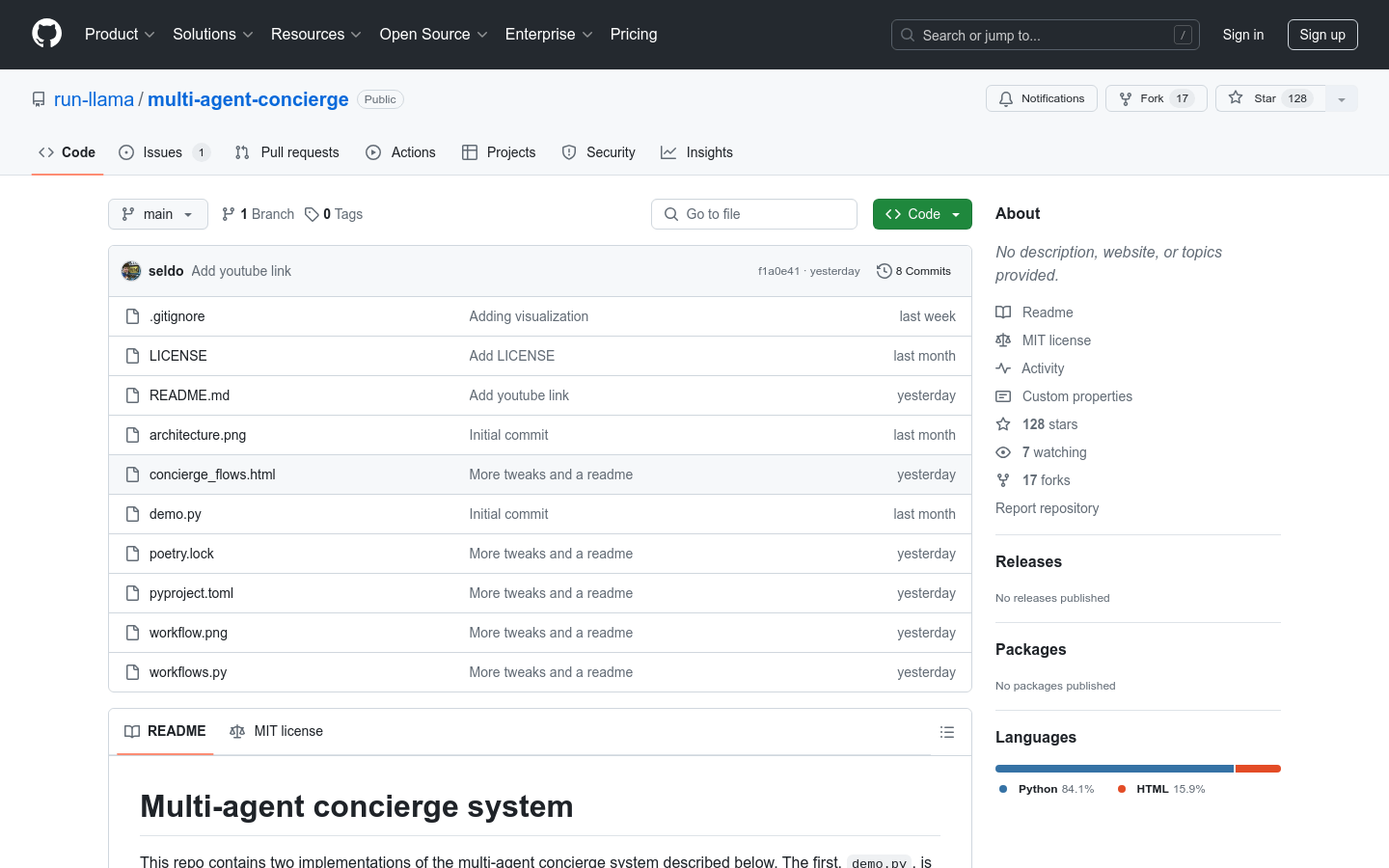

multi-agent-concierge

multi-agent-concierge is a multi-agent system in which several specialized agents complete complex tasks while a "concierge" agent directs users to the correct agent. Systems like this are designed to handle many interdependent tasks that together use hundreds of tools. The project demonstrates how to create implicit "chains" between agents through natural-language instructions, how to manage those chains with a "continuation" agent, and how to use global state to track the user and their current status.
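The concierge, continuation, and global-state roles can be sketched in a few lines of Python. This is a hypothetical, rule-based illustration and not the project's code. The project drives these roles with LLM agents and natural-language instructions. Here, `GlobalState`, `SPECIALISTS`, `REQUIRES`, `concierge`, and `continuation` are invented names, and a simple dependency table stands in for the model's routing decisions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List, Set


@dataclass
class GlobalState:
    """Shared state every agent can read and update (hypothetical schema)."""
    user: str
    completed: Set[str] = field(default_factory=set)
    pending: Deque[str] = field(default_factory=deque)
    log: List[str] = field(default_factory=list)


# Hypothetical specialist agents: each performs one task and records it.
SPECIALISTS: Dict[str, Callable[[GlobalState], None]] = {
    "authenticate": lambda s: s.log.append(f"authenticate: verified {s.user}"),
    "transfer": lambda s: s.log.append(f"transfer: completed for {s.user}"),
}

# Hypothetical dependency table: a transfer requires authentication first.
REQUIRES: Dict[str, str] = {"transfer": "authenticate"}


def concierge(state: GlobalState, request: str) -> None:
    """Route a request: queue any unmet prerequisite, then the task itself."""
    prereq = REQUIRES.get(request)
    if prereq is not None and prereq not in state.completed:
        state.pending.append(prereq)
    state.pending.append(request)


def continuation(state: GlobalState) -> None:
    """Run queued tasks in order, forming an implicit chain between agents."""
    while state.pending:
        task = state.pending.popleft()
        SPECIALISTS[task](state)
        state.completed.add(task)


state = GlobalState(user="alice")
concierge(state, "transfer")
continuation(state)
print(state.log)  # ['authenticate: verified alice', 'transfer: completed for alice']
```

A second `transfer` request would skip authentication, because `completed` in the shared state already records it. That is the role global state plays in this sketch.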

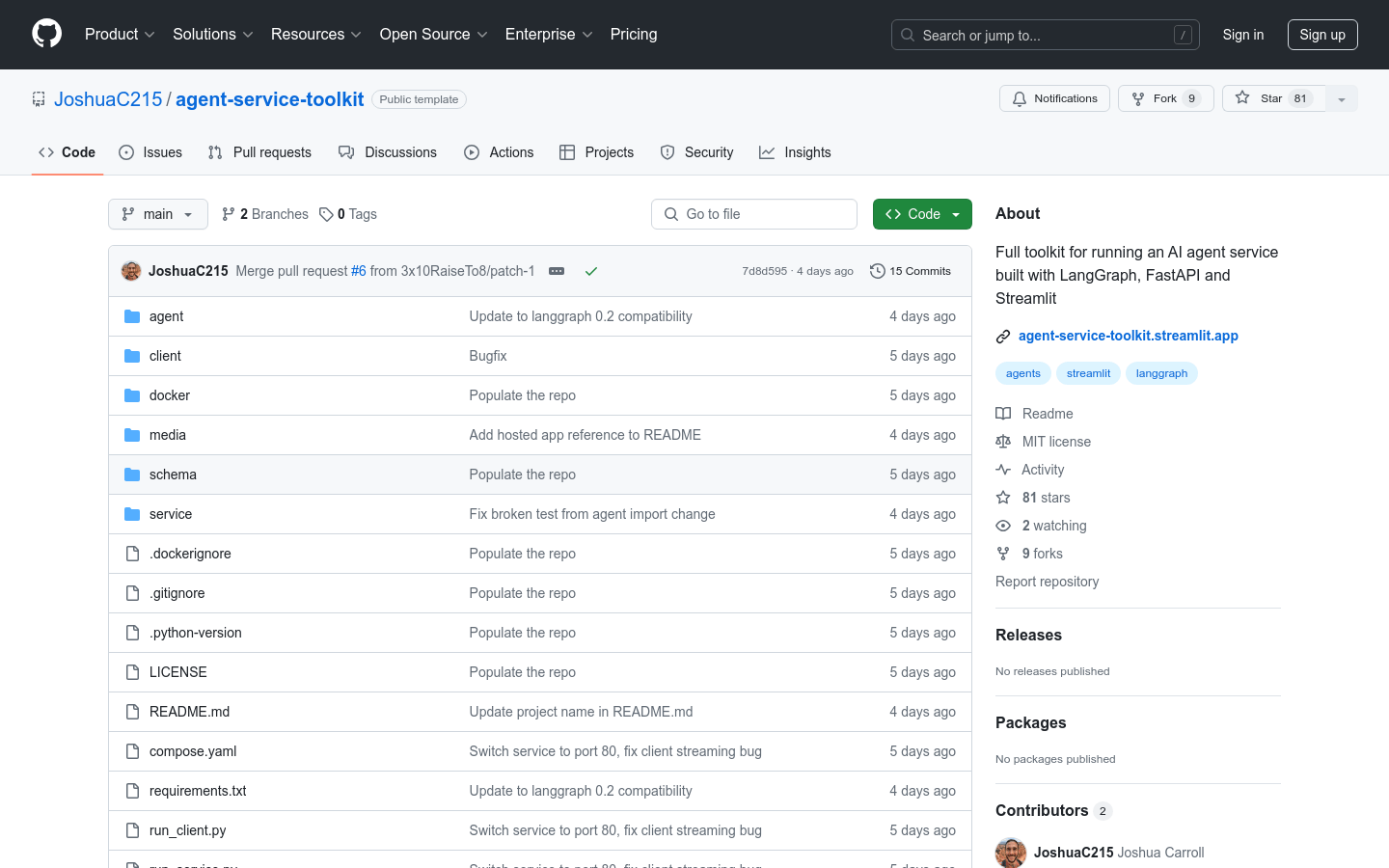

agent-service-toolkit

agent-service-toolkit is a complete toolkit for running LangGraph-based AI agent services. It includes a LangGraph agent, a FastAPI service, a client, and a Streamlit app, providing a full setup from agent definition to user interface. It builds on the LangGraph framework's high degree of control and rich ecosystem to support advanced features such as concurrent execution, cycles in the graph, and streaming results.

AgentK

AgentK is a self-evolving, modular, autonomous-agent AGI (artificial general intelligence) system made up of cooperating agents that can build new agents as needed to complete users' tasks. It is built on the LangGraph and LangChain frameworks, can test and repair itself, and is designed as a minimal set of agents and tools that can direct itself and grow its own intelligence.

avp_teleoperate

avp_teleoperate is an open-source project for teleoperating the Unitree H1_2 humanoid robot. It uses Apple Vision Pro to let users control the robot from a virtual reality environment. The project has been tested on Ubuntu 20.04 and Ubuntu 22.04 and includes detailed installation and configuration guides. Its main advantages are an immersive teleoperation experience and support for testing in a simulated environment, offering a new approach to robot teleoperation.

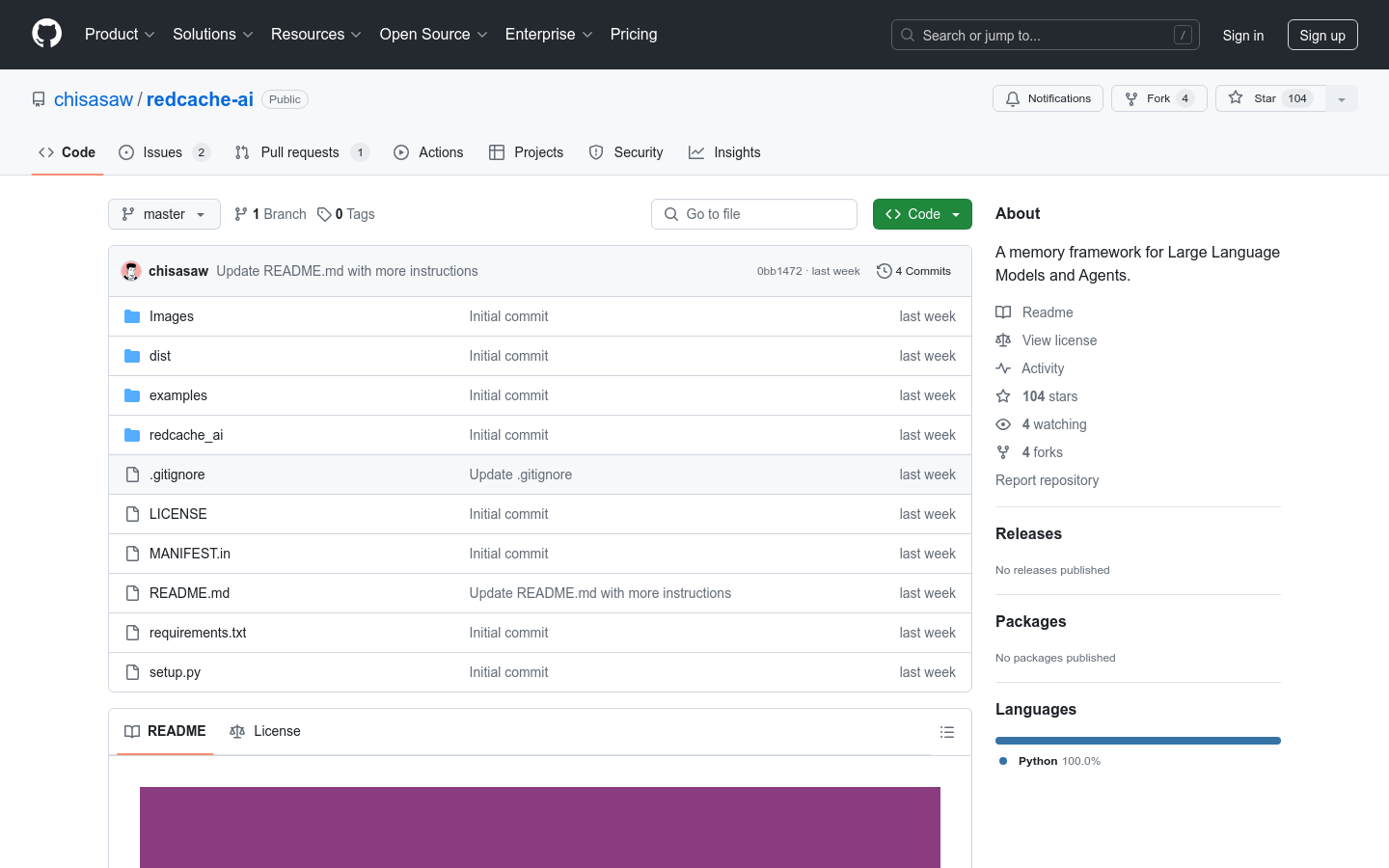

redcache-ai

RedCache-AI is a dynamic memory framework for large language models and agents that lets developers build a wide range of applications, from AI-driven dating apps to medical diagnosis platforms. It addresses shortcomings of existing solutions, which tend to be expensive, closed-source, or lacking broad support for external dependencies.

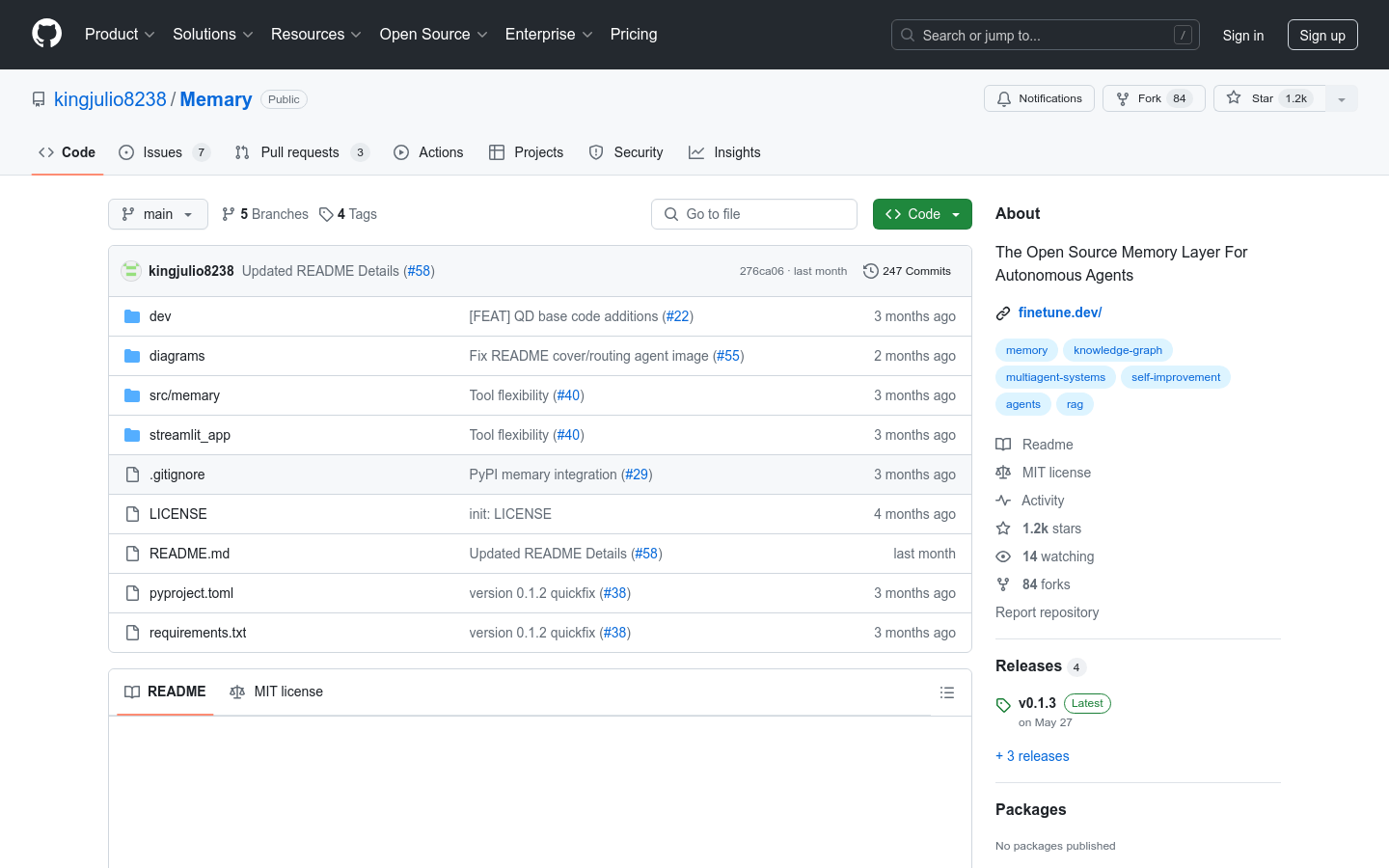

Memary

Memary is an open-source memory layer for autonomous agents. It improves an agent's reasoning and learning by imitating human memory. It stores knowledge in a Neo4j graph database and combines LlamaIndex and Perplexity models to improve querying over the knowledge graph. Memary's main features include automatic memory generation, memory modules, system improvement, and retrospective memory. It is designed to integrate with existing agents with minimal developer effort, and its dashboard visualizes memory data for analysis and system improvement.

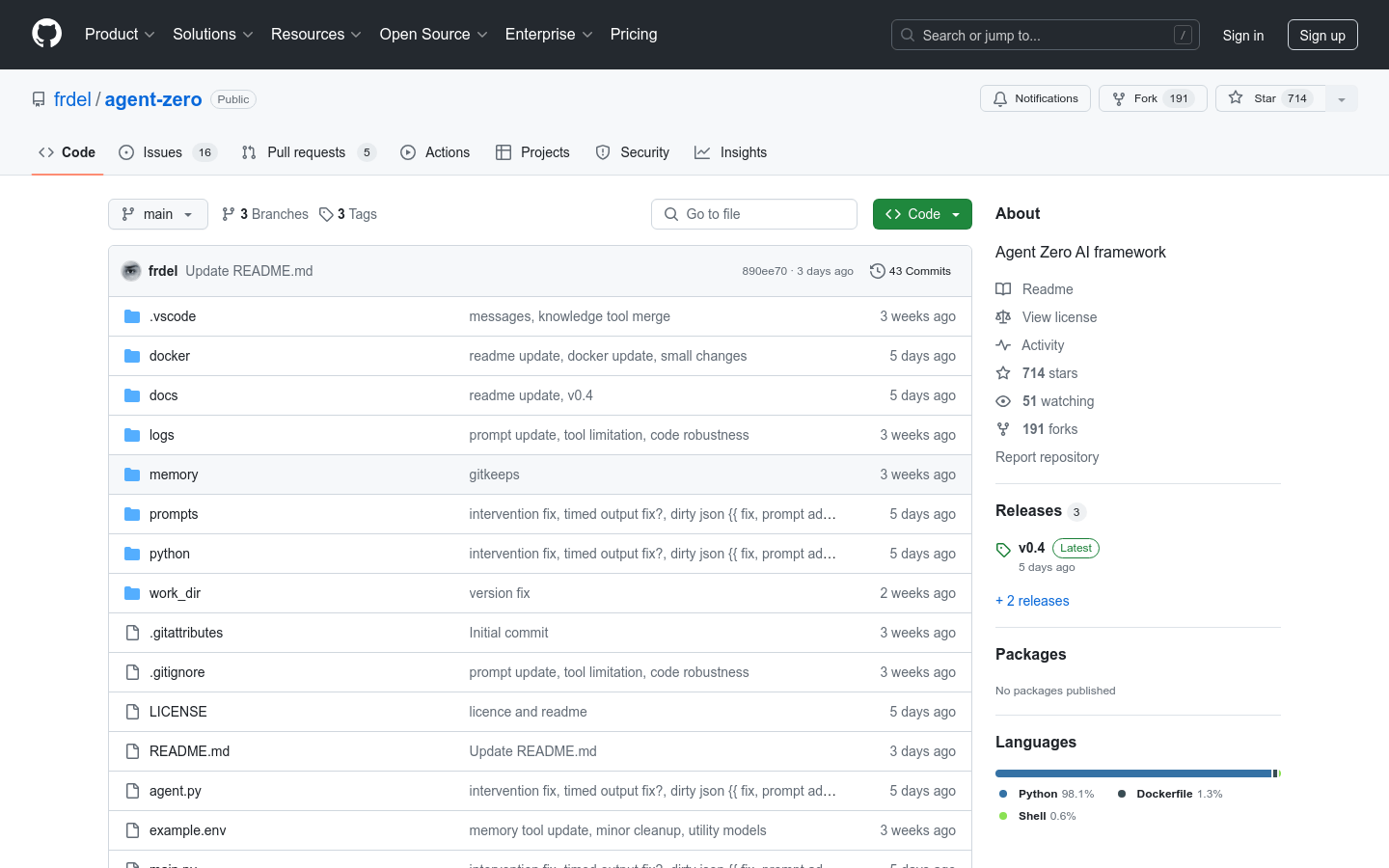

Agent Zero

Agent Zero is a highly transparent, readable, understandable, customizable, and interactive personal AI framework. It is not pre-programmed for specific tasks. Instead, it is designed as a general-purpose personal assistant that can execute commands and code, cooperate with other agent instances, and complete tasks to the best of its ability. Its persistent memory stores previous solutions, code, facts, instructions, and more, so it can solve future tasks faster and more reliably. Agent Zero uses the operating system itself as a tool and has no pre-programmed single-purpose tools; it writes its own code and uses the terminal to create and use tools as needed.

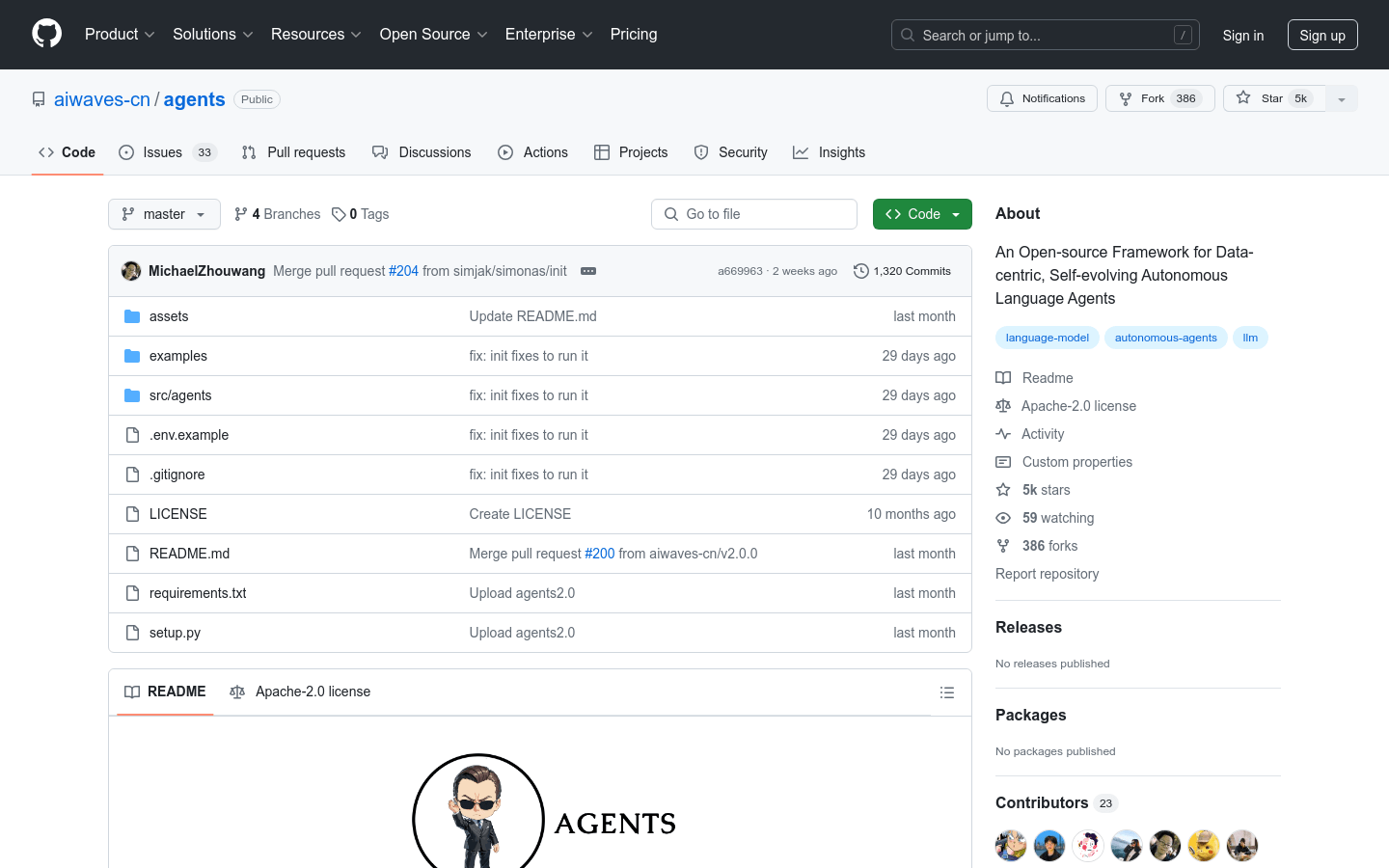

Agents 2.0

aiwaves-cn/agents is an open-source framework focused on data-driven, adaptive language agents. It provides a systematic framework for training language agents through symbolic learning, inspired by the connectionist learning process used to train neural networks. It implements backpropagation and gradient-based weight updates using language-based losses, gradients, and weights, which supports the optimization of multi-agent systems.

llama-agentic-system

llama-agentic-system is a system-level agent component built on the Llama 3.1 model. It can perform multi-step reasoning and use built-in tools such as search engines and code interpreters. The system also emphasizes safety evaluation: Llama Guard filters inputs and outputs so that safety requirements are met across different usage scenarios.