🖼️

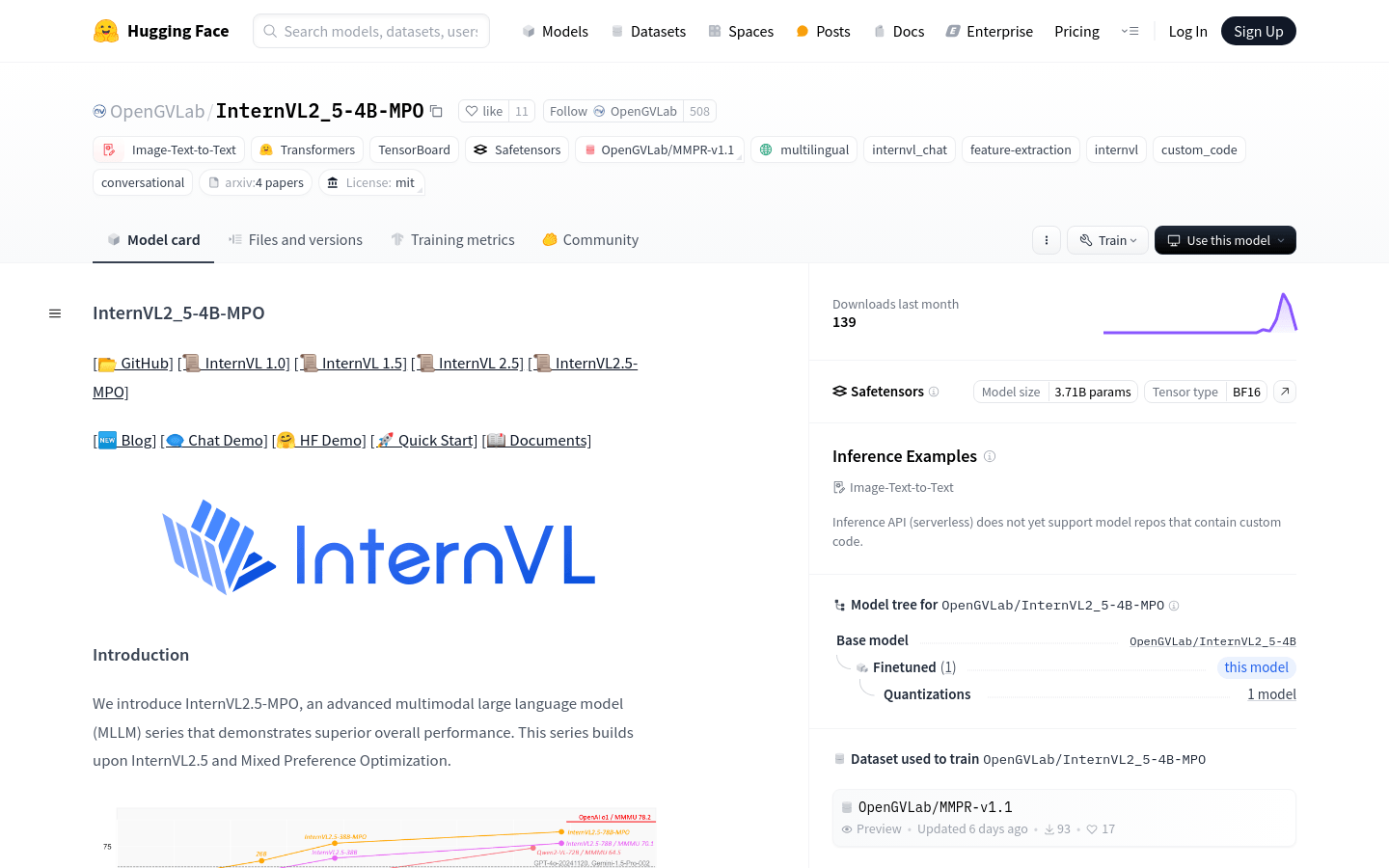

image Category

AI model

Found 100 AI tools


Related AI Tools


Related Subcategories

Explore other subcategories under image

Other Categories


Explore More image Tools

AI model (Hot): a popular subcategory of image, with 352 quality AI tools

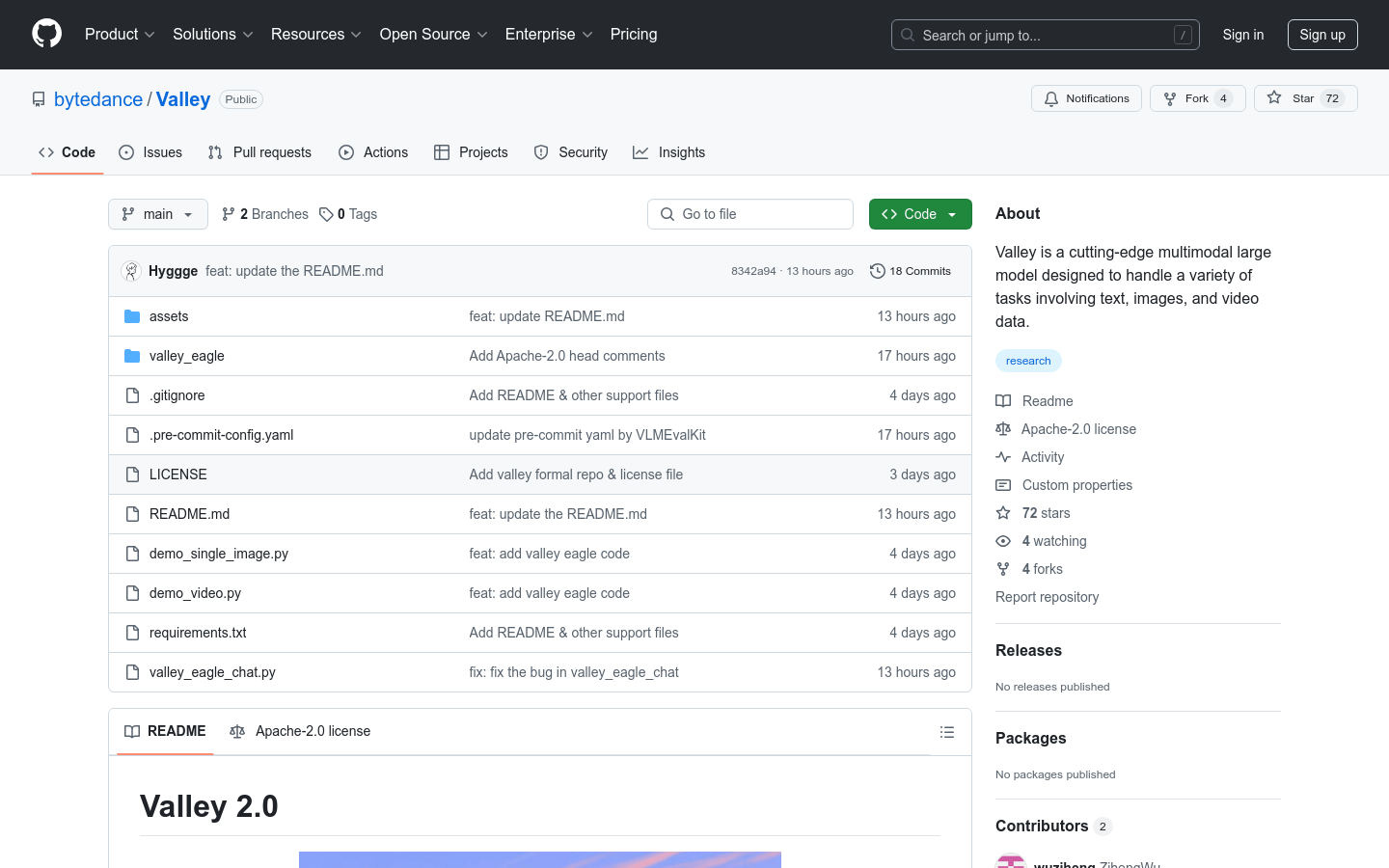

![FLUX.1 Krea [dev]](https://pic.chinaz.com/ai/2025/08/01/25080110475283667344.jpg)