🎬

video Category

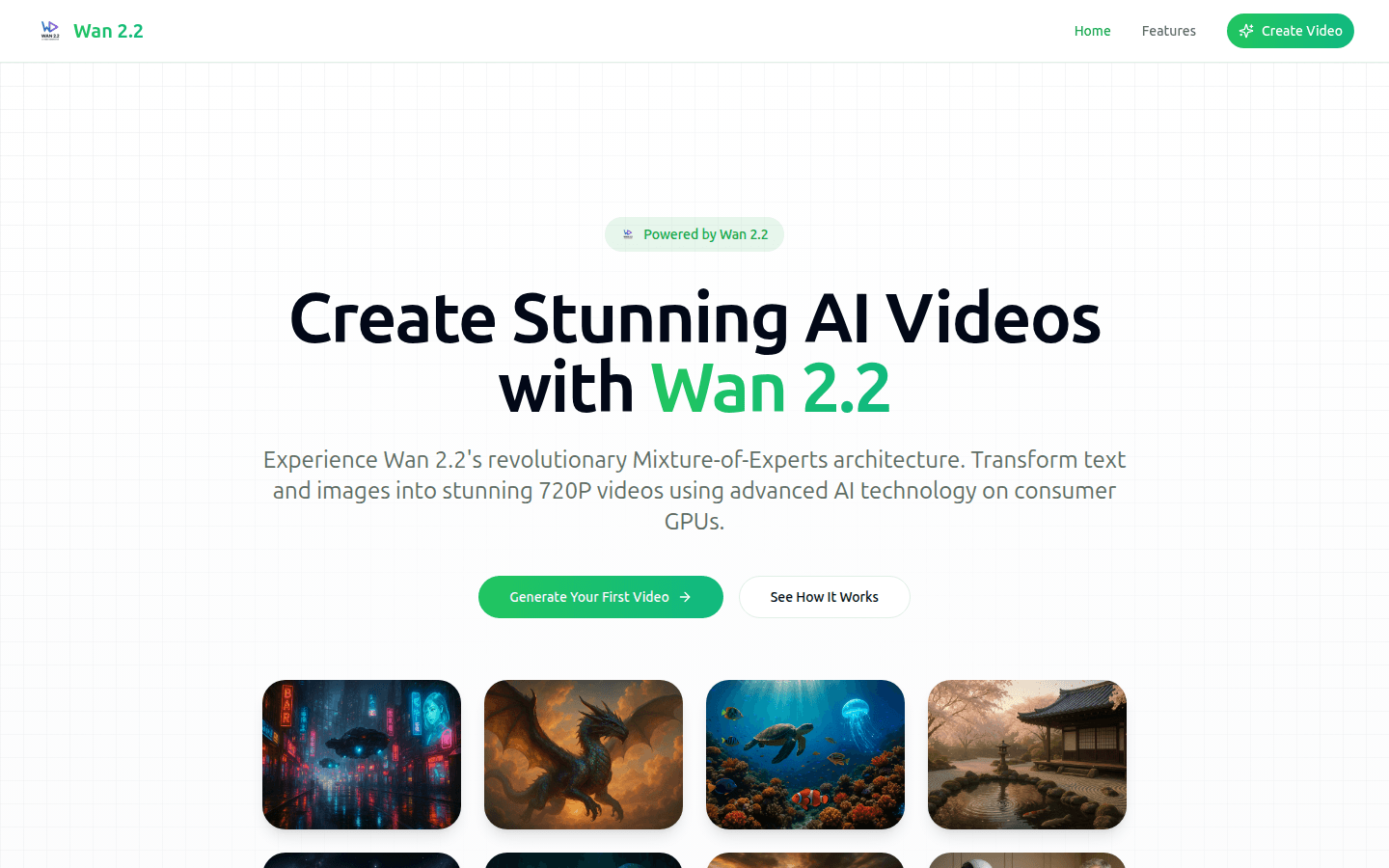

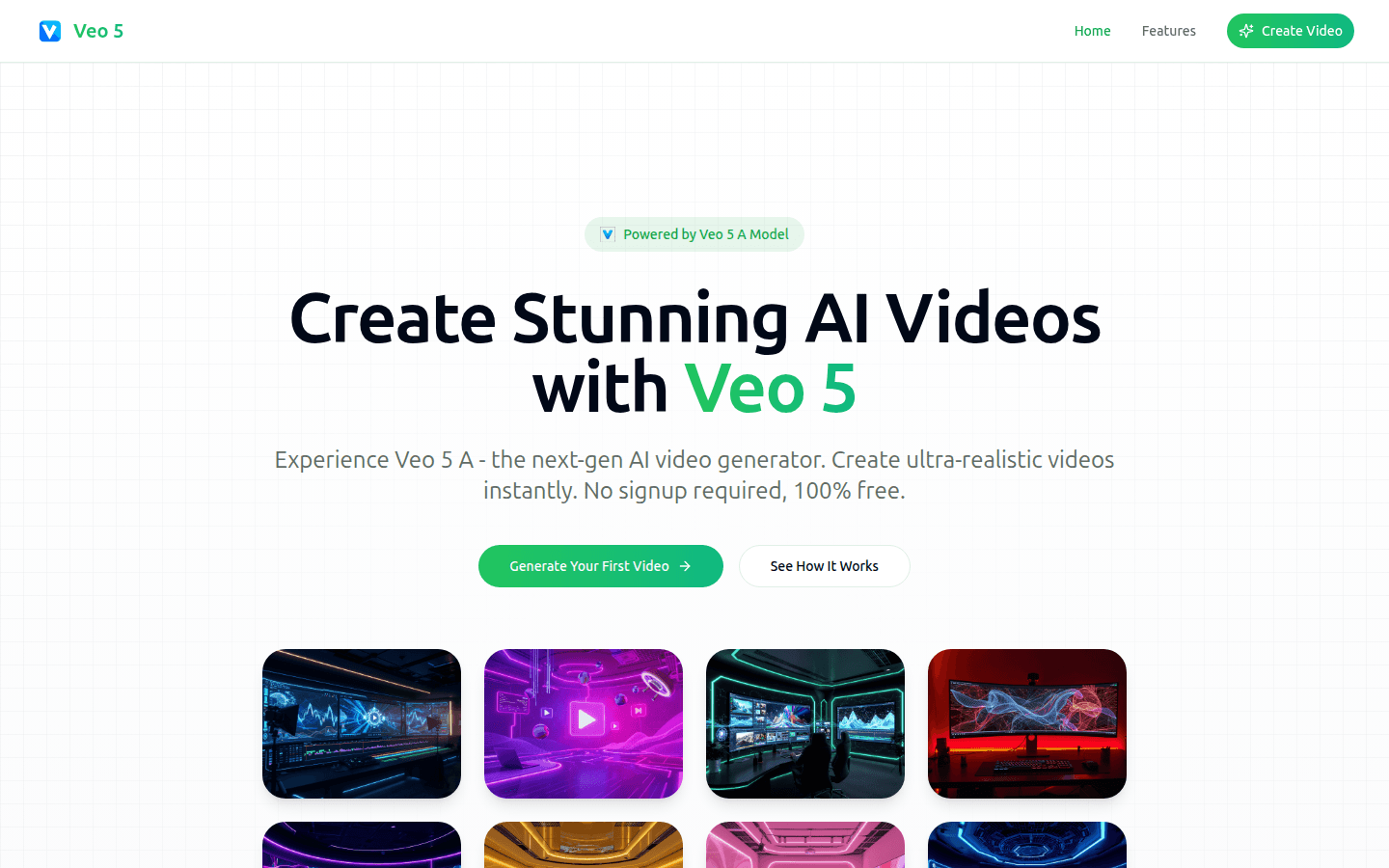

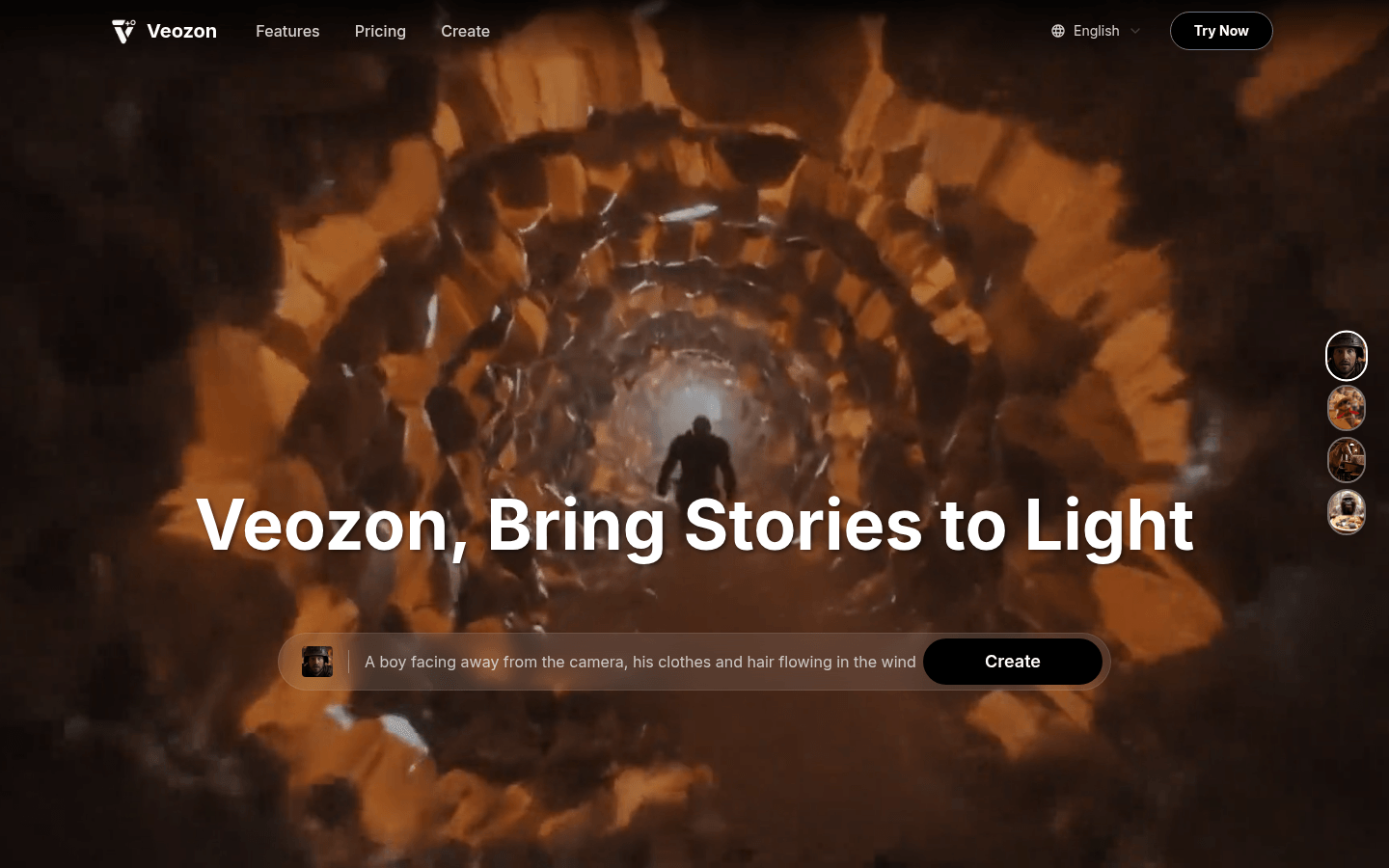

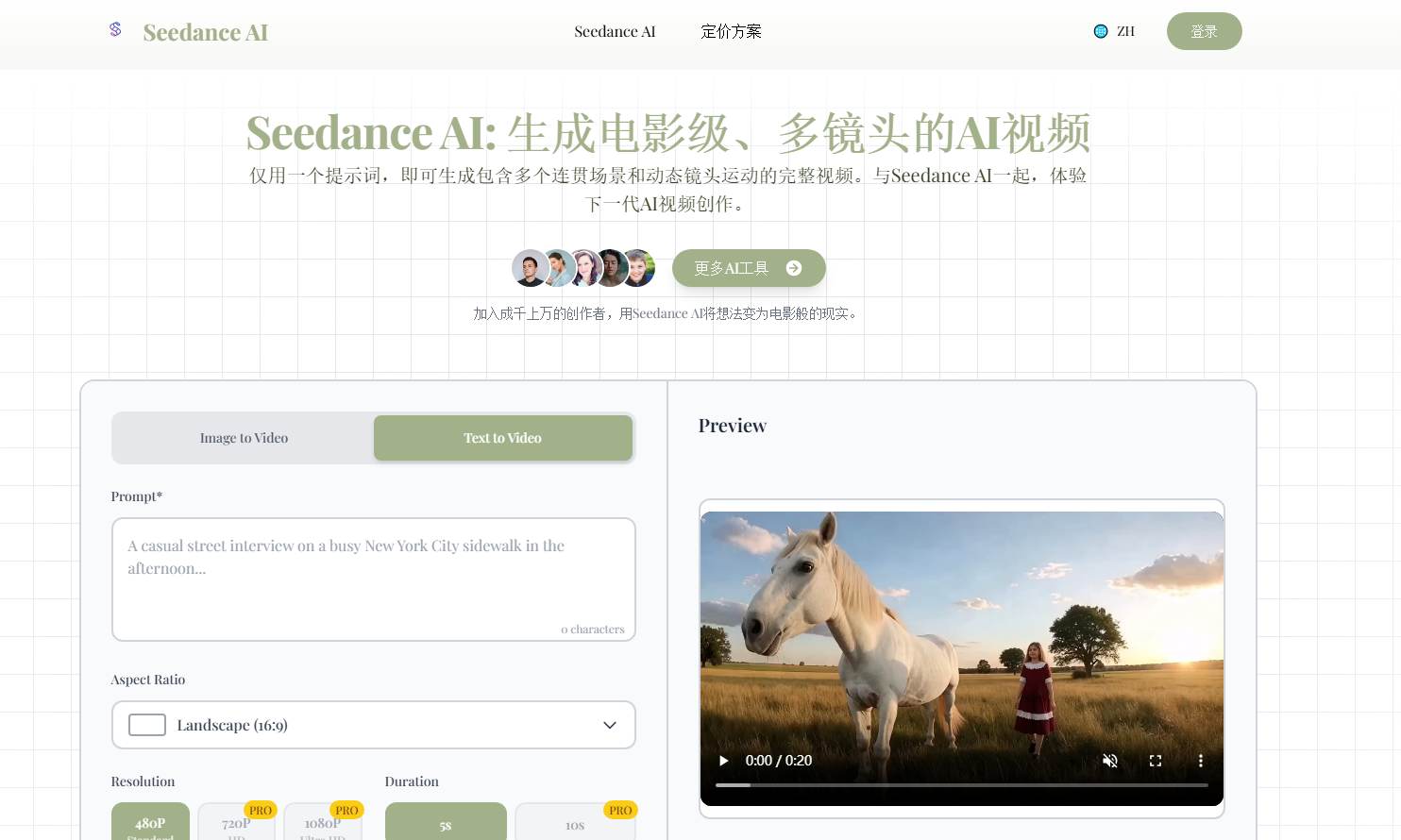

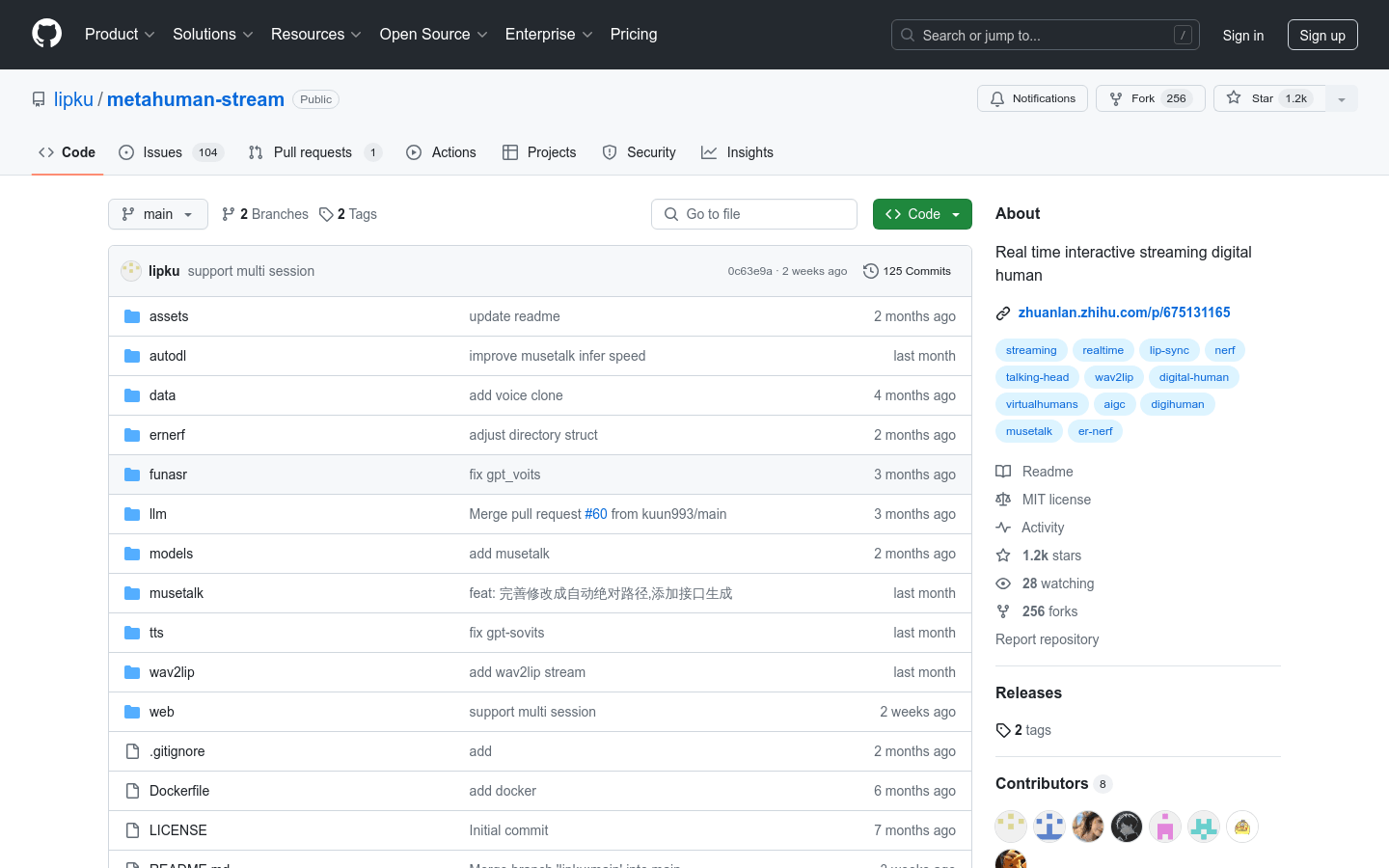

AI model

Found 100 AI tools

Primary Category: video

Subcategory: AI model

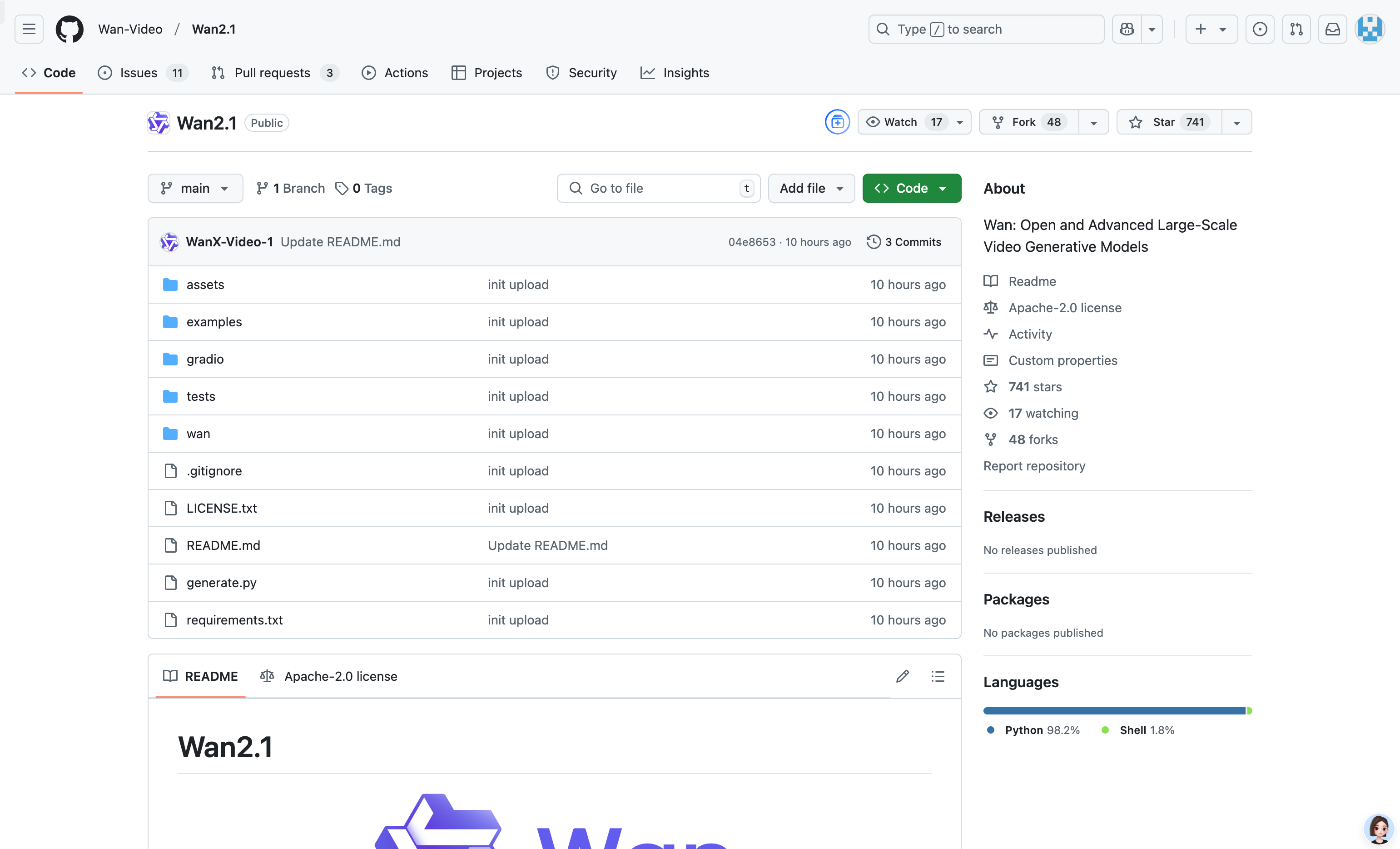

Related AI Tools

Related Subcategories

Explore other subcategories under video

Other Categories

Explore More video Tools

AI model (Hot) is a popular video subcategory with 130 quality AI tools.