🤖

AI Category

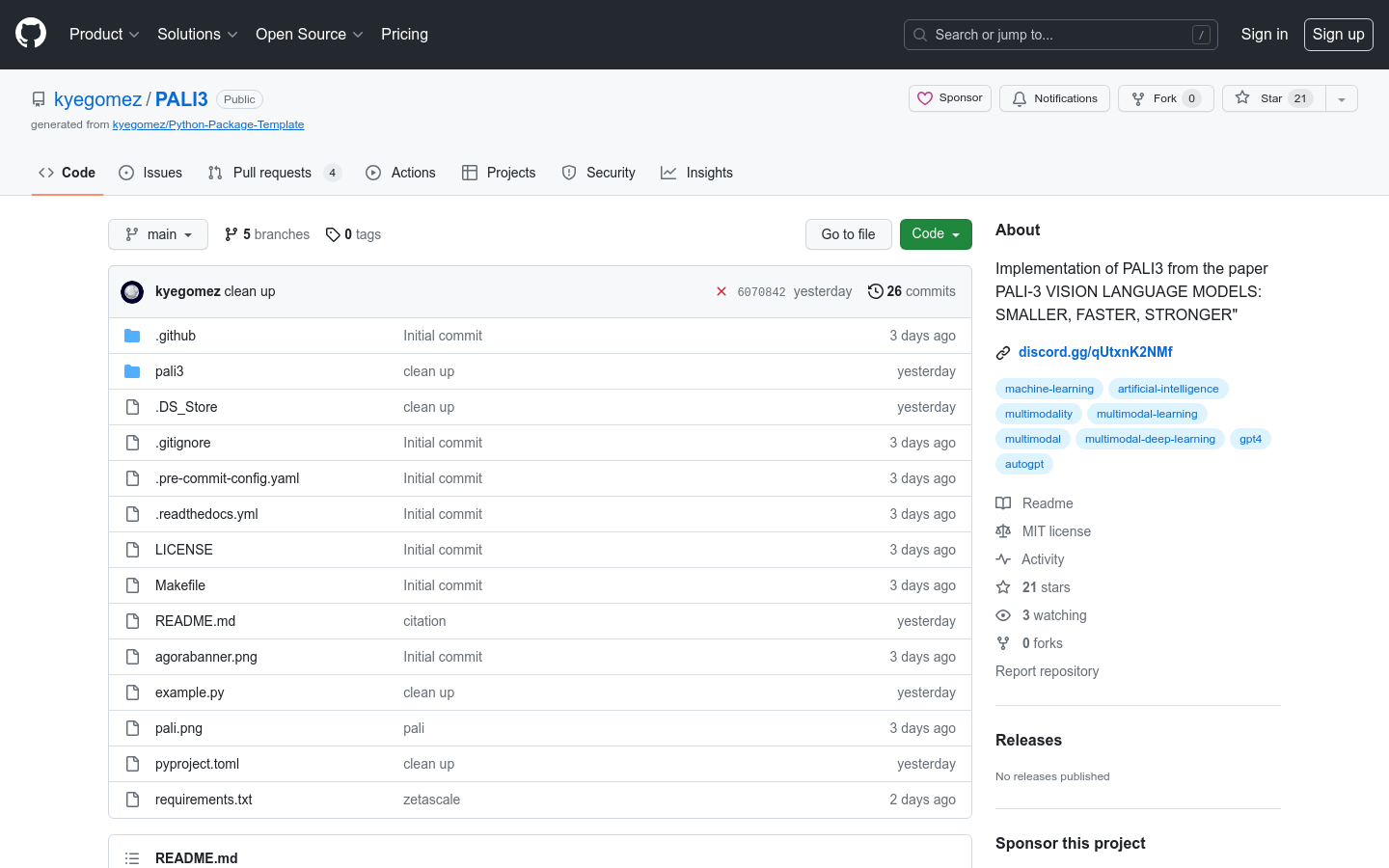

AI model

Found 36 AI tools

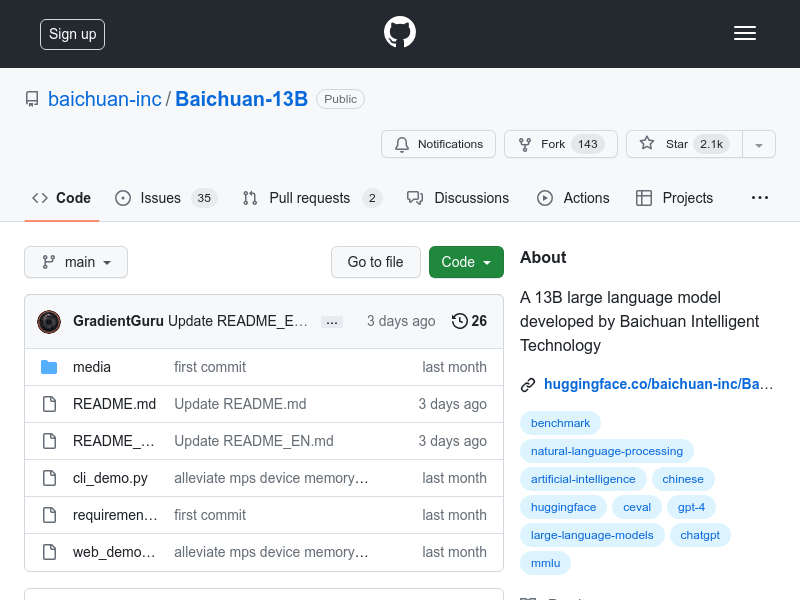

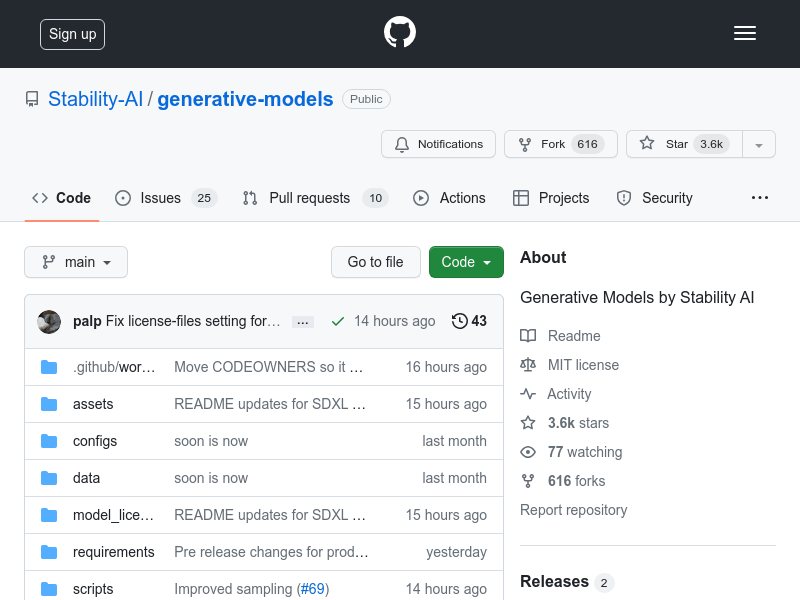

Primary Category: AI

Subcategory: AI model

Related AI Tools

Related Subcategories

Explore other subcategories under AI

Other Categories

Explore More AI Tools

AI model is a popular subcategory of AI, with 36 quality AI tools.