💻

programming Category

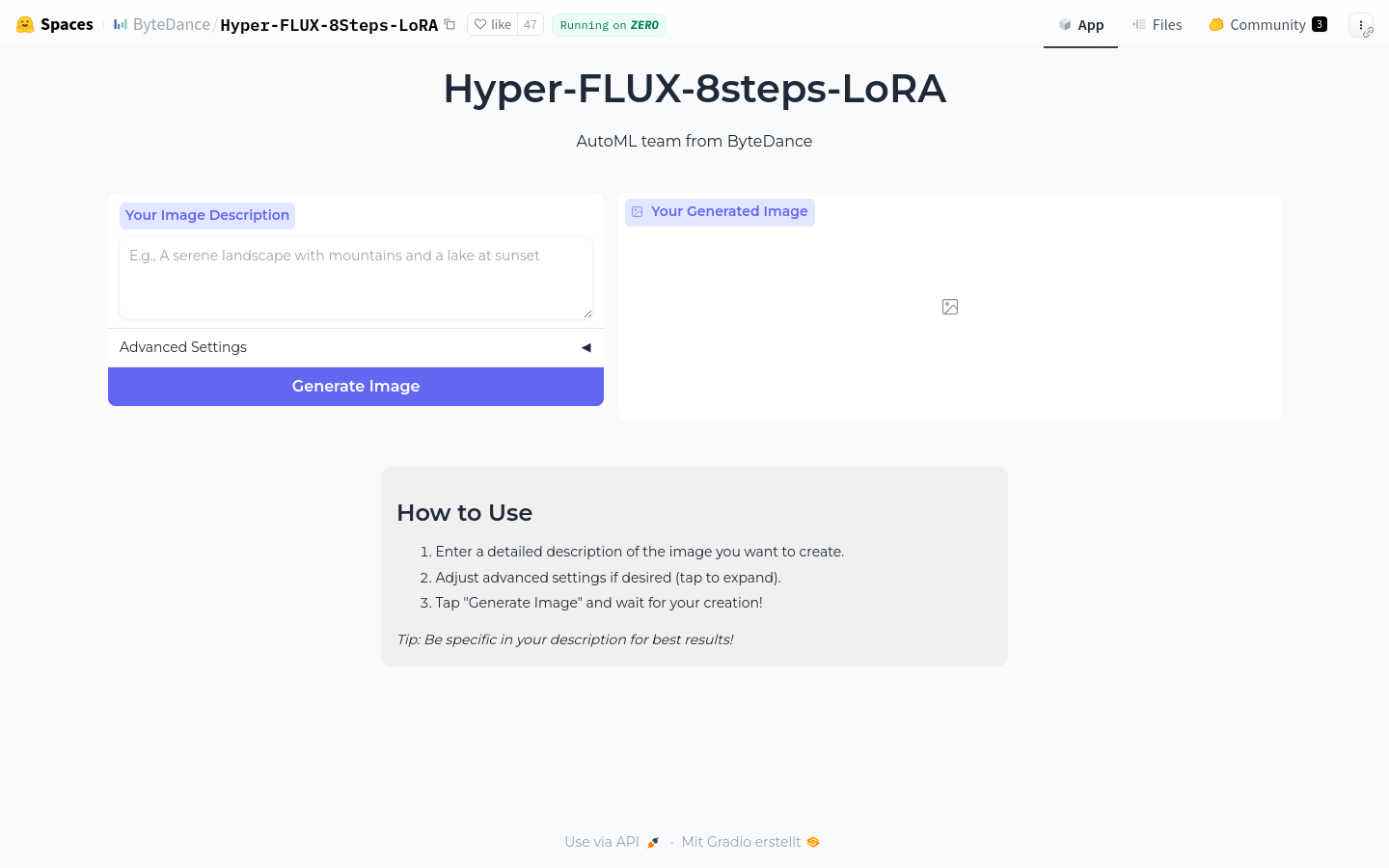

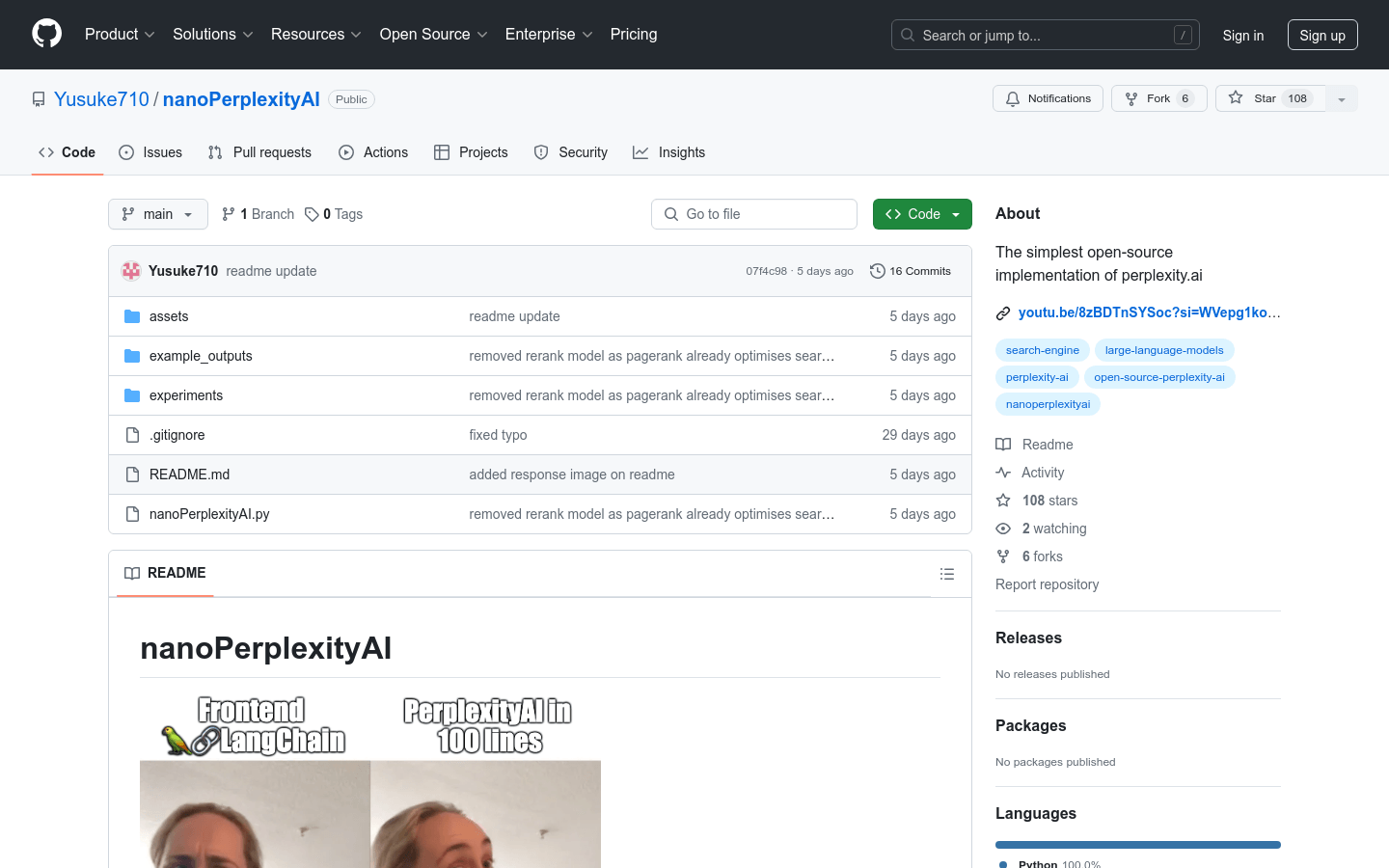

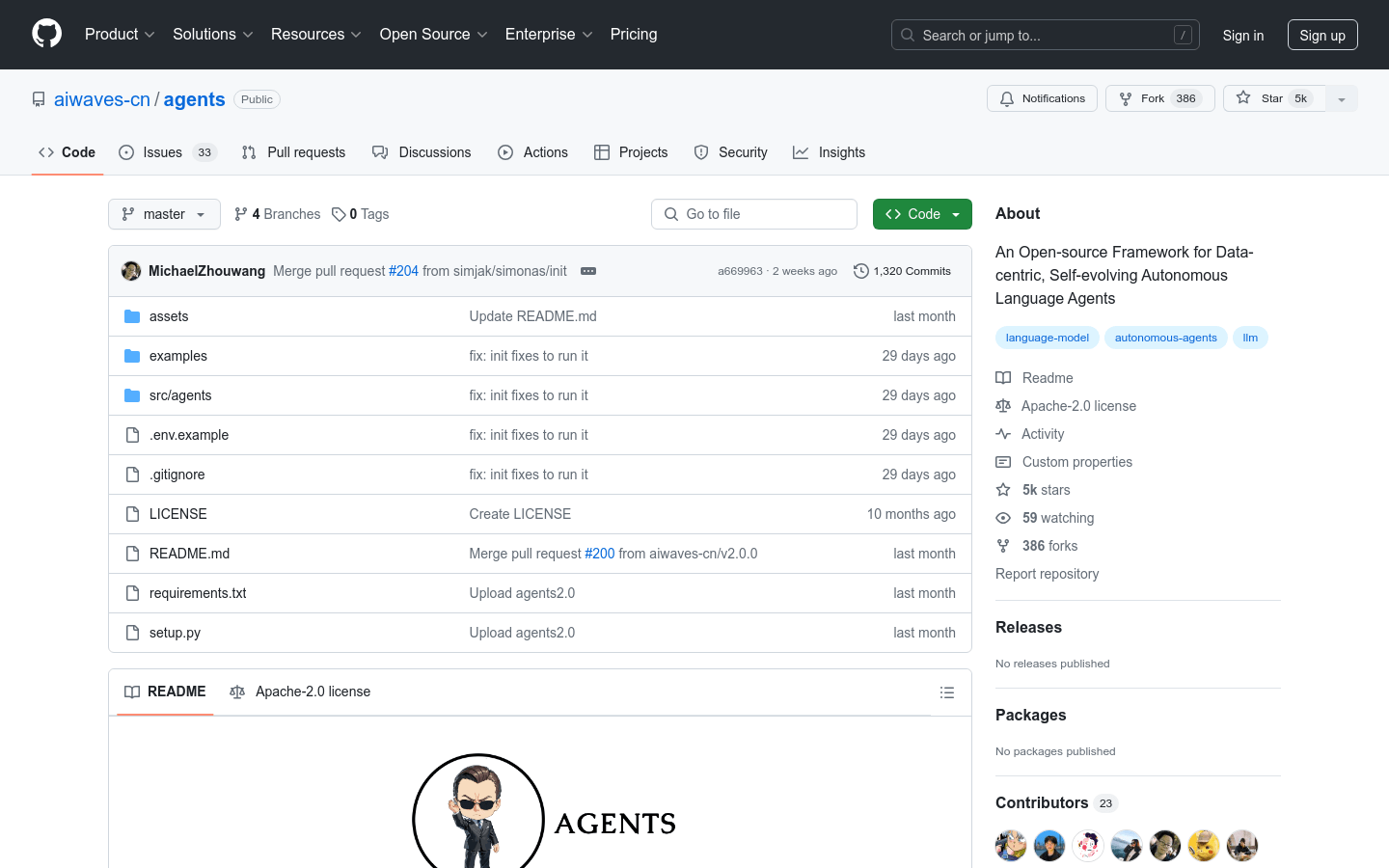

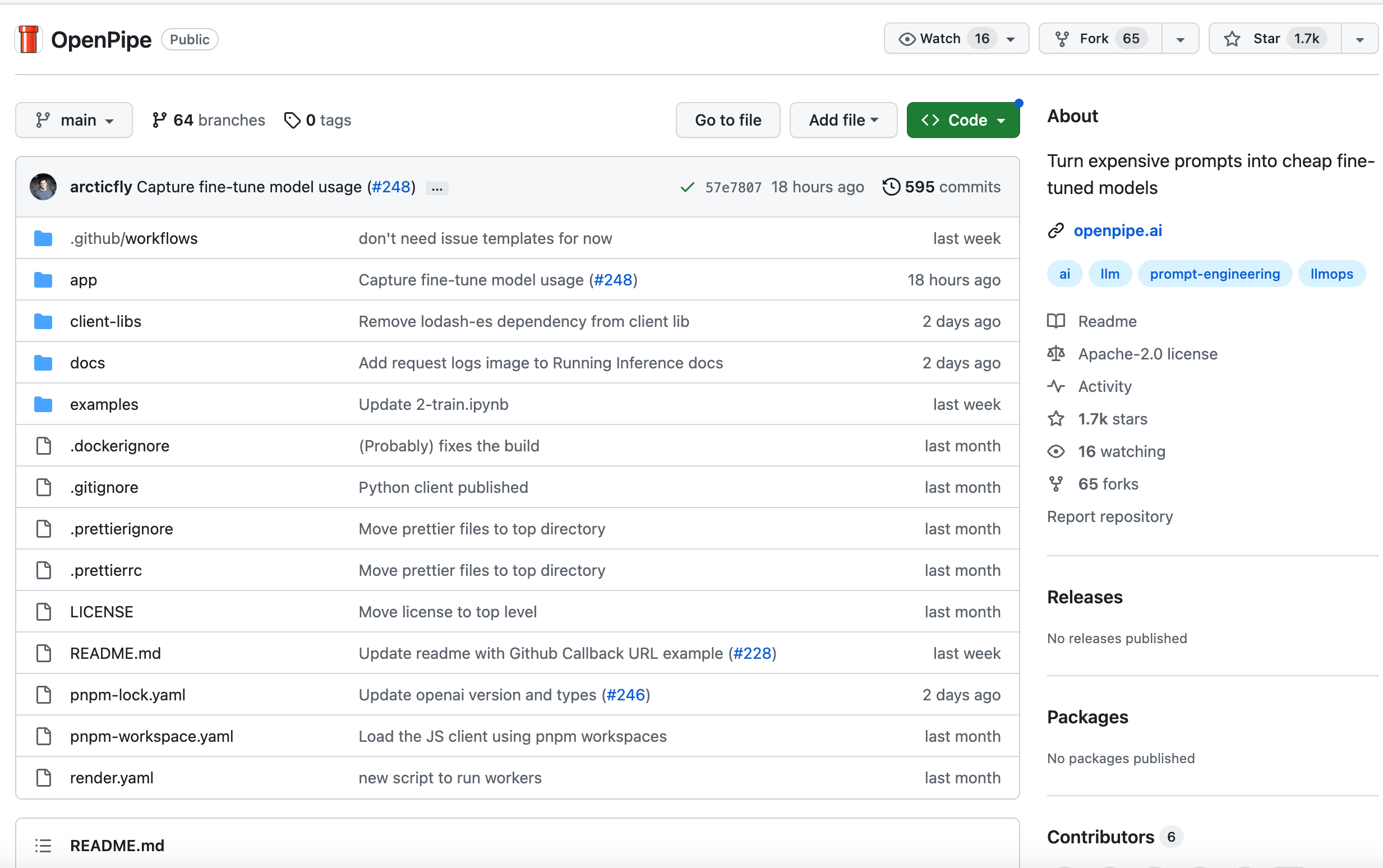

AI model inference training

Found 14 AI tools

Primary Category: programming

Subcategory: AI model inference training

Related AI Tools

Related Subcategories

Explore other subcategories under programming.

Other Categories

Explore More programming Tools

AI model inference training is a popular subcategory under programming, with 14 quality AI tools.