🖼️

Image Category

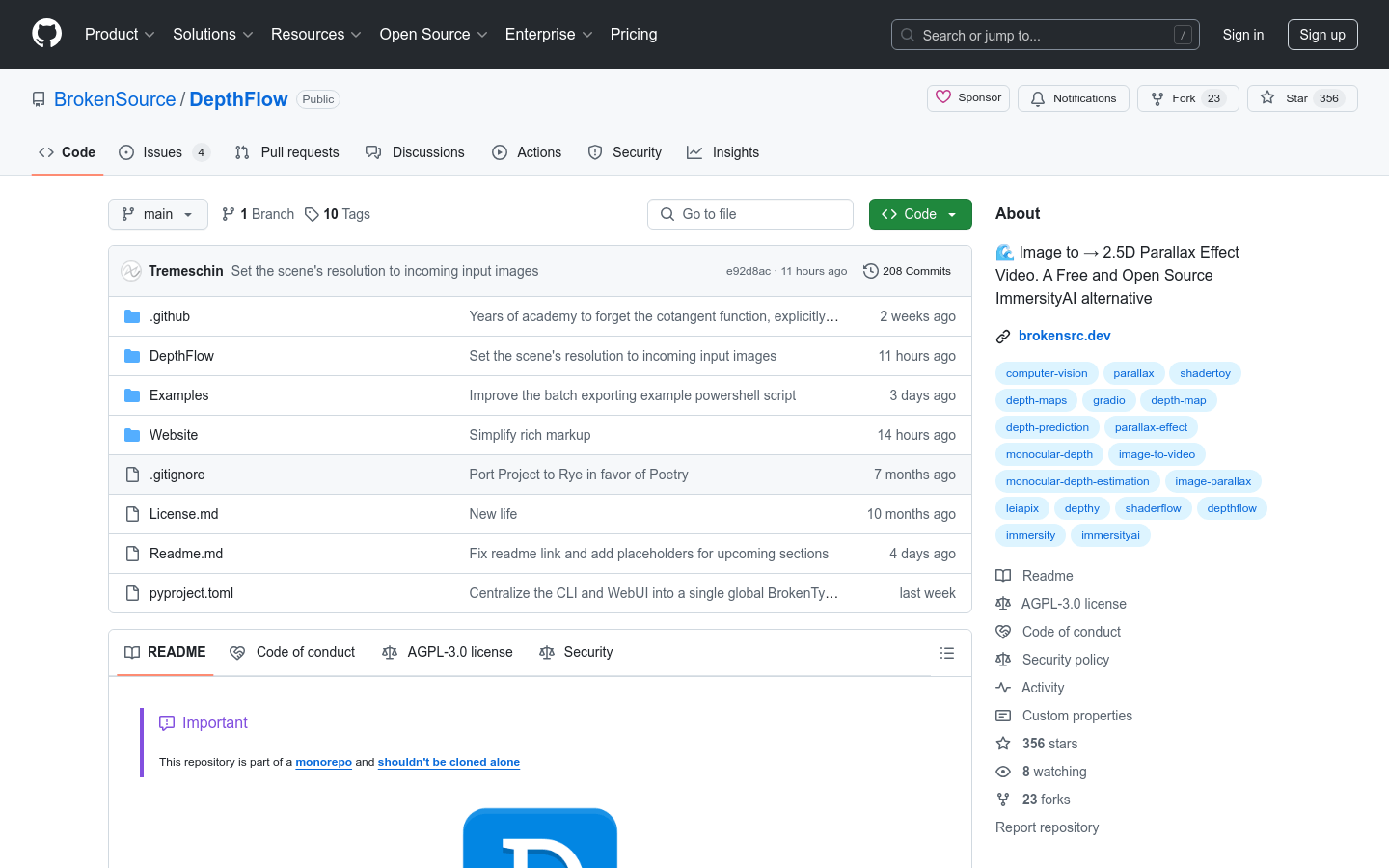

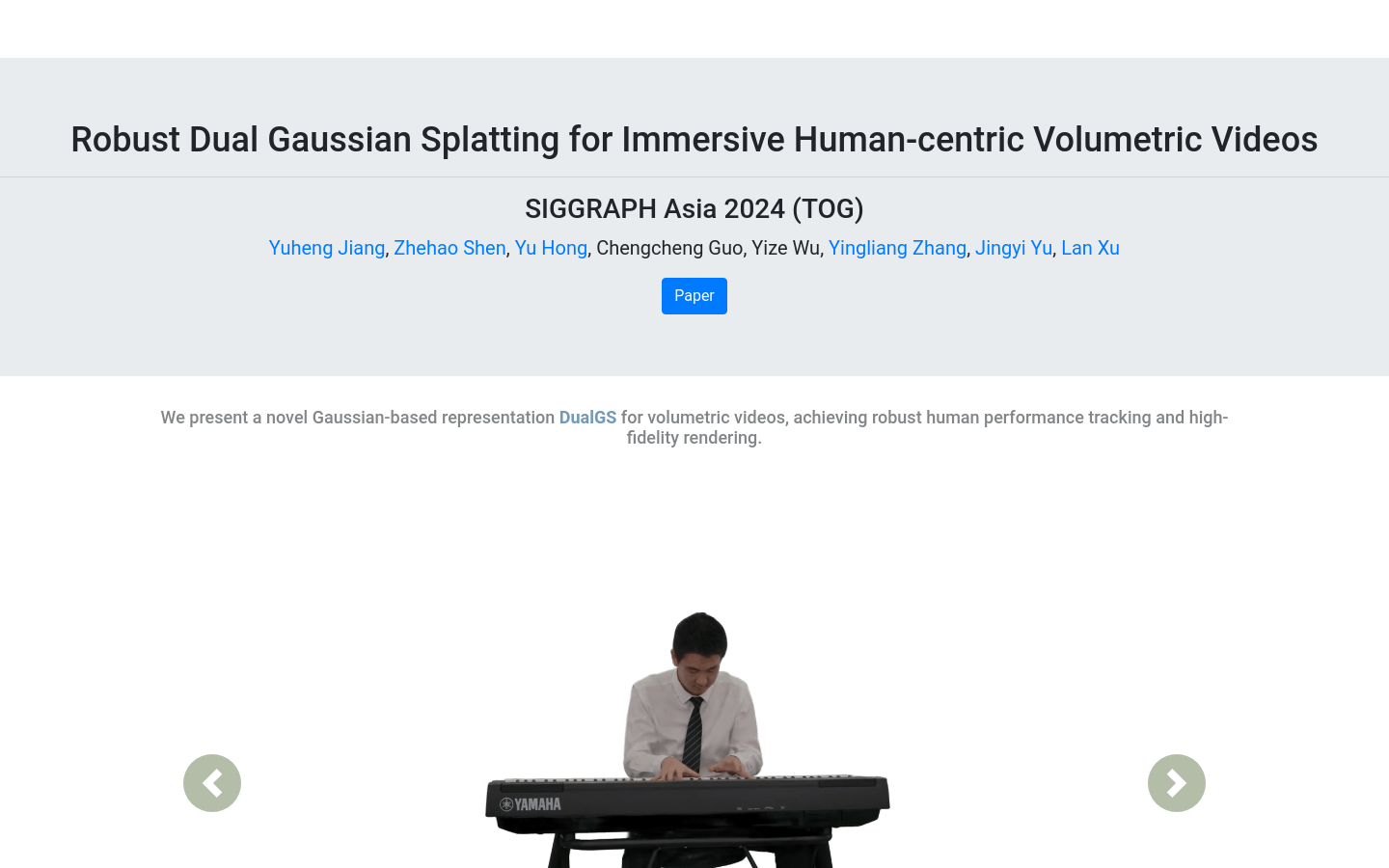

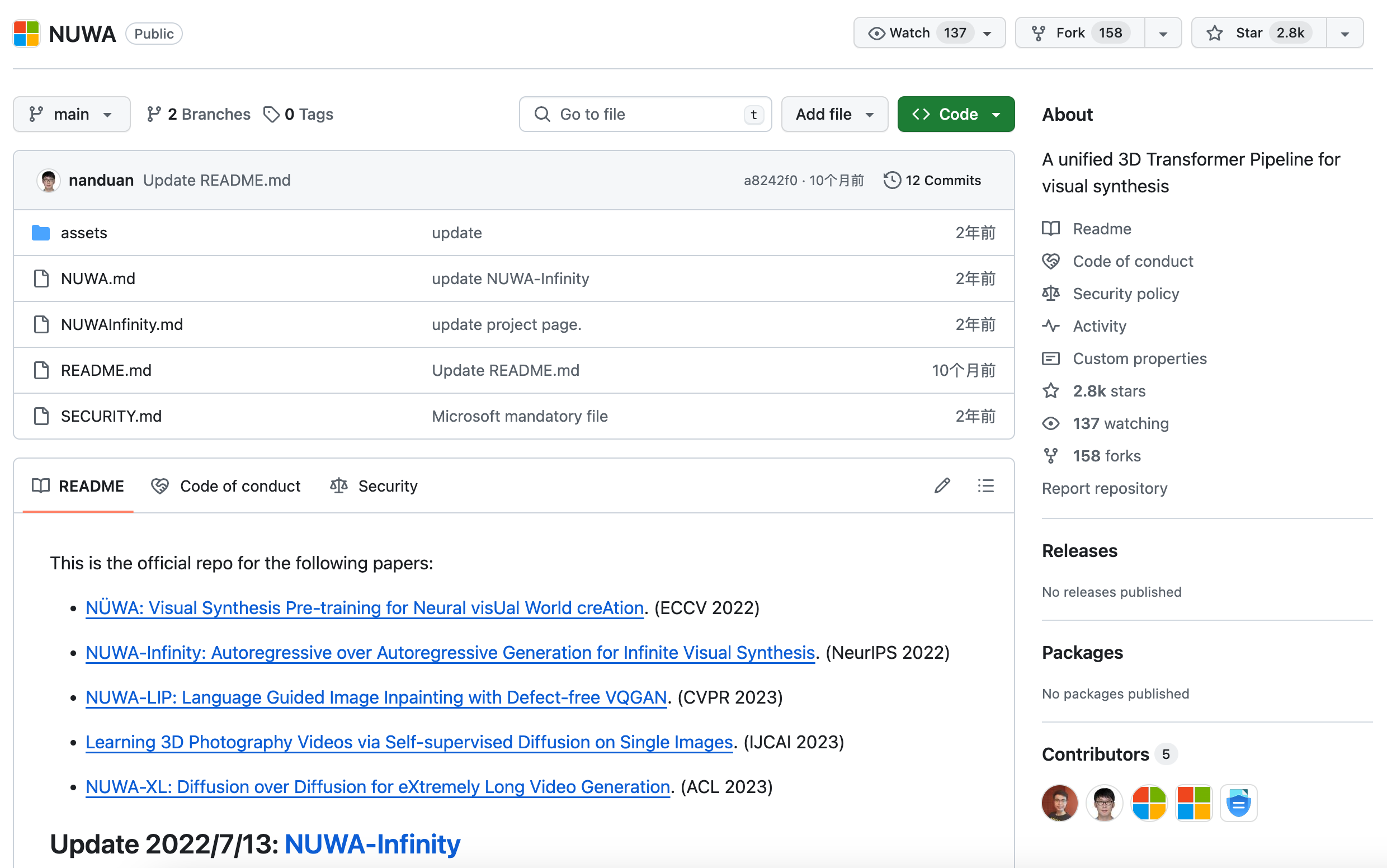

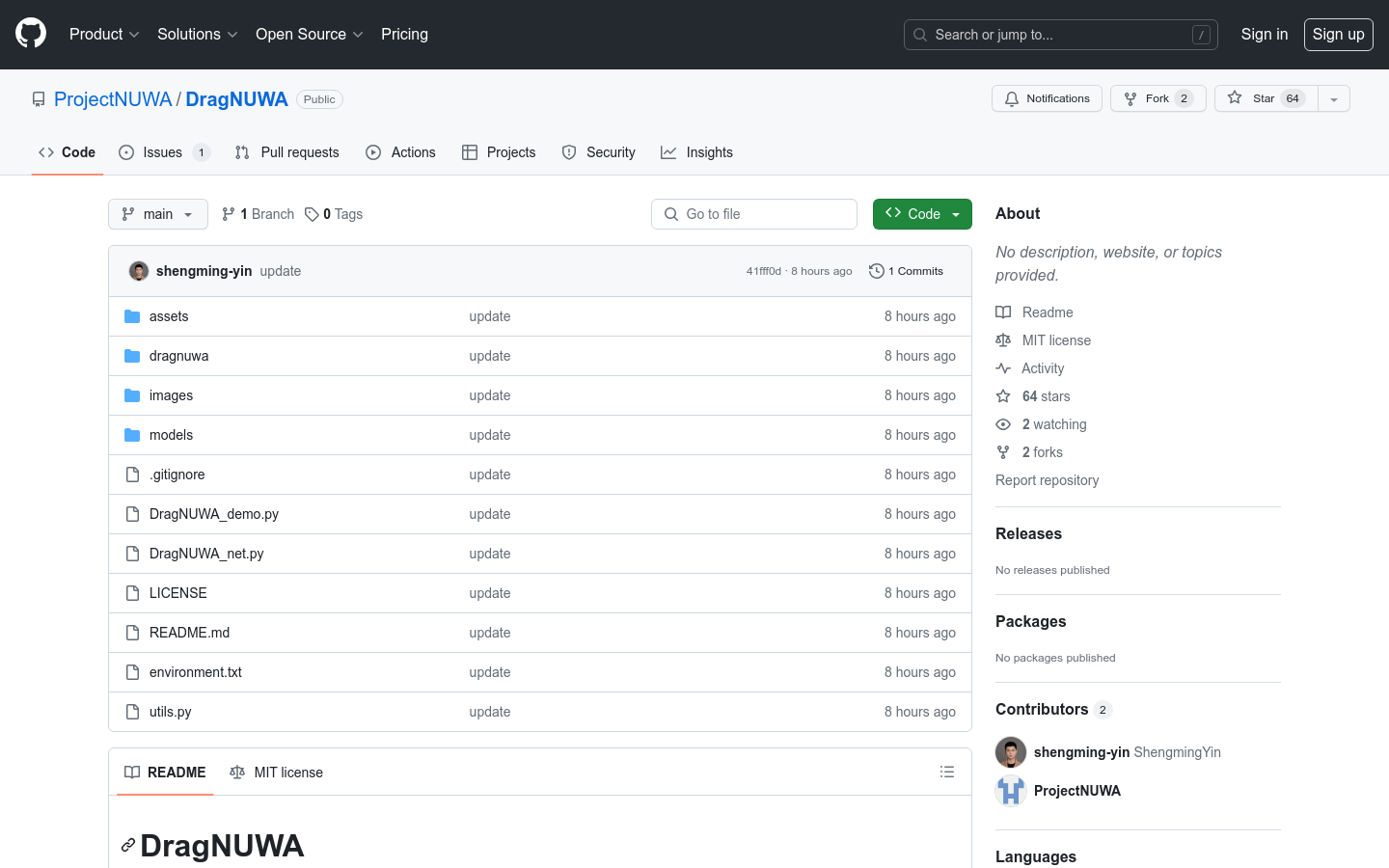

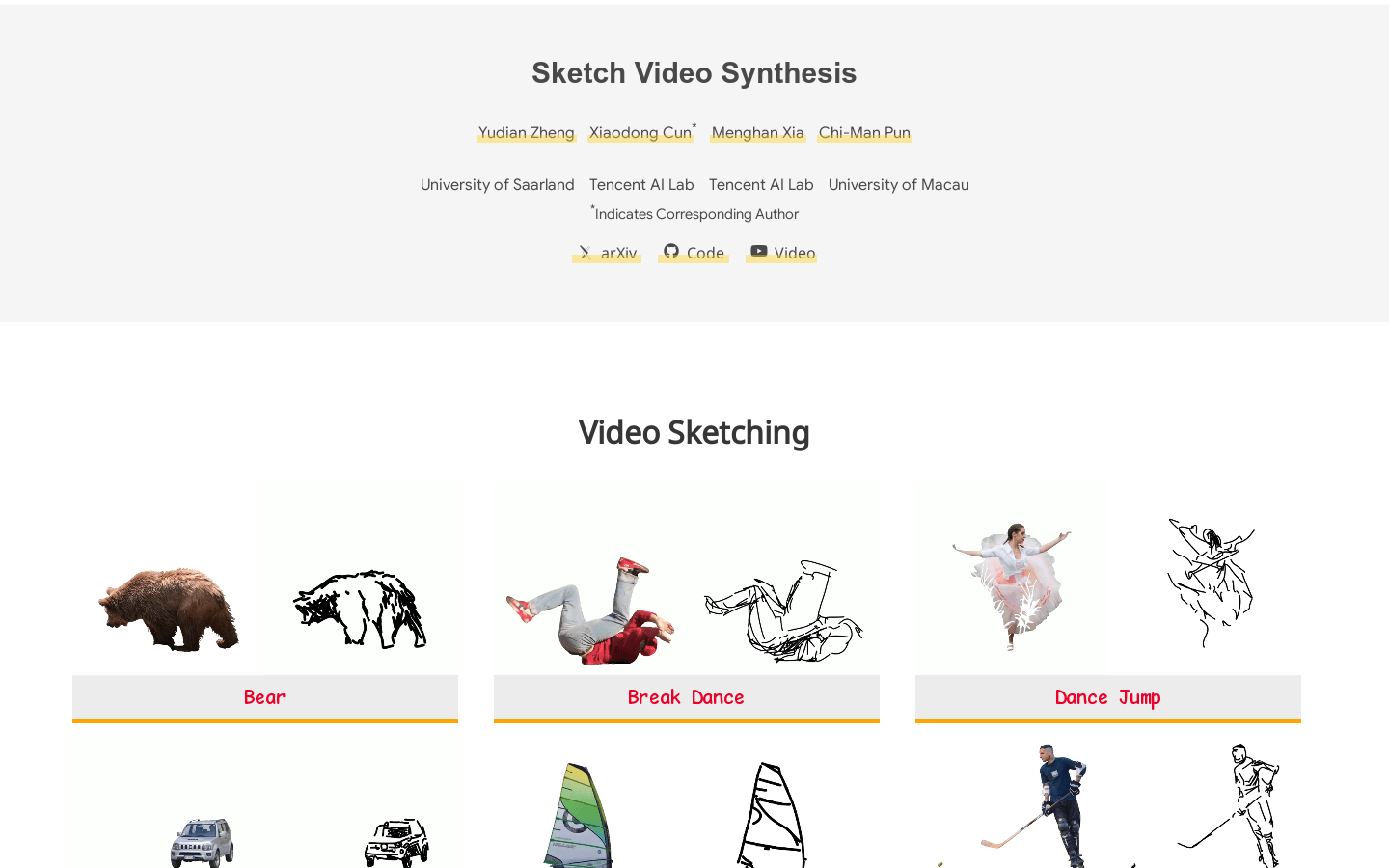

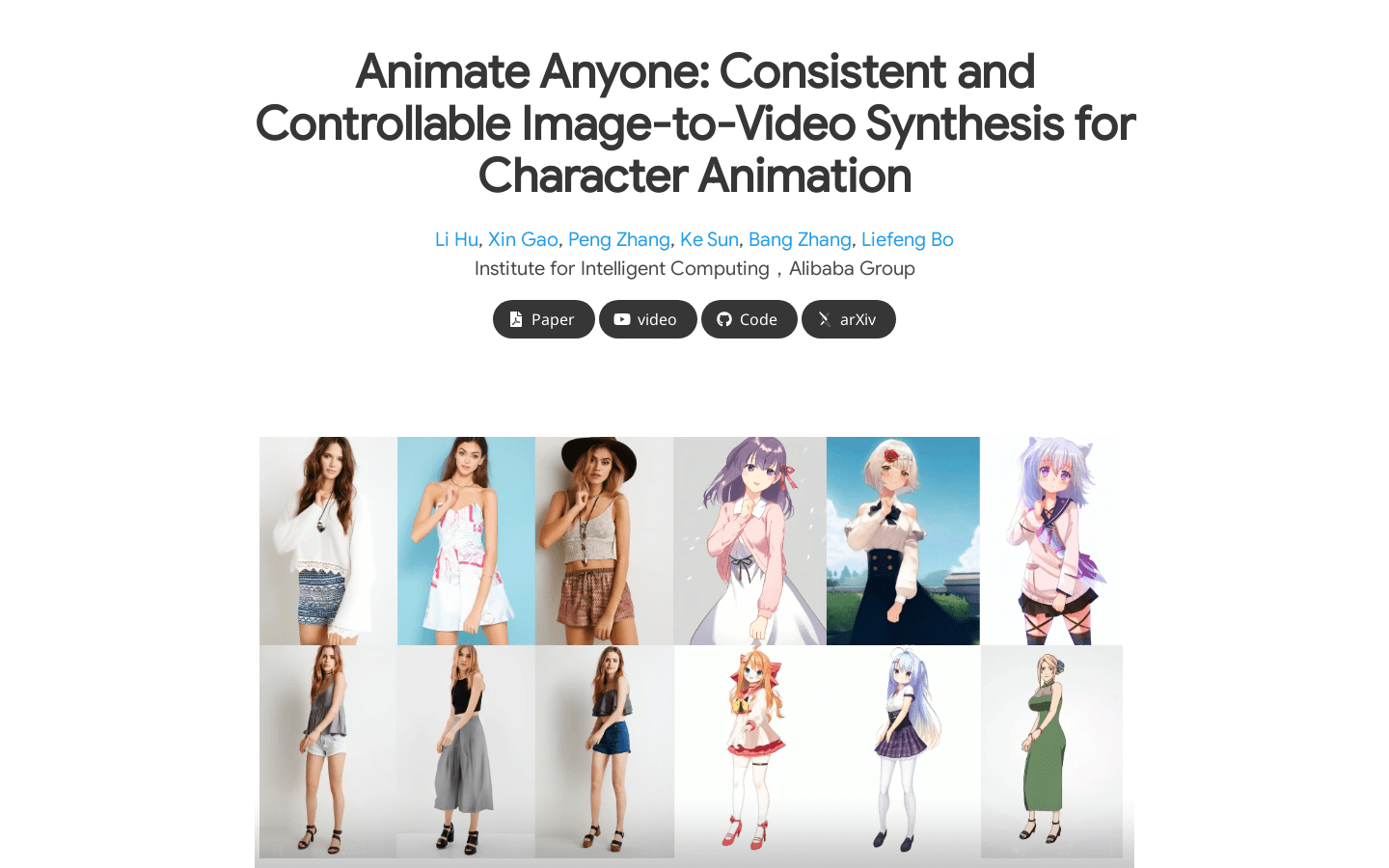

AI video generation

Found 45 AI tools

Primary Category: image

Subcategory: AI video generation

Related AI Tools

Related Subcategories

Explore other subcategories under image

Other Categories

Explore More image Tools

AI video generation is a popular subcategory under image, with 45 quality AI tools.