Image Category

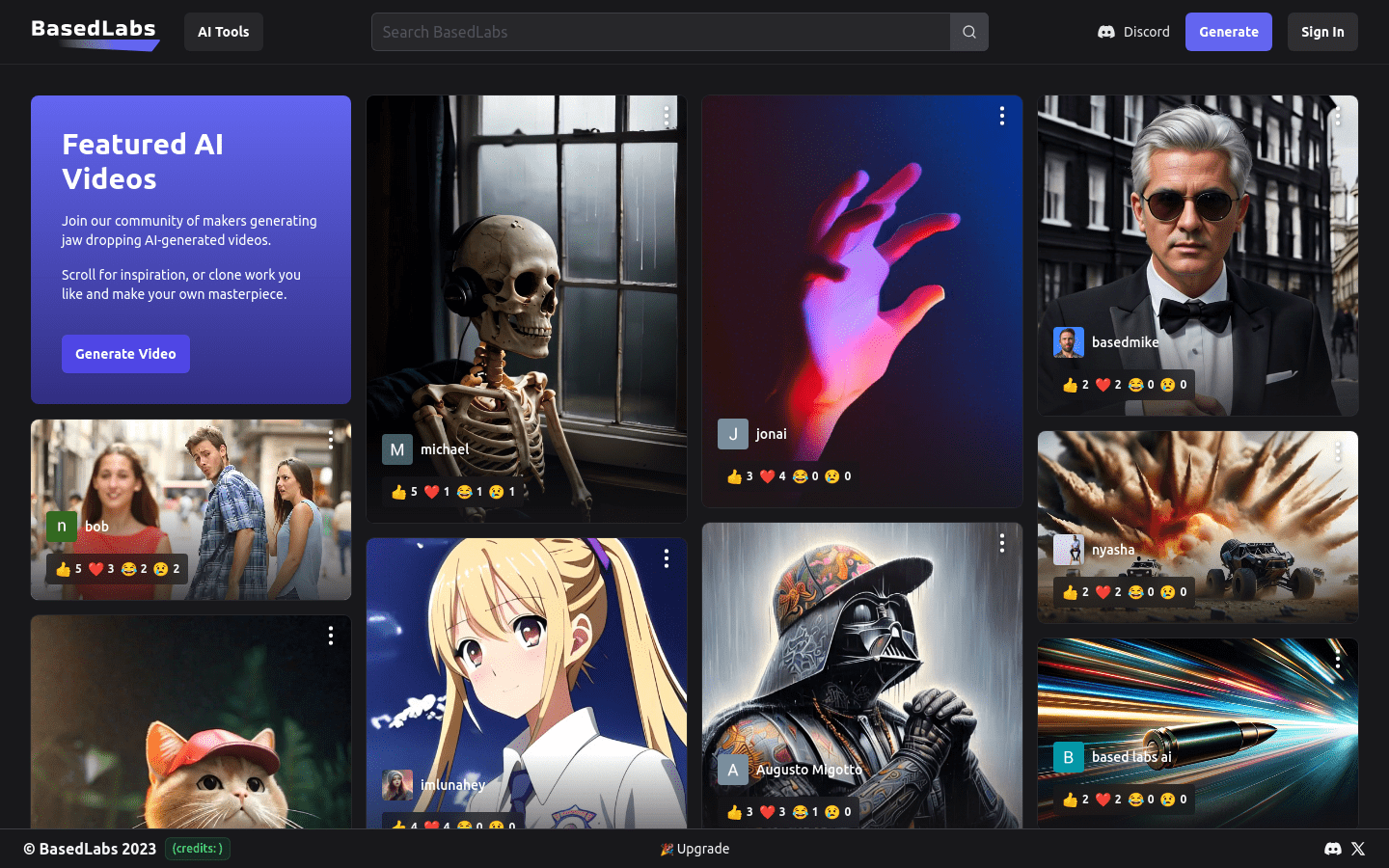

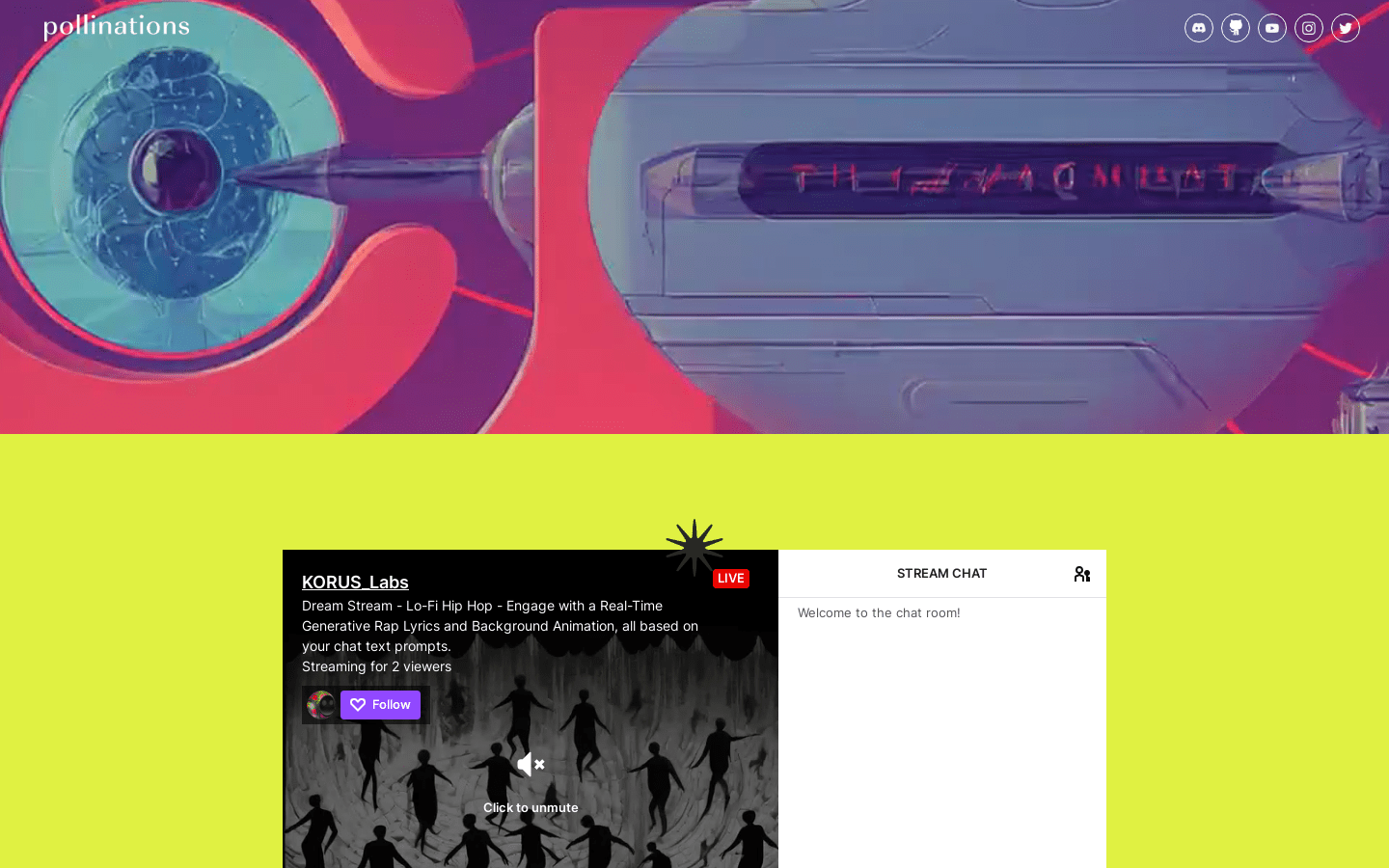

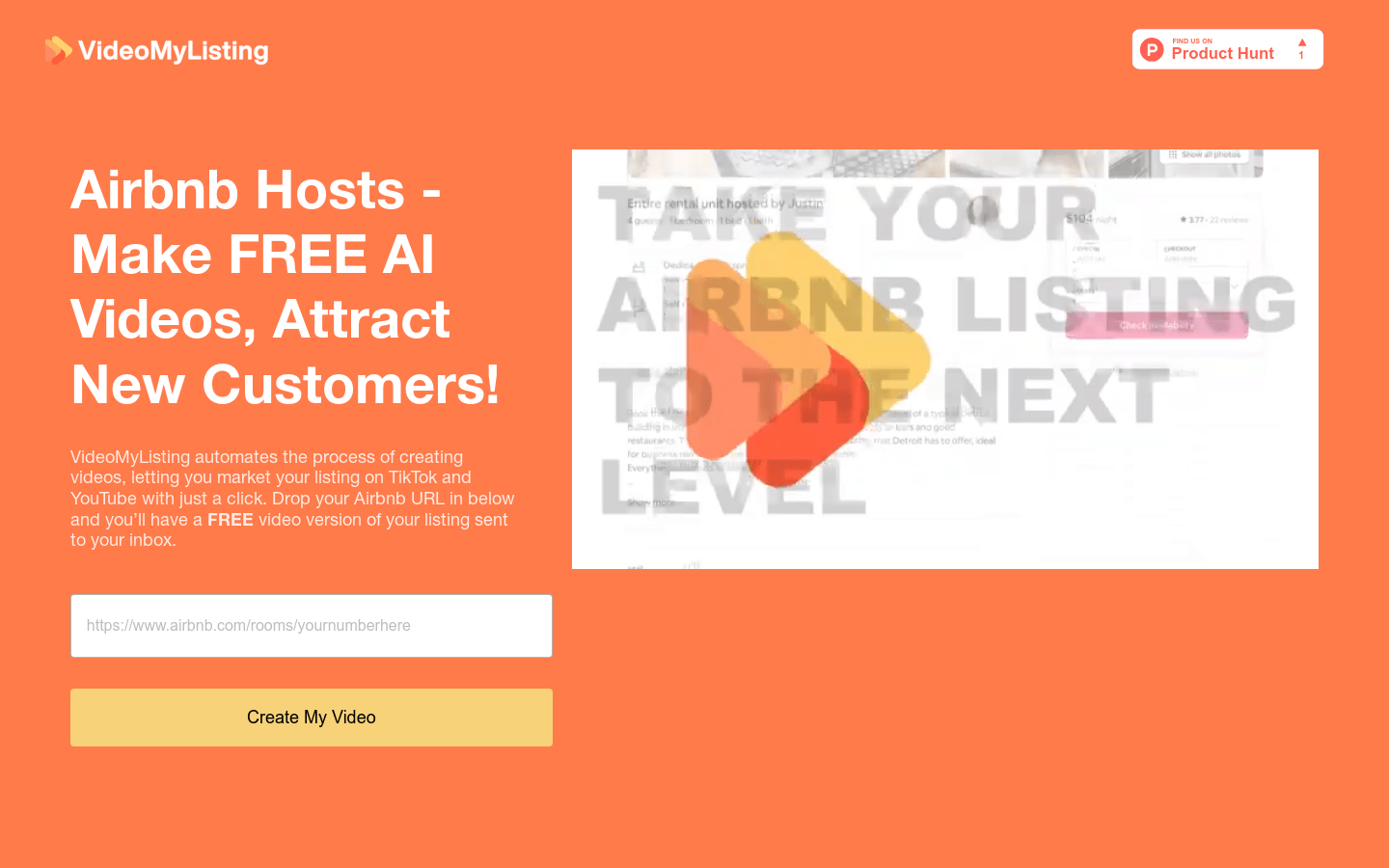

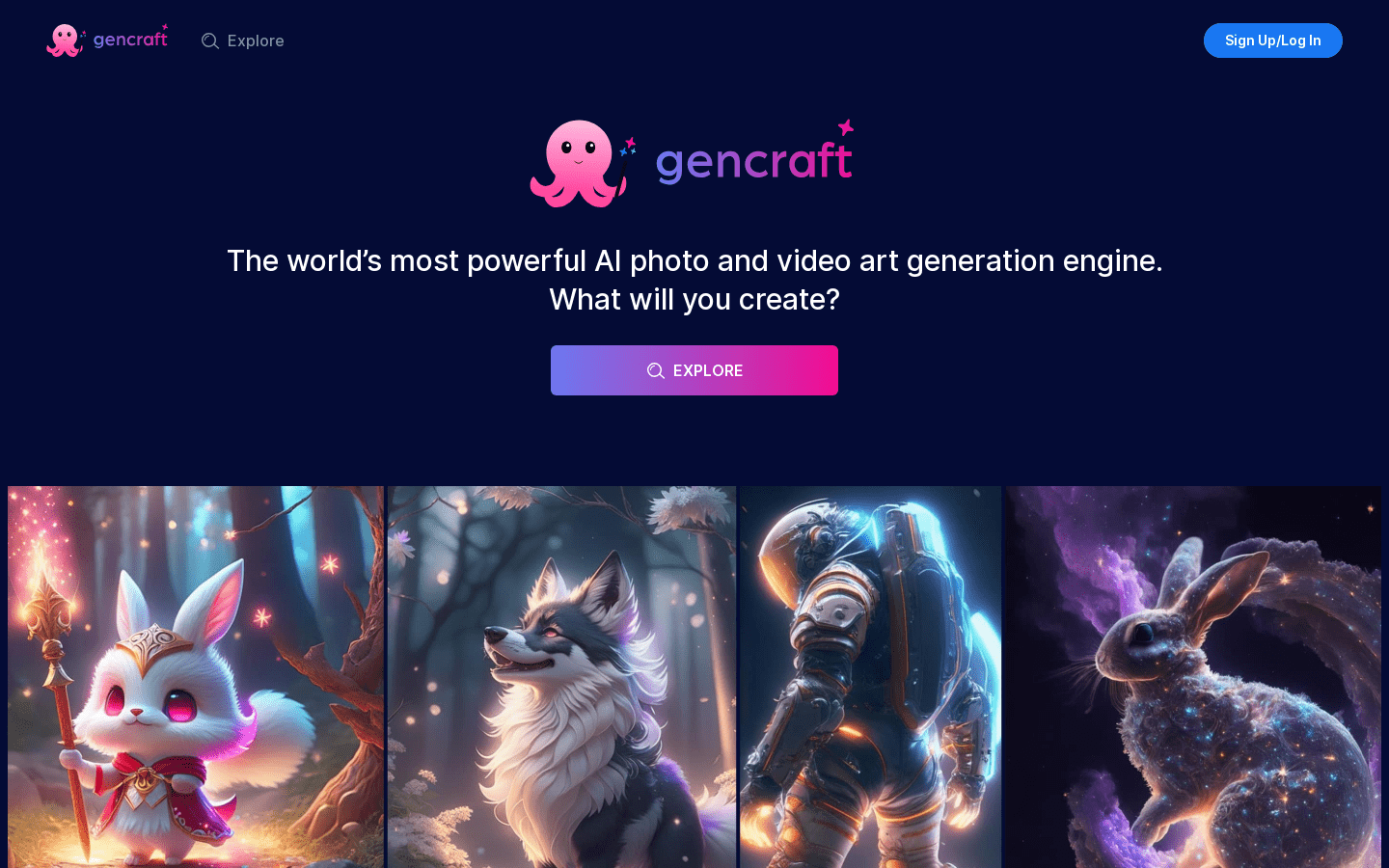

video generation

Found 43 AI tools

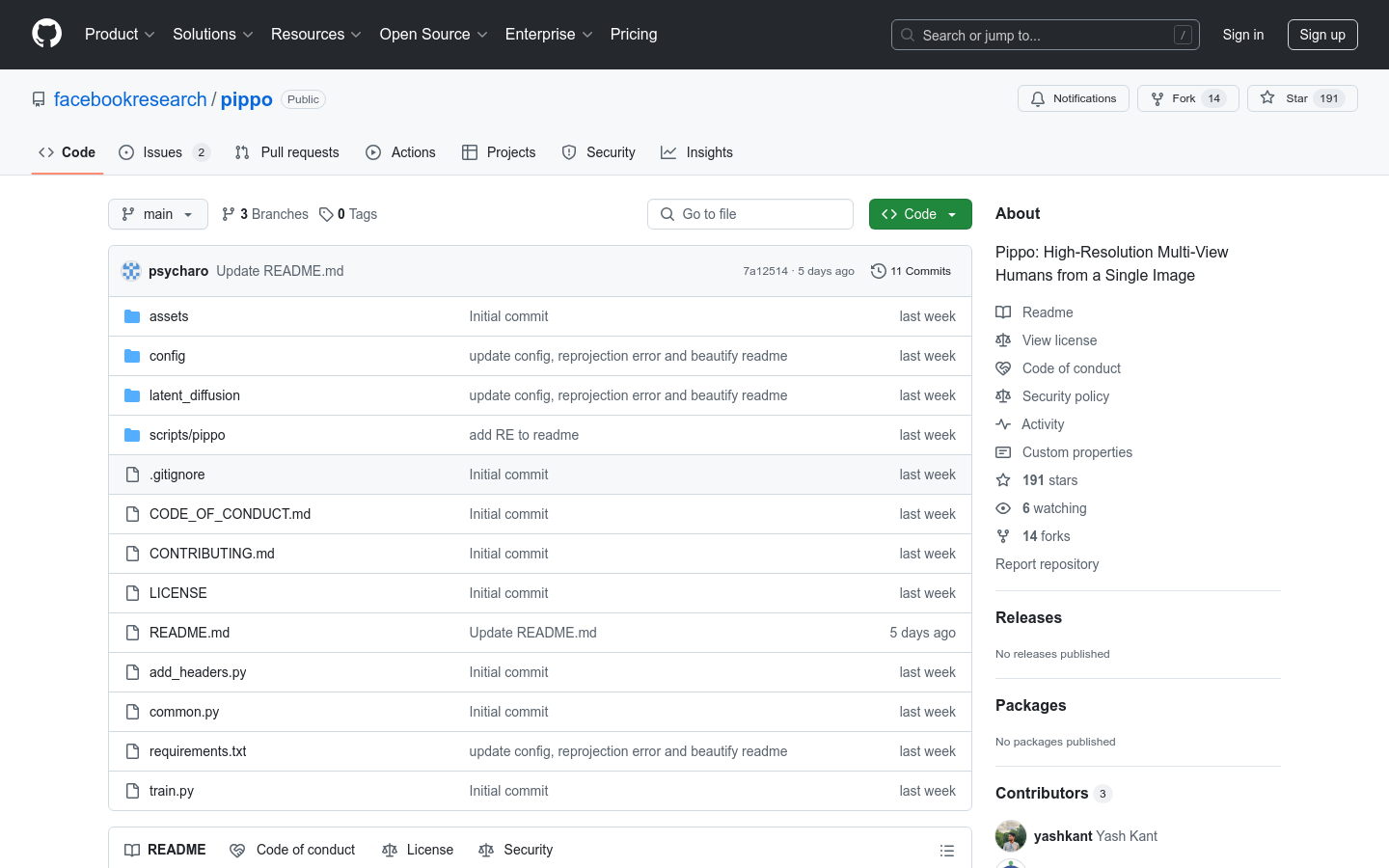

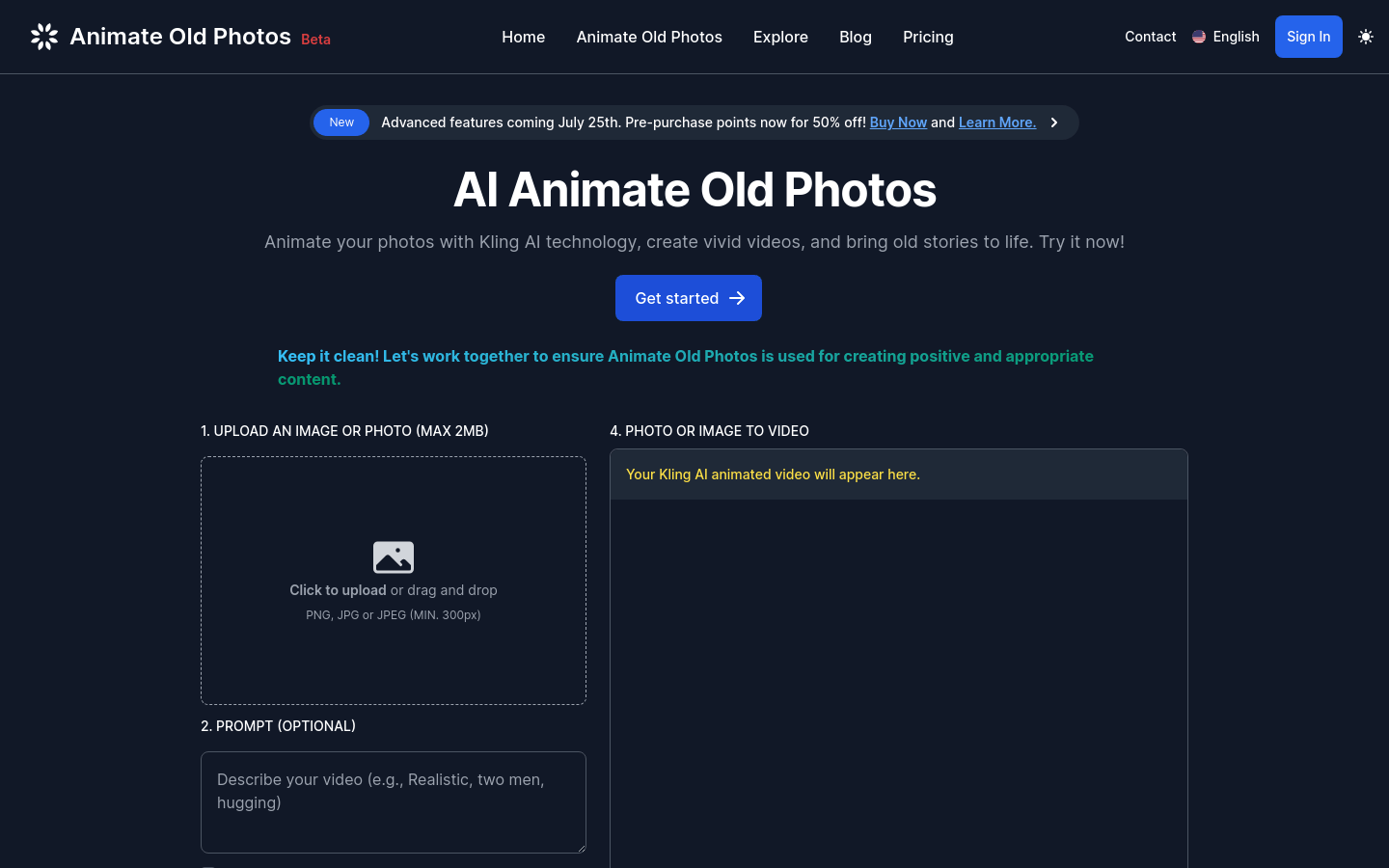

Primary Category: image

Subcategory: video generation

Related AI Tools

Related Subcategories

Explore other subcategories under image

Other Categories

Explore More image Tools

Video generation is a popular subcategory under image, with 43 AI tools listed.