💻

programming Category

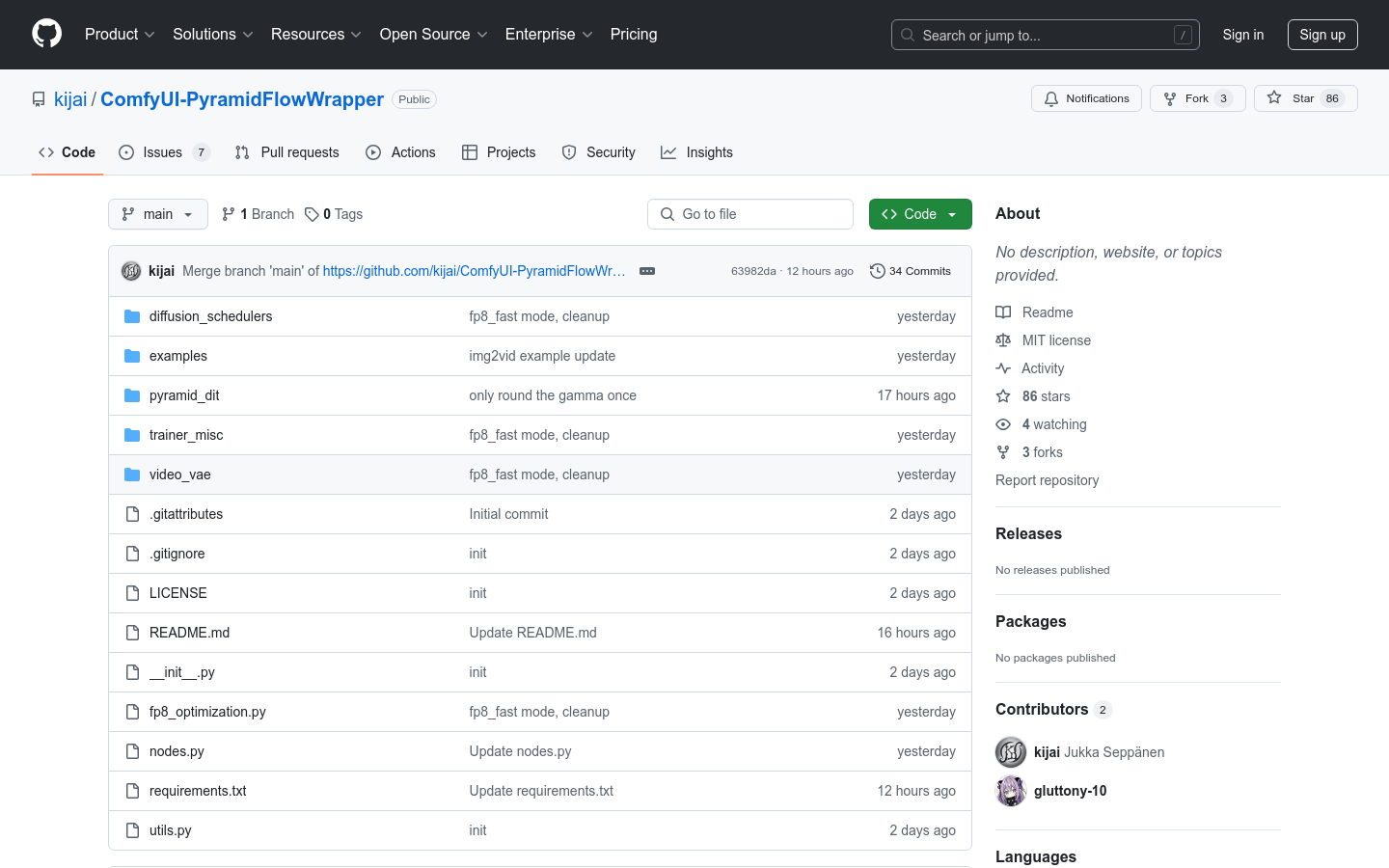

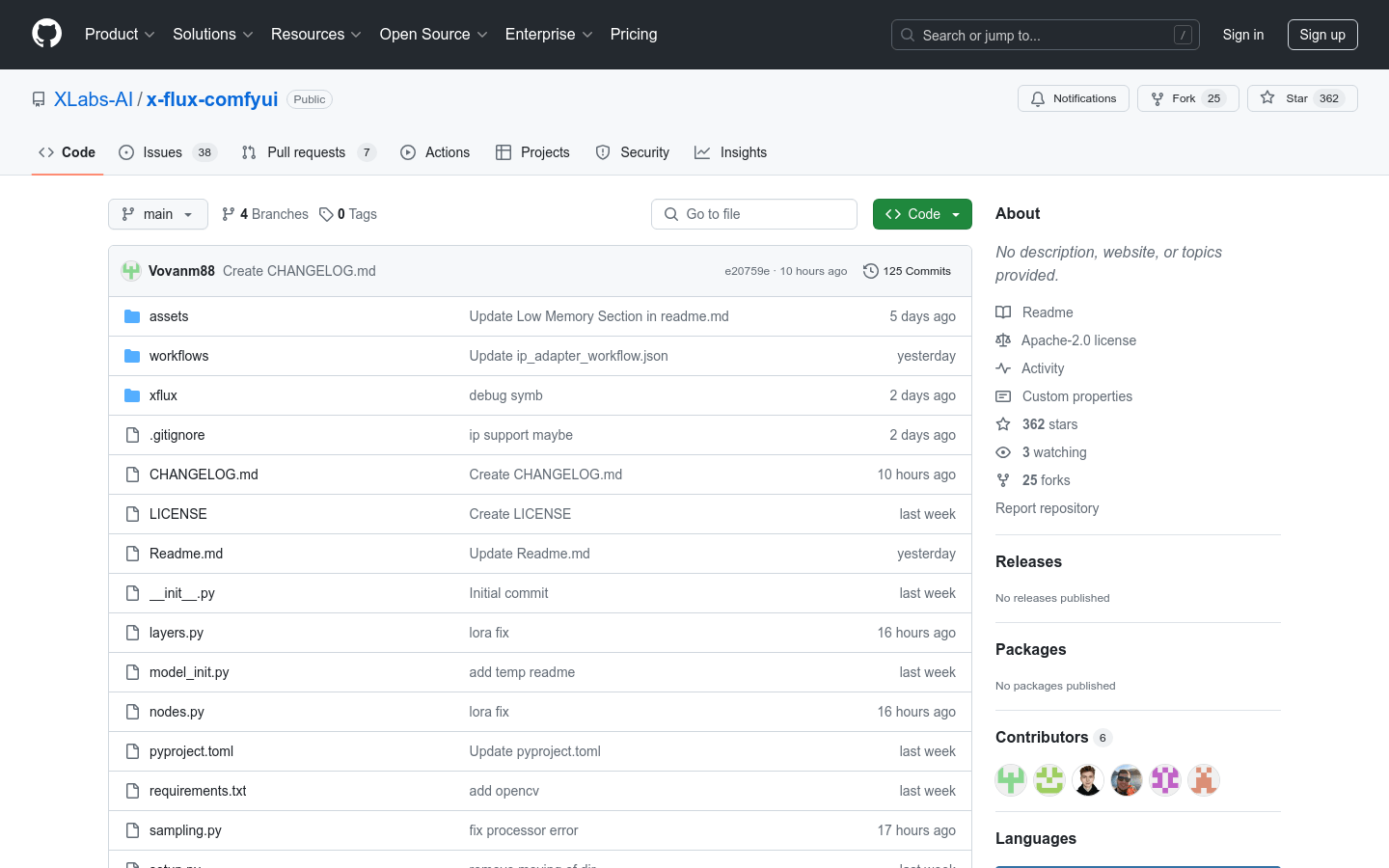

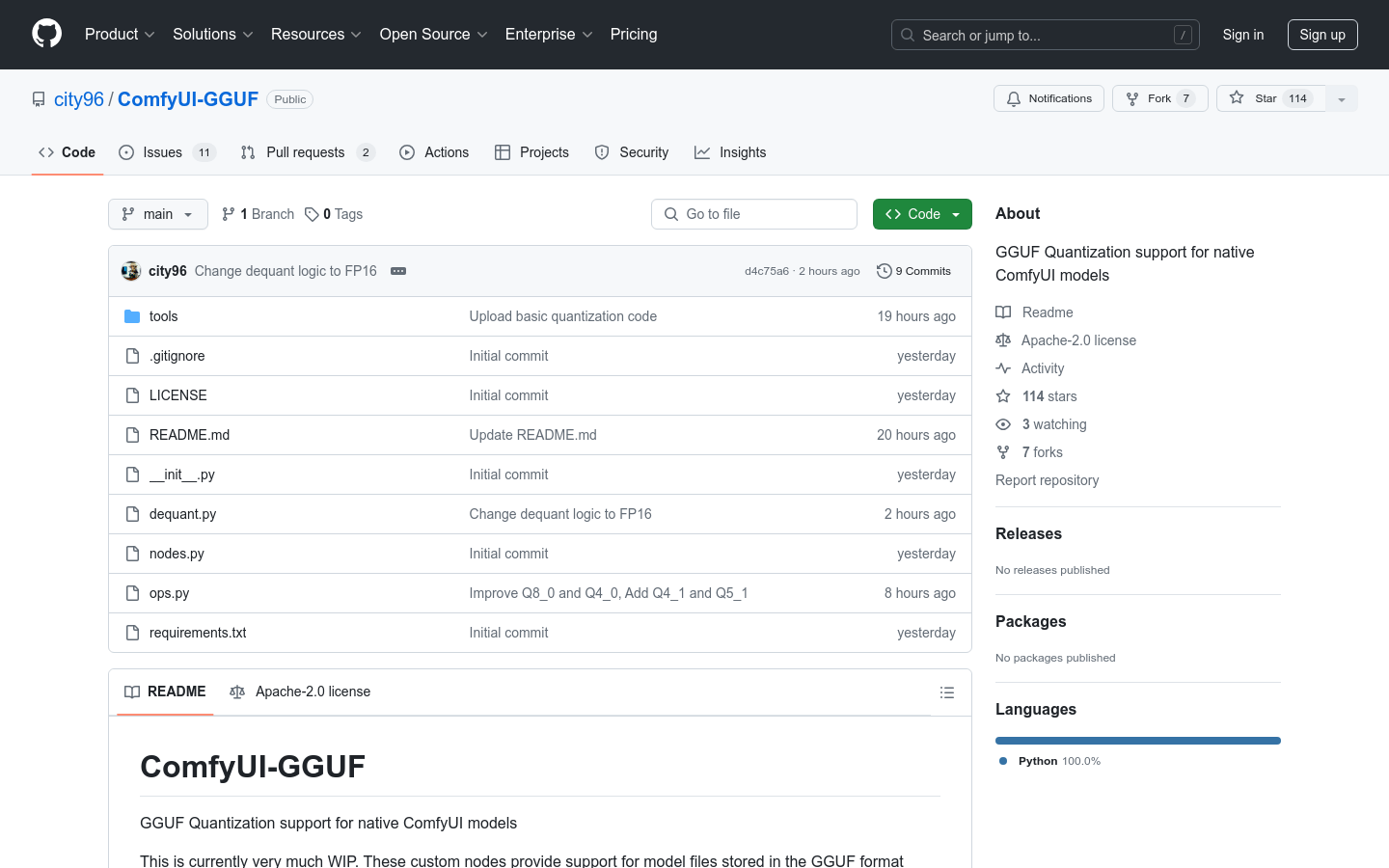

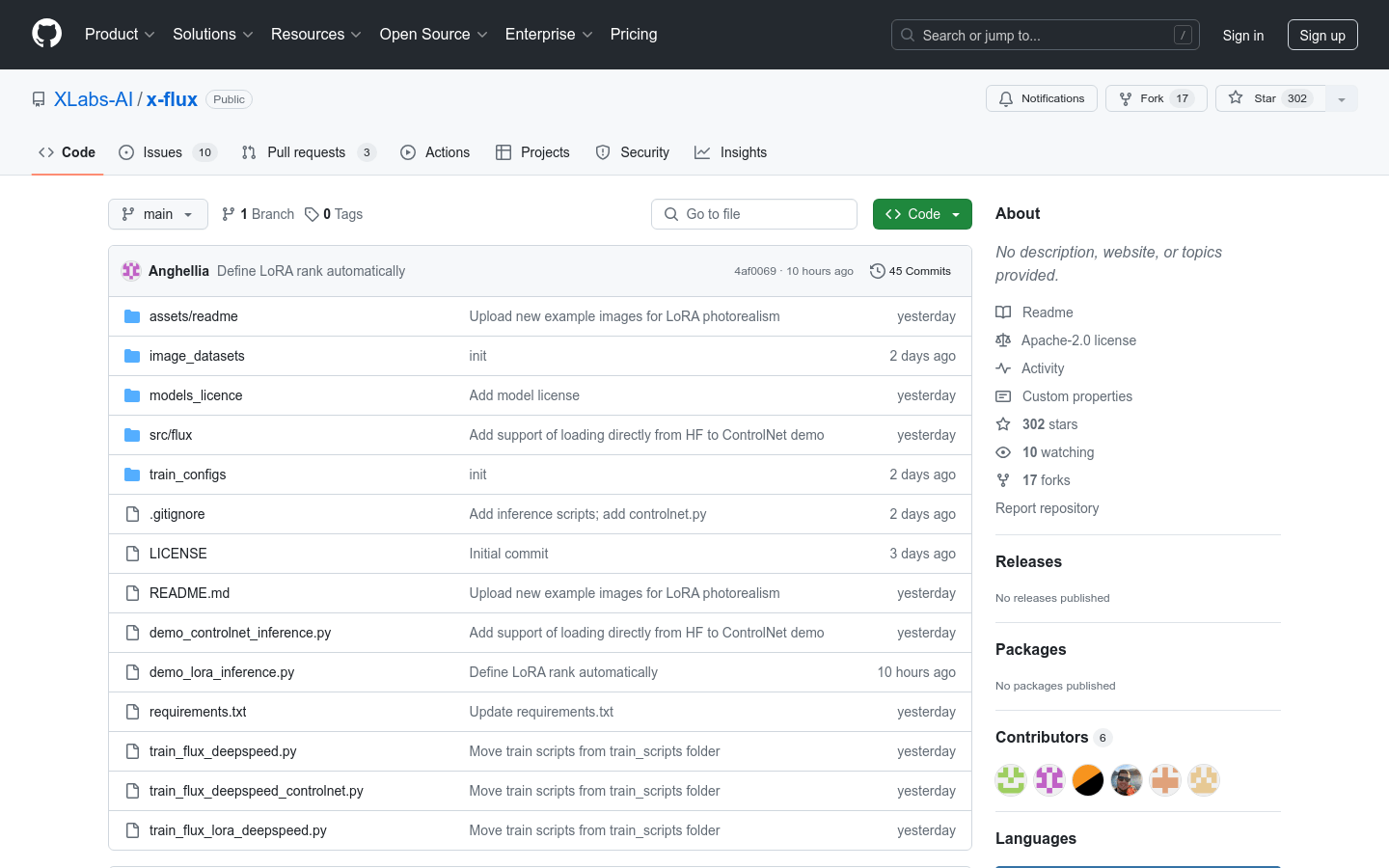

AI image generation

Found 34 AI tools

Primary Category: programming

Subcategory: AI image generation

Related AI Tools

Related Subcategories

Explore other subcategories under programming

Other Categories

Explore More programming Tools

AI image generation is a popular subcategory under programming, with 34 AI tools.