Image Category

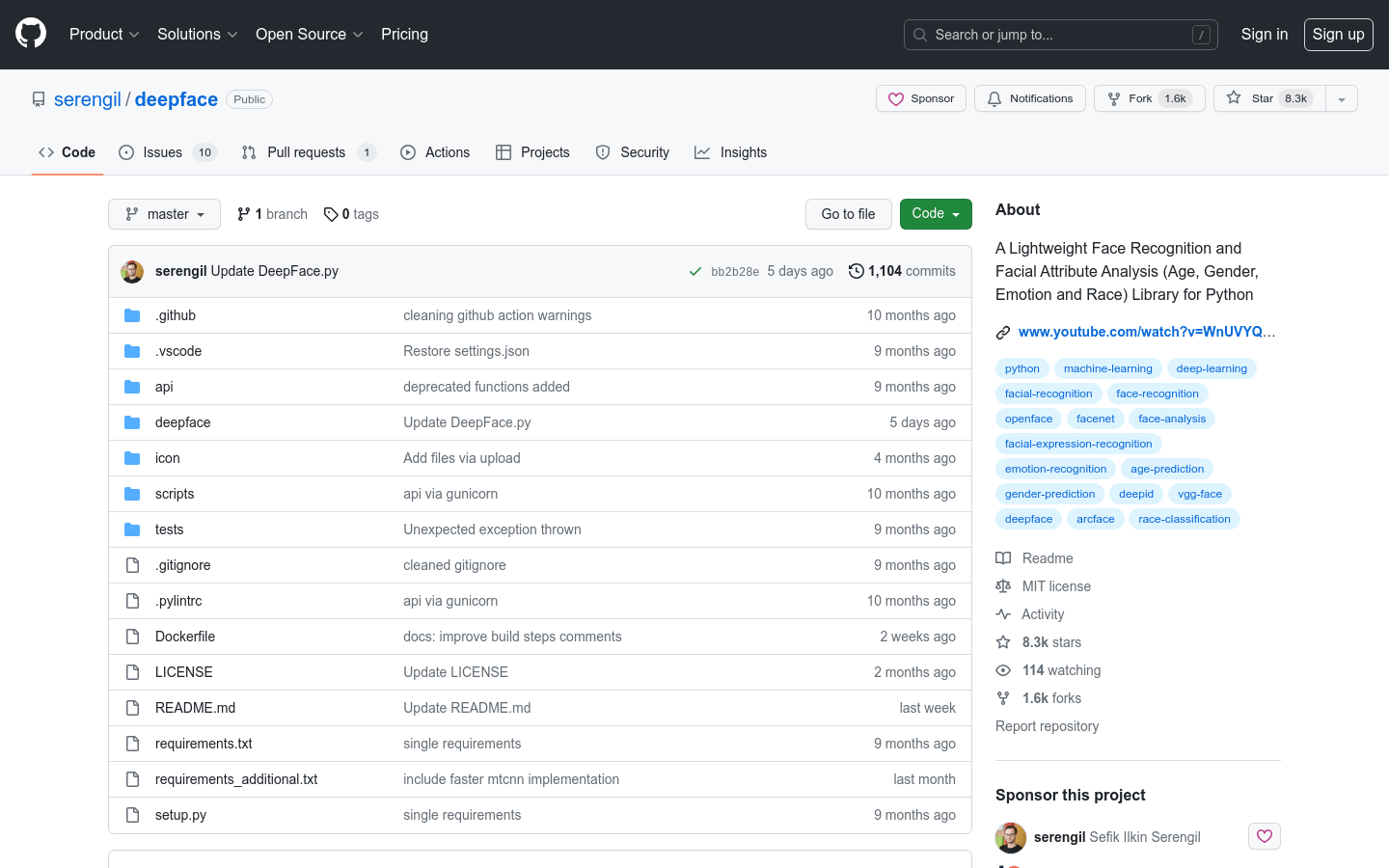

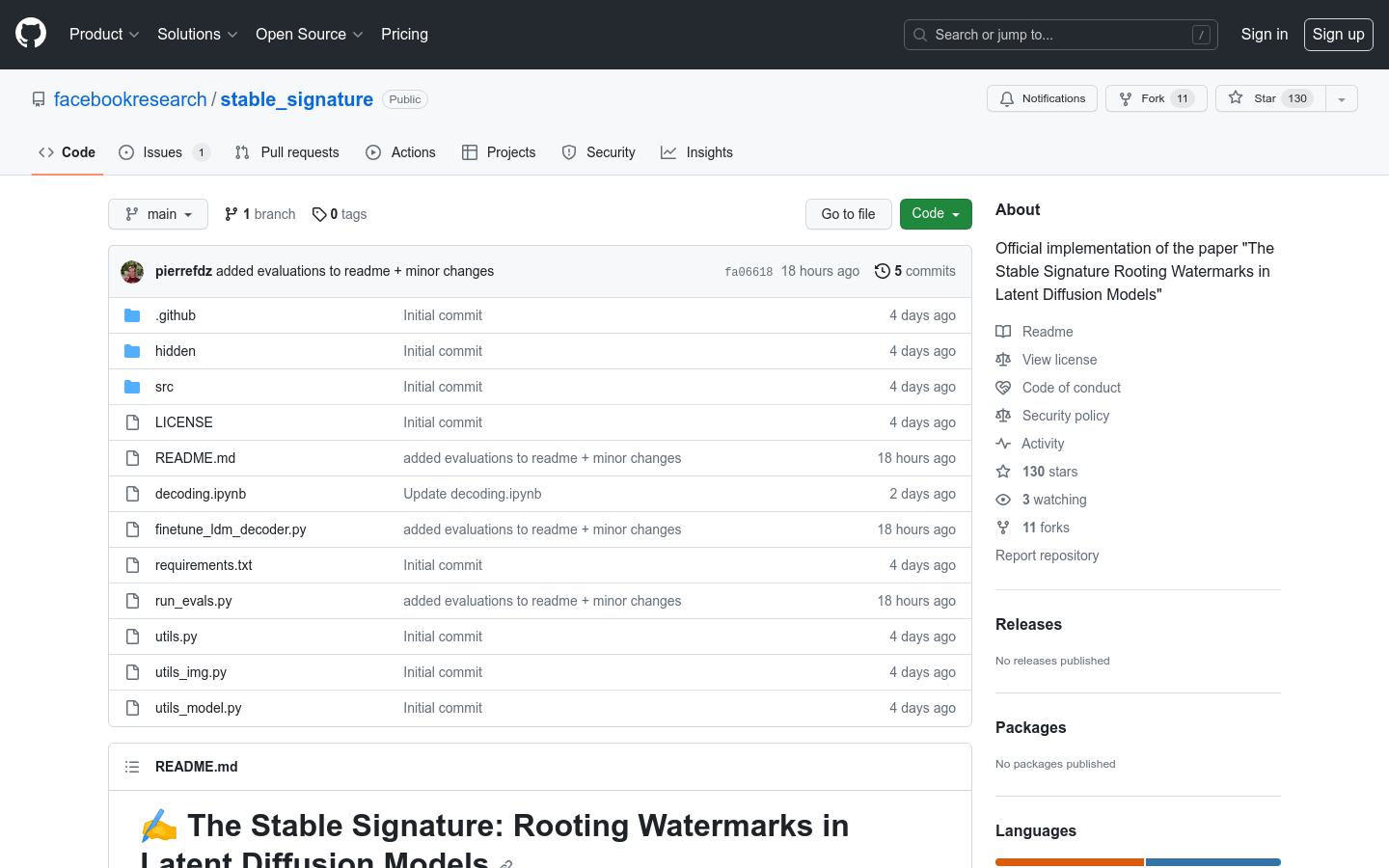

AI image detection and recognition

Found 63 AI tools

Primary Category: image

Subcategory: AI image detection and recognition

Related AI Tools

Related Subcategories

Explore other subcategories under image

Other Categories

Explore More image Tools

AI image detection and recognition is a popular subcategory under image, with 63 AI tools.