🖼️

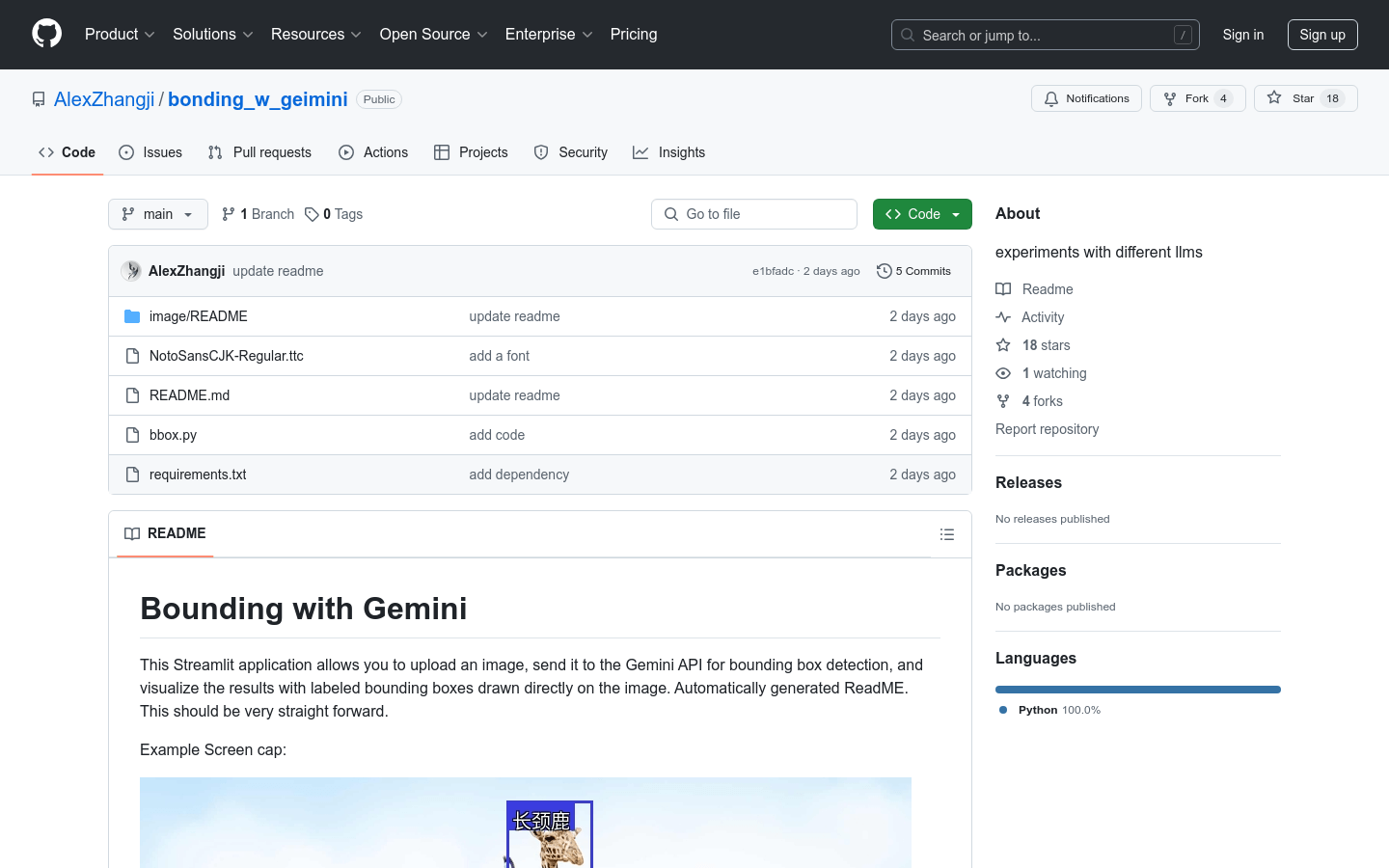

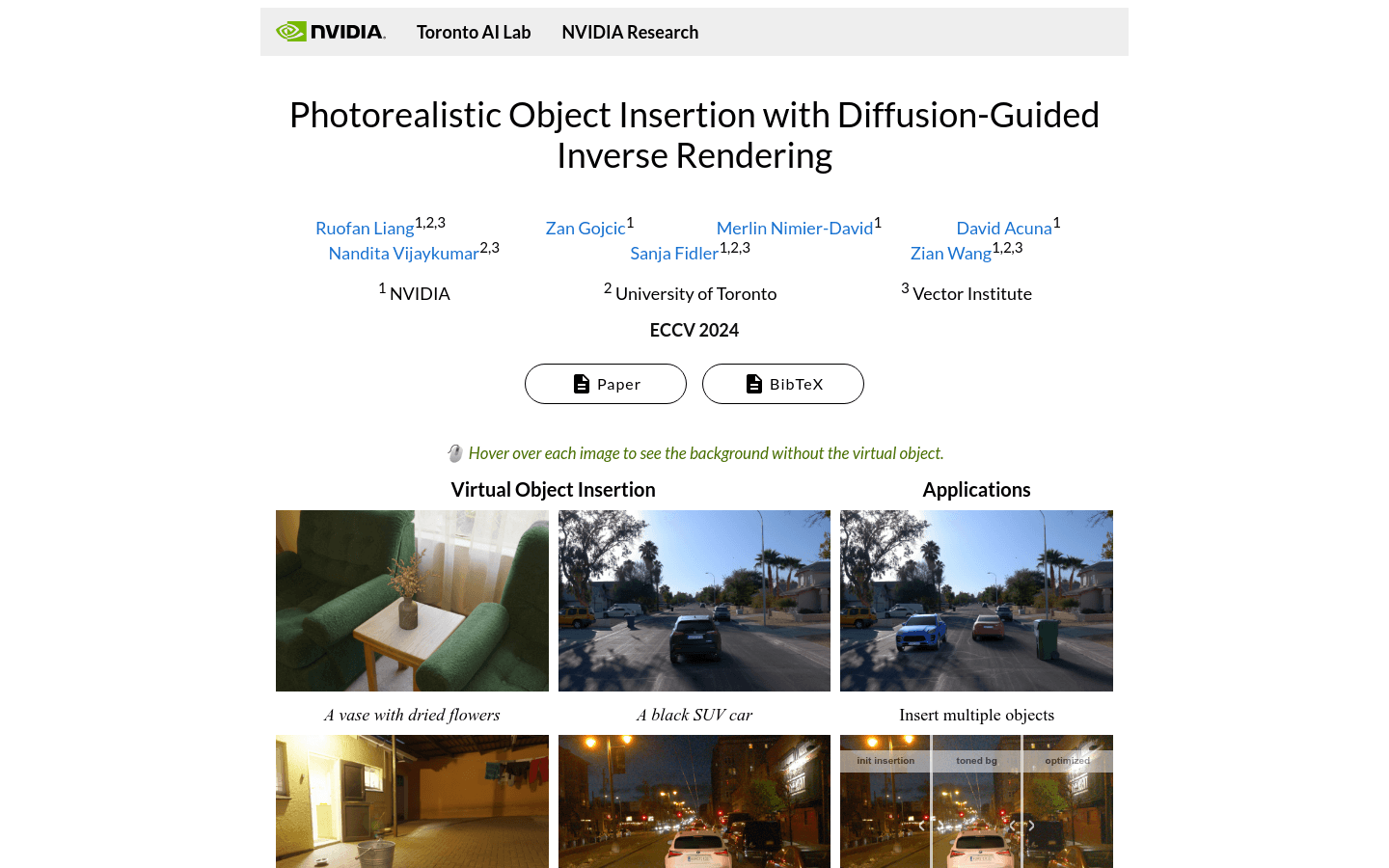

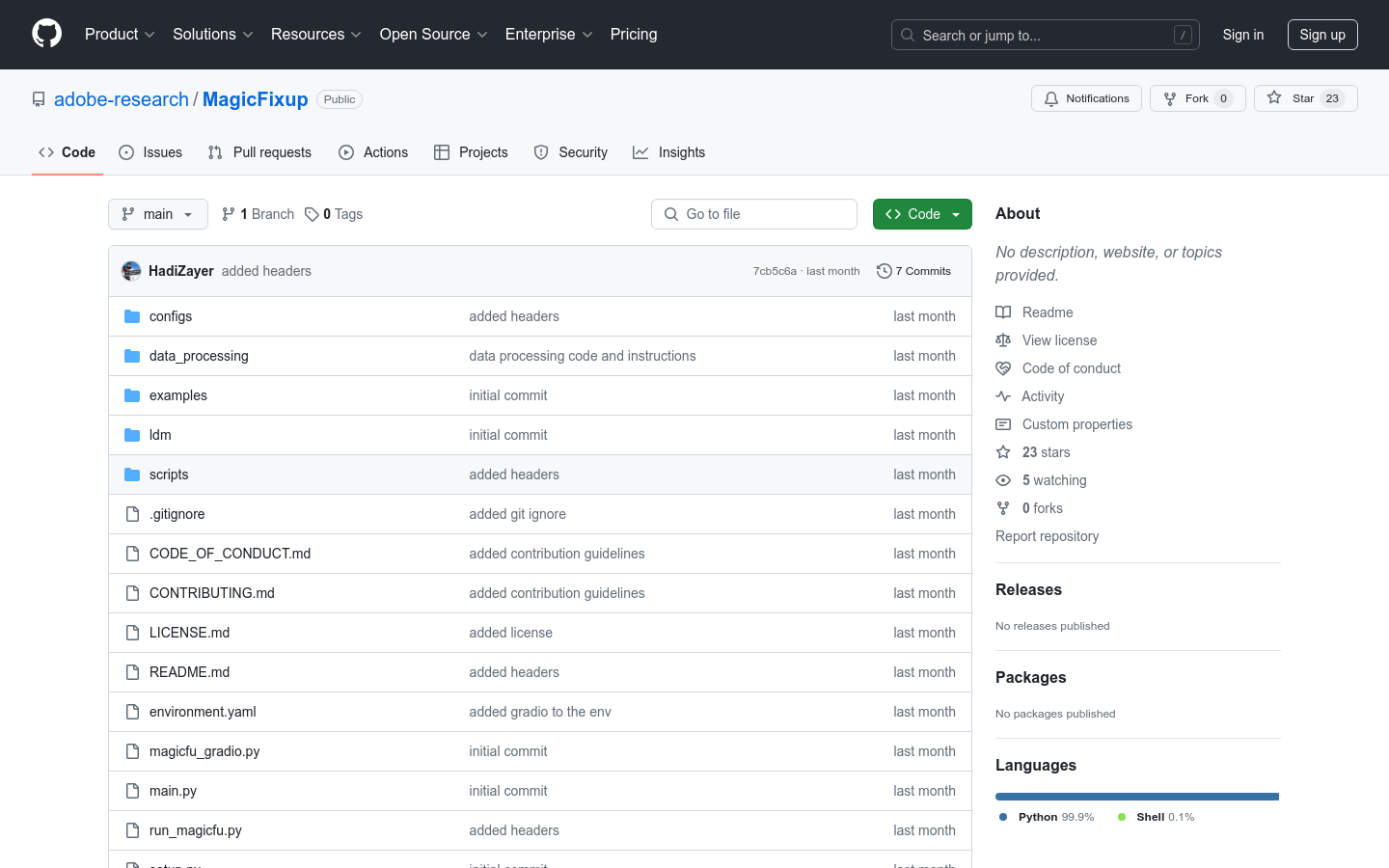

image Category

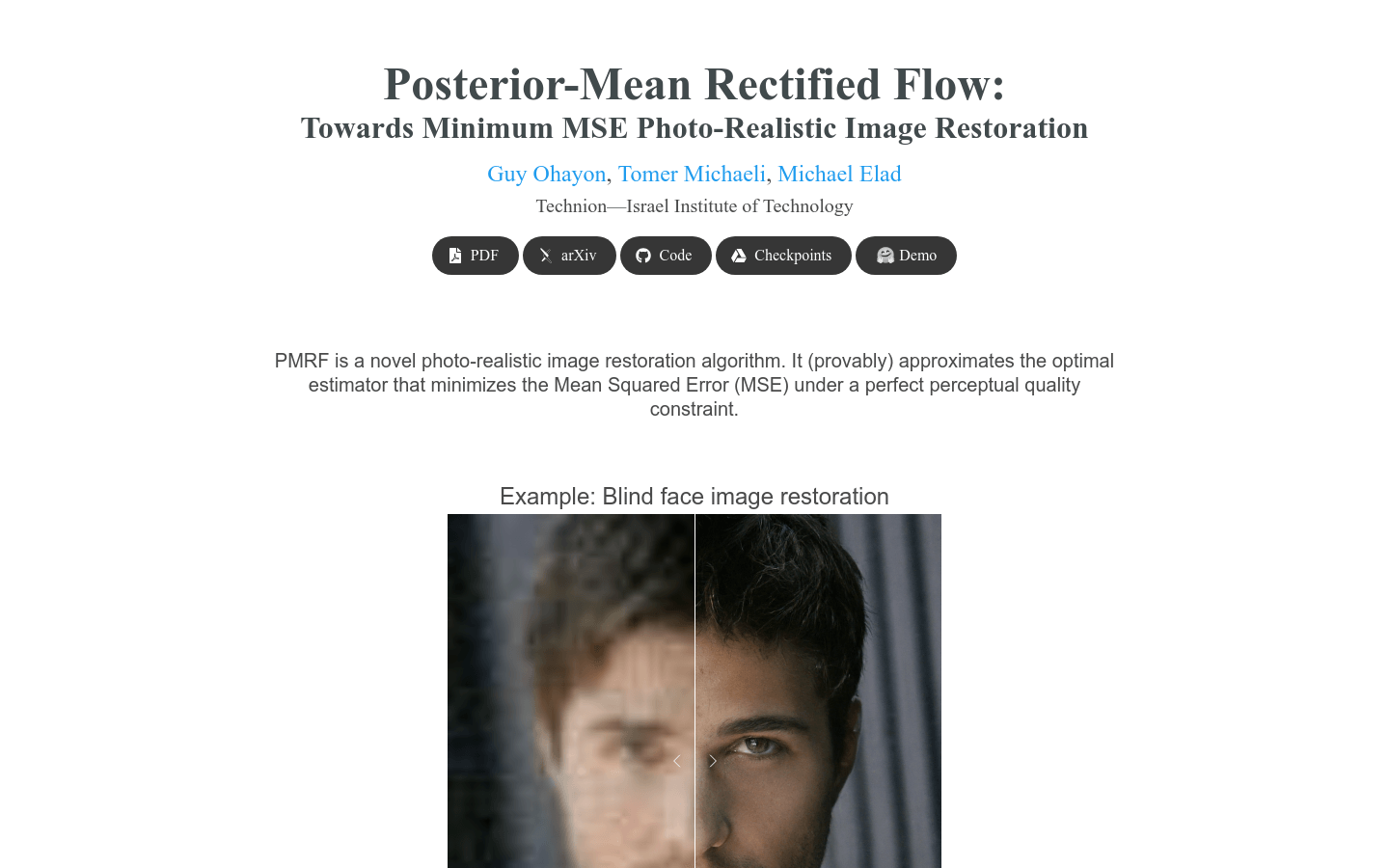

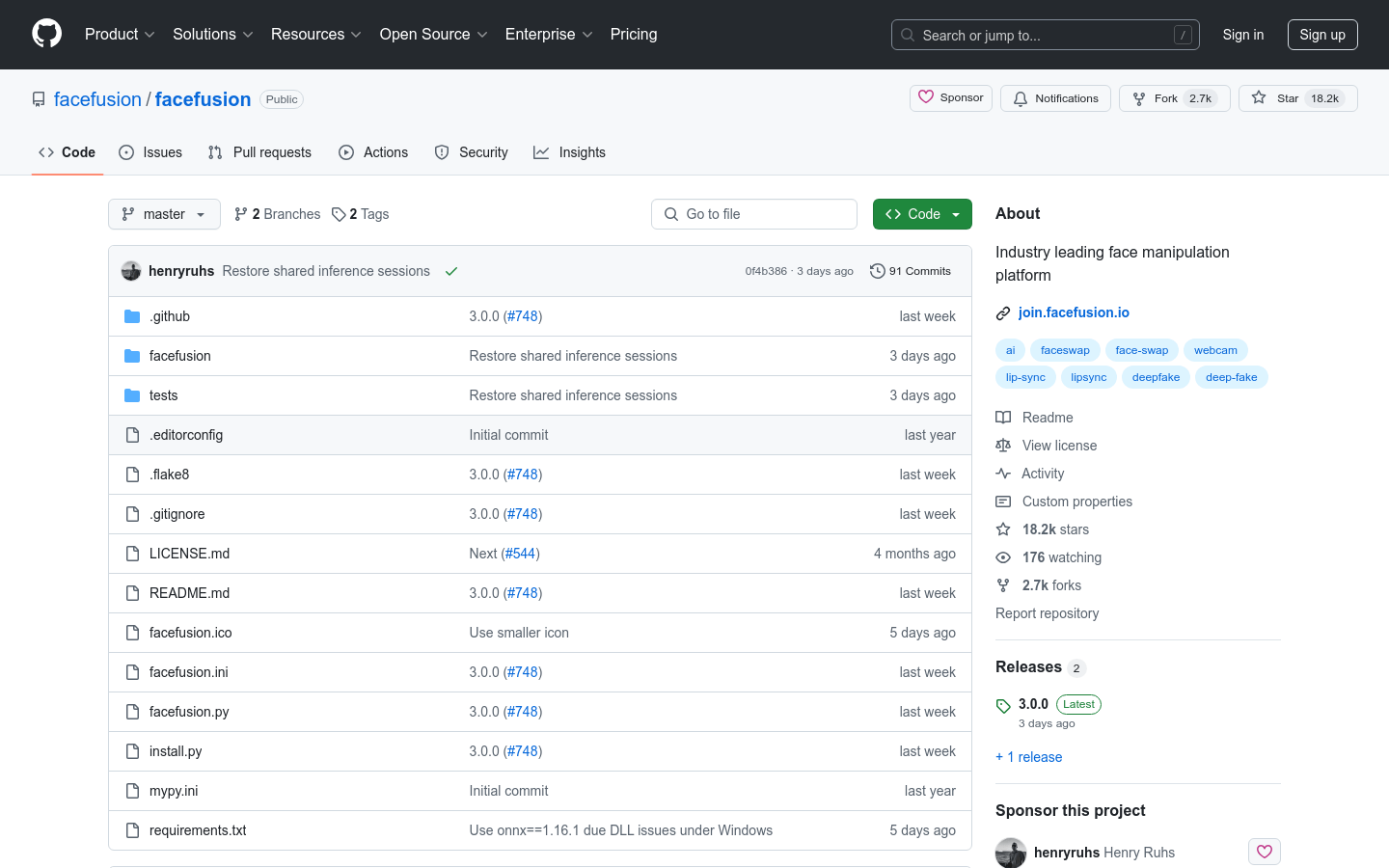

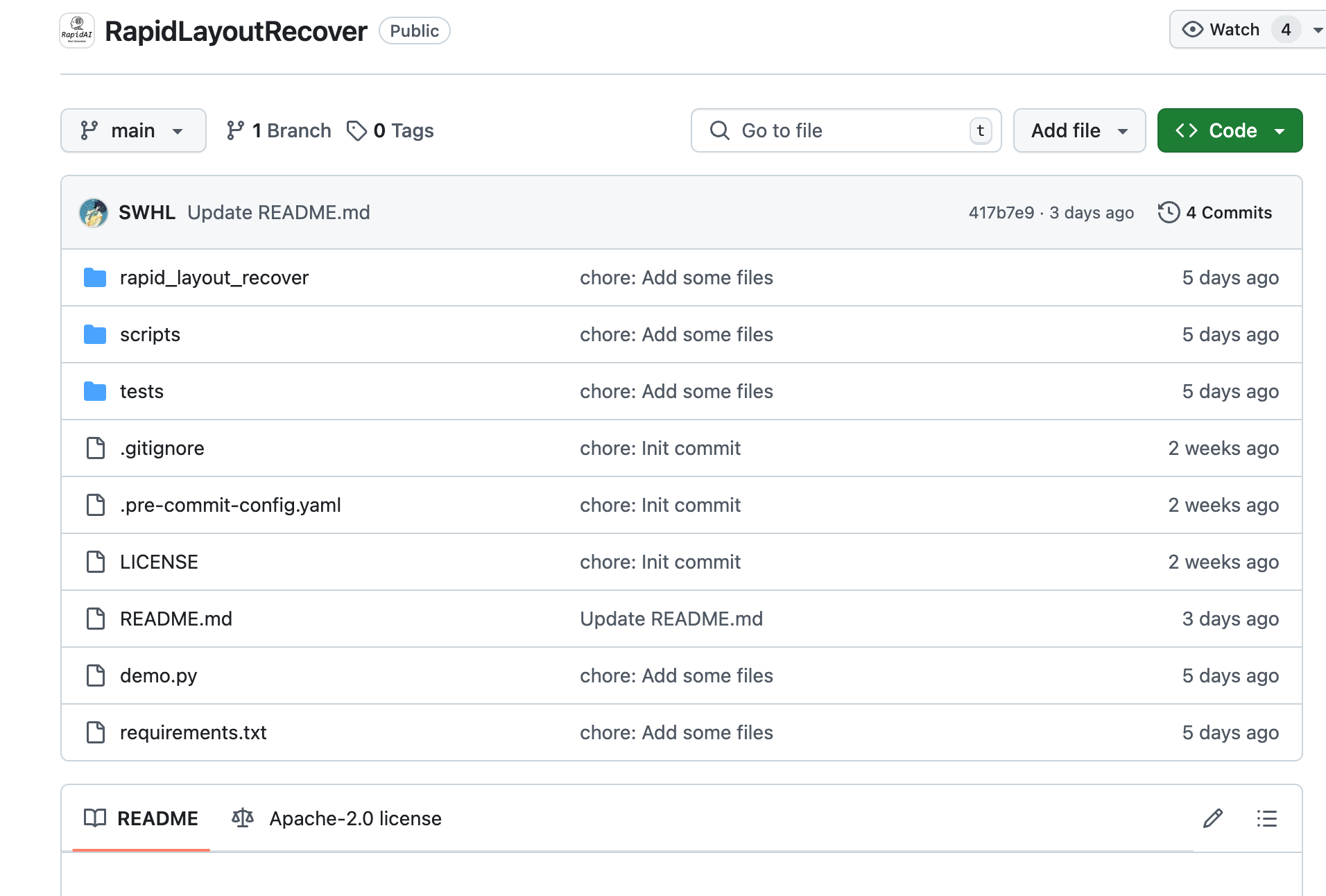

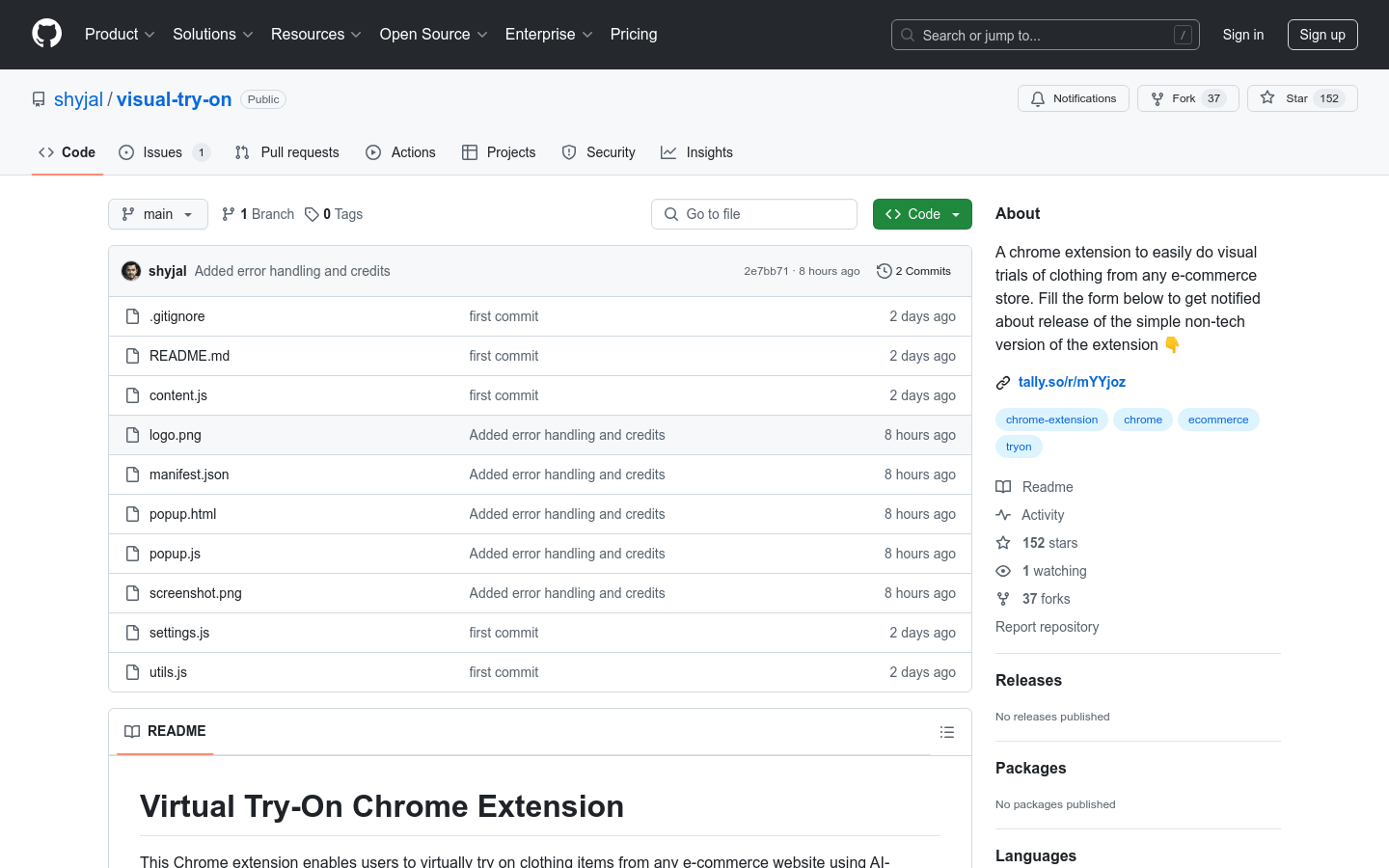

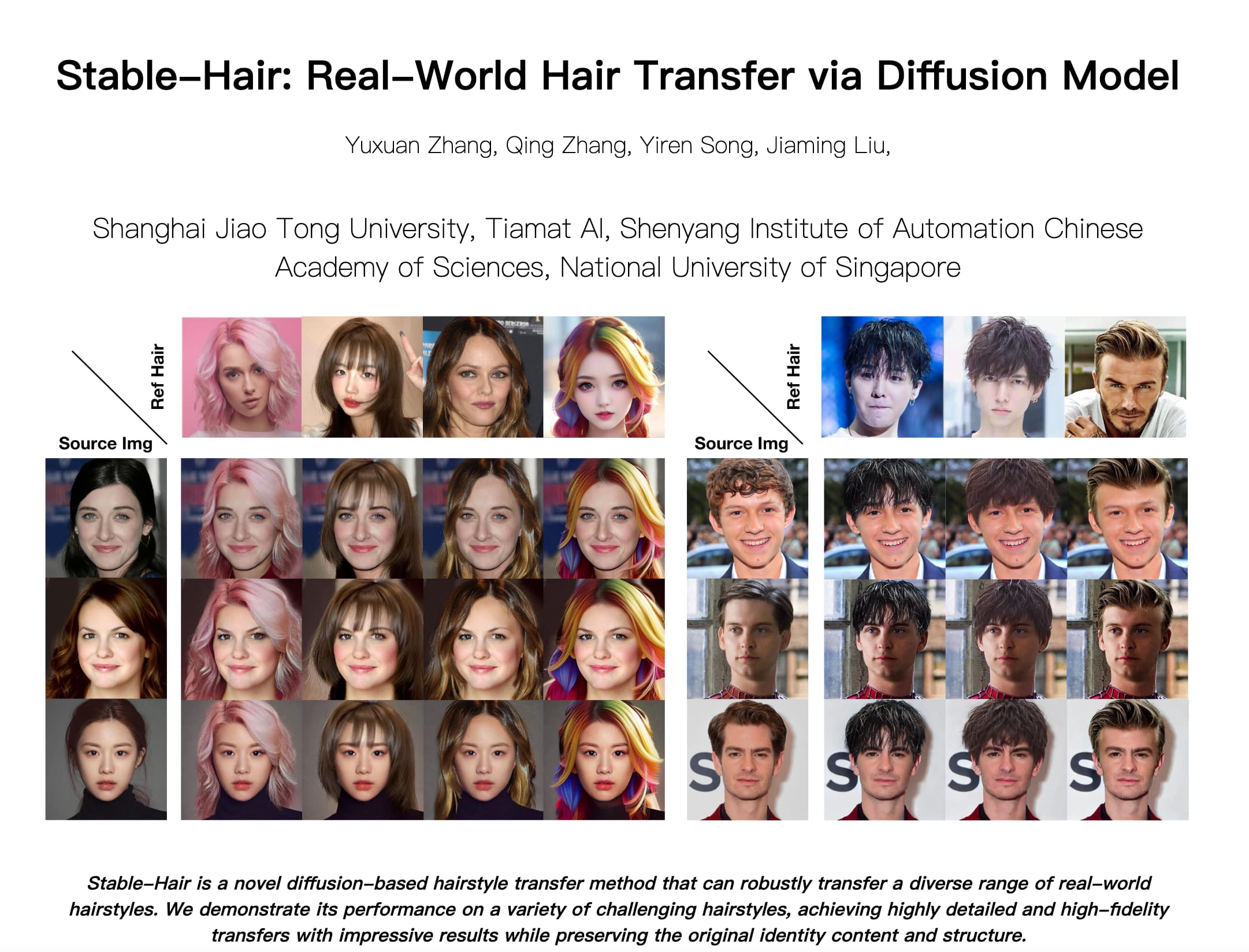

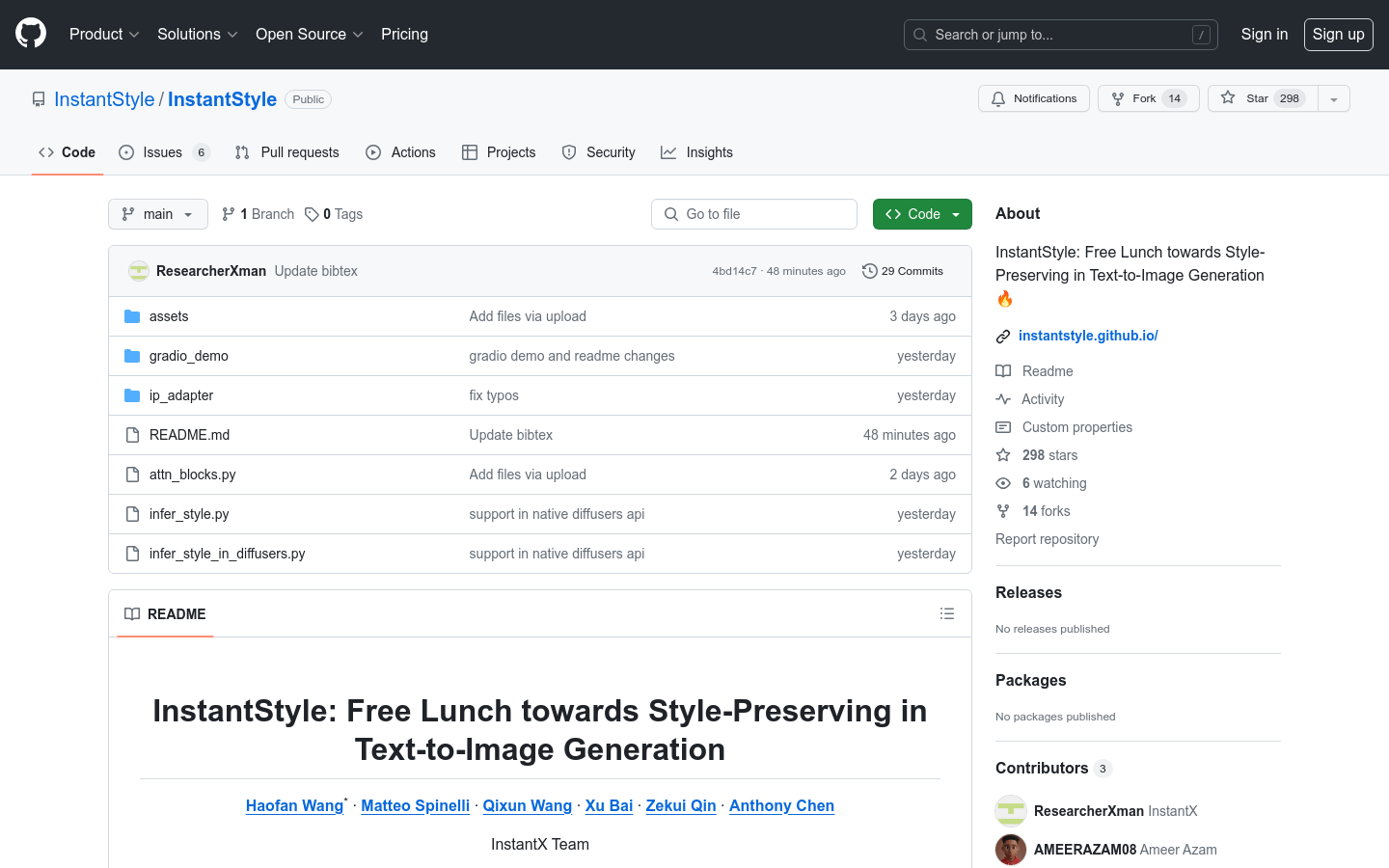

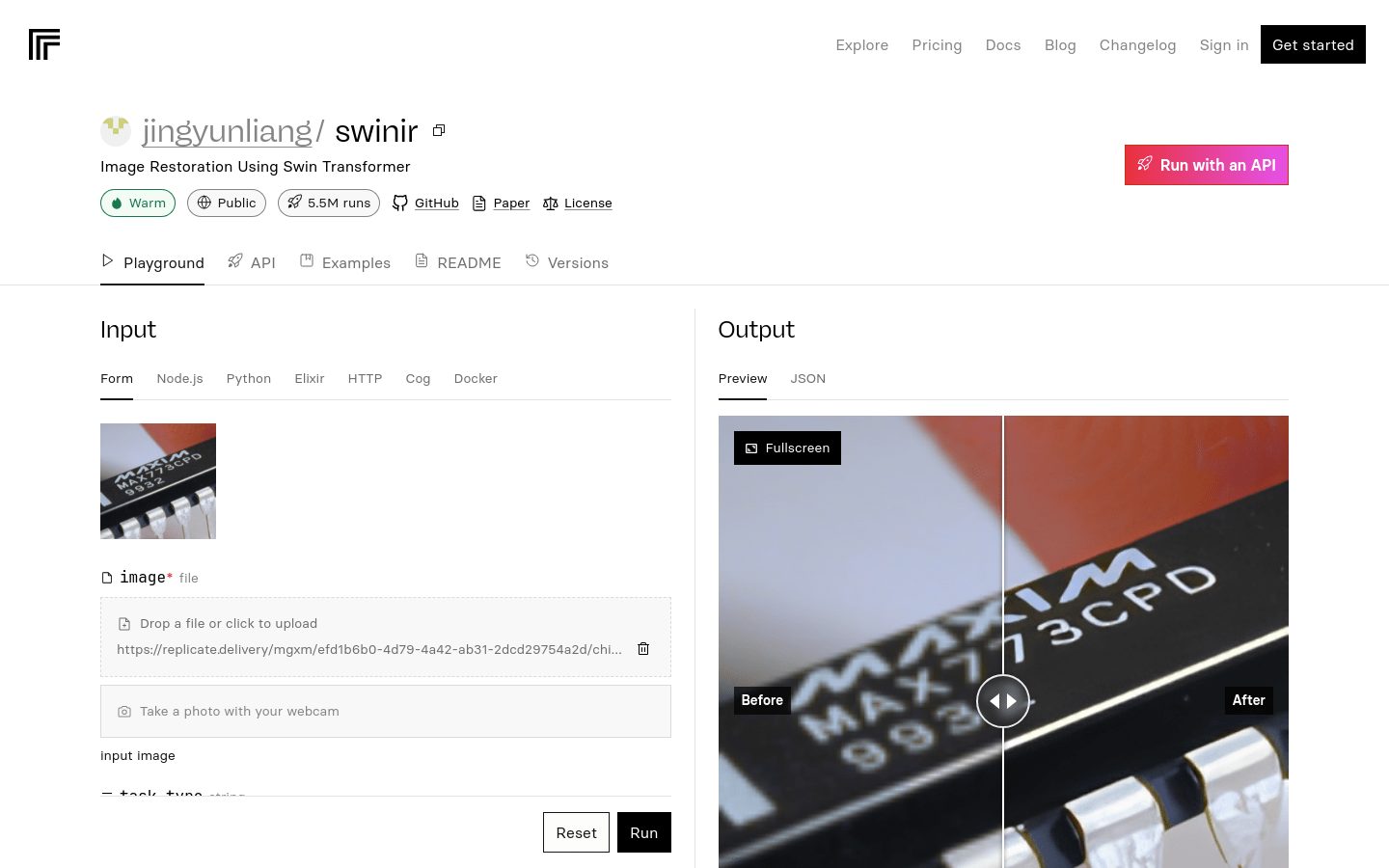

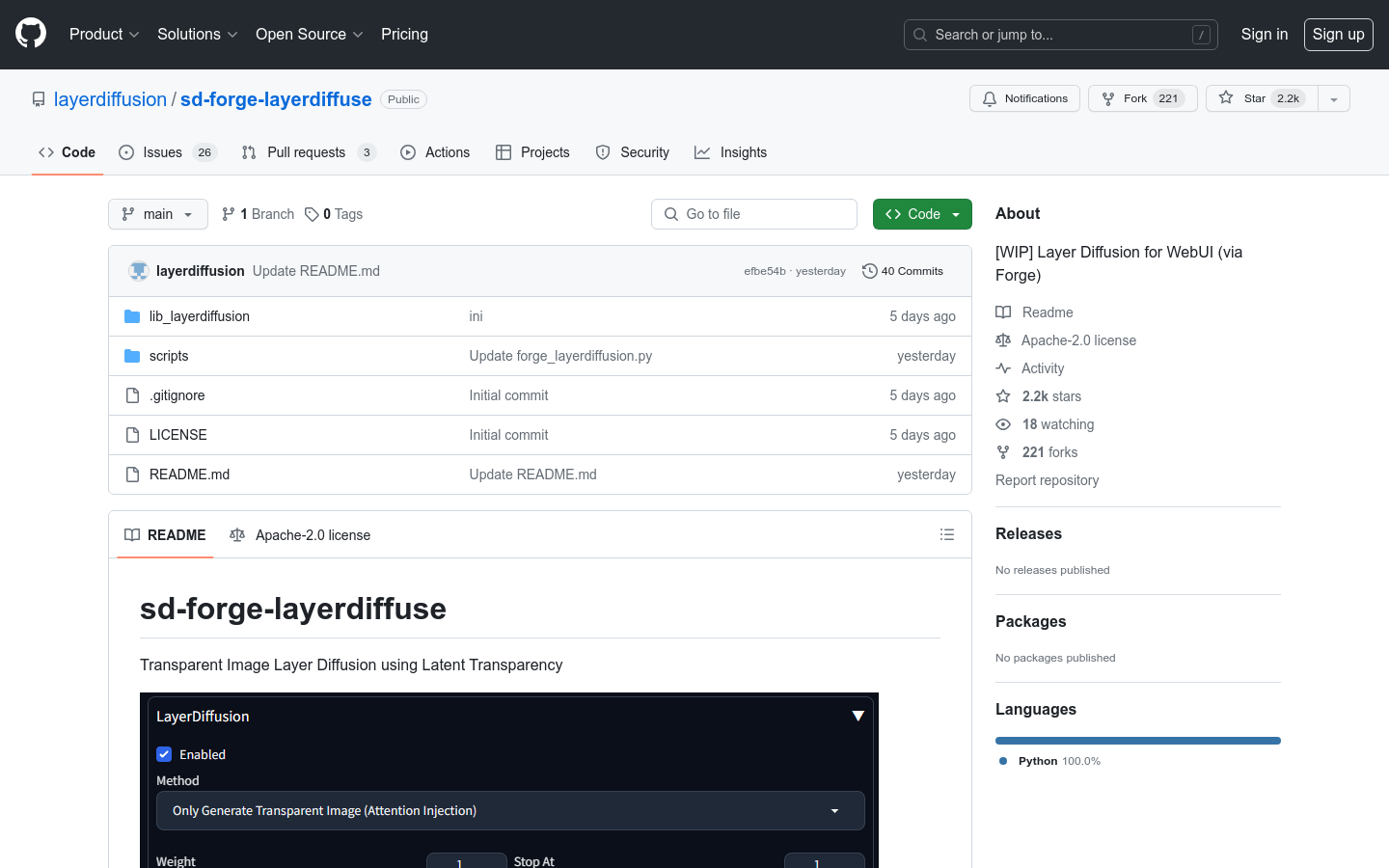

AI image editing

Found 100 AI tools

Primary Category: image

Subcategory: AI image editing

Related AI Tools

Related Subcategories

Explore other subcategories under image

Other Categories

Explore More image Tools

AI image editing (Hot): a popular subcategory under image, with 196 quality AI tools