🖼️

Image Category

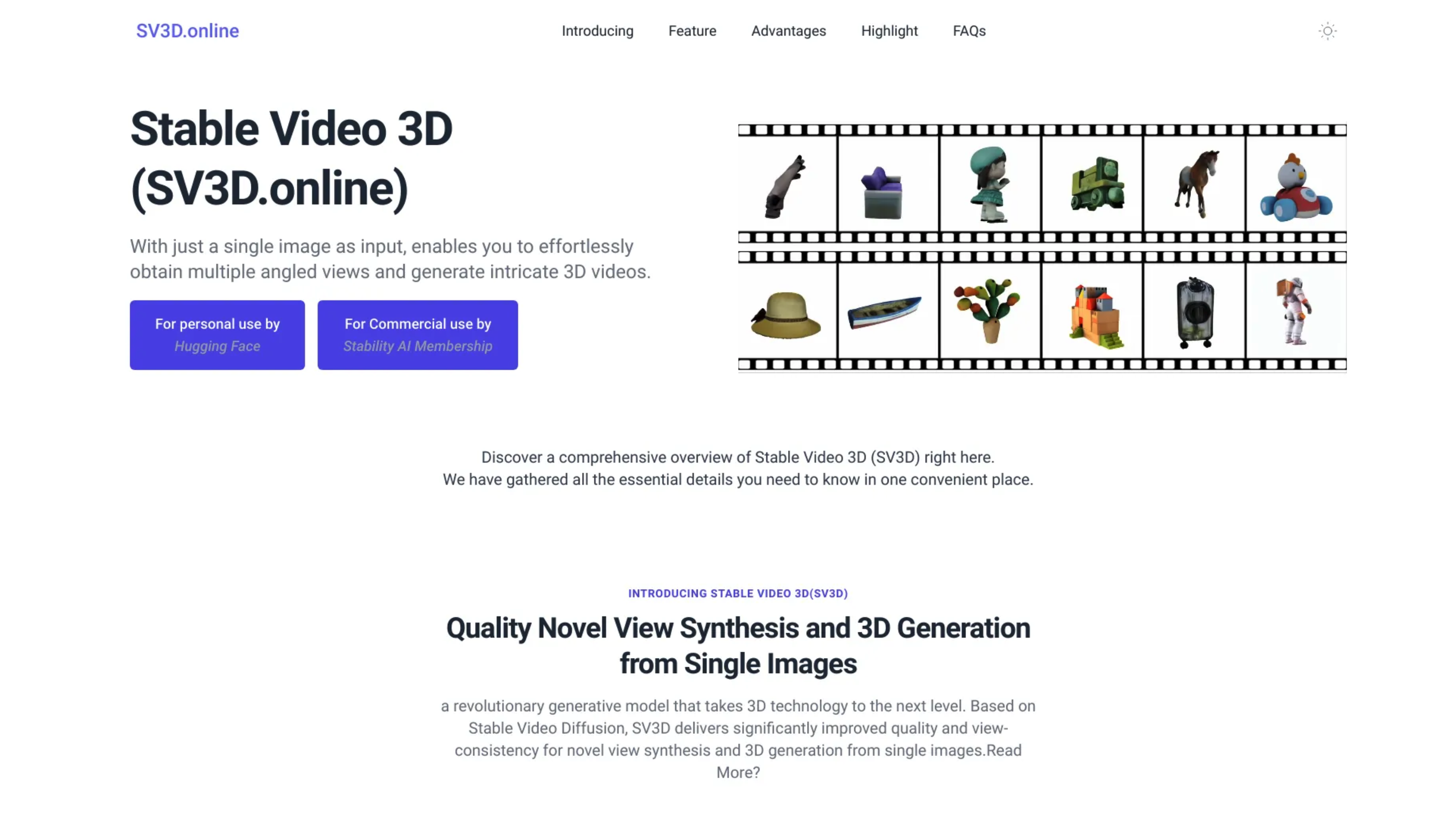

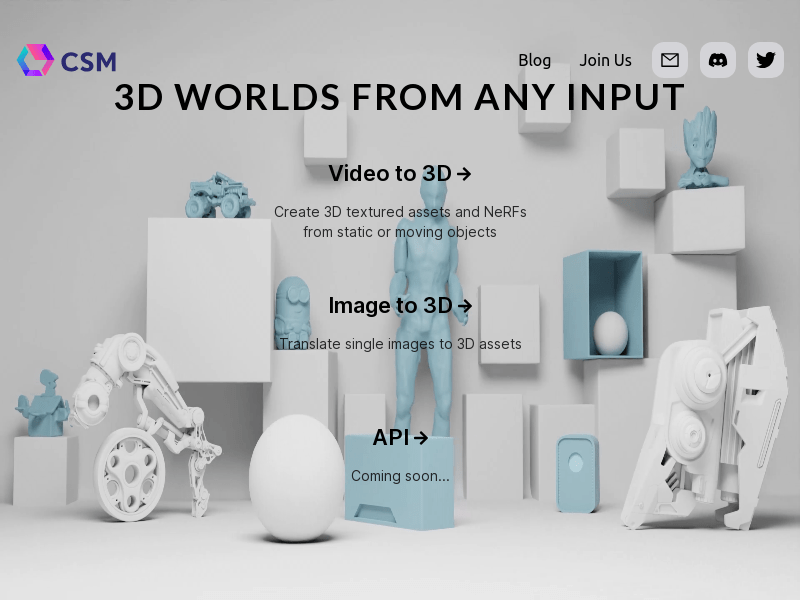

3D modeling

Found 40 AI tools

Primary Category: image

Subcategory: 3D modeling

Related AI Tools

Related Subcategories

Explore other subcategories under the image category.

Other Categories

Explore More Image Tools

3D modeling is a popular subcategory of the image category, with 40 quality AI tools.