design Category

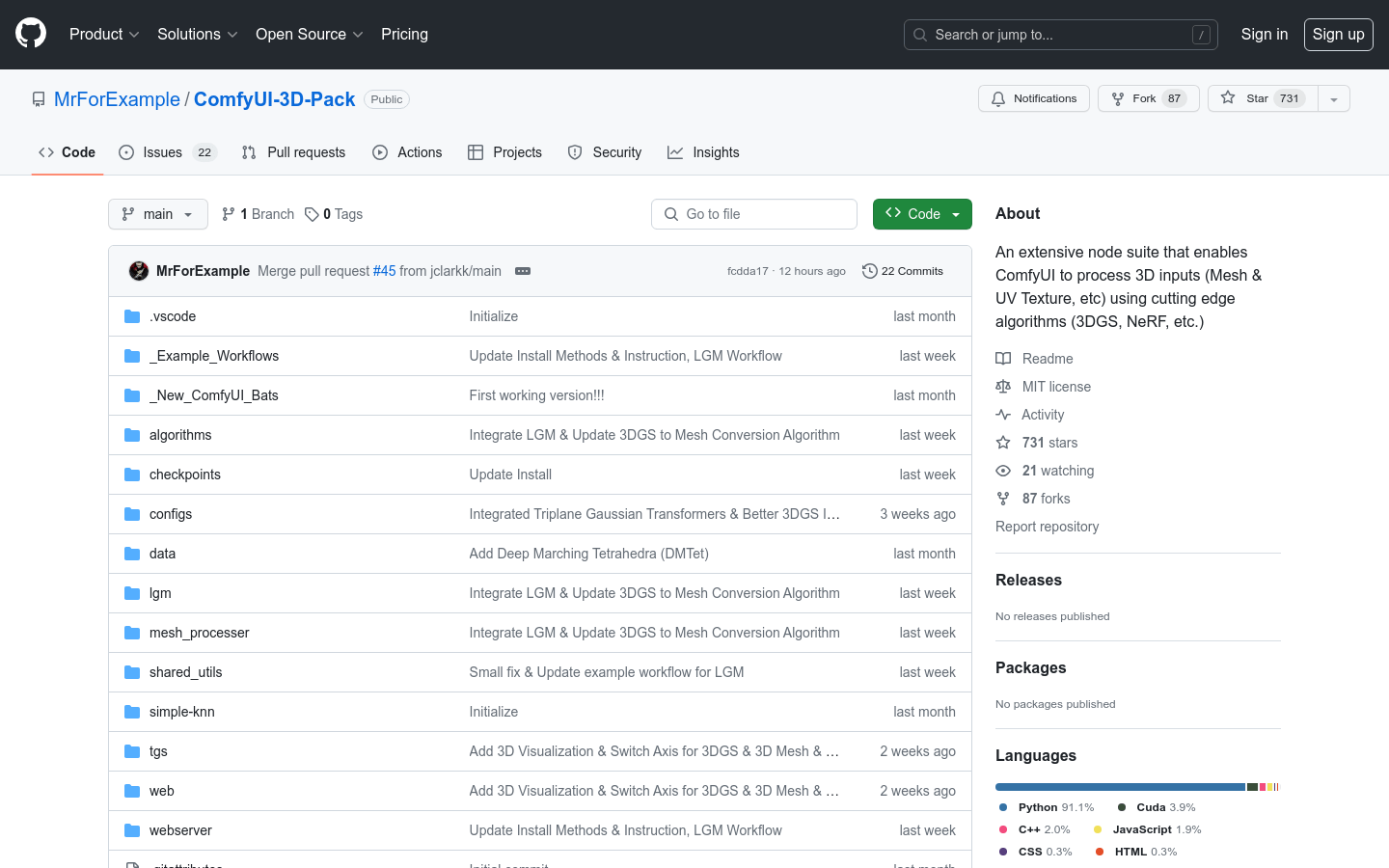

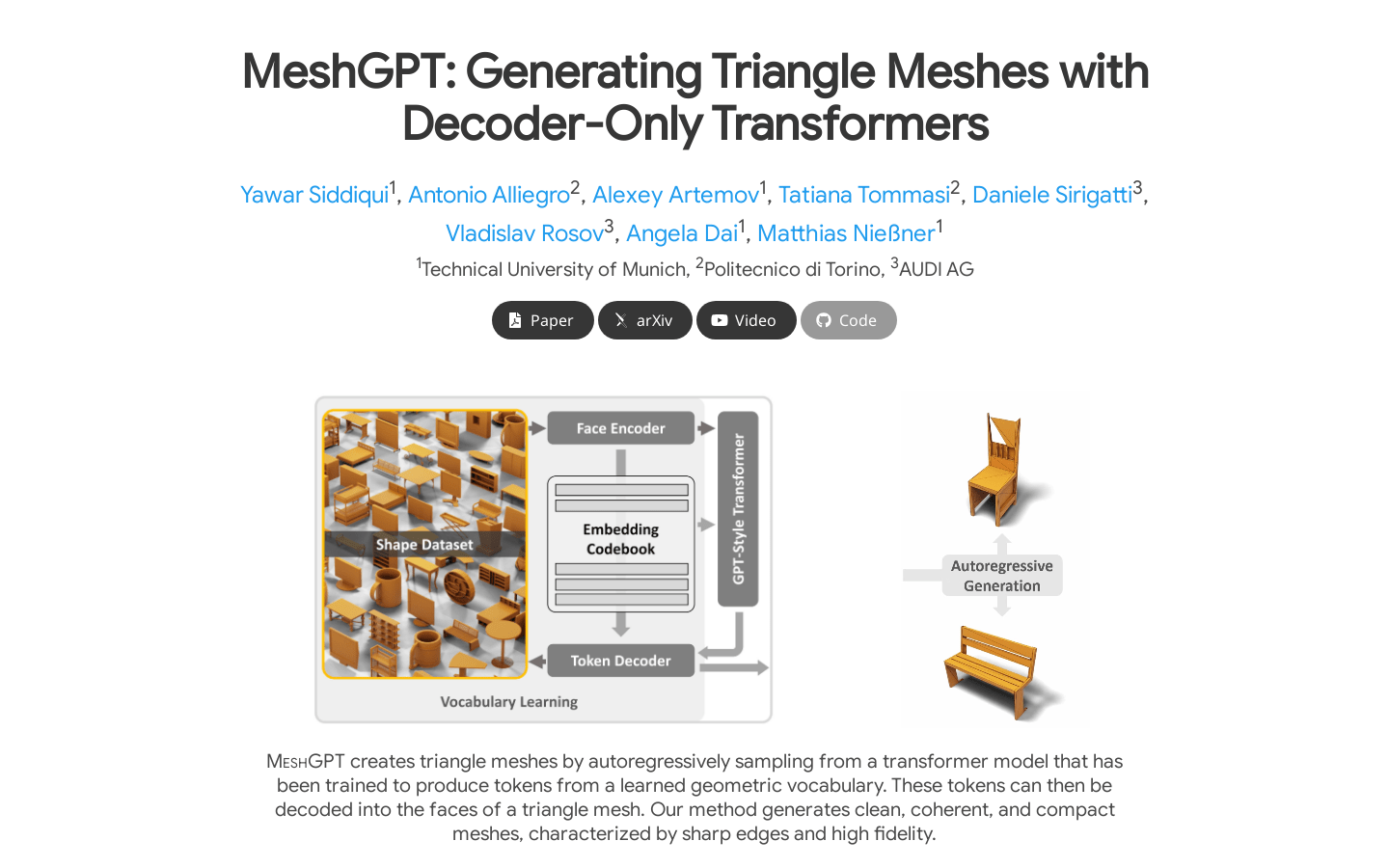

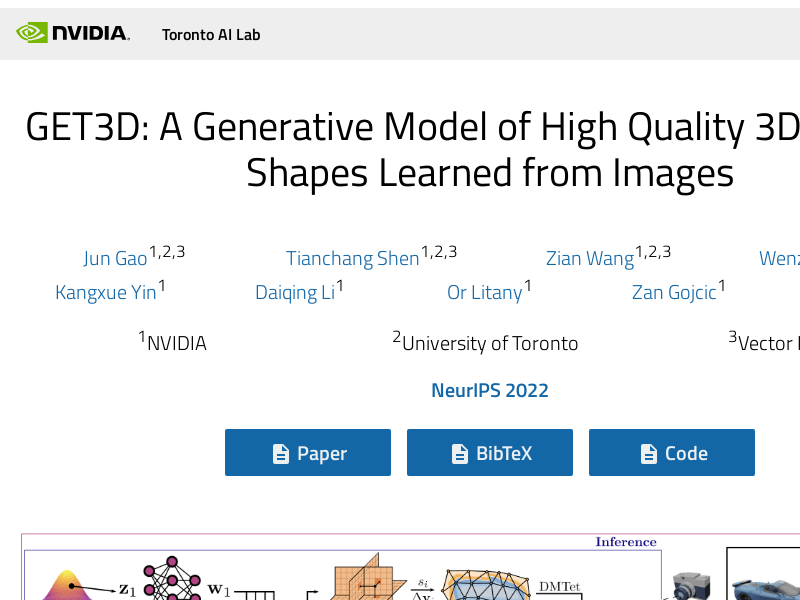

AI 3D tools

Found 33 AI tools

Primary Category: design

Subcategory: AI 3D tools

AI 3D tools is a popular subcategory under design, with 33 quality AI tools.