🎬

video Category

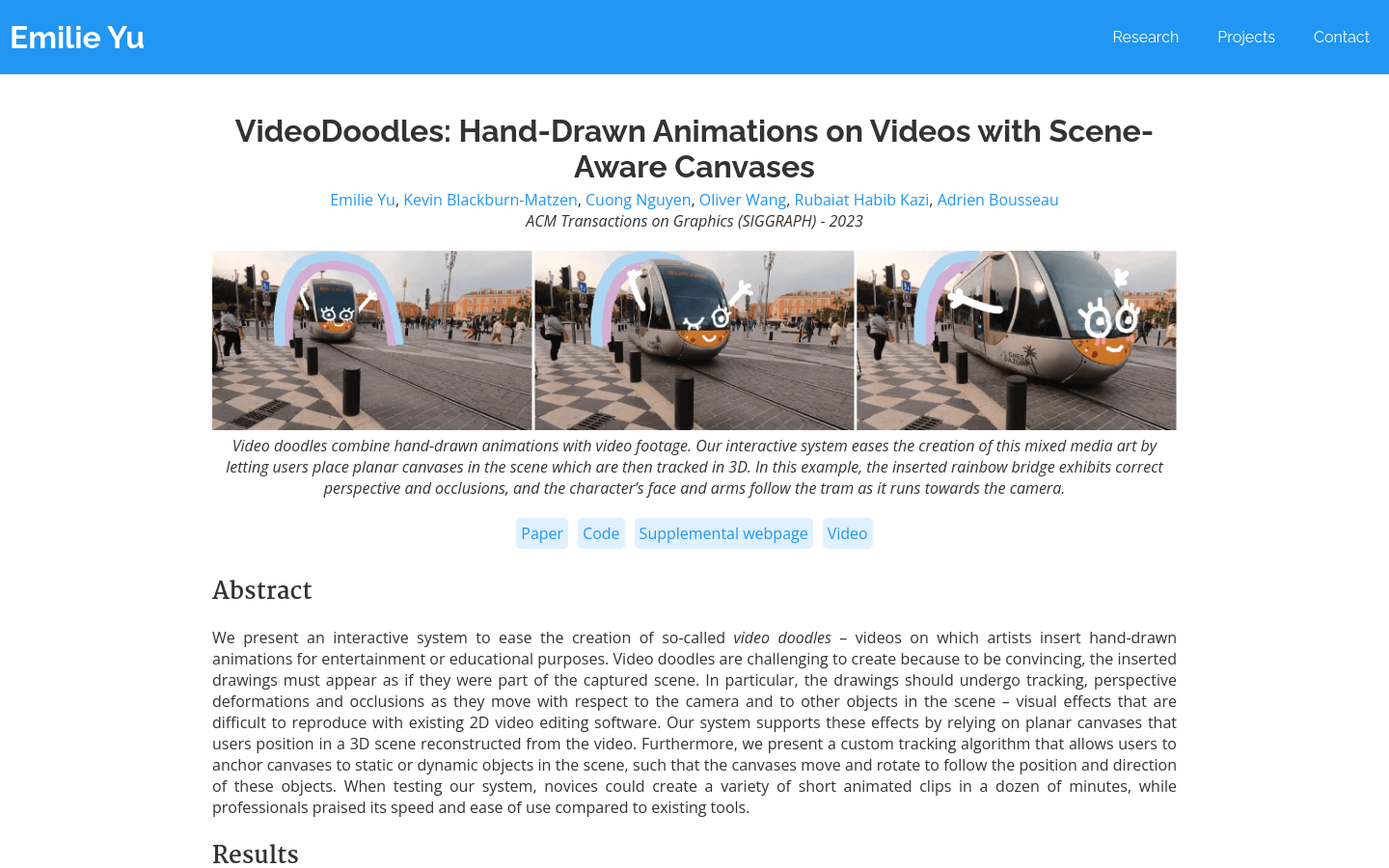

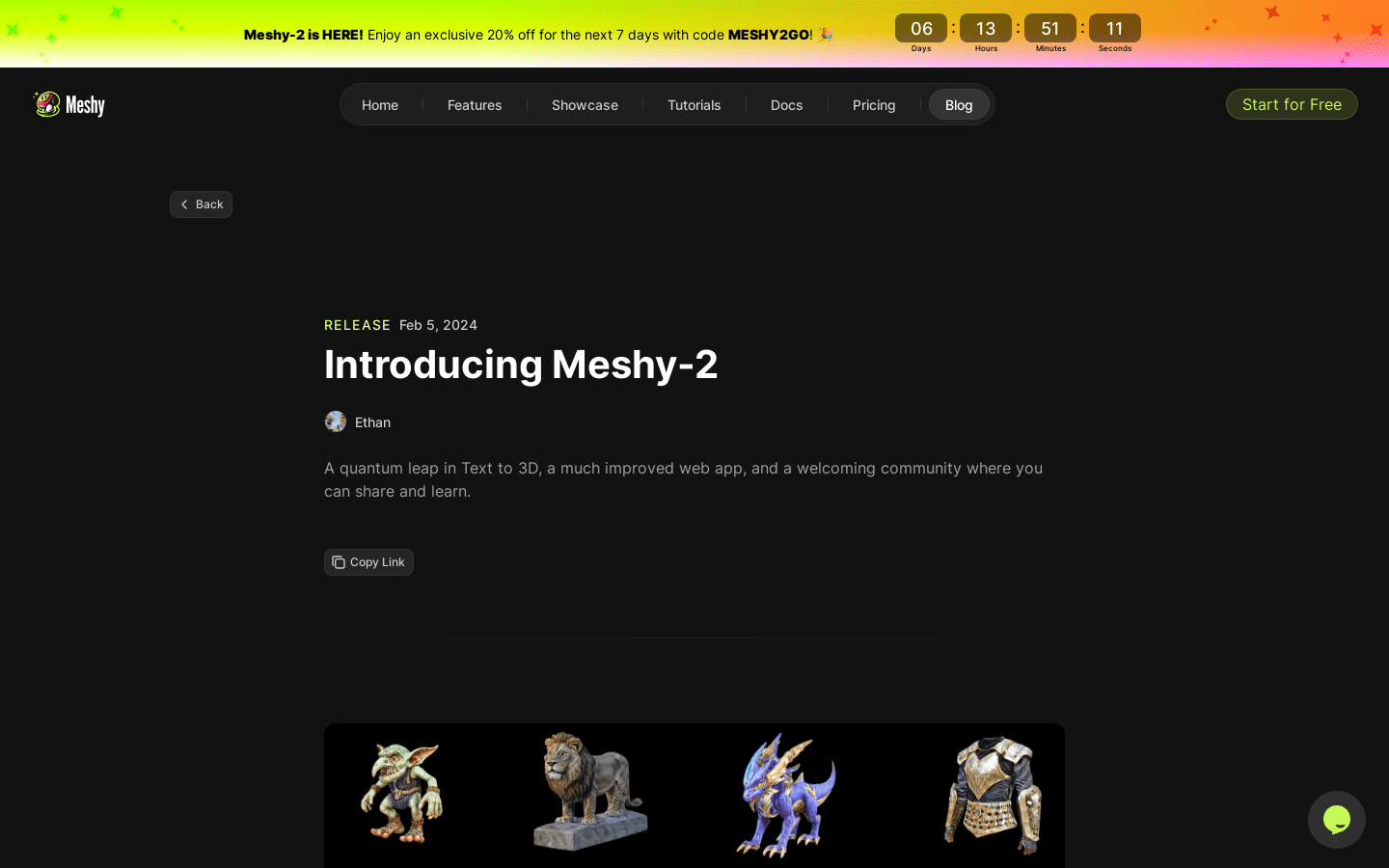

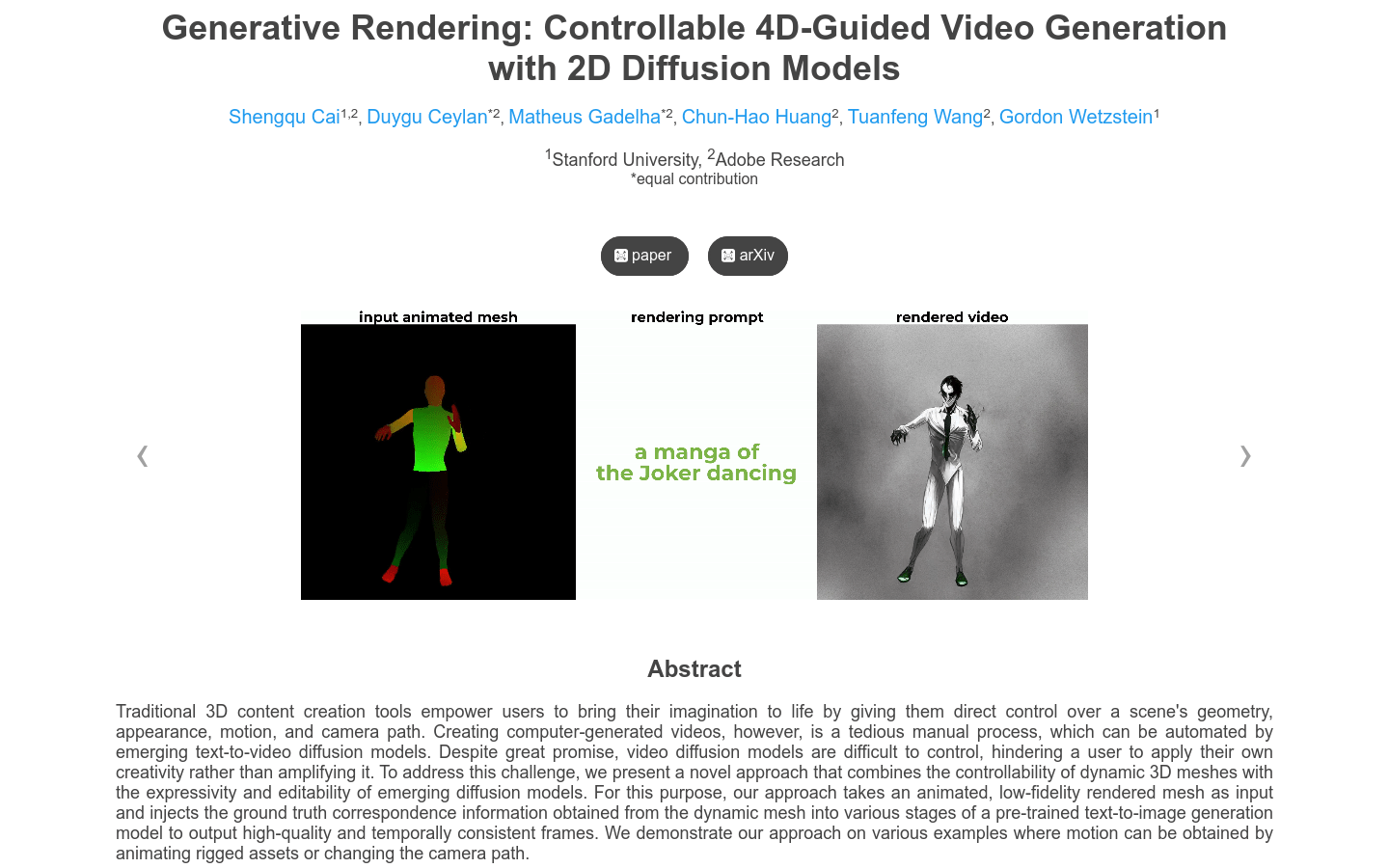

AI 3D tools

Found 7 AI tools


Primary Category: video

Subcategory: AI 3D tools


Related AI Tools


Related Subcategories

Explore other subcategories under video

Other Categories


Explore More video Tools

AI 3D tools is a popular subcategory under video, with 7 quality AI tools.