💻

programming Category

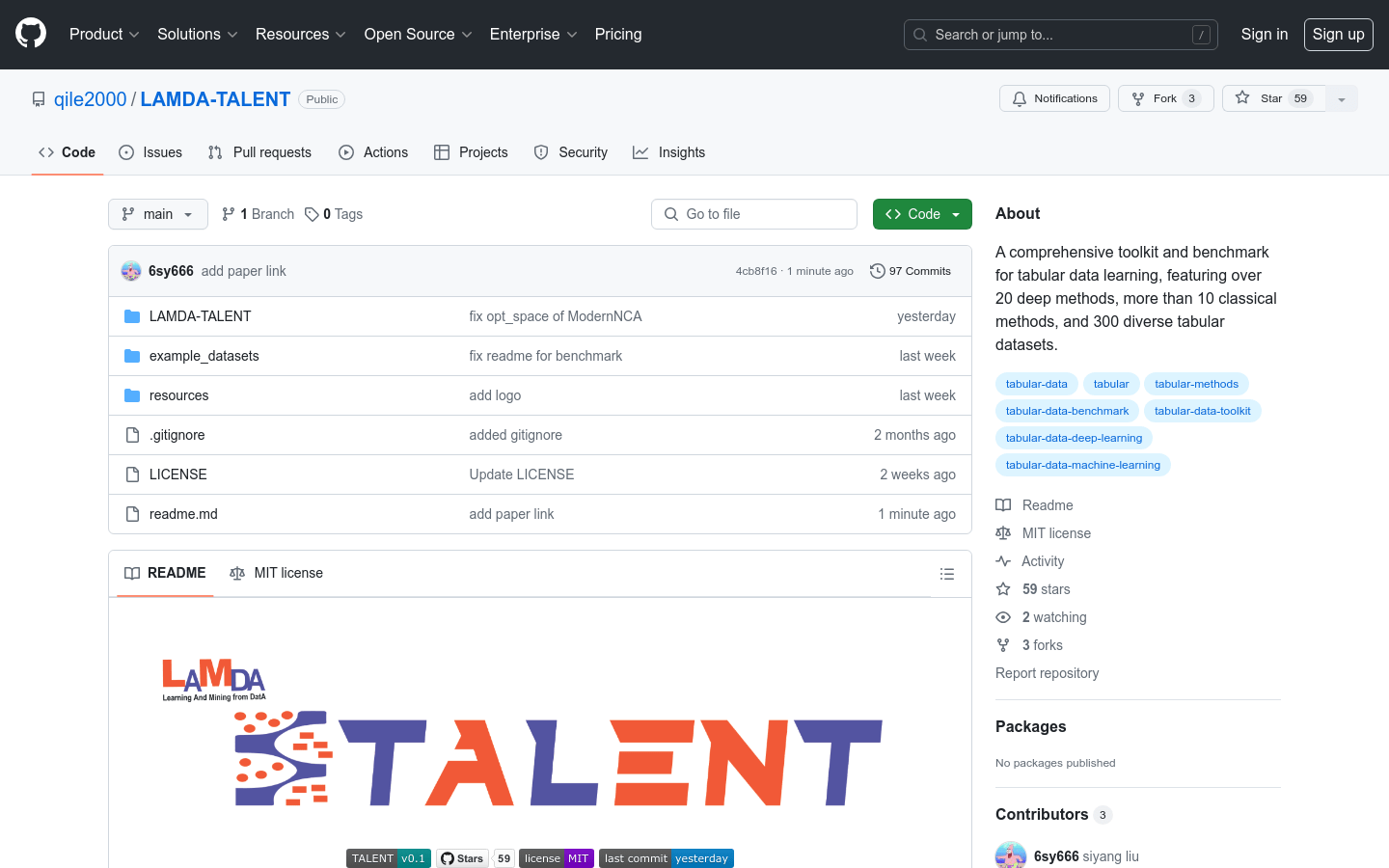

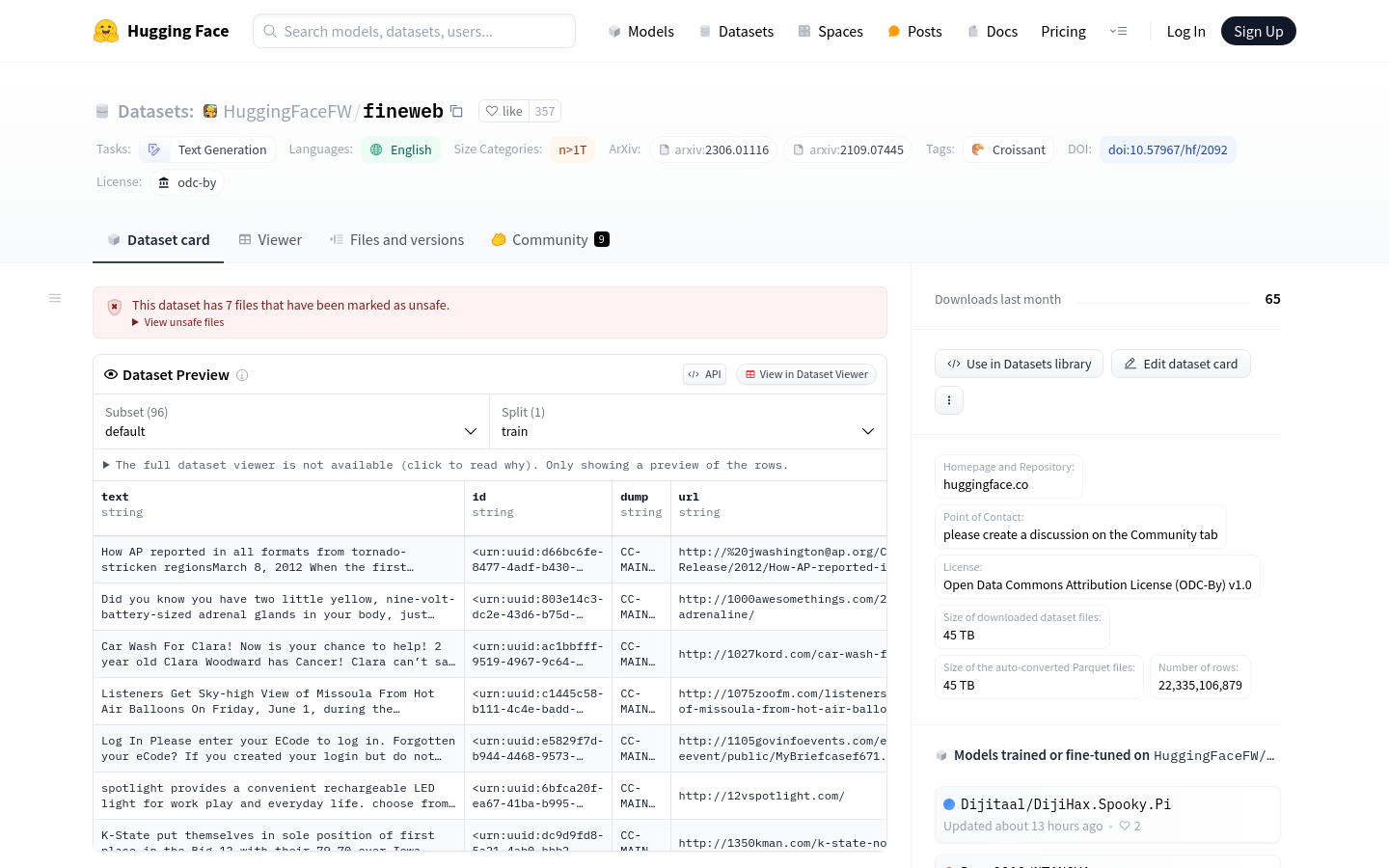

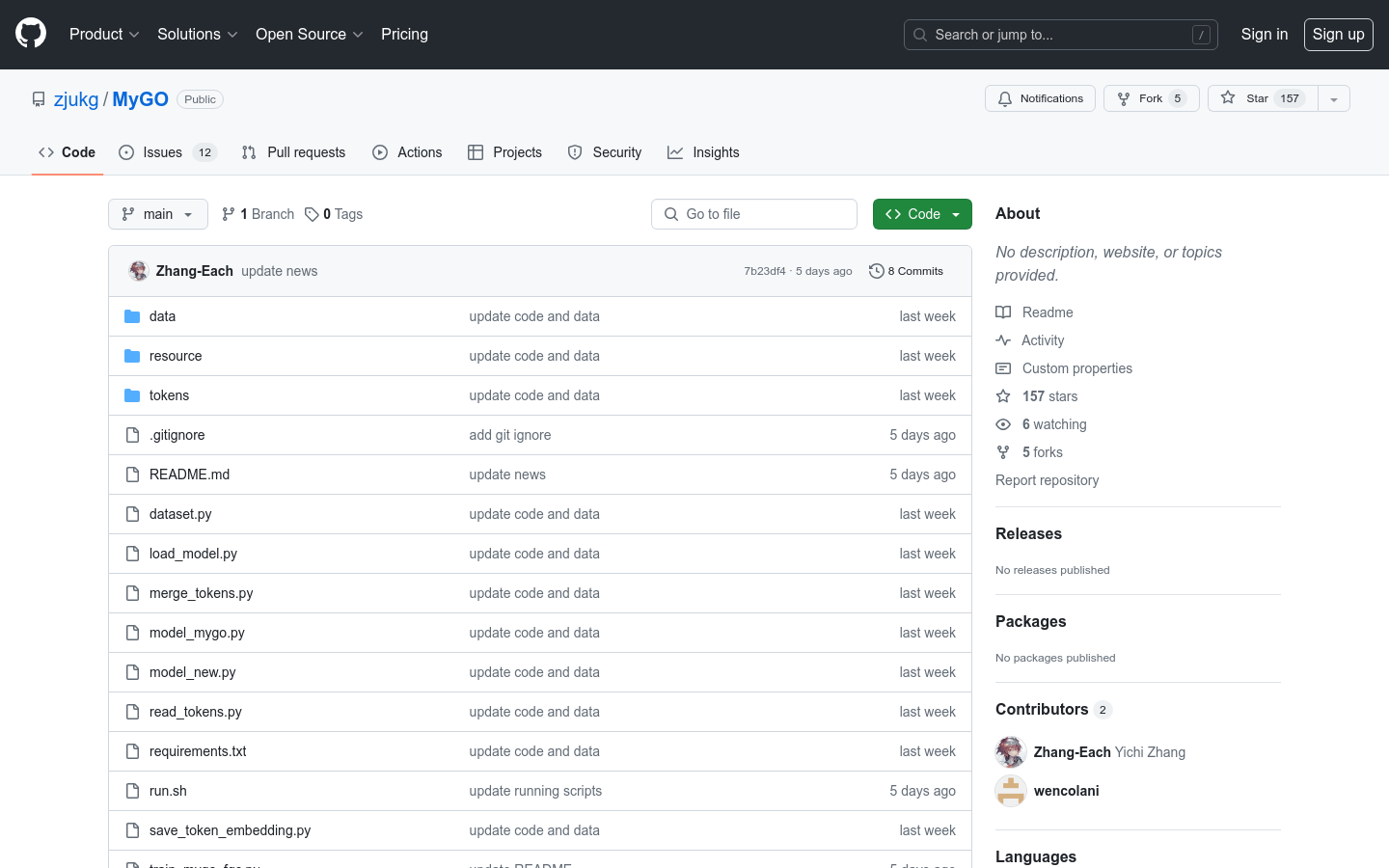

AI data mining

Found 23 AI tools


Primary Category: programming

Subcategory: AI data mining


Related AI Tools


Related Subcategories

Explore other subcategories under programming

Other Categories


Explore More programming Tools

AI data mining is a popular ("Hot") subcategory under programming, with 23 quality AI tools.