💻

programming Category

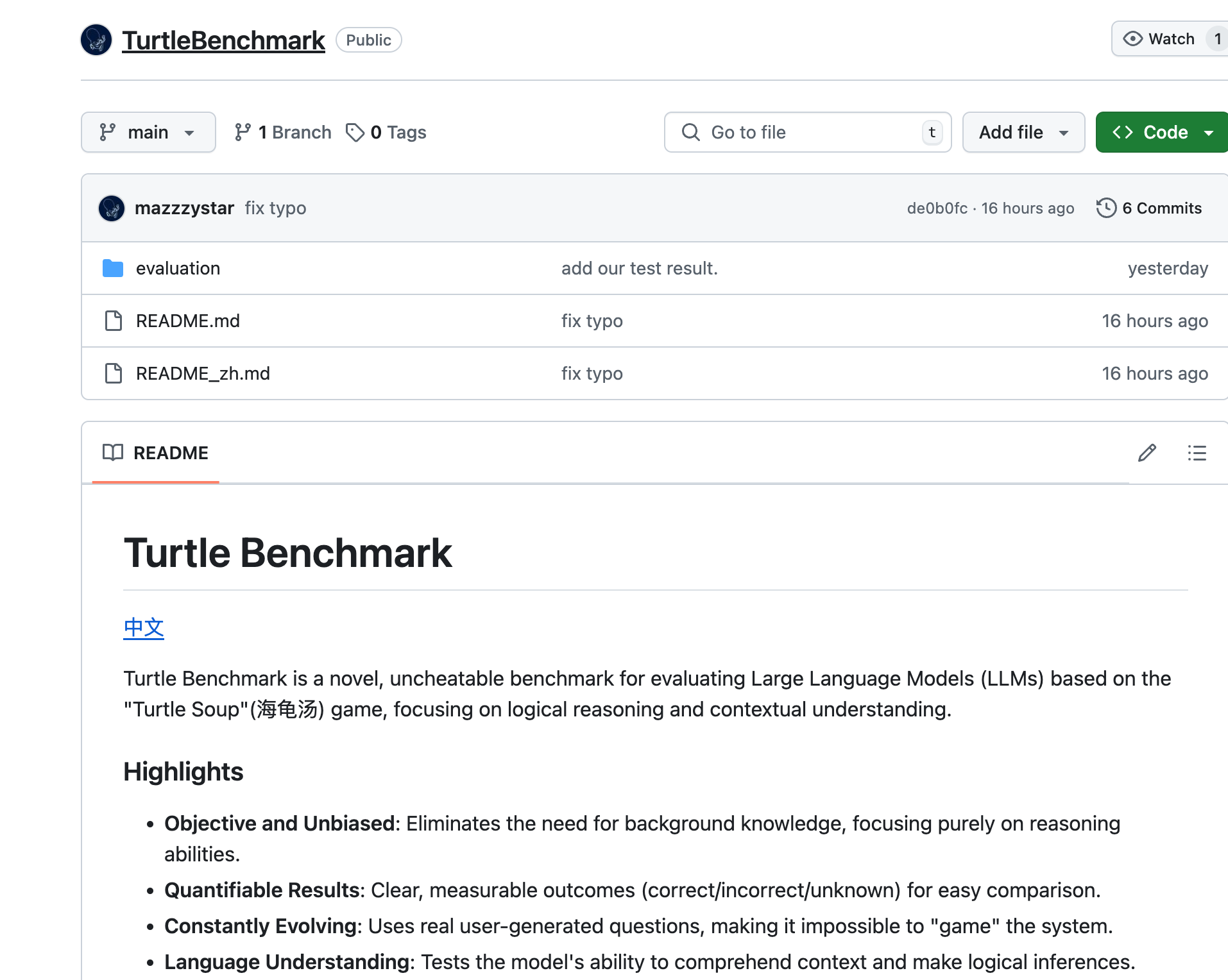

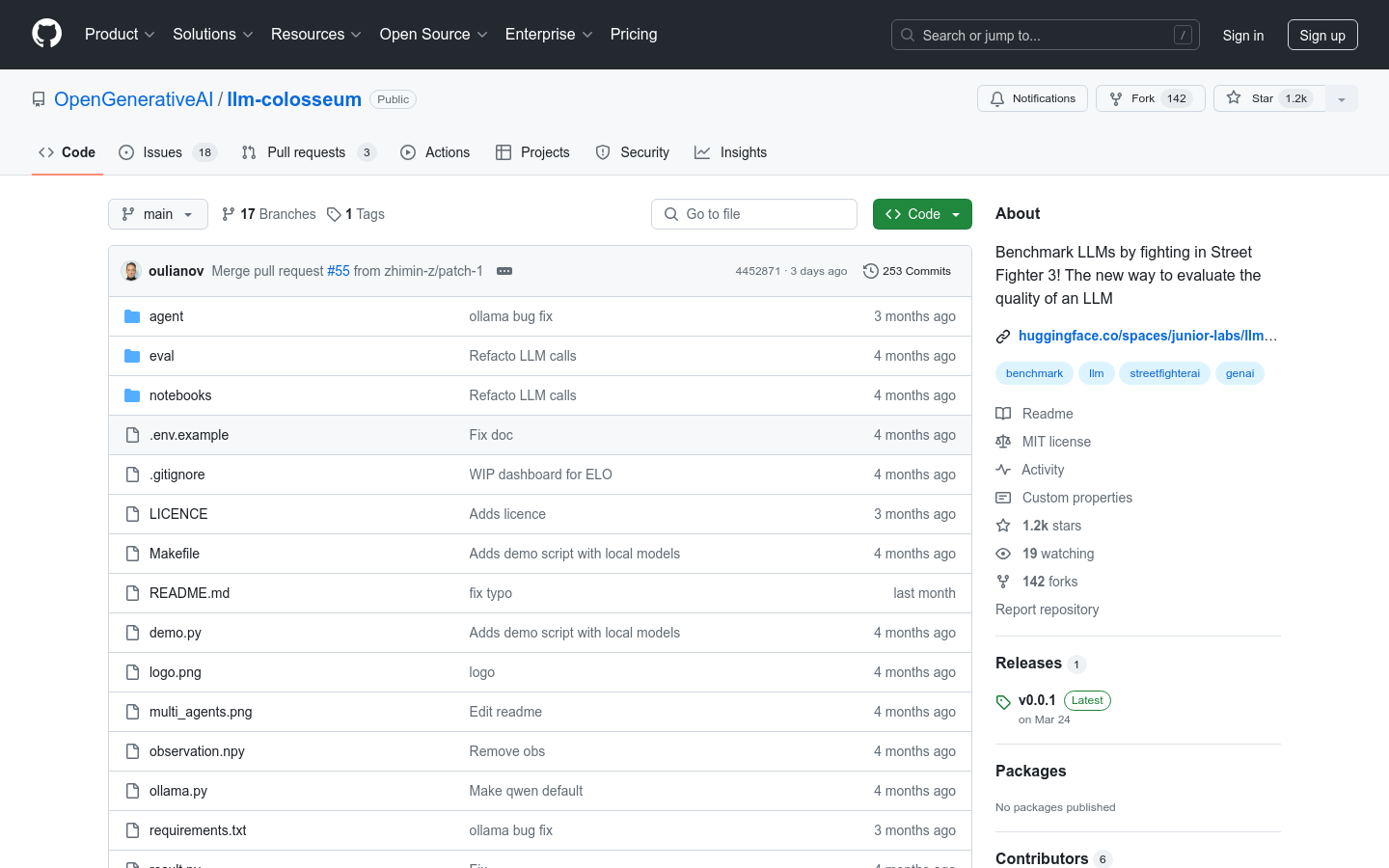

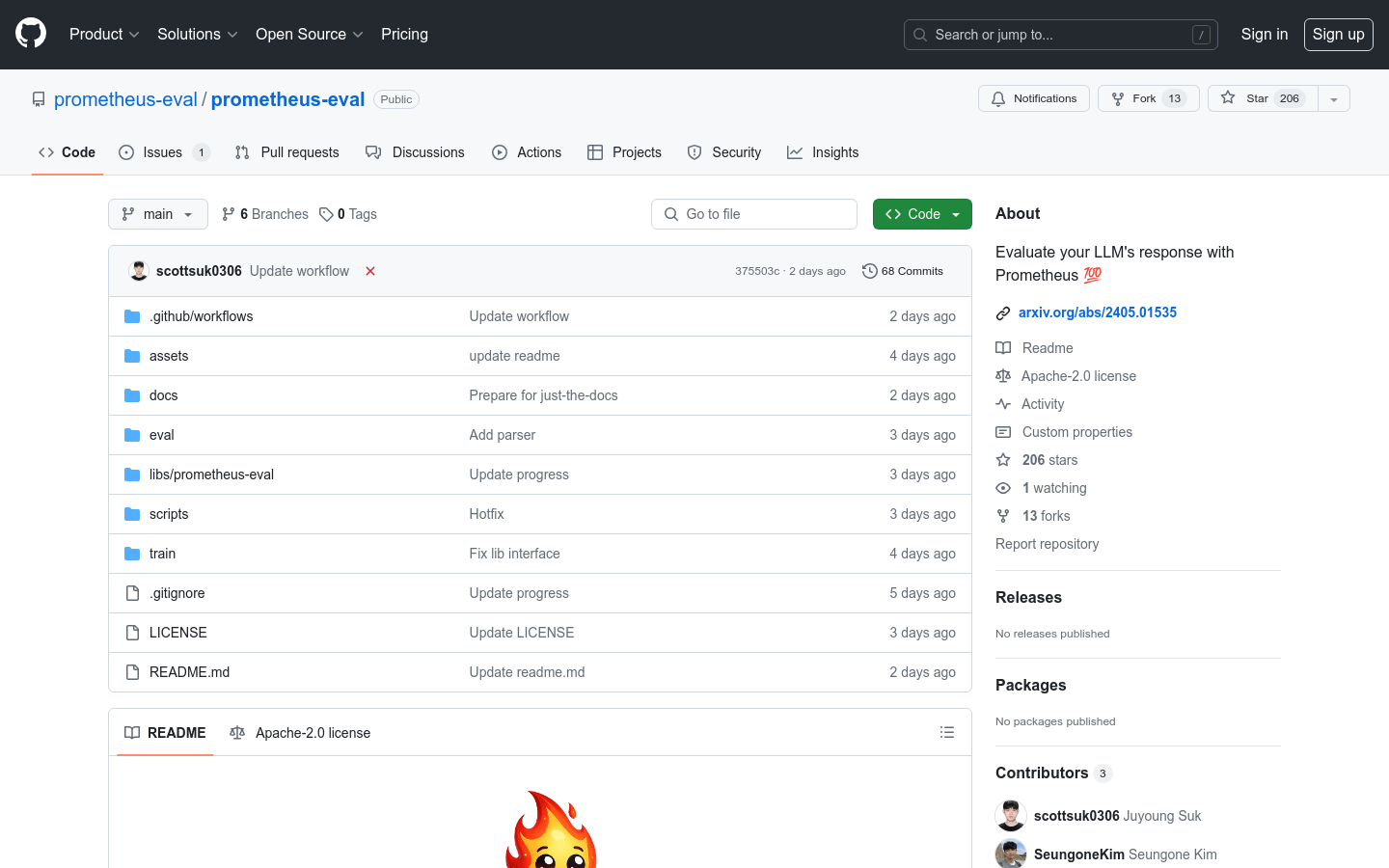

AI model evaluation

Found 8 AI tools


Primary Category: programming

Subcategory: AI model evaluation





AI model evaluation is a popular subcategory under programming, with 8 quality AI tools.