💼

productive forces Category

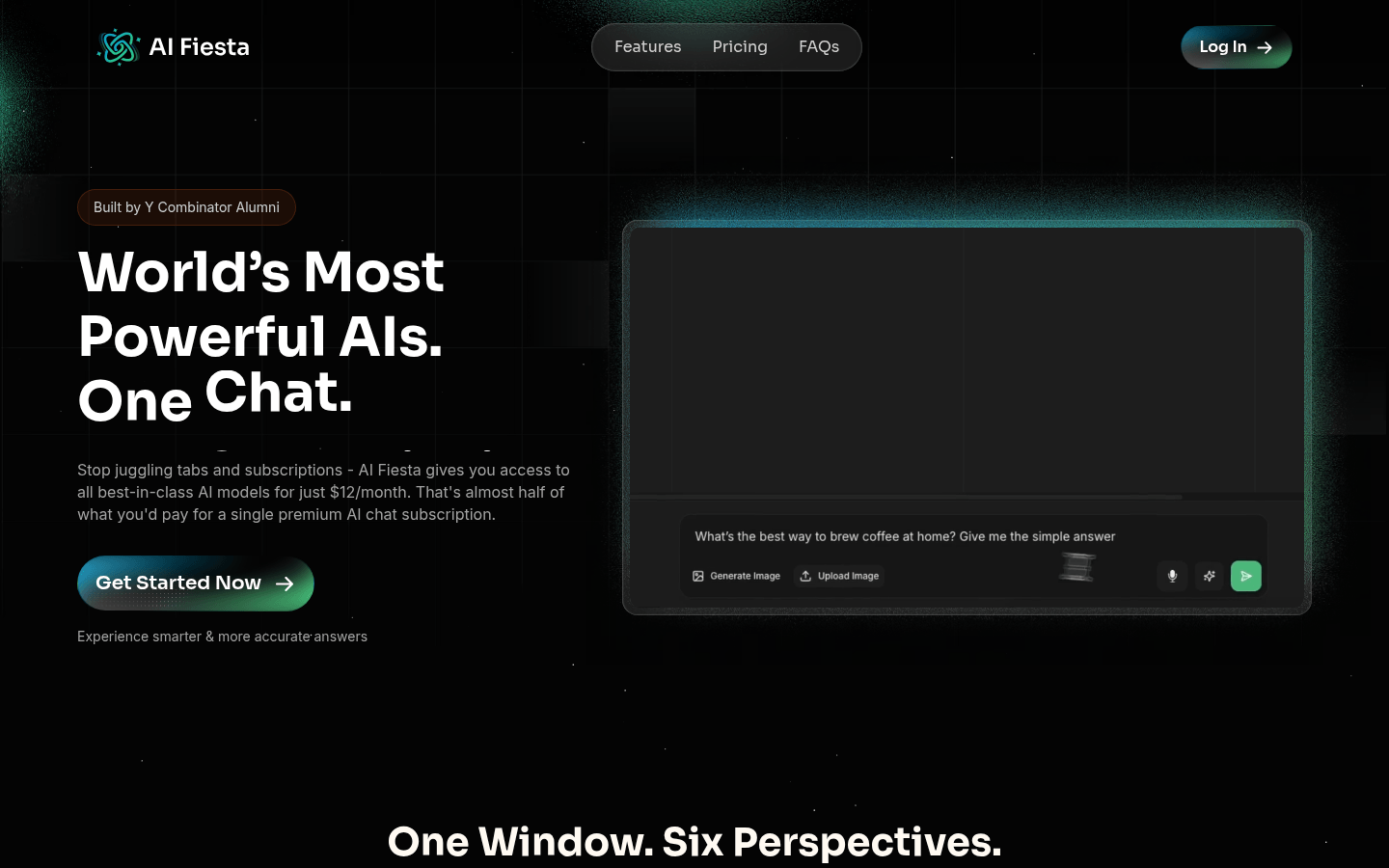

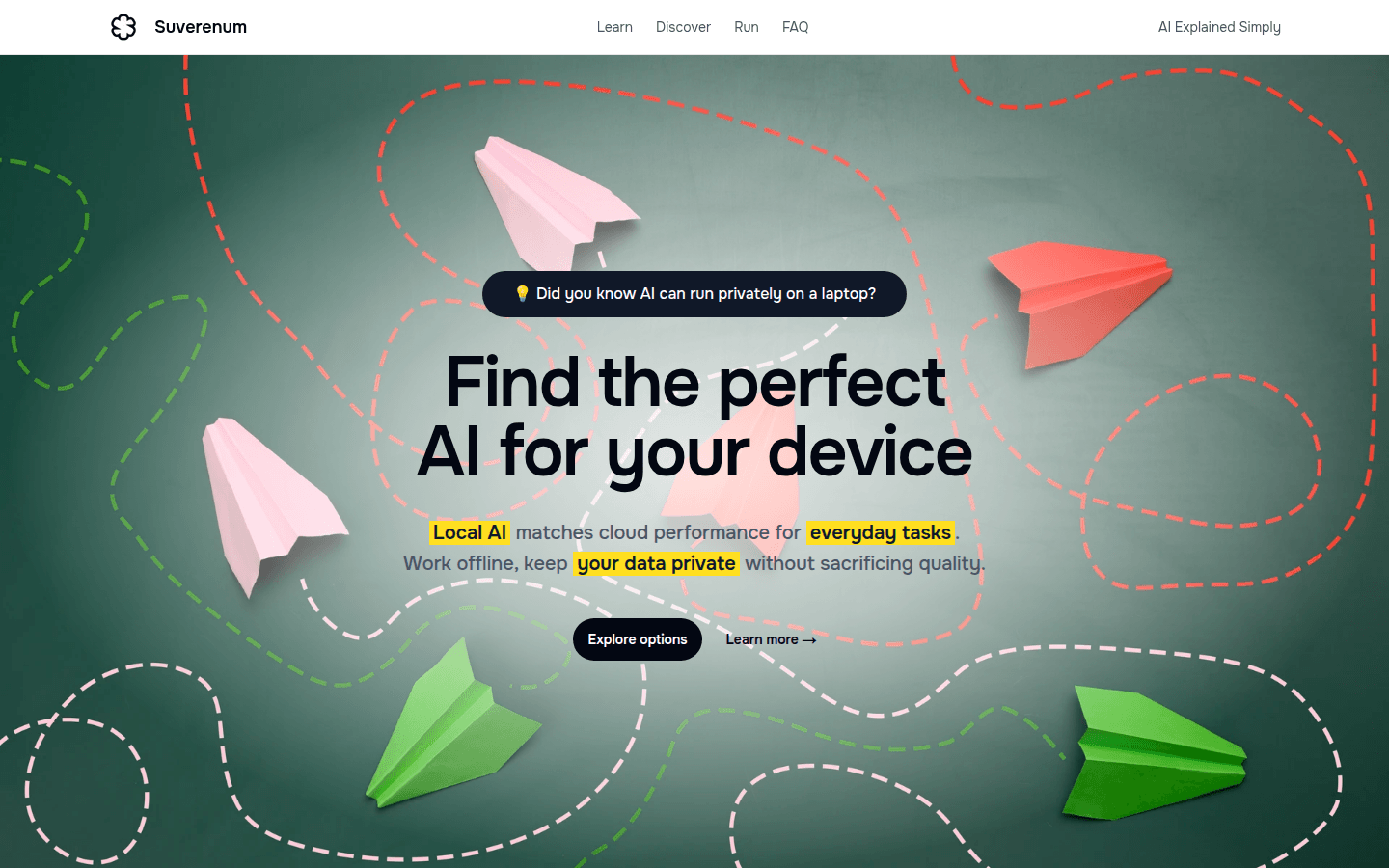

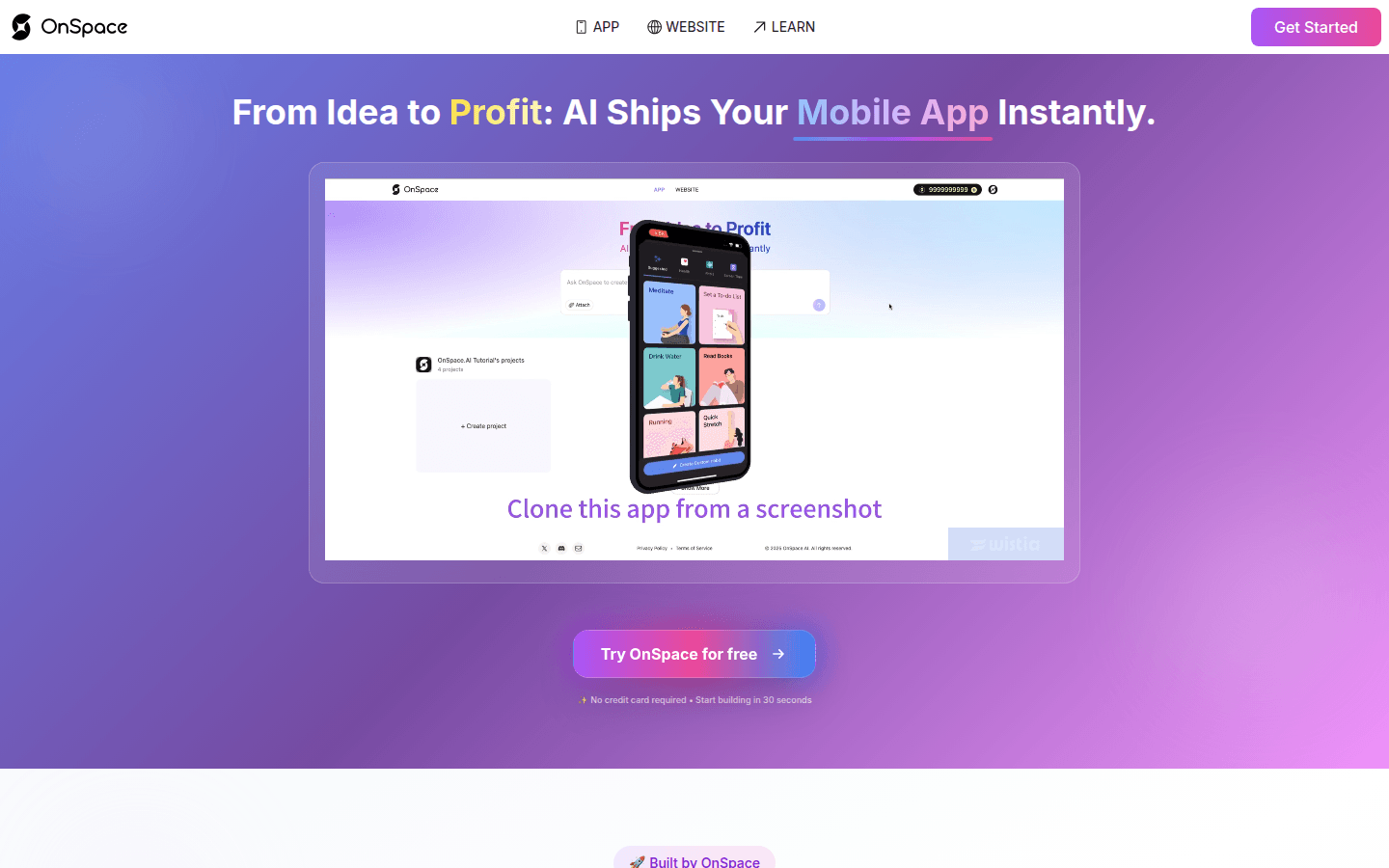

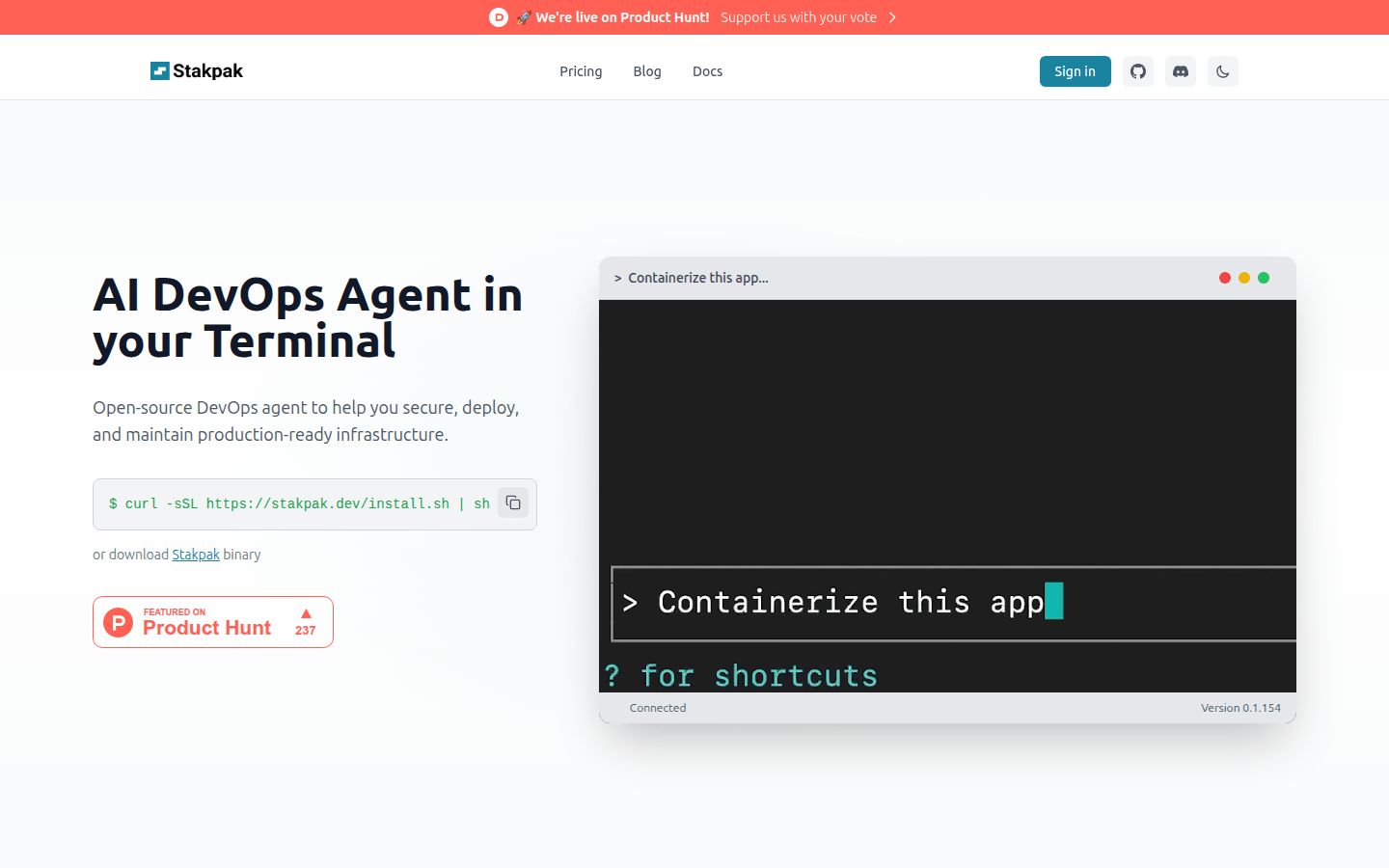

AI model

Found 100 AI tools


Primary Category: productive forces

Subcategory: AI model


Related AI Tools


Related Subcategories

Explore other subcategories under productive forces

Other Categories


Explore More productive forces Tools

AI model is a popular subcategory of productive forces, a category with 619 quality AI tools.