🖼️

image Category

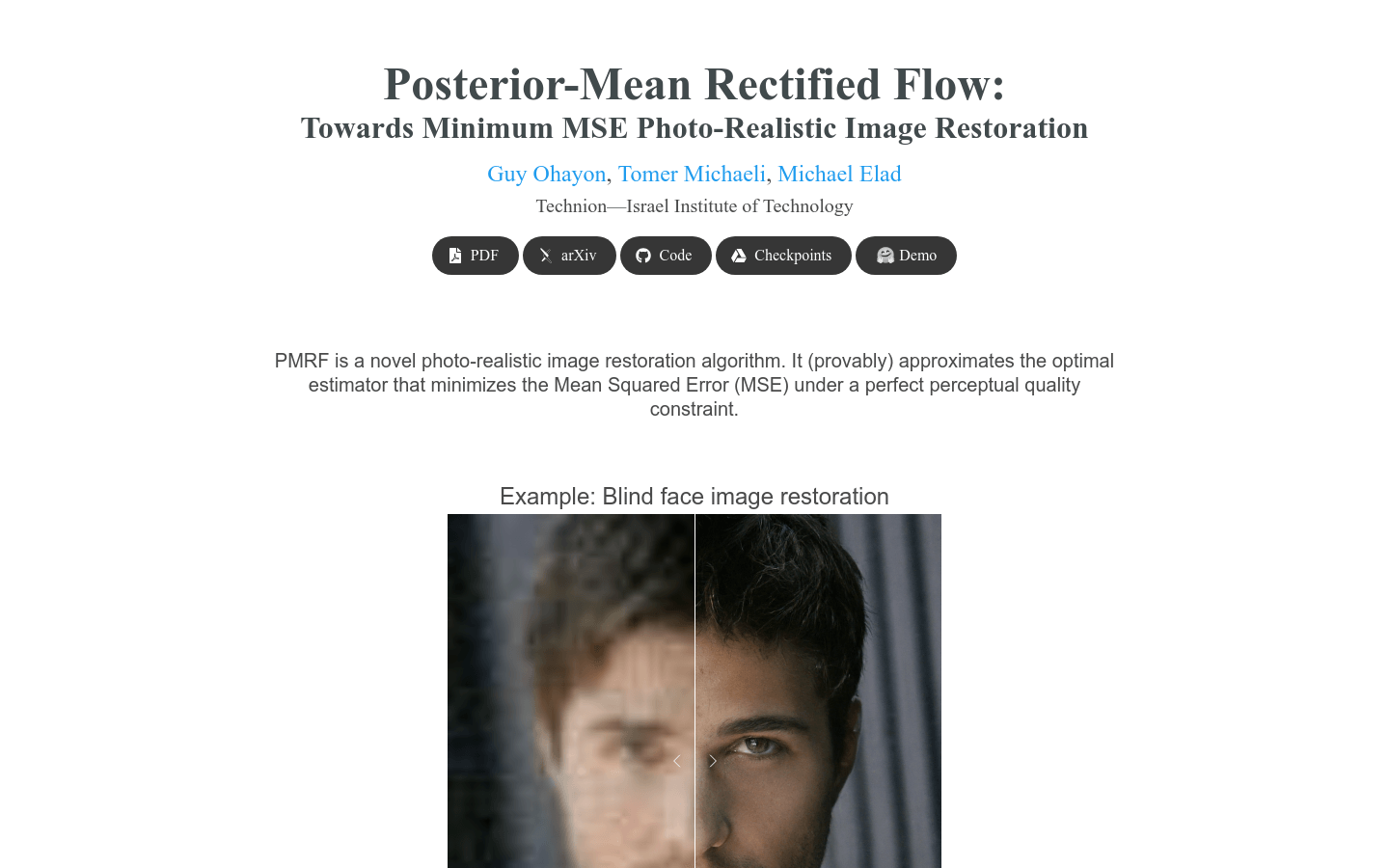

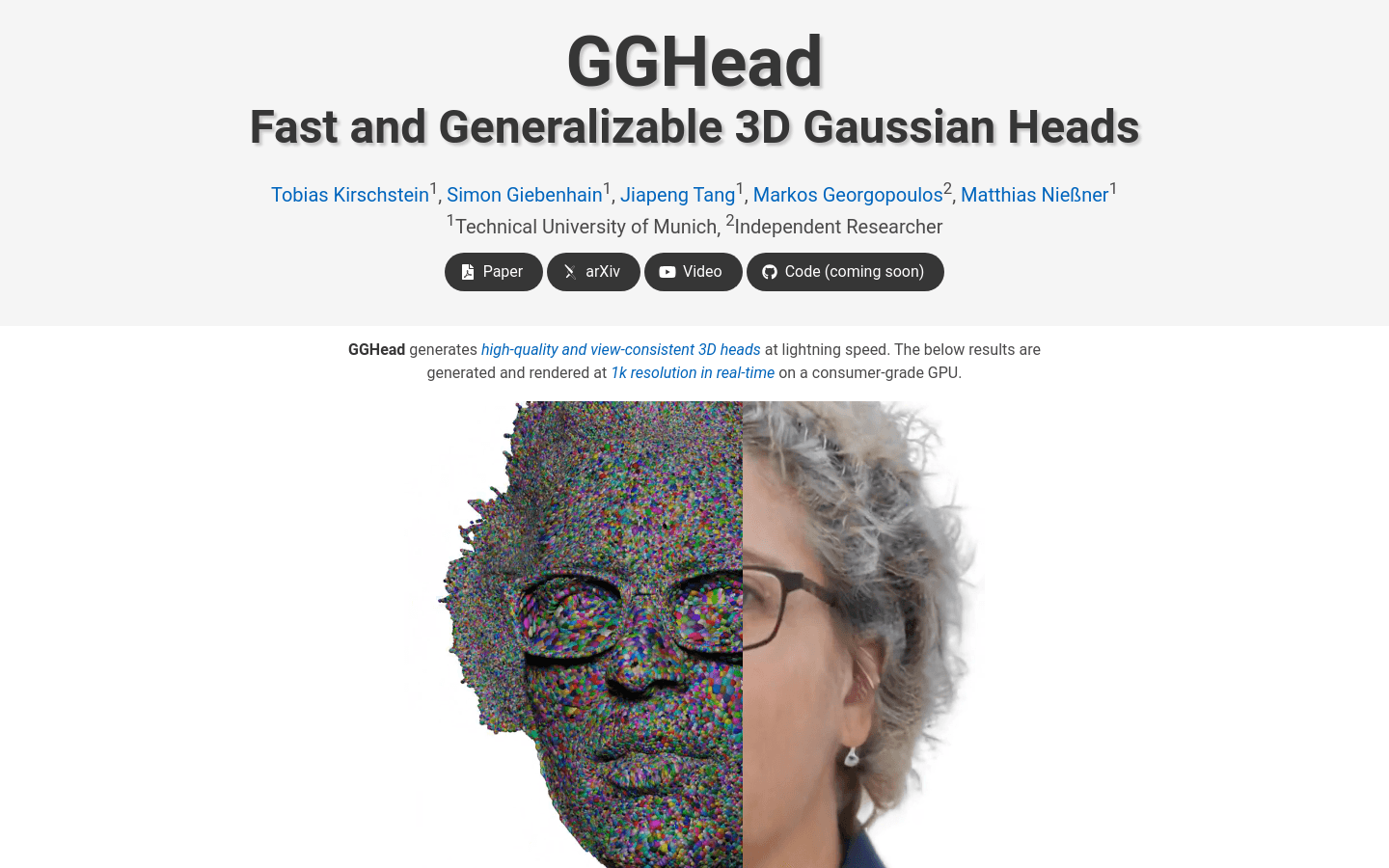

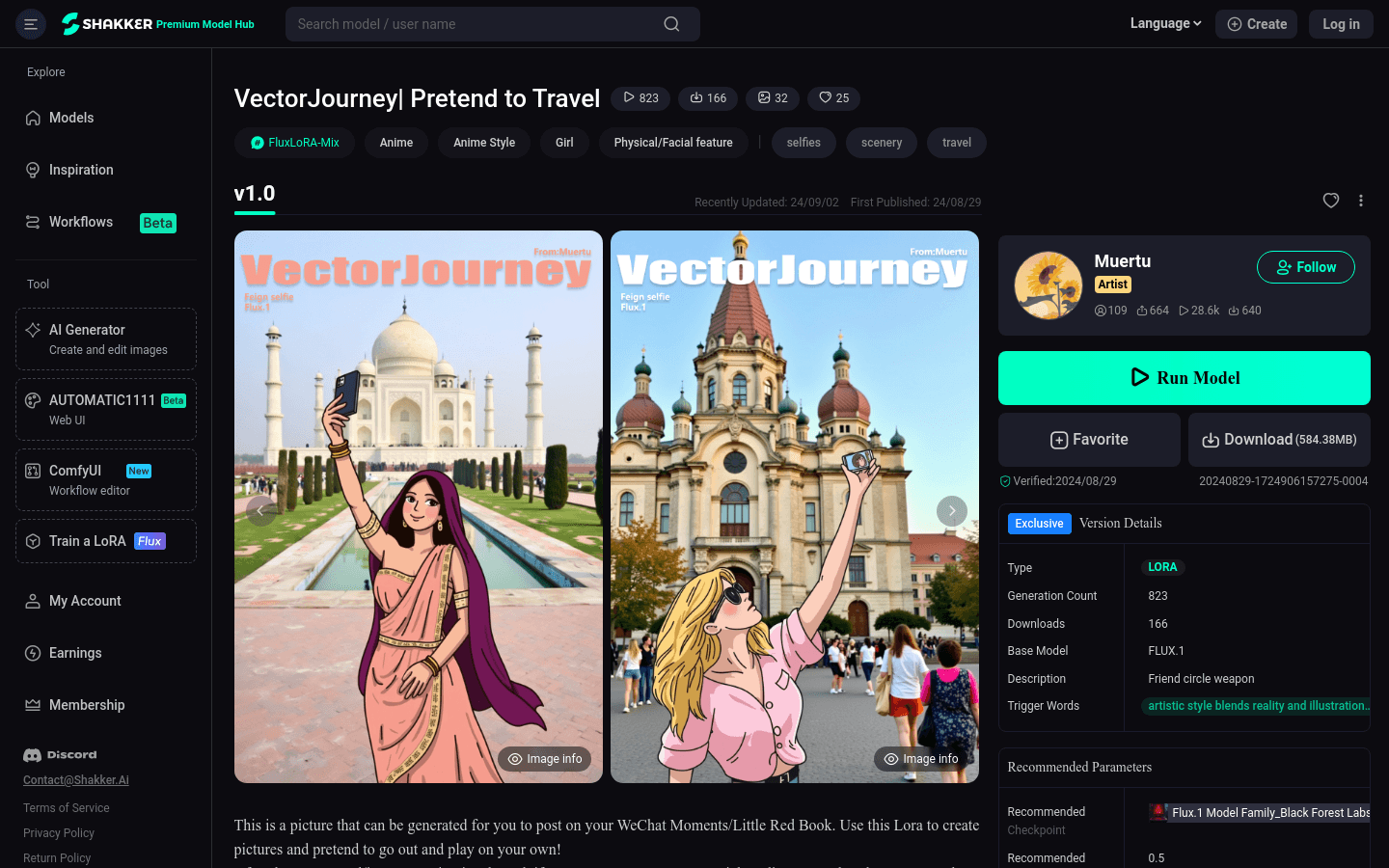

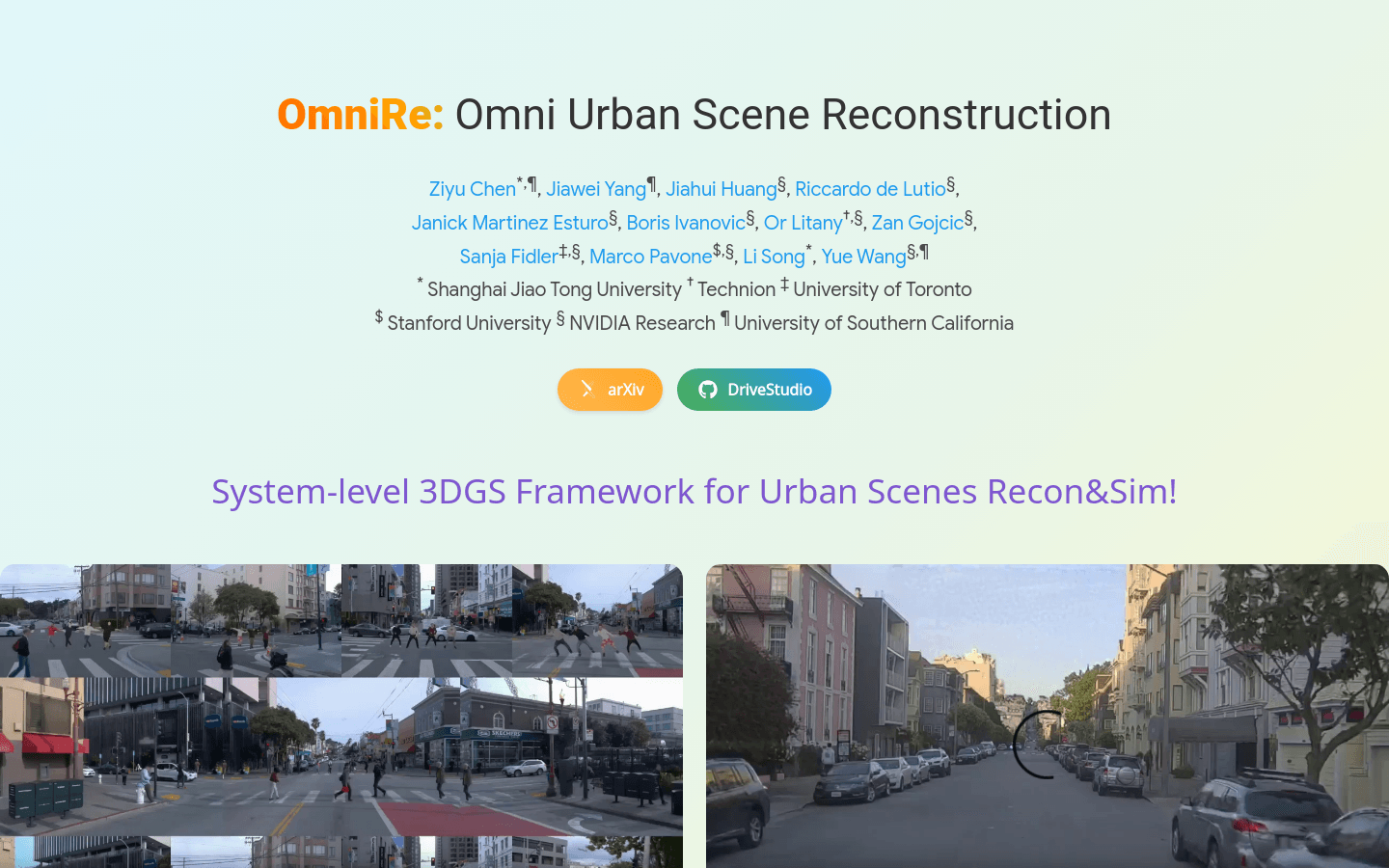

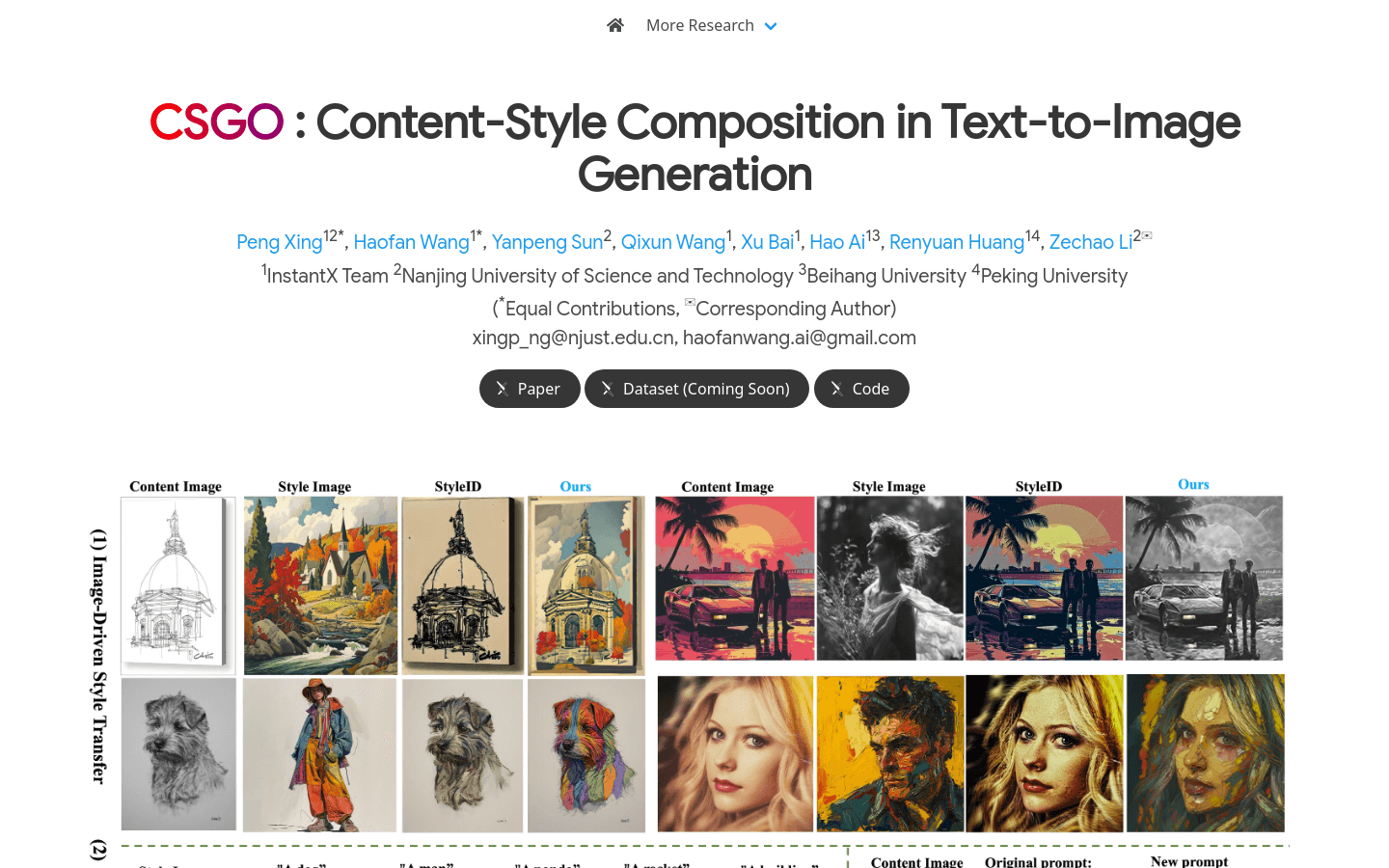

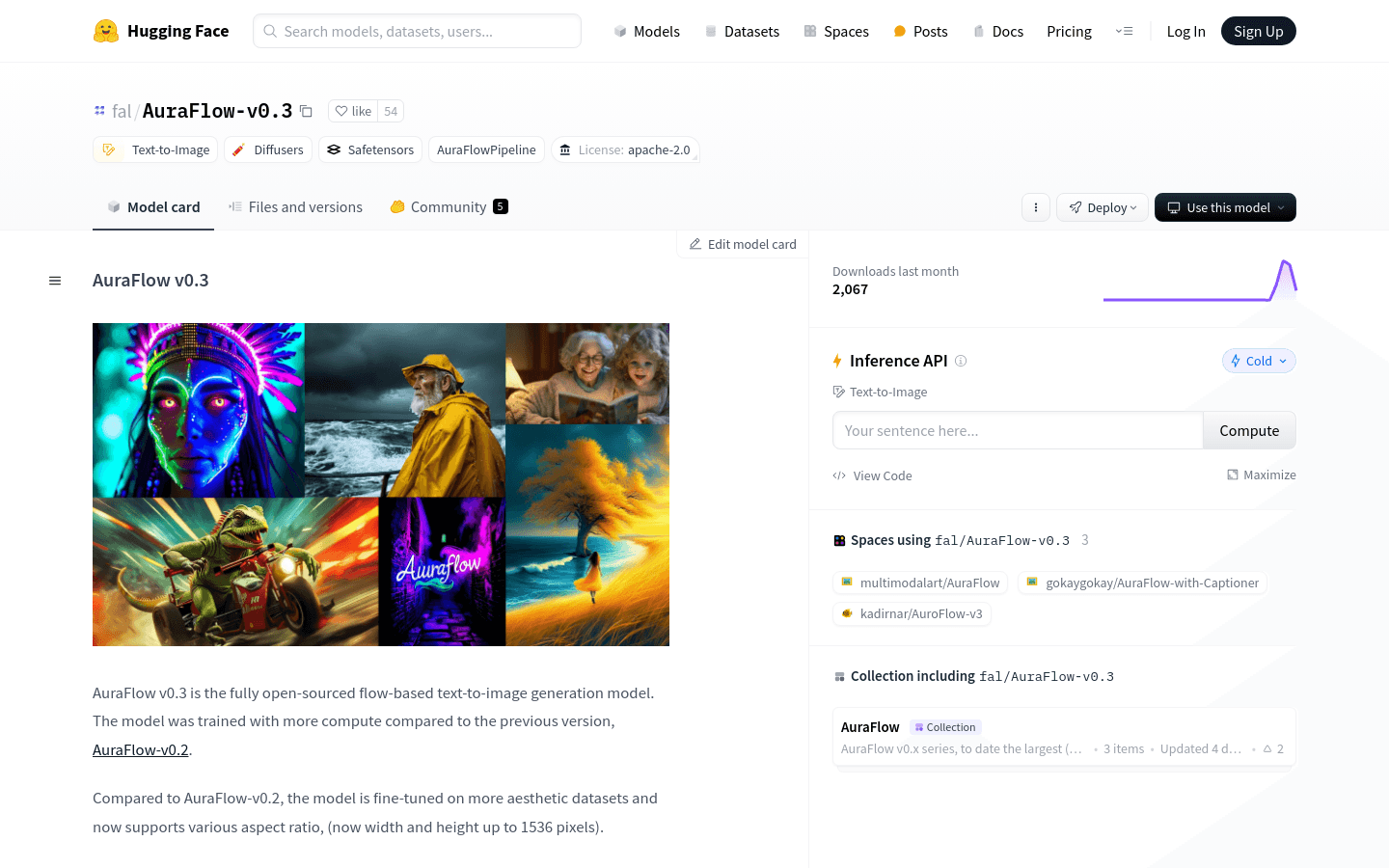

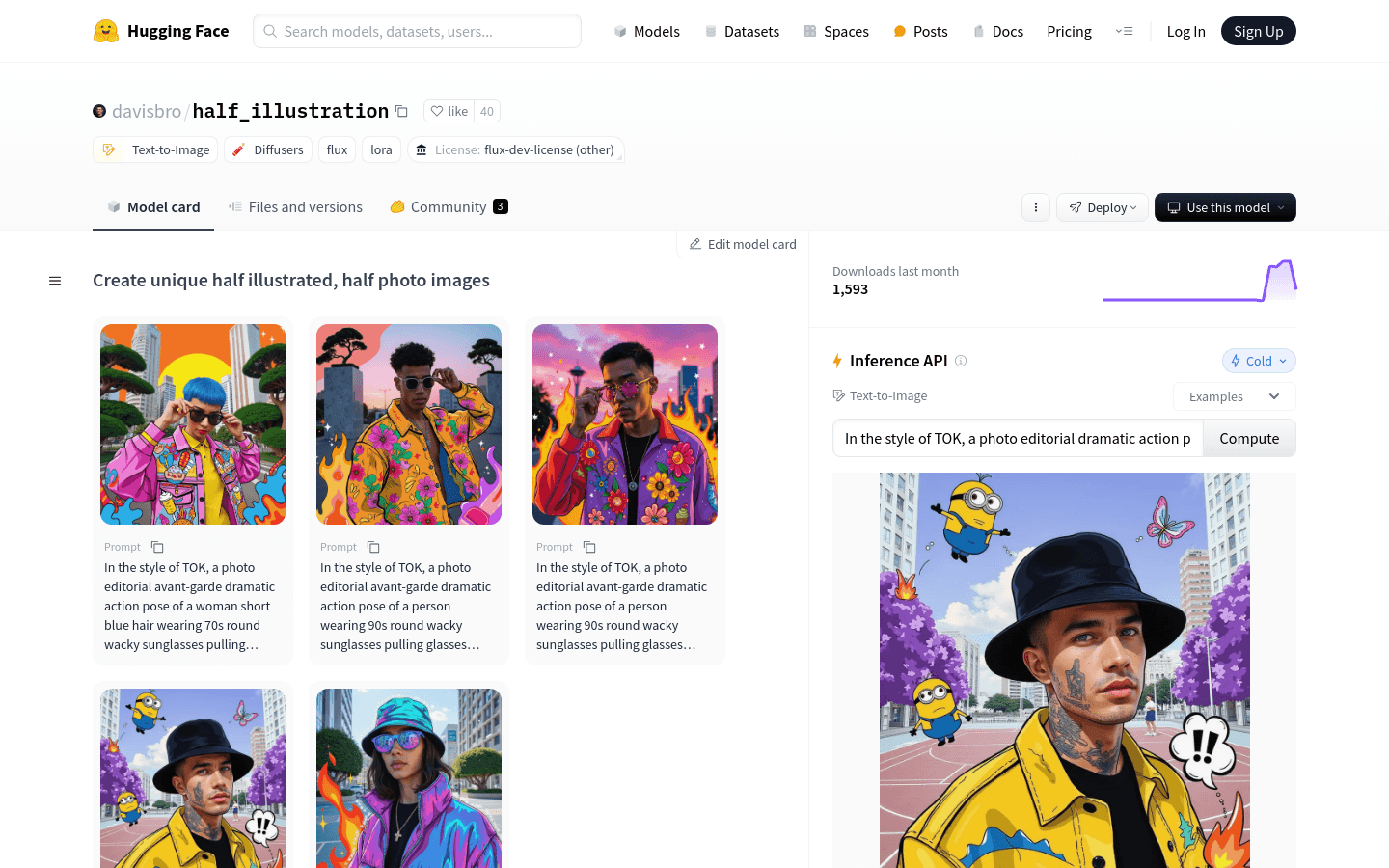

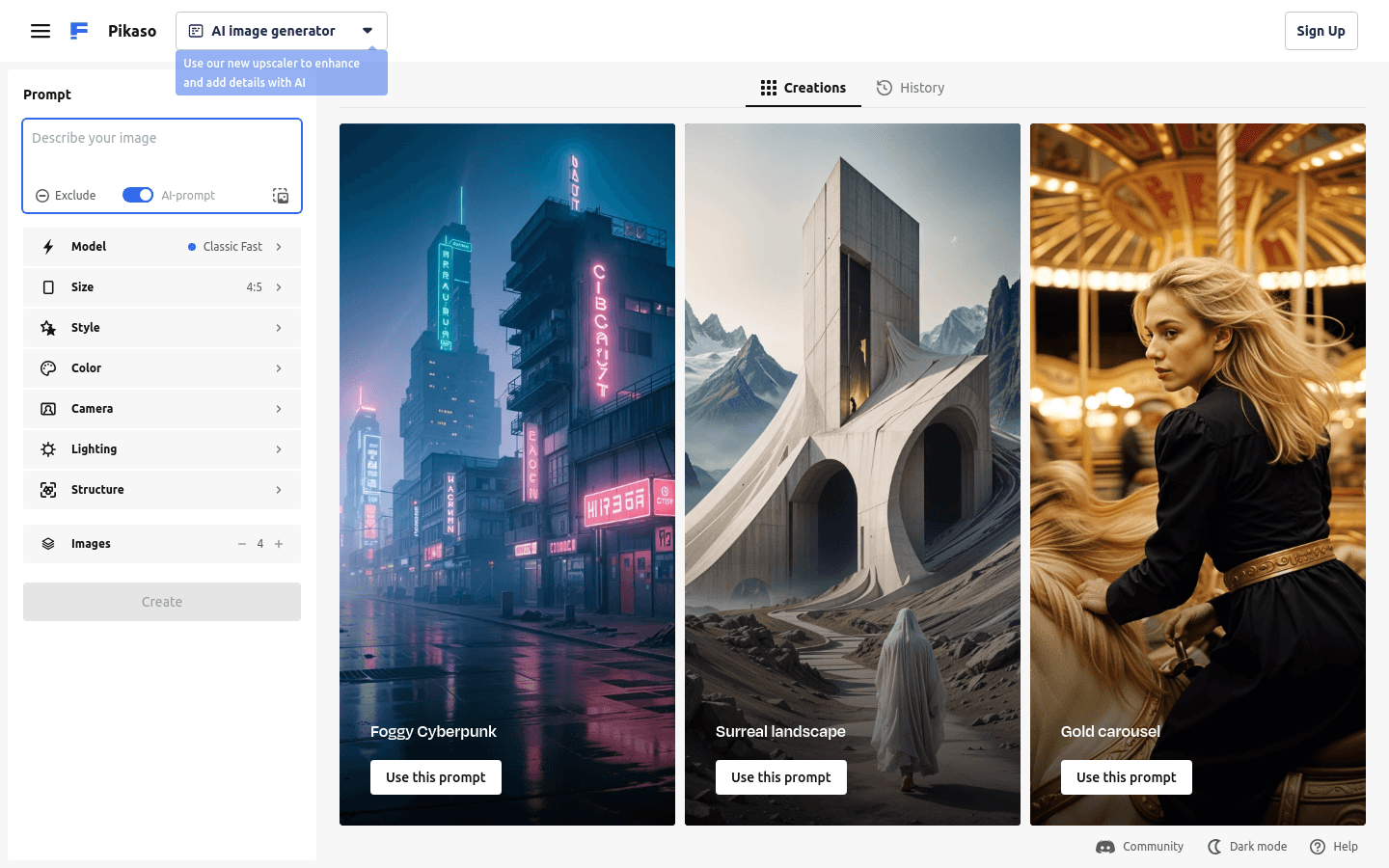

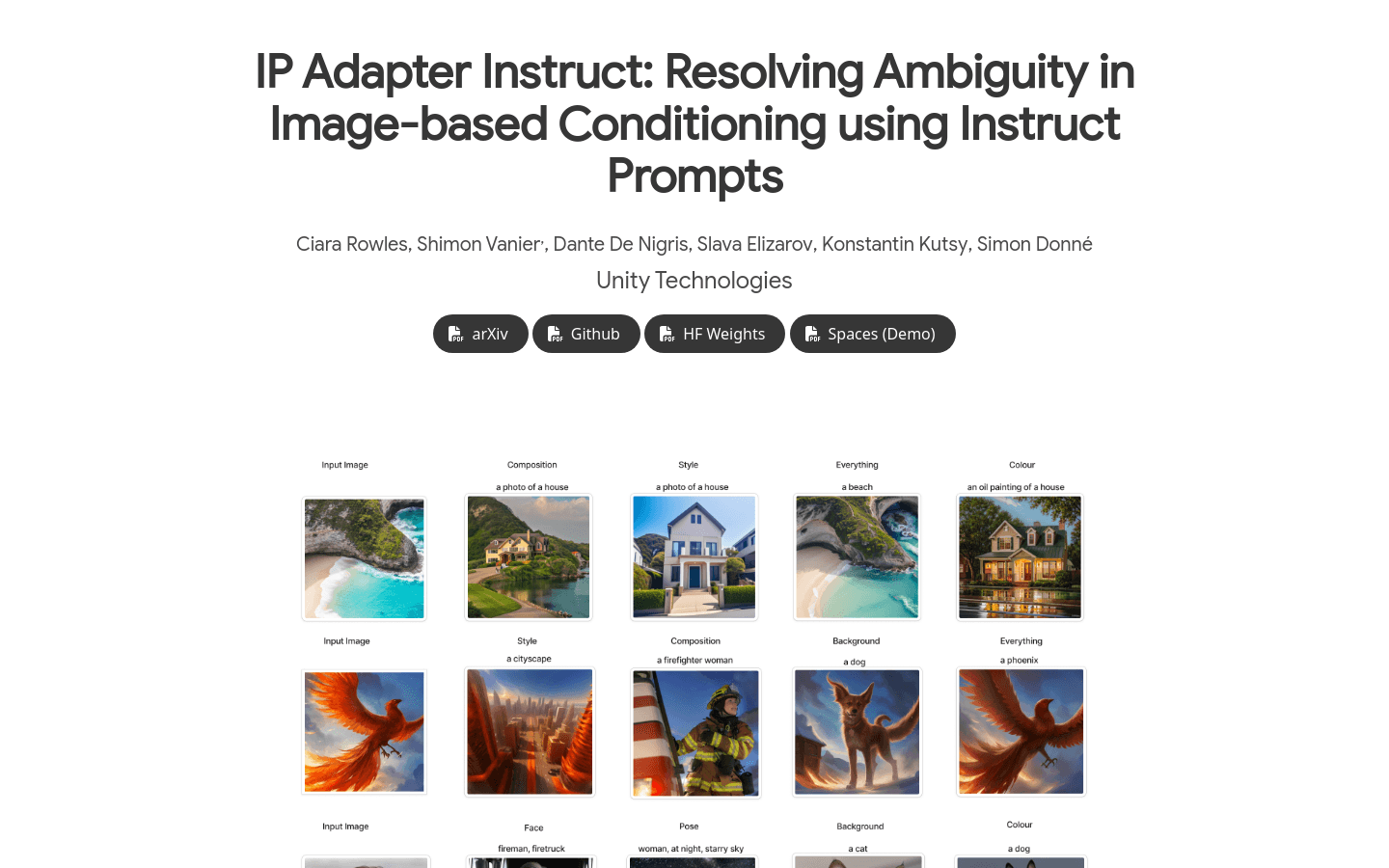

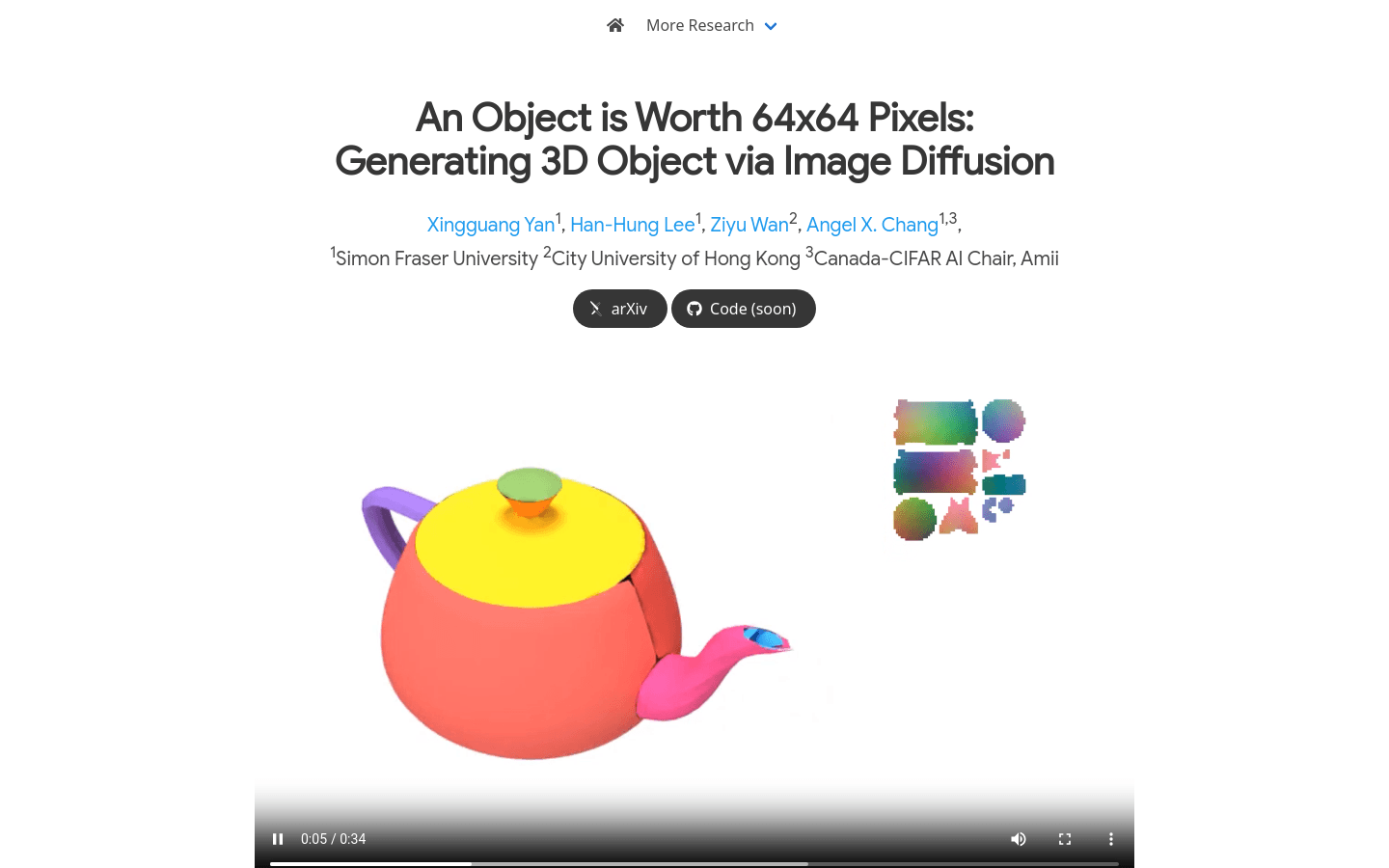

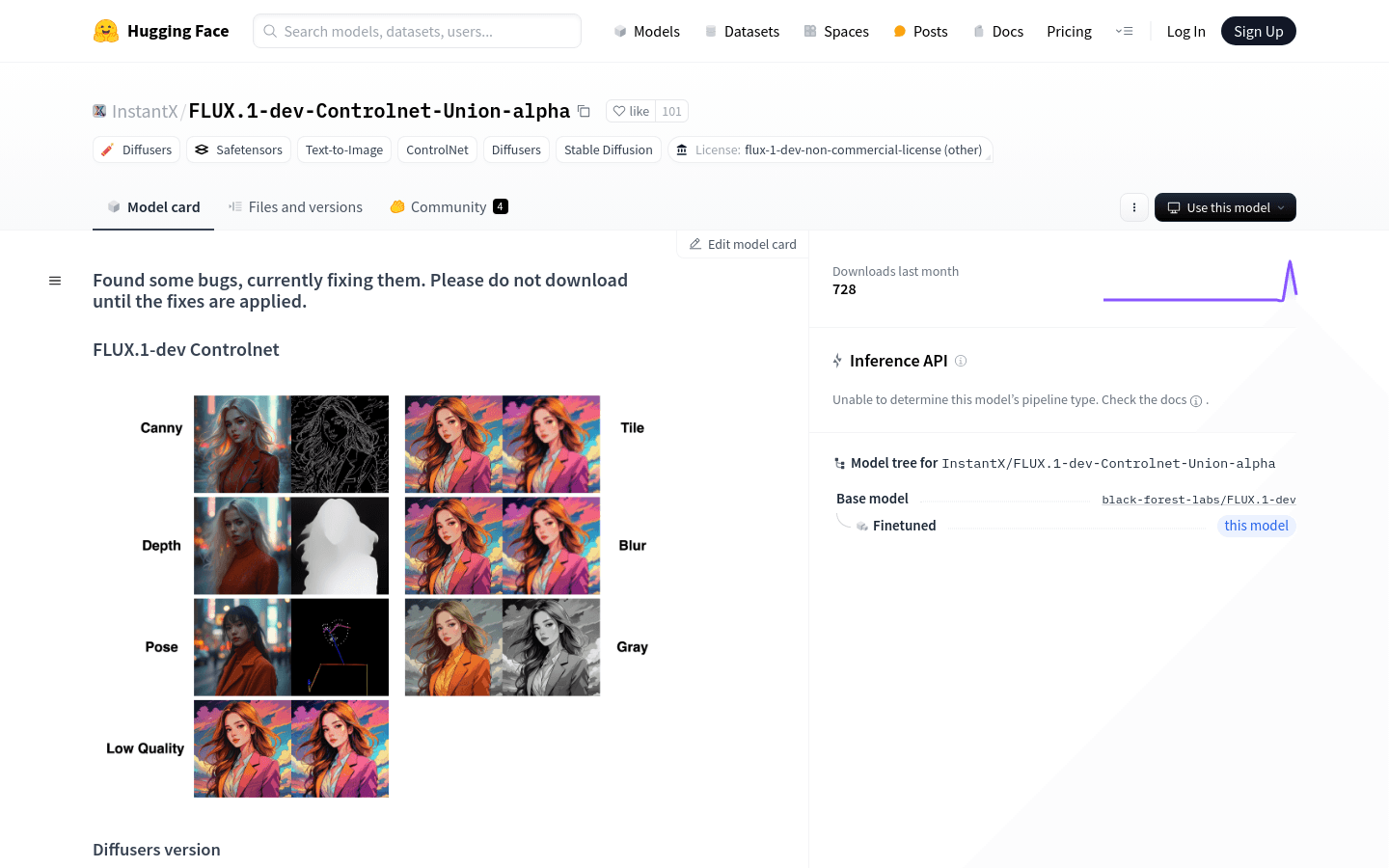

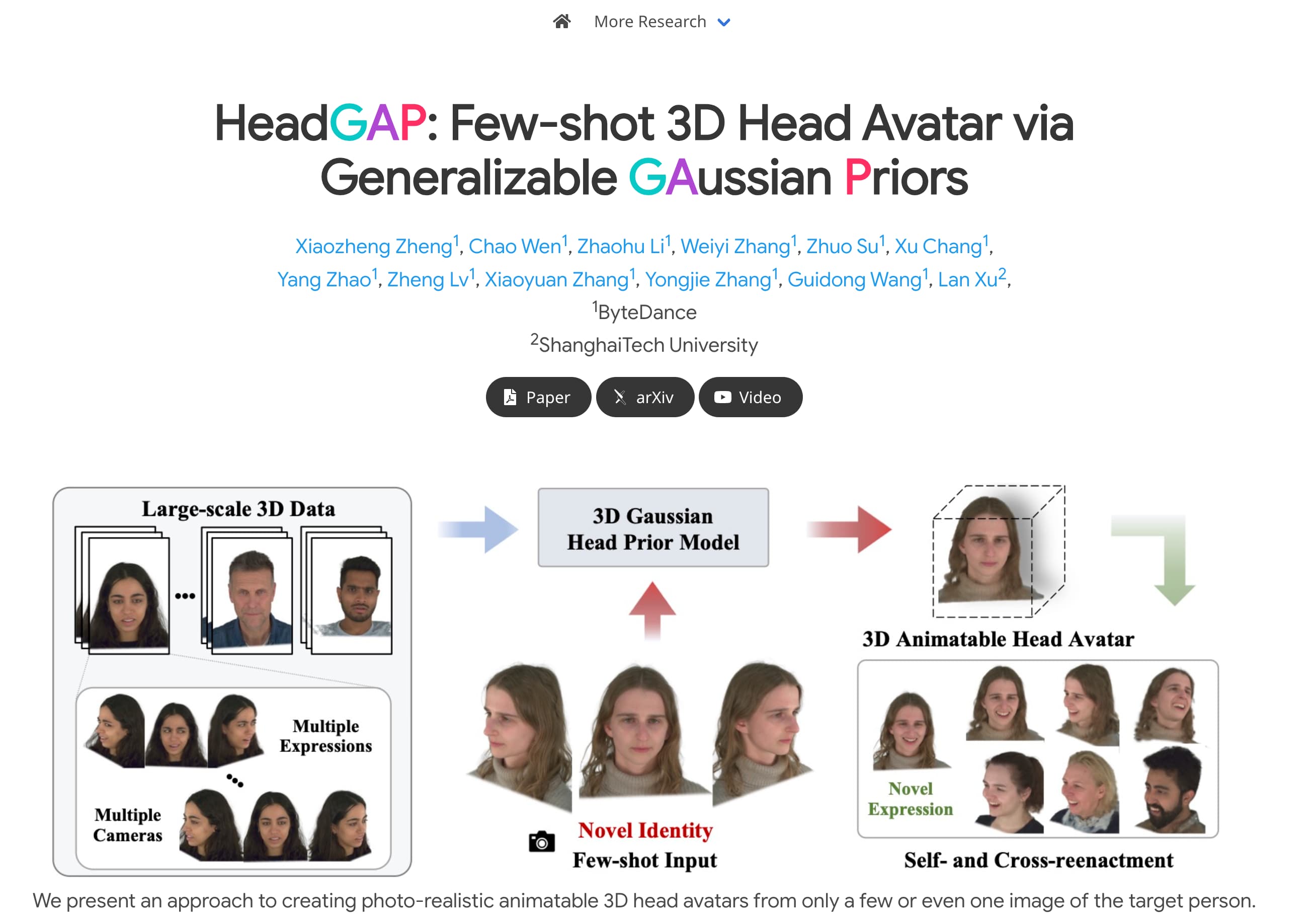

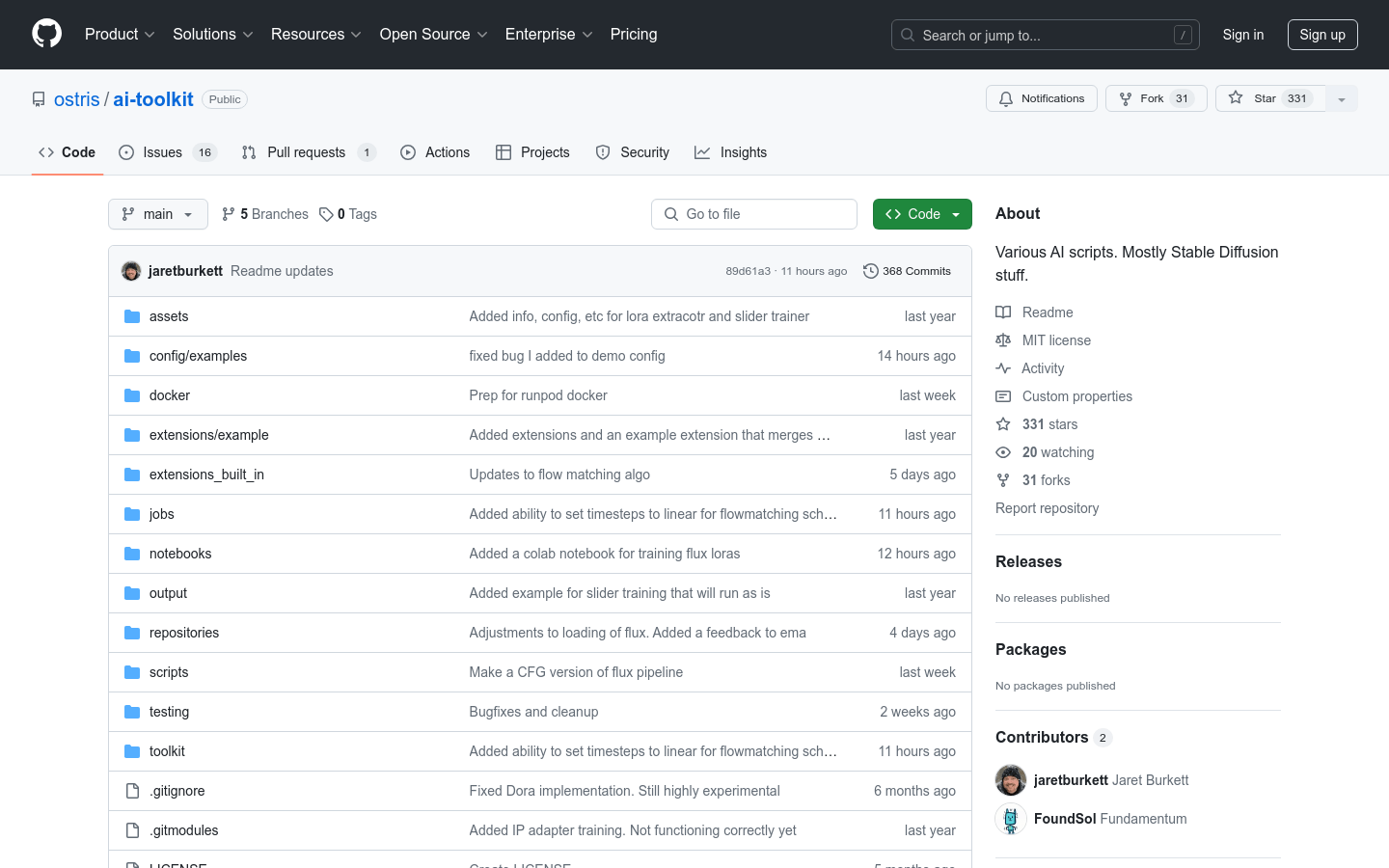

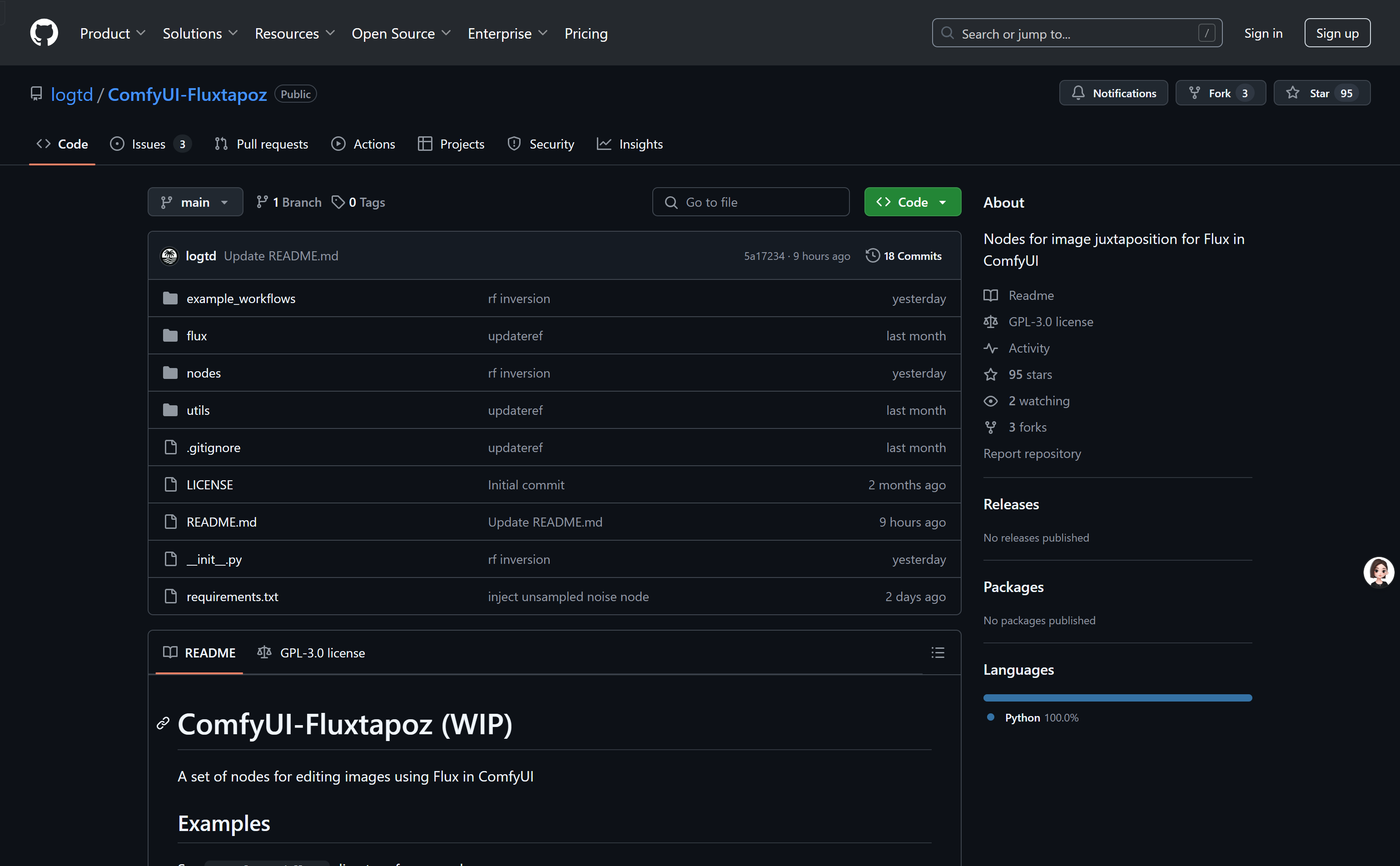

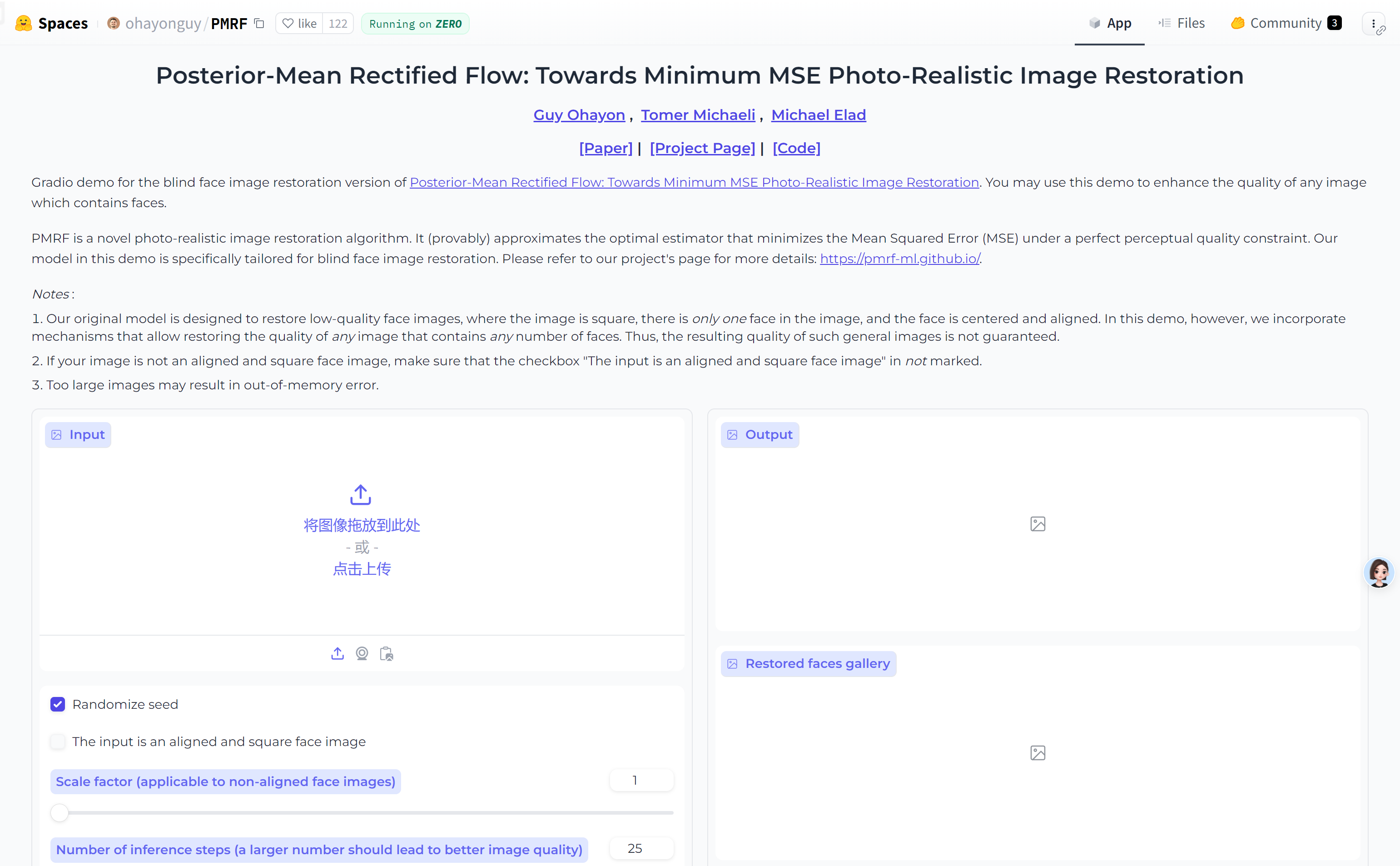

AI image generation

Found 100 AI tools

Primary Category: image

Subcategory: AI image generation

Found 100 matching tools

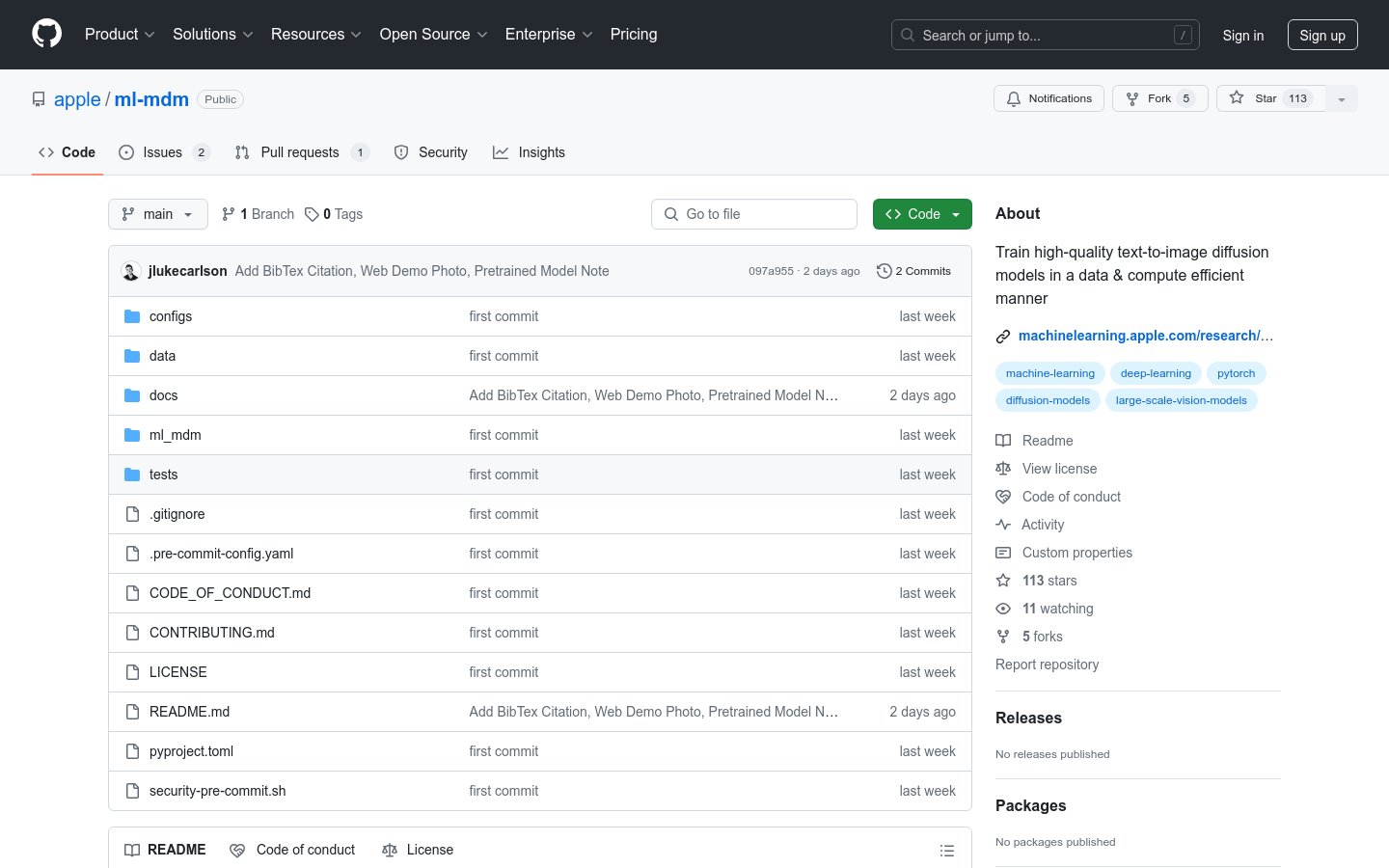

Related AI Tools

Related Subcategories

Explore other subcategories under image

Other Categories

Explore More image Tools

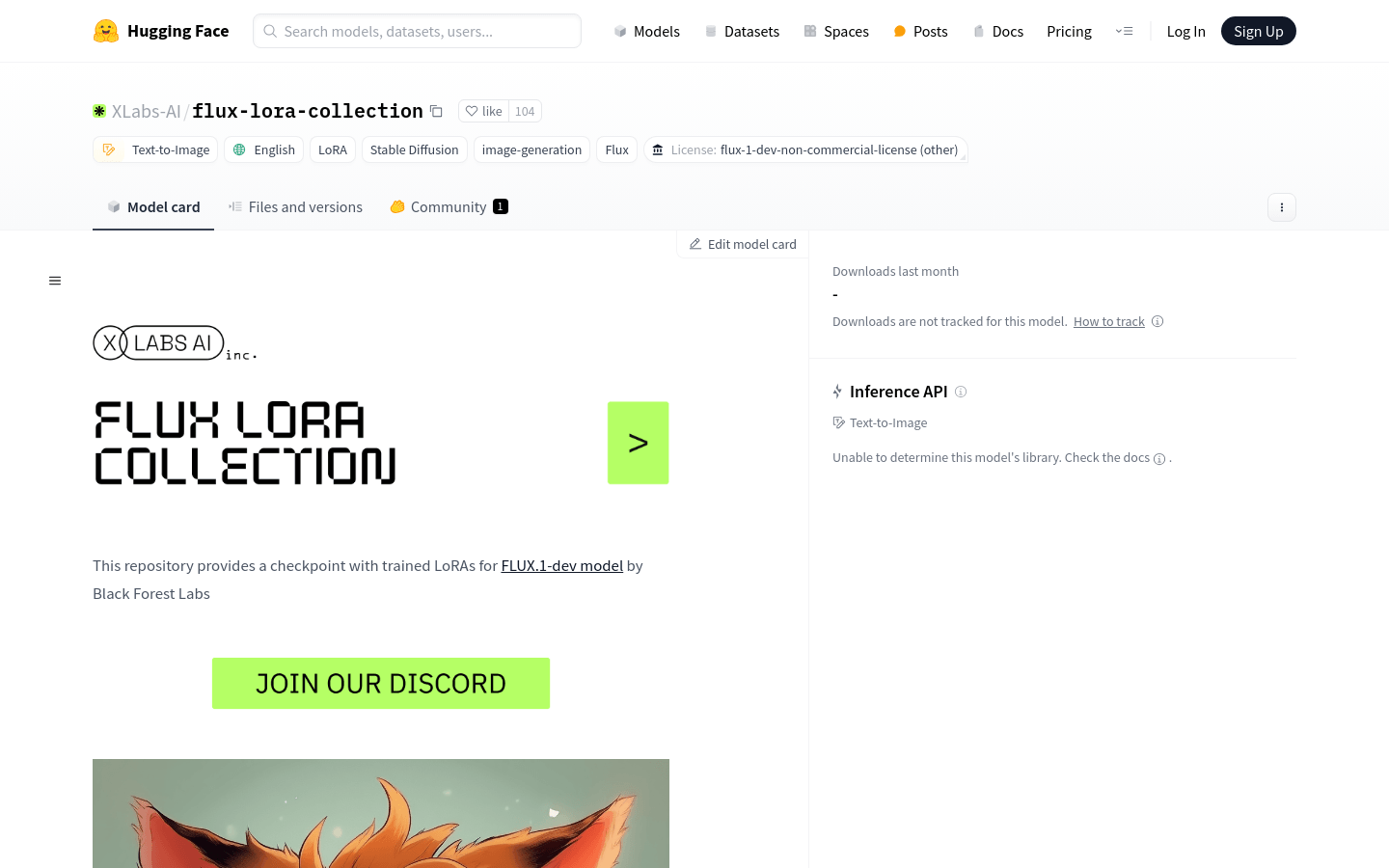

AI image generation (Hot): a popular subcategory under image, with 543 quality AI tools

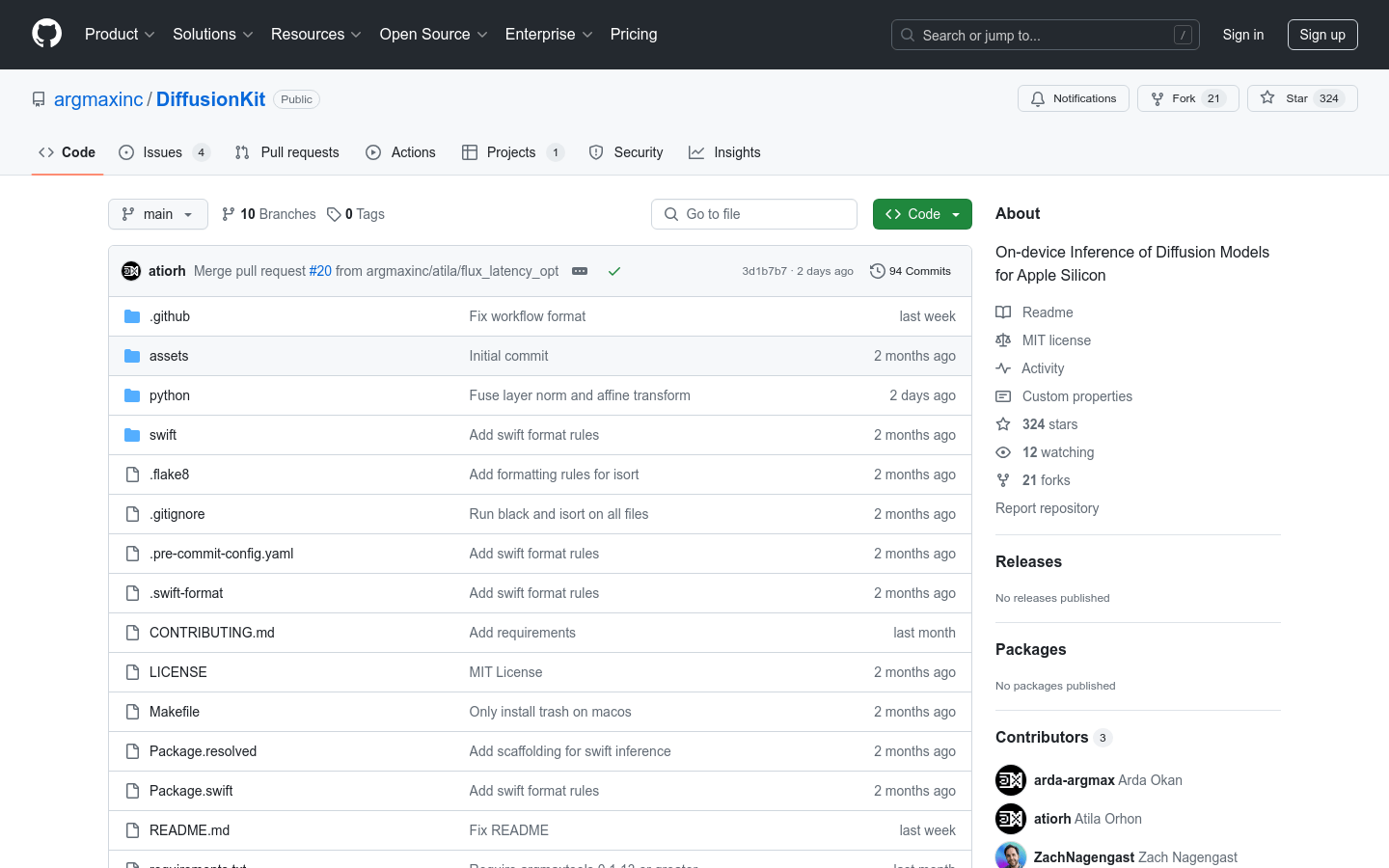

![FLUX1.1 [pro]](https://pic.chinaz.com/ai/2024/10/08/202410081033531725.jpg)