🎬

Video Category

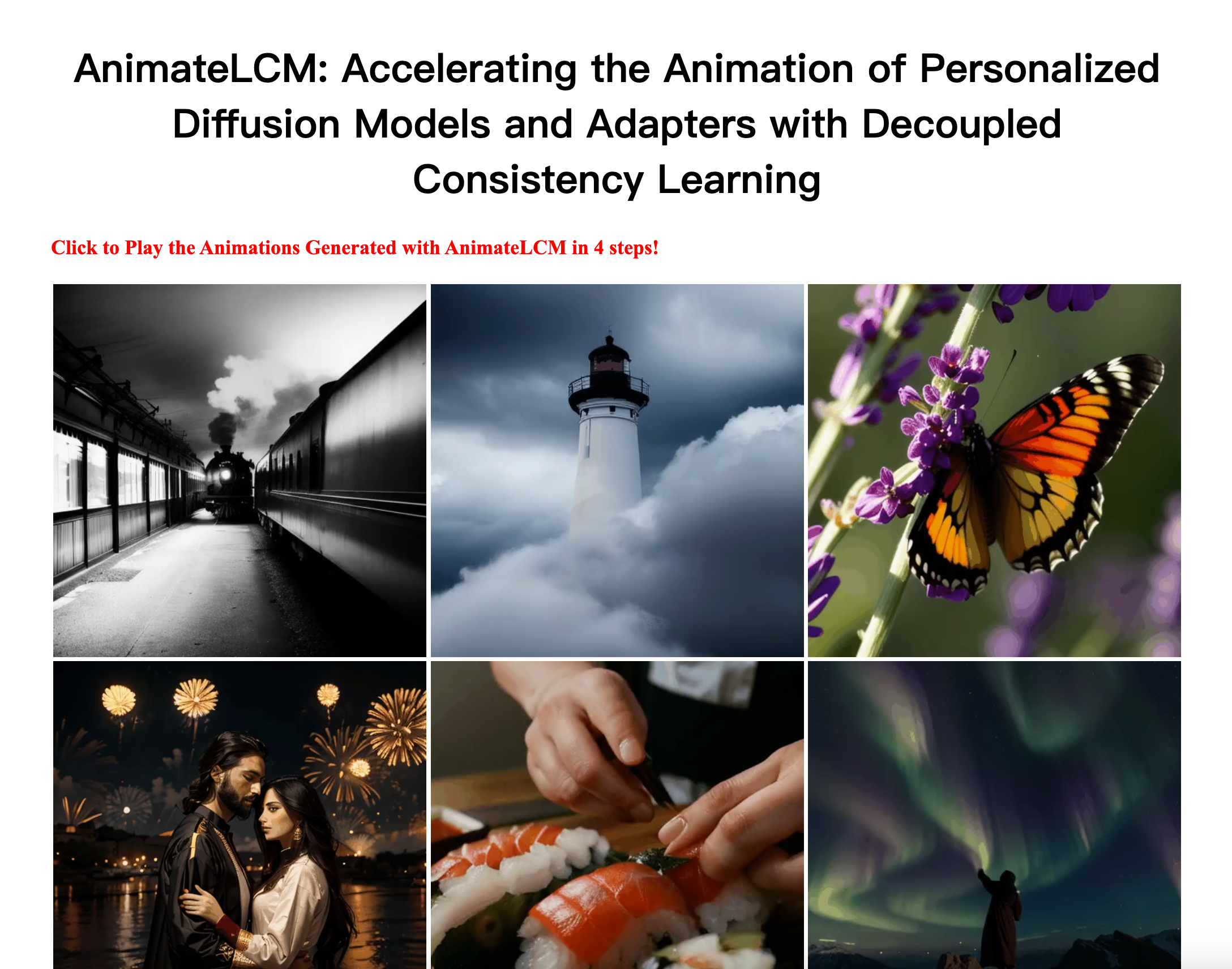

AI image generation

Found 64 AI tools


Primary Category: Video

Subcategory: AI image generation




AI image generation is a popular subcategory of the video category, with 64 AI tools listed.